抛出问题:处理互联网大量数据,用SQL数据库还是noSql数据库比较好?

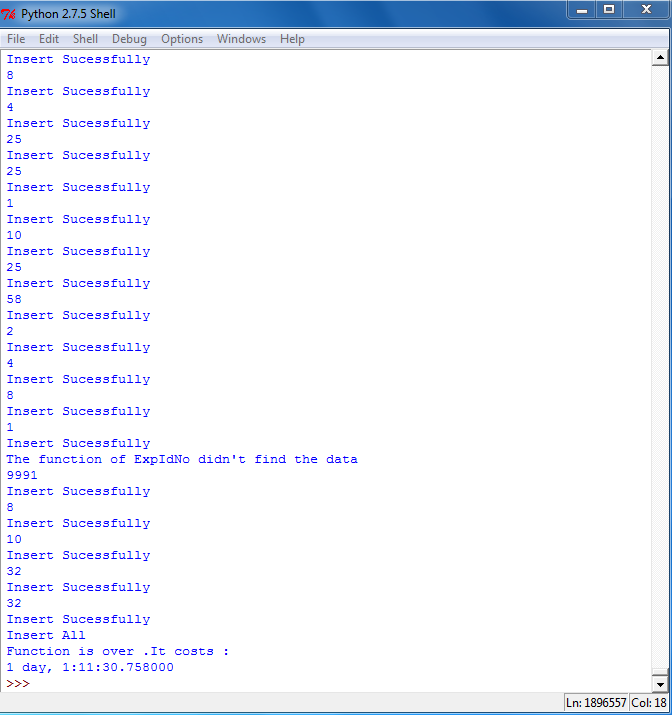

今天实习上班,看到前天下班前一小时跑的

每插入一条从一个39W的IP数据库查询属于哪个地区,再插入。总数据94W。太慢。

性能分析,找出瓶颈:

test 1: python+mysql (单条插入,不是块插入) insert 21039 条数据,耗时0:04:40.790000

join query 与一个表长39W的表join。耗时15min56.61sec

test 2: python+mysql 块插入

每次够50条就插入。

sample:

data = [ ('Jane','555-001'), ('Joe', '555-001'), ('John', '555-003') ] stmt = "INSERT INTO employees (name, phone) VALUES (%s,%s)" 注意:%s 不用写成“%s”,没有双引号,和execute()中不一致。 cursor.executemany(stmt, data)

sample code:

#encoding:utf-8 ''' Created on 2013-1-27 @author: JinHanJiang ''' ''' create table CREATE TABLE `Passwords` ( `id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT 'id主键', `pass` varchar(64) NOT NULL COMMENT '密码', `md5` varchar(32) DEFAULT NULL COMMENT '32位md5值', PRIMARY KEY (`id`), UNIQUE KEY `pass` (`pass`) ) ENGINE=MyISAM DEFAULT CHARSET=utf8 COMMENT='密码' ''' import os import re import time from datetime import datetime import hashlib import mysql.connector import random root_path = os.path.abspath('./') + os.sep f = open(root_path + 'file/f1.txt', 'r') fields = ['pass', 'md5'] def writeDB(params): conn = cur = None try: fields = '(`' + '`, `'.join(params['fields']) + '`)' stmt = "INSERT IGNORE INTO Passwords"+fields+" VALUES (%s,%s)" conn = mysql.connector.connect(host='127.0.0.1', database='password', user='root', password='admin') cur = conn.cursor() cur.executemany(stmt, params['datas']) except mysql.connector.Error as e: print e finally: if cur: cur.close() if conn: conn.commit() #如果数据库表类型是Innodb记的带个参数 conn.close() pos = 0 step = buff = 1024 * 1024 last = '' dstart = datetime.now() print "Program Start At: " + dstart.strftime('%Y-%m-%d %H:%M:%S') while 1: f.seek(pos) line = f.read(buff) #从文件中读取一段内容 datas = [] if not line: if '' is not last: data = (last, hashlib.md5(last).hexdigest().upper()) datas.append(data) params = {'fields': fields, 'datas': datas} writeDB(params) break; #如果内容为空跳出循环 pos += step #计算取下一段内容长度 lines = re.split(" ", line) #以回车( )分隔内容到数组中 lines[0] = str(last) + str(lines[0]) last = lines.pop() #将数组最后一条数据剔除,并存到last变量中,到下次循环再处理 for lin in lines: lin = lin.rstrip() #去除内容末尾的回车字符 if not lin: continue data = (lin, hashlib.md5(lin).hexdigest().upper()) datas.append(data) #封装内容 if len(datas) > 0: params = {'fields': fields, 'datas': datas} writeDB(params) time.sleep(random.random()) #让Cpu随机休息0 <= n < 1.0 s f.close() dend = datetime.now() print "Program End At:%s Time span %s"%(dend.strftime('%Y-%m-%d %H:%M:%S'), dend - dstart);

TEST 3 . 块插入,每条都和IP_data 交互查询(对ip_data表的IPSTART 和IPEND两列建立INDEX)。21000条数据JOIN 39W,耗时21min

TEST 4 . 块插入,每条都和IP_data 交互查询(对ip_data表的IPSTART 建立INDEX),不用between .用IP>IPSTART ORDER BY IPSTART DESC LIMIT 1。21000条数据JOIN 39W,耗时0:00:21.294000