  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://ns1/hbase</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>hadoop-2:2181,hadoop-3:2181,hadoop-5:2181</value>
  </property>
</configuration>
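The hbase.zookeeper.quorum value above lists host:port pairs, whereas the Spark code later in this post passes bare host names plus a separate hbase.zookeeper.property.clientPort of 2181. As a rough sketch, the hypothetical helper parseQuorum below (plain Scala, not HBase's own parser) normalizes both forms to (host, port) pairs, assuming 2181 as the fallback port. It also encodes ZooKeeper's majority rule, which is why a three-node ensemble such as hadoop-2, hadoop-3 and hadoop-5 keeps working with one node down, and why a fourth node would not raise that tolerance.

```scala
// Hypothetical helper (not HBase code): turn a quorum string into (host, port)
// pairs, using defaultPort for entries written without a ":port" suffix.
def parseQuorum(quorum: String, defaultPort: Int = 2181): List[(String, Int)] =
  quorum.split(",").toList.map(_.trim).filter(_.nonEmpty).map { entry =>
    entry.split(":") match {
      case Array(host, port) => (host, port.toInt)
      case Array(host)       => (host, defaultPort)
      case _                 => throw new IllegalArgumentException(s"bad quorum entry: $entry")
    }
  }

// ZooKeeper serves requests only while a strict majority of the ensemble is up
def majority(ensembleSize: Int): Int = ensembleSize / 2 + 1
def toleratedFailures(ensembleSize: Int): Int = ensembleSize - majority(ensembleSize)

val fromSiteXml = parseQuorum("hadoop-2:2181,hadoop-3:2181,hadoop-5:2181")
val fromSparkCode = parseQuorum("hadoop-2,hadoop-3,hadoop-5")

println(fromSiteXml)                          // List((hadoop-2,2181), (hadoop-3,2181), (hadoop-5,2181))
println(fromSiteXml == fromSparkCode)         // true
println(toleratedFailures(fromSiteXml.size))  // 1
```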

Edit hbase-env.sh to disable the bundled ZooKeeper (the external ensemble configured above is used instead) and set JAVA_HOME:

export HBASE_MANAGES_ZK=false
export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk

Edit regionservers (one hostname per line):

vi regionservers

hadoop-3
hadoop-1
hadoop-4
hadoop-5

Create backup-masters, listing the standby HMaster nodes:

vi backup-masters

hadoop-1
hadoop-4
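Taken together, the files above spread the HBase daemons over five hosts. start-hbase.sh launches the active HMaster on the node where it is run (hadoop-2 in the startup step below), backup HMasters on the hosts in backup-masters and HRegionServers on the hosts in regionservers, while ZooKeeper runs separately because HBASE_MANAGES_ZK=false. The plain-Scala sketch below only derives a per-host role table from those lists; the role labels are illustrative, and it assumes the lists shown here describe the whole cluster.

```scala
// Host lists copied from the configuration in this post (assumed complete)
val activeMaster  = "hadoop-2"                                           // node that runs start-hbase.sh
val backupMasters = List("hadoop-1", "hadoop-4")                         // conf/backup-masters
val regionServers = List("hadoop-3", "hadoop-1", "hadoop-4", "hadoop-5") // conf/regionservers
val zkQuorum      = List("hadoop-2", "hadoop-3", "hadoop-5")             // hbase.zookeeper.quorum, managed outside HBase

// Pair every host with each role it plays, then group the roles by host
val roles: Map[String, Set[String]] =
  (List(activeMaster -> "HMaster (active)") ++
    backupMasters.map(h => h -> "HMaster (backup)") ++
    regionServers.map(h => h -> "HRegionServer") ++
    zkQuorum.map(h => h -> "ZooKeeper"))
    .groupBy { case (host, _) => host }
    .map { case (host, pairs) => host -> pairs.map(_._2).toSet }

// Print one line per host, sorted by host name
roles.toList.sortBy(_._1).foreach { case (host, hostRoles) =>
  println(s"$host: ${hostRoles.toList.sorted.mkString(", ")}")
}
```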

Copy hdfs-site.xml and core-site.xml from $HADOOP_HOME/etc/hadoop into /usr/local/hbase-1.2.4/conf, so that HBase can resolve the HDFS nameservice ns1 used in hbase.rootdir.

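Start

On the hadoop-2 node, run the following. It starts the HMaster on hadoop-2, the backup masters listed in backup-masters and the region servers listed in regionservers:

start-hbase.sh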

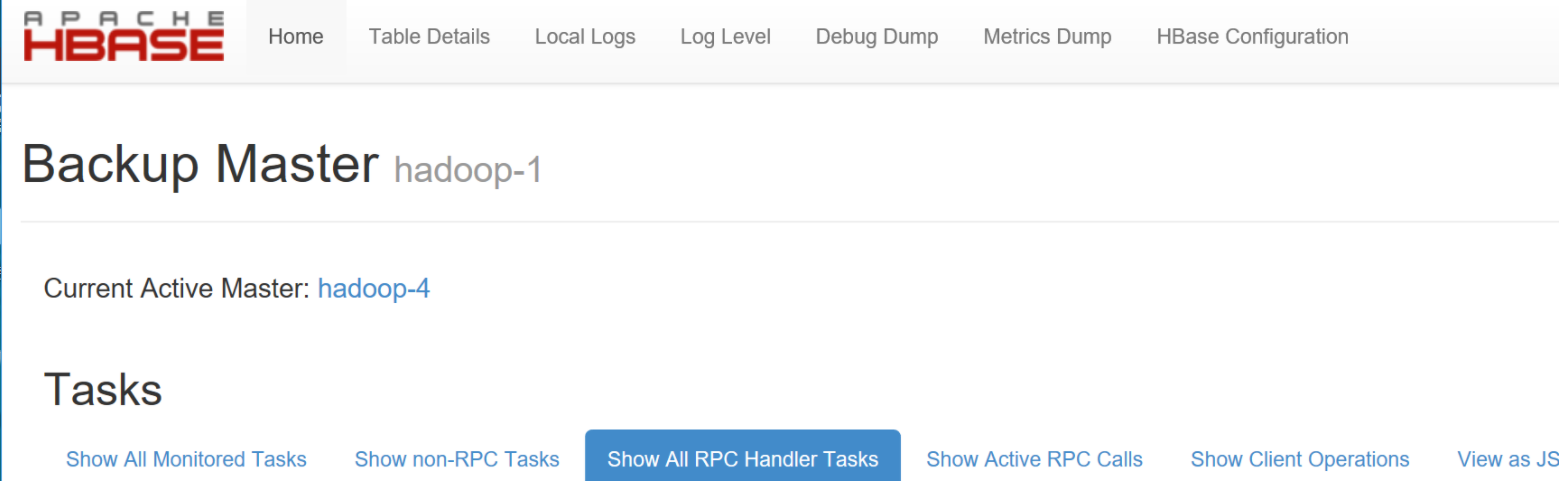

HA test

Open a browser and go to the active master's web UI at http://19

Then open the backup master's address in the browser: http://192.168.1.115:16010
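These two pages only show the roles before any failure. A usual way to exercise failover is to stop the active HMaster on hadoop-2 (for example with hbase-daemon.sh stop master) and then check that one of the backups, hadoop-1 or hadoop-4, takes over as active master. Under the hood, HMaster election goes through ZooKeeper: the master that manages to create the ephemeral /hbase/master znode becomes active, the others wait as backups, and the znode disappears when the active master's session ends. The toy model below imitates only that idea in plain Scala; ToyZk and its methods are hypothetical and are not ZooKeeper or HBase APIs.

```scala
// Toy stand-in for ZooKeeper holding the /hbase/master znode (hypothetical, not a real API)
final class ToyZk {
  private var masterZnode: Option[String] = None

  // Like creating an ephemeral znode: succeeds only if no one holds it yet
  def tryCreateMaster(host: String): Boolean = masterZnode match {
    case None    => masterZnode = Some(host); true
    case Some(_) => false
  }

  // An ephemeral znode is removed when its owner's session ends
  def sessionEnded(host: String): Unit =
    if (masterZnode.contains(host)) masterZnode = None

  def activeMaster: Option[String] = masterZnode
}

val zk = new ToyZk
zk.tryCreateMaster("hadoop-2")                           // started by start-hbase.sh, wins
List("hadoop-1", "hadoop-4").foreach(zk.tryCreateMaster) // backups fail and keep waiting
println(zk.activeMaster)                                 // Some(hadoop-2)

// Stopping the active master ends its session; the waiting backups retry.
// In a real cluster this is a race; here hadoop-1 simply tries first.
zk.sessionEnded("hadoop-2")
List("hadoop-1", "hadoop-4").foreach(zk.tryCreateMaster)
println(zk.activeMaster)                                 // Some(hadoop-1)
```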

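Writing to HBase with Spark

Use Spark to insert about one million rows (keys 0 to 1000000) into the table word. The code below does not create the table itself, so word, with column family f1, must already exist, for example via create 'word', 'f1' in the HBase shell.

The code is as follows: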

import org.apache.hadoop.hbase.{HBaseConfiguration, TableName}
import org.apache.hadoop.hbase.client.{ConnectionFactory, Put}
import org.apache.hadoop.hbase.util.Bytes
import org.apache.spark.{SparkConf, SparkContext}

object testhbase {
  def main(args: Array[String]): Unit = {
    // Backslashes in the Windows jar path must be escaped in a Scala string literal
    val conf = new SparkConf()
      .setMaster("spark://192.168.1.116:7077")
      .setAppName("hbase")
      .setJars(List("C:\\Users\\hao\\IdeaProjects\\test\\out\\artifacts\\test_jar\\test.jar"))
    val sc = new SparkContext(conf)

    // Generates the values 0..1000000 (1,000,001 rows) inside a single partition
    val rdd = sc.makeRDD(Array(1)).flatMap(_ => 0 to 1000000)

    rdd.foreachPartition(x => {
      // One HBase connection per partition, created on the executor
      val hbaseConf = HBaseConfiguration.create()
      hbaseConf.set("hbase.zookeeper.quorum", "hadoop-2,hadoop-3,hadoop-5")
      hbaseConf.set("hbase.zookeeper.property.clientPort", "2181")
      // Skip the hbase-default.xml version check
      hbaseConf.set("hbase.defaults.for.version.skip", "true")
      val hbaseConn = ConnectionFactory.createConnection(hbaseConf)
      val table = hbaseConn.getTable(TableName.valueOf("word"))
      try {
        x.foreach(value => {
          // Row key and cell f1:c1 both hold the number as a string
          val put = new Put(Bytes.toBytes(value.toString))
          put.addColumn(Bytes.toBytes("f1"), Bytes.toBytes("c1"), Bytes.toBytes(value.toString))
          table.put(put)
        })
      } finally {
        // Release the table and connection even if a put fails
        table.close()
        hbaseConn.close()
      }
    })
    sc.stop()
  }
}
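The write code above uses value.toString as the row key. HBase keeps rows sorted by the raw bytes of the row key, so a later scan of word returns these keys in string order, not numeric order. The plain-Scala sketch below (no HBase needed) shows the effect by comparing ASCII digit strings, whose String order matches their byte order, and one common remedy, fixed-width zero padding. paddedKey is a hypothetical helper, not part of the original code; switching to it would change the stored row keys.

```scala
val values = List(0, 1, 2, 10, 100, 1000000)

// Keys as the write code builds them: the decimal string of each value
val plainKeys = values.map(_.toString)
// Lexicographic order, which is what an HBase scan returns for these ASCII keys
println(plainKeys.sorted)   // List(0, 1, 10, 100, 1000000, 2)

// Hypothetical alternative: pad to 7 digits (enough for 1000000) so that
// lexicographic order and numeric order agree
def paddedKey(v: Int): String = f"$v%07d"
val paddedKeys = values.map(paddedKey)
println(paddedKeys.sorted)  // List(0000000, 0000001, 0000002, 0000010, 0000100, 1000000)
```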

7. Reading an HBase table with Spark and saving it to HDFS

// Run in spark-shell, where the SparkContext sc is already defined
import org.apache.hadoop.hbase.{HBaseConfiguration, HConstants}
import org.apache.hadoop.hbase.client.Result
import org.apache.hadoop.hbase.io.ImmutableBytesWritable
import org.apache.hadoop.hbase.mapreduce.TableInputFormat
import org.apache.hadoop.hbase.util.Bytes

val tmpConf = HBaseConfiguration.create()
tmpConf.set("hbase.zookeeper.quorum", "hadoop-2,hadoop-3,hadoop-5")
tmpConf.set("hbase.zookeeper.property.clientPort", "2181")
tmpConf.set(TableInputFormat.INPUT_TABLE, "word")
// Allow up to 120 s between scanner calls before the scanner times out
tmpConf.set(HConstants.HBASE_CLIENT_SCANNER_TIMEOUT_PERIOD, "120000")

val hBaseRDD = sc.newAPIHadoopRDD(tmpConf, classOf[TableInputFormat],
  classOf[ImmutableBytesWritable], classOf[Result])

// One "log_date,area_code" line per row. Adjust the family/qualifier names to the
// table's real schema (the word table written above only has f1:c1).
val lineRdd = hBaseRDD.map { case (_, r) =>
  // Cell value as a string, or "0" when the row has no such cell (getValue returns null)
  def cell(family: String, qualifier: String): String =
    Option(r.getValue(Bytes.toBytes(family), Bytes.toBytes(qualifier)))
      .map(v => Bytes.toString(v))
      .getOrElse("0")
  cell("data", "log_date") + "," + cell("data", "area_code")
}

// One part file per input partition (TableInputFormat creates one split per region)
lineRdd.saveAsTextFile("hdfs://ns1/hbasehdfs01")
// repartition(1) merges the output into a single part file
lineRdd.repartition(1).saveAsTextFile("hdfs://ns1/hbasehdfs")
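The read code turns each HBase row into one text line of the form log_date,area_code and writes 0 for any column the row lacks. To make that rule easy to check without a cluster, the sketch below models a row as a plain Scala Map from (family, qualifier) to bytes. This Row type and the sample values are made up for illustration; they stand in for HBase's Result, whose getValue returns null for a missing cell.

```scala
import java.nio.charset.StandardCharsets.UTF_8

// Hypothetical stand-in for an HBase Result: (family, qualifier) -> cell bytes
type Row = Map[(String, String), Array[Byte]]

// Cell value as a UTF-8 string, or "0" when the row has no such cell
def cell(row: Row, family: String, qualifier: String): String =
  row.get((family, qualifier)).map(bytes => new String(bytes, UTF_8)).getOrElse("0")

// Same column layout as the read code: data:log_date, then data:area_code
def toLine(row: Row): String =
  cell(row, "data", "log_date") + "," + cell(row, "data", "area_code")

val full: Row = Map(
  ("data", "log_date") -> "d1".getBytes(UTF_8),
  ("data", "area_code") -> "a1".getBytes(UTF_8))
val partial: Row = Map(("data", "log_date") -> "d2".getBytes(UTF_8))

println(toLine(full))     // d1,a1
println(toLine(partial))  // d2,0
```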