1. Get all the text under a given node:

```python
data = response.xpath('//div[@id="zoomcon"]')
content = ''.join(data.xpath('string(.)').extract())
```

This code collects all the text inside the div with a given id (here `zoomcon`), including the text of nested elements:

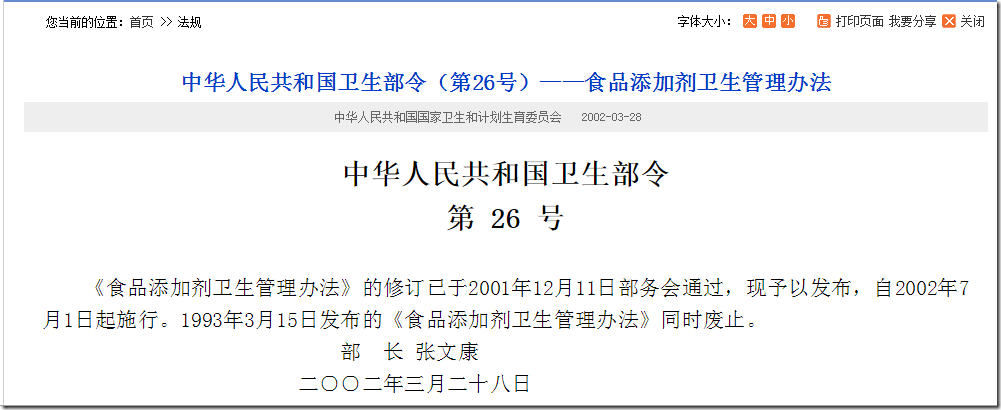

http://www.nhfpc.gov.cn/fzs/s3576/200804/cdbda975a377456a82337dfe1cf176a1.shtml

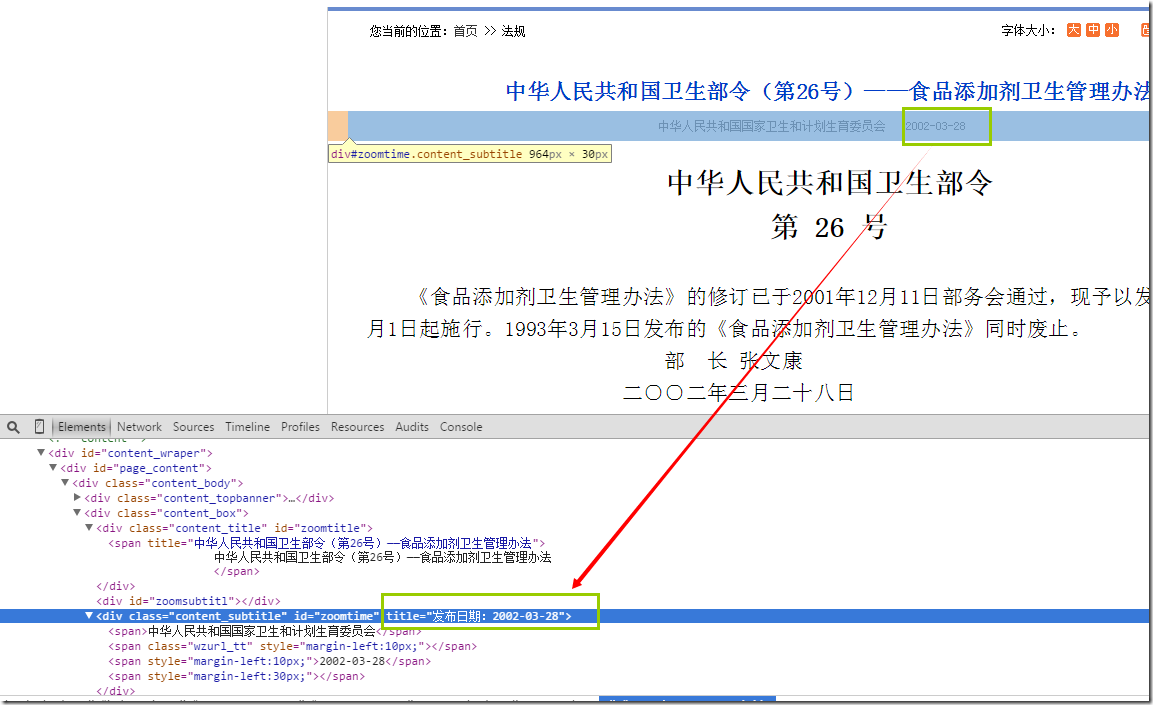
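To see what `string(.)` returns without running a crawl, the sketch below uses Python's standard-library `xml.etree.ElementTree` as a stand-in for Scrapy's selector. This is an assumption for illustration: ElementTree only parses well-formed markup and supports a subset of XPath. Its `itertext()` yields every descendant text node in document order, which is what `string(.)` concatenates. The HTML snippet and the `node_text` helper are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed stand-in for the article body
HTML = """<html><body>
<div id="zoomcon"><p>First <b>bold</b> part.</p><p>Second part.</p></div>
</body></html>"""


def node_text(root, div_id):
    """Return all text under <div id=div_id>, like XPath string(.)."""
    # ElementTree understands the [@attr='value'] predicate in find()
    div = root.find(".//div[@id='%s']" % div_id)
    if div is None:
        return ""
    # itertext() walks the div and all its descendants in document order
    return "".join(div.itertext())


root = ET.fromstring(HTML)
print(node_text(root, "zoomcon"))  # First bold part.Second part.
```

By contrast, `//div[@id="zoomcon"]/text()` returns only the text nodes that are direct children of the div, which would be empty here because all of the text sits inside the `<p>` elements.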

2. Get the value of an HTML node's attribute

```python
>>> response.xpath("//div[@id='zoomtime']").extract()
[u'<div class="content_subtitle" id="zoomtime" title="\u53d1\u5e03\u65e5\u671f\uff1a2010-10-26"><span>\u4e2d\u534e\u4eba\u6c11\u5171\u548c\u56fd\u56fd\u5bb6\u536b\u751f\u548c\u8ba1\u5212\u751f\u80b2\u59d4\u5458\u4f1a</span><span class="wzurl_tt" style="margin-left:10px;"></span><span style="margin-left:10px;">2010-10-26</span> <span style="margin-left:30px;"></span> </div>']
>>> response.xpath("//div[@id='zoomtime']/@title").extract()
[u'\u53d1\u5e03\u65e5\u671f\uff1a2010-10-26']
```

Here we want the value of the `title` attribute on the element with a given id; appending `/@title` to the XPath is enough to get it:

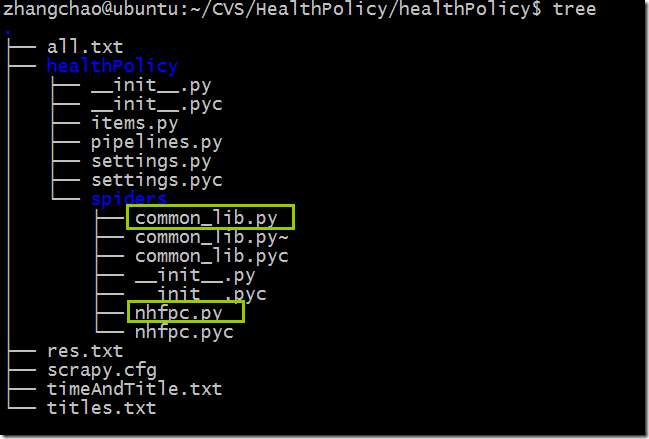
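The attribute value is the label 发布日期 ("publish date") followed by a full-width colon (U+FF1A) and the date, so splitting on an ASCII `:` will not separate them. A minimal sketch, again using `xml.etree.ElementTree` as a stand-in for Scrapy's selector: `Element.get("title")` plays the role of `@title`, and the hypothetical helper `to_pub_time` normalises the colon and builds the `YYYY-MM-DD 00:00:00` string that the spider stores.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

# Trimmed copy of the zoomtime div shown earlier
HTML = ('<html><body><div class="content_subtitle" id="zoomtime" '
        'title="\u53d1\u5e03\u65e5\u671f\uff1a2010-10-26">'
        '<span style="margin-left:10px;">2010-10-26</span></div></body></html>')


def to_pub_time(title_attr):
    """Turn '发布日期：2010-10-26' into '2010-10-26 00:00:00'."""
    # Map the full-width colon to ASCII so either colon style splits correctly
    date_part = title_attr.replace("\uff1a", ":").split(":")[-1].strip()
    # strptime validates the date; strftime appends midnight
    return datetime.strptime(date_part, "%Y-%m-%d").strftime("%Y-%m-%d %H:%M:%S")


root = ET.fromstring(HTML)
div = root.find(".//div[@id='zoomtime']")
title = div.get("title")  # same value as //div[@id='zoomtime']/@title
print(to_pub_time(title))  # 2010-10-26 00:00:00
```

Taking `[-1]` instead of `[1]` also keeps the helper from raising `IndexError` when a value contains no colon at all.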

The Scrapy project structure:

nhfpc.py

```python
# -*- coding: utf-8 -*-
import sys
import hashlib
from datetime import datetime

import scrapy
from scrapy.contrib.spiders import CrawlSpider, Rule
from scrapy.contrib.linkextractors import LinkExtractor

from common_lib import insertDB

# Python 2 hack: make implicit str/unicode conversions use UTF-8
reload(sys)
sys.setdefaultencoding('utf-8')


class NhfpcItem(scrapy.Item):
    url = scrapy.Field()
    name = scrapy.Field()
    description = scrapy.Field()
    size = scrapy.Field()
    dateTime = scrapy.Field()


class NhfpcSpider(CrawlSpider):
    name = "nhfpc"
    allowed_domains = ["nhfpc.gov.cn"]
    start_urls = (
        'http://www.nhfpc.gov.cn/fzs/pzcfg/list.shtml',
        'http://www.nhfpc.gov.cn/fzs/pzcfg/list_2.shtml',
        'http://www.nhfpc.gov.cn/fzs/pzcfg/list_3.shtml',
        'http://www.nhfpc.gov.cn/fzs/pzcfg/list_4.shtml',
        'http://www.nhfpc.gov.cn/fzs/pzcfg/list_5.shtml',
        'http://www.nhfpc.gov.cn/fzs/pzcfg/list_6.shtml',
        'http://www.nhfpc.gov.cn/fzs/pzcfg/list_7.shtml',
    )
    rules = (
        # Article pages sit under a six-digit YYYYMM/ directory, e.g. .../200804/...
        Rule(LinkExtractor(allow=r'.*\d{6}/.*'), callback='parse_item'),
        # Links containing 201307 are followed but not parsed
        Rule(LinkExtractor(allow=r'.*201307.*'), follow=True),
    )

    def parse_item(self, response):
        # Title: the div id is "zoomtitle" on some pages and "zoomtitl" on others
        retList = response.xpath("//div[@id='zoomtitle']/*/text()").extract()
        if len(retList) == 0:
            retList = response.xpath("//div[@id='zoomtitl']/*/text()").extract()
        title = retList[0].strip() if retList else ""

        # Body: all text under the content div (its id also varies by template)
        data = response.xpath('//div[@id="zoomcon"]')
        if len(data) == 0:
            data = response.xpath('//div[@id="contentzoom"]')
        content = ''.join(data.xpath('string(.)').extract())

        # Publish date: @title looks like u'发布日期：2010-10-26' (full-width colon)
        pubTime = "1970-01-01 00:00:00"  # fallback when no date is found
        time = response.xpath("//div[@id='zoomtime']/@title").extract()
        if len(time) == 0:
            time = response.xpath("//ucmspubtime/text()").extract()
        else:
            time = ''.join(time).replace(u'\uff1a', u':').split(u':')[-1]
        if time:
            pubTime = ''.join(time).strip() + " 00:00:00"

        insertTime = datetime.now()
        webSite = "nhfpc.gov.cn"
        md5Url = hashlib.md5(response.url.encode('utf-8')).hexdigest()

        # Order must match the health_policy columns after hid:
        # title, md5url, pub_time, inser_time, website, content, url
        values = [title, md5Url, pubTime, insertTime, webSite, content, response.url]
        insertDB(values)
```
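The first rule only works with the backslash in `\d{6}`; without it the regex looks for six literal `d` characters and never matches an article URL. The sketch below checks both patterns with Python's `re.search`, which, as far as I know, is how `LinkExtractor` applies its `allow` patterns. `classify` is a hypothetical helper that returns the action of the first matching rule, roughly mirroring how `CrawlSpider` hands each extracted link to the first rule that picks it up.

```python
import re

# Patterns from the spider's rules
ARTICLE = r'.*\d{6}/.*'  # article pages under a YYYYMM/ directory
FOLLOW = r'.*201307.*'
BROKEN = r'.*d{6}/.*'    # the same pattern with the backslash lost

RULES = [(ARTICLE, "parse_item"), (FOLLOW, "follow")]


def classify(url):
    """Return the action of the first rule whose pattern matches url, else None."""
    for pattern, action in RULES:
        if re.search(pattern, url):
            return action
    return None


article = "http://www.nhfpc.gov.cn/fzs/s3576/200804/cdbda975a377456a82337dfe1cf176a1.shtml"
listing = "http://www.nhfpc.gov.cn/fzs/pzcfg/list_2.shtml"

print(classify(article))                   # parse_item
print(classify(listing))                   # None
print(re.search(BROKEN, article) is None)  # True
```

The list pages come from `start_urls`, so they are fetched even though neither rule matches them.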

common_lib.py

```python
#!/usr/bin/python
# -*- coding: utf-8 -*-
'''
This file includes the common routines needed by the crawler project.
Author: Justnzhang @(uestczhangchao@qq.com)
Time: 2014-07-28 15:03:44
'''
import sys
import MySQLdb

reload(sys)
sys.setdefaultencoding('utf-8')


def insertDB(dictData):
    """Insert one row into health_policy.

    dictData is a list in column order:
    [title, md5url, pub_time, inser_time, website, content, url]
    """
    print "insertDB"
    print dictData
    try:
        conn_local = MySQLdb.connect(host='192.168.30.7', user='xxx', passwd='xxx', db='xxx', port=3306)
        conn_local.set_character_set('utf8')
        cur_local = conn_local.cursor()
        cur_local.execute('SET NAMES utf8;')
        cur_local.execute('SET CHARACTER SET utf8;')
        cur_local.execute('SET character_set_connection=utf8;')
        # hid is AUTO_INCREMENT, so pass NULL; the other 7 columns get one %s each
        cur_local.execute("insert into health_policy values(NULL,%s,%s,%s,%s,%s,%s,%s)", dictData)
        conn_local.commit()
        cur_local.close()
        conn_local.close()
    except MySQLdb.Error as e:
        print "Mysql Error %d: %s" % (e.args[0], e.args[1])


if __name__ == '__main__':
    # Manual smoke test with dummy values (7 fields, one per column after hid)
    values = ["title", "md5url", "2014-04-11 00:00:00", "2014-04-11 00:00:00",
              "website", "content", "url"]
    insertDB(values)
```
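One way to check that the number of placeholders matches the table is to run the same kind of statement against an in-memory SQLite copy of `health_policy` with Python's `sqlite3` module. This is a sketch under stated assumptions: SQLite stands in for MySQL, so the placeholder is `?` rather than MySQLdb's `%s`, the column types are simplified, and the row values are dummy data. The helper `insert_row` is hypothetical.

```python
import hashlib
import sqlite3
from datetime import datetime

# Simplified SQLite version of health_policy: same 8 columns, same order
SCHEMA = """CREATE TABLE health_policy (
    hid INTEGER PRIMARY KEY AUTOINCREMENT,
    title TEXT,
    md5url TEXT NOT NULL,
    pub_time TEXT,
    inser_time TEXT NOT NULL,
    website TEXT,
    content TEXT,
    url TEXT
)"""


def insert_row(conn, values):
    """Insert [title, md5url, pub_time, inser_time, website, content, url]."""
    # NULL lets the database assign hid; 7 placeholders for the other columns
    conn.execute("INSERT INTO health_policy VALUES (NULL, ?, ?, ?, ?, ?, ?, ?)", values)
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
url = "http://www.nhfpc.gov.cn/fzs/s3576/200804/cdbda975a377456a82337dfe1cf176a1.shtml"
row = ["dummy title",
       hashlib.md5(url.encode("utf-8")).hexdigest(),  # same fingerprint as md5Url in the spider
       "2010-10-26 00:00:00",
       datetime.now().strftime("%Y-%m-%d %H:%M:%S"),
       "nhfpc.gov.cn",
       "dummy content",
       url]
insert_row(conn, row)
print(conn.execute("SELECT hid, md5url, url FROM health_policy").fetchone())
```

With only six placeholders after `NULL`, SQLite rejects the statement because the table has eight columns, which is the mismatch this check is meant to catch.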

```sql
SET FOREIGN_KEY_CHECKS=0;

-- ----------------------------
-- Table structure for health_policy
-- ----------------------------
DROP TABLE IF EXISTS `health_policy`;
CREATE TABLE `health_policy` (
  `hid` int(11) NOT NULL AUTO_INCREMENT,
  `title` varchar(1000) DEFAULT NULL COMMENT 'policy title',
  `md5url` varchar(1000) NOT NULL COMMENT 'MD5 hash of the URL',
  `pub_time` datetime DEFAULT NULL COMMENT 'publish time',
  `inser_time` datetime NOT NULL COMMENT 'insert time',
  `website` varchar(1000) DEFAULT NULL COMMENT 'source website',
  `content` longtext COMMENT 'policy content',
  `url` varchar(1000) DEFAULT NULL,
  PRIMARY KEY (`hid`)
) ENGINE=InnoDB AUTO_INCREMENT=594 DEFAULT CHARSET=utf8;
```