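I wanted to set up a test Hadoop environment on a virtual machine for debugging and reading the Hadoop source code, but running the following command on the VM failed: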

```
$ hostname -i
hostname: Unknown host
```

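This happens because the machine's hostname has no entry in /etc/hosts. If the Linux environment is left in this state, the datanode and tasktracker will fail at startup.

The datanode and namenode will throw the following exception: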

```
2015-01-28 12:36:36,506 INFO org.apache.hadoop.metrics2.impl.MetricsConfig: loaded properties from hadoop-metrics2.properties
2015-01-28 12:36:36,535 INFO org.apache.hadoop.metrics2.impl.MetricsSourceAdapter: MBean for source MetricsSystem,sub=Stats registered.
2015-01-28 12:36:36,540 ERROR org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Error getting localhost name. Using 'localhost'...
java.net.UnknownHostException: PC_PAT1: PC_PAT1: Name or service not known
	at java.net.InetAddress.getLocalHost(InetAddress.java:1473)
	at org.apache.hadoop.metrics2.impl.MetricsSystemImpl.getHostname(MetricsSystemImpl.java:463)
	at org.apache.hadoop.metrics2.impl.MetricsSystemImpl.configureSystem(MetricsSystemImpl.java:394)
	at org.apache.hadoop.metrics2.impl.MetricsSystemImpl.configure(MetricsSystemImpl.java:390)
	at org.apache.hadoop.metrics2.impl.MetricsSystemImpl.start(MetricsSystemImpl.java:152)
	at org.apache.hadoop.metrics2.impl.MetricsSystemImpl.init(MetricsSystemImpl.java:133)
	at org.apache.hadoop.metrics2.lib.DefaultMetricsSystem.init(DefaultMetricsSystem.java:40)
	at org.apache.hadoop.metrics2.lib.DefaultMetricsSystem.initialize(DefaultMetricsSystem.java:50)
	at org.apache.hadoop.hdfs.server.datanode.DataNode.instantiateDataNode(DataNode.java:1520)
	at org.apache.hadoop.hdfs.server.datanode.DataNode.createDataNode(DataNode.java:1539)
	at org.apache.hadoop.hdfs.server.datanode.DataNode.secureMain(DataNode.java:1665)
	at org.apache.hadoop.hdfs.server.datanode.DataNode.main(DataNode.java:1682)
Caused by: java.net.UnknownHostException: PC_PAT1: Name or service not known
	at java.net.Inet6AddressImpl.lookupAllHostAddr(Native Method)
	at java.net.InetAddress$1.lookupAllHostAddr(InetAddress.java:901)
	at java.net.InetAddress.getAddressesFromNameService(InetAddress.java:1293)
	at java.net.InetAddress.getLocalHost(InetAddress.java:1469)
	... 11 more
```
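The trace shows that the failing call is `InetAddress.getLocalHost()`. Before starting Hadoop, you can check whether the JVM can resolve the local hostname by making that same call yourself. This is a minimal sketch, not part of Hadoop; the class name `LocalHostCheck` and the `OK`/`UNRESOLVED` output format are hypothetical.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

public class LocalHostCheck {
    // Makes the same call that fails at the top of the stack trace:
    // InetAddress.getLocalHost() resolves the machine's own hostname.
    static String describeLocalHost() {
        try {
            InetAddress local = InetAddress.getLocalHost();
            return "OK: " + local.getHostName() + " -> " + local.getHostAddress();
        } catch (UnknownHostException e) {
            // The same exception Hadoop's metrics system logged above.
            return "UNRESOLVED: " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        String result = describeLocalHost();
        System.out.println(result);
        if (result.startsWith("UNRESOLVED")) {
            System.exit(1); // non-zero exit code so the check can be scripted
        }
    }
}
```

On a VM in the state described above, this would be expected to report `UNRESOLVED` with a message similar to the `UnknownHostException` in the log.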

The fix is to add a line to /etc/hosts that maps the hostname to the machine's IP address, namely `172.18.140.24 PC_PAT1`:

```
hadoop@PC_PAT1:~/hadoop-1.0.3> less /etc/hosts
#
# hosts This file describes a number of hostname-to-address
# mappings for the TCP/IP subsystem. It is mostly
# used at boot time, when no name servers are running.
# On small systems, this file can be used instead of a
# "named" name server.
# Syntax:
#
# IP-Address Full-Qualified-Hostname Short-Hostname
#
#127.0.0.1 localhost
#127.0.0.1 localhost.localdomain localhost cpyftest-2
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
172.18.140.24 PC_PAT1
# special IPv6 addresses
::1 localhost ipv6-localhost ipv6-loopback
fe00::0 ipv6-localnet
ff00::0 ipv6-mcastprefix
ff02::1 ipv6-allnodes
ff02::2 ipv6-allrouters
ff02::3 ipv6-allhosts
```

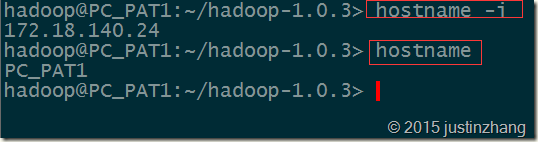

After adding the mapping, `hostname -i` returns the correct result:

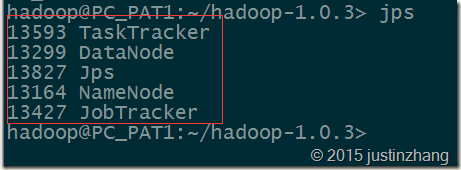
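To make the hosts-file format concrete (an IP address first, then one or more names, with `#` starting a comment), here is a minimal sketch of looking up a name in hosts-file text. It only illustrates the file format: real lookups go through the system resolver, which may also consult DNS. The class and method names (`HostsFileLookup.lookup`) are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

public class HostsFileLookup {
    // Returns the IP address of the first entry that lists `name`.
    // Each non-comment line has the form: IP-address hostname [aliases...]
    static Optional<String> lookup(List<String> lines, String name) {
        for (String raw : lines) {
            int hash = raw.indexOf('#');
            // Drop comments (whole-line or trailing) and surrounding whitespace.
            String line = (hash >= 0 ? raw.substring(0, hash) : raw).trim();
            if (line.isEmpty()) continue;
            String[] fields = line.split("\\s+");
            // fields[0] is the address; the remaining fields are names.
            for (int i = 1; i < fields.length; i++) {
                // Hostnames are case-insensitive.
                if (fields[i].equalsIgnoreCase(name)) return Optional.of(fields[0]);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        List<String> hosts = Arrays.asList(
            "#127.0.0.1 localhost",
            "127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4",
            "172.18.140.24 PC_PAT1",
            "::1 localhost ipv6-localhost ipv6-loopback");
        System.out.println(lookup(hosts, "PC_PAT1").orElse("not found")); // prints 172.18.140.24
    }
}
```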

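After fixing the unknown-hostname problem and restarting the Hadoop cluster, the datanode/tasktracker and namenode/jobtracker processes were launched, but the NameNode then logged this error: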

```
ERROR org.apache.hadoop.hdfs.server.namenode.NameNode: java.io.IOException: Incomplete HDFS URI, no host: hdfs://data_181.uc:9000
```

This was really frustrating. It turns out that in Hadoop a hostname must not contain an underscore ("_"), although a hyphen ("-") is fine.
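A likely explanation lies in how Java parses URIs. `java.net.URI` only accepts hostnames made of letters, digits, hyphens and dots (the RFC 2396 grammar). For an authority such as `data_181.uc:9000` it falls back to a "registry-based" authority, and `getHost()` returns `null`. The error text suggests that HDFS takes the host from the URI this way and gives up when it is missing. The sketch below shows only the `java.net.URI` behavior and does not call Hadoop; the class name `HdfsUriHostCheck` is hypothetical.

```java
import java.net.URI;

public class HdfsUriHostCheck {
    // True if java.net.URI recognizes a server-based host in the URI.
    // An underscore makes the hostname invalid, so getHost() returns null.
    static boolean hasHost(String uri) {
        return URI.create(uri).getHost() != null;
    }

    public static void main(String[] args) {
        String[] candidates = {
            "hdfs://data_181.uc:9000",  // underscore: no host
            "hdfs://data-181.uc:9000",  // hyphen: valid host
            "hdfs://PC_PAT1:9000"       // the VM's own name, also with an underscore
        };
        for (String c : candidates) {
            URI u = URI.create(c);
            System.out.println(c + " -> host=" + u.getHost() + ", authority=" + u.getAuthority());
        }
    }
}
```

Running `main` prints `host=null` for both underscore names and `host=data-181.uc` for the hyphenated one. Note that the VM's hostname in this post, `PC_PAT1`, also contains an underscore, so a URI built from it would hit the same problem.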
