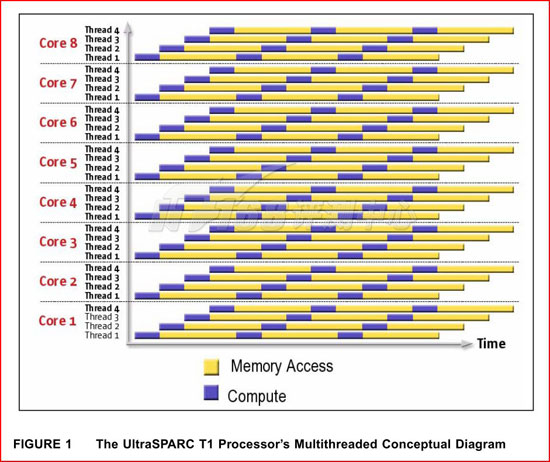

About multithreading:

In processors with a single thread per core, instruction execution stalls during a memory access and resumes only after the data read completes.

This strictly sequential processing wastes time while the core waits for memory.

With the Vertical Multithreading (VMT) mechanism in SPARC64 VI, when thread 1/process 1 starts a memory access, instruction control switches to thread 2, and thread 2/process 2 executes. When thread 2 starts its own memory access, control switches back to thread 1, and the suspended thread 1/process 1 resumes.

This two-thread switching mechanism minimizes processor wait time, makes efficient use of memory access time, and maximizes the performance of SPARC Enterprise mid-range and high-end models.

With the Simultaneous Multithreading (SMT) mechanism in SPARC64 VII/VII+, thread 1 and thread 2 run in parallel, further reducing processing time.
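To make the difference concrete, the following Python sketch models a core running threads that are each a hypothetical sequence of (compute cycles, memory-stall cycles) phases. It compares strictly sequential execution with VMT-style switching, where the core moves to another thread as soon as the running one issues a memory access. The cycle counts, the zero-cost thread switch, and the function names are illustrative assumptions, not SPARC64 VI parameters; SMT, where both threads issue instructions in the same cycle, is not modeled.

```python
def run_single(threads):
    """Run threads back to back; the core idles during every memory access."""
    return sum(compute + memory for thread in threads for compute, memory in thread)


def run_switch_on_miss(threads):
    """VMT-style switch-on-event model (assumes a zero-cycle thread switch).

    When the running thread issues a memory access it is parked until the
    data returns, and the core picks the thread that became ready earliest.
    """
    ready_at = [0] * len(threads)    # cycle at which each thread may run again
    next_phase = [0] * len(threads)  # index of each thread's next (compute, memory) phase
    now = 0
    while True:
        pending = [i for i, t in enumerate(threads) if next_phase[i] < len(t)]
        if not pending:
            break
        i = min(pending, key=lambda k: ready_at[k])
        now = max(now, ready_at[i])  # core idles if no thread is ready yet
        compute, memory = threads[i][next_phase[i]]
        now += compute
        ready_at[i] = now + memory   # thread waits for its memory access
        next_phase[i] += 1
    return max(ready_at, default=0)


workload = [[(10, 30)] * 4, [(10, 30)] * 4]
print(run_single(workload), run_switch_on_miss(workload))
```

For example, two threads that each perform four phases of 10 compute cycles followed by a 30-cycle memory access take 320 cycles when run back to back, but 170 cycles in the switching model, because each thread's memory stalls are partly hidden behind the other thread's computation.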

Process nodes: 22nm (Ivy Bridge, IVB), 32nm (Sandy Bridge, SNB), 45nm (Nehalem)

First generation (Nehalem):

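Compared with the large change from the Pentium 4's NetBurst architecture to the Core microarchitecture, the changes to the basic core from the Core microarchitecture to the Nehalem architecture are smaller.

Simply put, Nehalem is still built on the skeleton of the Core microarchitecture, with the addition of SMT, a three-level cache, multi-level TLBs and branch prediction, an integrated memory controller (IMC), QPI, and DDR3 support.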

Second generation (Sandy Bridge, 2010):

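The second-generation Core i7/i5 introduced Turbo Boost 2.0, an upgraded version of the intelligent dynamic acceleration technology "Turbo Boost" that first appeared in the first-generation (Nehalem) Core i7. Depending on the workload, it automatically runs all cores at a suitable speed, or shuts down some idle cores and raises the speed of the remaining ones. For example, a quad-core processor with a 95 W thermal design power (TDP) might shut down three cores completely and push the last one much higher, up to the 95 W TDP limit.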

For mobile CPUs, the model numbering scheme is as follows:

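- 29xxXM: Core i7, quad-core, eight threads, Turbo Boost, 8MB L3 cache, Extreme Edition

- 28xxQM: Core i7, quad-core, eight threads, Turbo Boost, 8MB L3 cache

- 27xxQM: Core i7, quad-core, eight threads, Turbo Boost, 6MB L3 cache

- 26xxM: Core i7, dual-core, four threads, Turbo Boost, 4MB L3 cache

- 25xxM: Core i5, dual-core, four threads, Turbo Boost, 3MB L3 cache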

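The suffixes mean:

- XM: quad-core Extreme Edition

- QM: quad-core standard edition

- M: dual-core standard edition

As this shows, the differences between Core i7/i5/i3 and their sub-series still depend on whether Hyper-Threading and Turbo Boost are supported and on the L3 cache size, which remains somewhat confusing.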
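The numbering scheme above is regular enough to decode mechanically. The Python sketch below transcribes the five series from the list into a lookup table keyed on the first two digits and checks that the suffix matches. The `decode` helper and `MobileSku` type are hypothetical, the input is a bare model number without the "i7-" prefix, and the table covers only the mobile series listed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MobileSku:
    brand: str
    cores: int
    threads: int
    l3_mb: int
    extreme: bool


# (expected suffix, properties) per series, transcribed from the list above.
SERIES = {
    "29": ("XM", MobileSku("Core i7", 4, 8, 8, True)),
    "28": ("QM", MobileSku("Core i7", 4, 8, 8, False)),
    "27": ("QM", MobileSku("Core i7", 4, 8, 6, False)),
    "26": ("M", MobileSku("Core i7", 2, 4, 4, False)),
    "25": ("M", MobileSku("Core i5", 2, 4, 3, False)),
}


def decode(model: str) -> MobileSku:
    """Decode a bare model number such as '2820QM' (hypothetical helper)."""
    digits, suffix = model[:4], model[4:].upper()
    if len(digits) != 4 or not digits.isdigit():
        raise ValueError(f"expected four leading digits: {model!r}")
    try:
        expected_suffix, sku = SERIES[digits[:2]]
    except KeyError:
        raise ValueError(f"series {digits[:2]}xx is not in the table") from None
    if suffix != expected_suffix:
        raise ValueError(f"{digits[:2]}xx parts use suffix {expected_suffix}, got {suffix!r}")
    return sku


print(decode("2820QM"))
```

Keying on the series digits and then validating the suffix means inconsistent inputs such as "2820M" are rejected instead of being silently misread.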

Third generation (Ivy Bridge, 2012):

Top Eight Features of the Intel Core i7 Processors for Test, Measurement, and Control

Publish Date: March 10, 2011


Table of Contents

- Introduction

- 1 – New Platform Architecture

- 2 – Higher-Performance Multiprocessor Systems with QPI

- 3 – CPU Performance Boost via Intel Turbo Boost Technology

- 4 – Improved Cache Latency with Smart L3 Cache

- 5 – Optimized Multithreaded Performance through Hyper-Threading

- 6 – Higher Data-Throughput via PCI Express 2.0 and DDR3 Memory Interface

- 7 – Improved Virtualization Performance

- 8 – Remote Management of Networked Systems with Intel Active Management Technology (AMT)

- Conclusion

Introduction

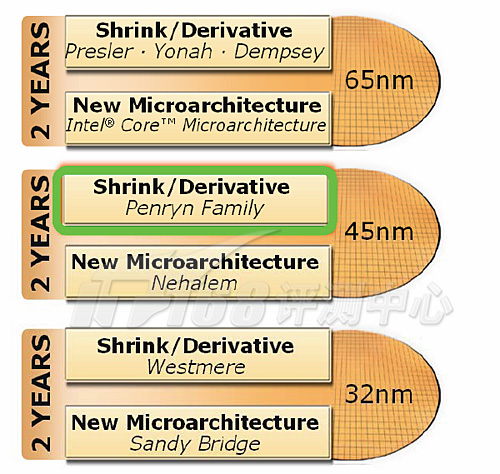

The Intel Penryn microarchitecture, which included the Core 2 family of processors, was the first mainstream Intel microarchitecture based on the 45nm fabrication process. This allowed Intel to create higher-performance processors that consumed similar or less power than previous-generation processors.

The Intel Nehalem microarchitecture, which encompasses the Core i7 class of processors, uses a 45nm fabrication process for the different processors in the Core i7 family. Besides taking advantage of the power benefits of 45nm, Intel made some dramatic changes in the Nehalem microarchitecture to offer new features and capabilities in the Core i7 family. This white paper explores some key features in detail, along with their impact on test, measurement, and control applications.

1 – New Platform Architecture

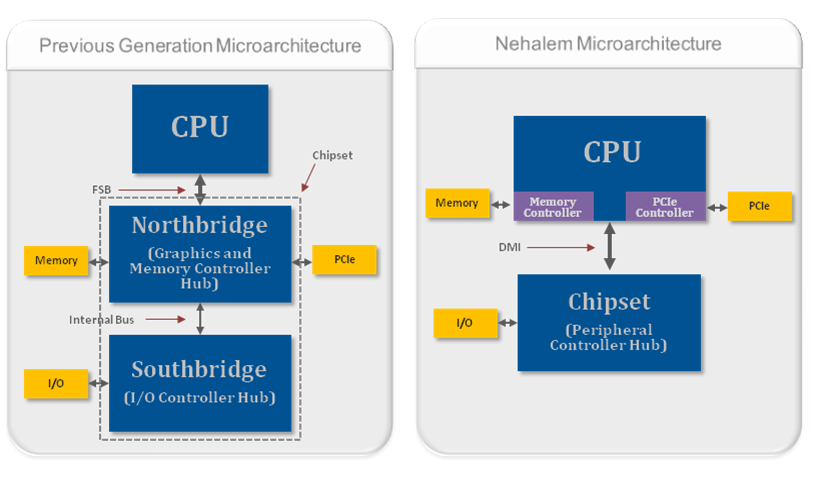

As shown in Figure 1, previous Intel microarchitectures for a single-processor system included three discrete components: a CPU; a Graphics and Memory Controller Hub (GMCH), also known as the northbridge; and an I/O Controller Hub (ICH), also known as the southbridge. The GMCH and ICH combined are referred to as the chipset.

In the older Penryn architecture, the front-side bus (FSB) was the interface for exchanging data between the CPU and the northbridge. Whenever the CPU read or wrote data in system memory or over the PCI Express bus, the data had to traverse the external FSB. In the Nehalem microarchitecture, Intel moved the memory controller and PCI Express controller from the northbridge onto the CPU die, reducing the number of external buses the data must traverse. These changes increase data throughput and reduce latency for memory and PCI Express transactions, which makes the Core i7 family well suited to test and measurement applications such as high-speed design validation and high-speed data record and playback.

Figure 1. These block diagrams represent the higher-level architectural differences between the previous generation of Intel microarchitectures and the new Nehalem microarchitecture for single-processor systems.

2 – Higher-Performance Multiprocessor Systems with QPI

Besides moving the memory controller onto the CPU in Nehalem processors, Intel also introduced a distributed shared-memory architecture using Intel QuickPath Interconnect (QPI). QPI is a point-to-point interconnect that connects a CPU to either a chipset or another CPU. It provides up to 25.6 GB/s of total bidirectional data throughput per link.
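The 25.6 GB/s figure can be reproduced with simple arithmetic. The sketch assumes a full-width link running at 6.4 GT/s and carrying 16 data bits (2 bytes) per transfer in each direction; neither value is stated in this paper, so treat them as assumptions corresponding to the fastest QPI speed grade. The function name is hypothetical.

```python
def qpi_bandwidth_gbps(gt_per_s: float = 6.4, bytes_per_transfer: int = 2):
    """Return (per-direction, total bidirectional) QPI throughput in GB/s.

    Assumed defaults: 6.4 GT/s and 2 data bytes per transfer per direction.
    """
    per_direction = gt_per_s * bytes_per_transfer  # GT/s x bytes = GB/s
    return per_direction, 2 * per_direction        # the link is full duplex


per_dir, total = qpi_bandwidth_gbps()
print(f"{per_dir:.1f} GB/s per direction, {total:.1f} GB/s bidirectional")
```

The "total bidirectional" number counts both directions at once, so a single transfer in one direction can use at most half of it.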

Intel's decision to move the memory controller into the CPU and introduce the QPI databus affects single-processor systems, but the impact is much more significant for multiprocessor systems. Figure 2 illustrates typical block diagrams of multiprocessor systems based on the previous generation and on the Nehalem microarchitecture.

Figure 2: These block diagrams represent the higher-level architectural differences between the previous generation of Intel microarchitectures and the new Nehalem microarchitecture for multiprocessor systems.

The Nehalem microarchitecture integrated the memory controller on the same die as the Core i7 processor cores and introduced the high-speed QPI databus. As shown in Figure 2, in a Nehalem-based multiprocessor system each CPU has direct access to its local memory and can also access memory local to other CPUs through QPI transactions. For example, one Core i7 processor can reach the memory region local to another processor through QPI, either in one direct hop or through multiple hops.

With these features, Core i7 processors lend themselves well to building higher-performance processing systems. For maximum performance gains in a multiprocessor system, application software should be multithreaded and aware of this architecture, and each execution thread should explicitly try to allocate the memory it uses from the memory local to the CPU on which it is executing.
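The hop counting and local-first allocation described above can be sketched as a breadth-first search over the socket interconnect. The four-socket ring topology, the `qpi_hops` and `best_memory_node` helpers, and the tie-breaking rule are hypothetical; on a real system the OS reports NUMA node distances, and allocation goes through OS or library NUMA interfaces rather than code like this.

```python
from collections import deque

# Hypothetical 4-socket topology: each socket has QPI links to two neighbours (a ring).
QPI_LINKS = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}


def qpi_hops(src, dst, links=QPI_LINKS):
    """Breadth-first search for the minimum number of QPI hops from src to dst."""
    seen = {src: 0}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return seen[node]
        for nxt in links[node]:
            if nxt not in seen:
                seen[nxt] = seen[node] + 1
                queue.append(nxt)
    raise ValueError(f"socket {dst} is unreachable from socket {src}")


def best_memory_node(cpu_socket, nodes_with_free_memory, links=QPI_LINKS):
    """Prefer local memory (0 hops); otherwise the node with the fewest hops.

    Ties are broken by the lowest node number (an arbitrary choice).
    """
    return min(nodes_with_free_memory, key=lambda n: (qpi_hops(cpu_socket, n, links), n))


print(qpi_hops(0, 2), best_memory_node(0, [2, 3]))
```

With socket 0 as the requesting CPU, local node 0 is always chosen when it has free memory; if only nodes 2 and 3 do, node 3 (one hop) is preferred over node 2 (two hops).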

By connecting a multiprocessor computer to a PXI system through PXI-MXI-Express, processor-intensive applications can take advantage of the multiple CPUs. Examples of these types of applications range from design simulation to hardware-in-the-loop (HIL).

3 – CPU Performance Boost via Intel Turbo Boost Technology

About five years ago, Intel and AMD introduced multicore CPUs. Since then, many applications and development environments have been upgraded to take advantage of multiple processing elements in a system. However, because of the software investment required to re-architect applications, a significant number of applications are still single-threaded. Before the advent of multicore CPUs, these applications gained performance simply by running on new CPUs with higher clock frequencies. Multicore CPUs broke this trend, as newer CPUs offered more discrete processing cores rather than higher clock frequencies.

To provide a performance boost for lightly threaded applications and to also optimize the processor power consumption, Intel introduced a new feature called Intel Turbo Boost. Intel Turbo Boost is an innovative feature that automatically allows active processor cores to run faster than the base operating frequency when certain conditions are met.

Intel Turbo Boost is activated when the OS requests the highest processor performance state. The maximum frequency a core on the Core i7 processor can reach depends on the number of active cores, and the time the processor spends in the Turbo Boost state depends on the workload and operating environment.

Figure 3. Intel Turbo Boost features offer processing performance gains for all applications regardless of the number of execution threads created.

Figure 3 illustrates how the operating frequencies of the processing cores in the quad-core Core i7 processor change to offer the best performance for a specific workload type. In an idle state, all four cores operate at their base clock frequency. If an application that creates four discrete execution threads is started, all four processing cores run at the quad-core turbo frequency. If the application creates only two execution threads, the two idle cores are put in a low-power state and their power budget is diverted to the two active cores, allowing them to run at an even higher clock frequency. The same applies when an application creates only a single execution thread, which can then run at the single-core turbo frequency.

The Intel Core i7-820QM quad-core processor that is used in the NI PXIe-8133 embedded controller has a base clock frequency of 1.73 GHz. If the application is using only one CPU core, Turbo Boost technology automatically increases the clock frequency of the active CPU core on the Intel Core i7-820QM processor from 1.73 GHz to up to 3.06 GHz and places the other three cores in an idle state, thereby providing optimal performance for all application types.

| Number of Active Cores | Mode | Base Frequency | Maximum Turbo Boost Frequency |
|---|---|---|---|
| 4 | Quad-Core | 1.73 GHz | 2.0 GHz |
| 2 | Dual-Core | 1.73 GHz | 2.8 GHz |
| 1 | Single-Core | 1.73 GHz | 3.06 GHz |

Figure 4. This table showcases how Turbo Boost is able to increase the performance for a variety of applications when using the PXIe-8133 in quad-core, dual-core, or single-core mode.
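The table can be read as a lookup from active-core count to maximum frequency. This sketch encodes the i7-820QM values shown and derives an idealized speedup over the 1.73 GHz base clock. The helper names, the 3-core fallback, and the assumption that performance scales linearly with clock frequency are illustrative simplifications, not Intel specifications; actual residency in a Turbo Boost state also depends on thermal, power, and current limits.

```python
BASE_GHZ = 1.73
# Maximum Turbo Boost frequency (GHz) by number of active cores, from the table above.
MAX_TURBO_GHZ = {4: 2.0, 2: 2.8, 1: 3.06}


def max_turbo_ghz(active_cores: int) -> float:
    if not 1 <= active_cores <= 4:
        raise ValueError("the i7-820QM has 1 to 4 active cores")
    # The table has no 3-core row; fall back to the next larger listed core count.
    # This is a conservative assumption, not a published value.
    return next(MAX_TURBO_GHZ[n] for n in sorted(MAX_TURBO_GHZ) if n >= active_cores)


def ideal_speedup(active_cores: int) -> float:
    # Upper bound for purely clock-bound code; memory-bound code gains less.
    return max_turbo_ghz(active_cores) / BASE_GHZ


for cores in (1, 2, 4):
    print(cores, max_turbo_ghz(cores), round(ideal_speedup(cores), 2))
```

For a single active core this gives 3.06 / 1.73, roughly a 1.77x upper bound for code whose run time is dominated by core clock speed.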

The time the processor spends in a specific Turbo Boost state depends on how soon it reaches its thermal, power, and current limits. With adequate power delivery and cooling, a Core i7 processor can stay in the Turbo Boost state for extended periods. On the NI PXIe-8133 embedded controller, users can manually set the number of active processor cores in the controller's BIOS to fine-tune Turbo Boost for specific application types.

Turbo Boost can be used in real-time applications, but it should be tested thoroughly to ensure the best possible execution determinism. On the NI PXIe-8133 embedded controller, Intel Turbo Boost can be disabled through the BIOS for applications that should not use it.

4 – Improved Cache Latency with Smart L3 Cache

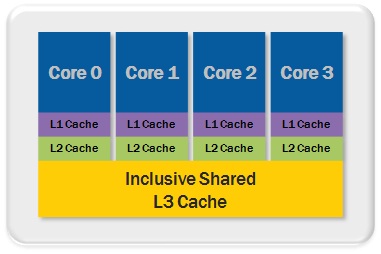

Cache is a block of high-speed memory for temporary data storage located on the same silicon die as the CPU. When a core in a multicore CPU needs specific data while executing instructions, it first searches its local caches (L1 and L2). If the data is not there (a cache miss), it then accesses the larger L3 cache. With an exclusive L3 cache, if that attempt also fails, the core performs cache snooping, searching the local caches of the other cores to check whether they hold the data it needs. If this attempt also misses, the core reads the data from the slower system RAM. Because cache latency is much lower than system RAM latency, a smarter and larger cache greatly improves processor performance.

The Core i7 family of processors features an inclusive shared L3 cache of up to 12 MB. Figure 4 shows the different types of caches and their layout for the Core i7-820QM quad-core processor used in the NI PXIe-8133 embedded controller. Each of its four cores has 32 KB of L1 instruction cache, 32 KB of L1 data cache, and 256 KB of L2 cache, and all four cores share 8 MB of L3 cache. Because the L3 cache is inclusive, it holds a copy of every line in the cores' local caches, which increases performance and reduces latency by cutting snooping traffic to the processor cores: if a lookup misses in the L3 cache, the data cannot be in any other core's local caches either, so it must come from outside the processor and no snooping is needed.

Figure 4. The inclusive shared L3 cache in the Core i7 processors offers better cache latency for increased performance.

This feature improves the overall performance of the processor and benefits a variety of applications, including test, measurement, and control.
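The difference between the exclusive and inclusive lookup paths described above can be modeled with plain sets. In this simplified sketch (the `lookup` function and the addresses are hypothetical), each core's L1/L2 is one set, and in the inclusive case the L3 set contains every line held in any core's local caches, so an L3 miss goes straight to RAM instead of snooping. Real hardware also tracks which cores hold a line on an L3 hit, which this model ignores.

```python
def lookup(addr, core, private, l3, inclusive):
    """Return the places searched, in order, until addr is found.

    private: list of sets, one per core, standing in for that core's L1/L2.
    l3: set of lines in the shared L3.
    """
    path = ["L1/L2"]
    if addr in private[core]:
        return path
    path.append("L3")
    if addr in l3:
        return path
    if not inclusive:
        # Exclusive L3: the line may live only in another core's local cache.
        path.append("snoop")
        if any(addr in private[c] for c in range(len(private)) if c != core):
            return path
    # Inclusive L3 miss (or failed snoop): the data is outside the processor.
    path.append("RAM")
    return path


private = [{0x10}, {0x20}, set(), set()]
l3_inclusive = {0x10, 0x20, 0x30}  # superset of every core's lines
l3_exclusive = {0x30}              # holds only lines not in any core's caches
print(lookup(0x40, 0, private, l3_inclusive, inclusive=True))
print(lookup(0x40, 0, private, l3_exclusive, inclusive=False))
```

For a line that is nowhere on chip, the inclusive path is L1/L2, L3, RAM, while the exclusive path adds a snoop of the other cores before going to RAM.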

5 – Optimized Multithreaded Performance through Hyper-Threading

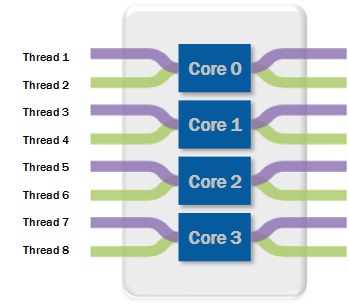

Intel introduced Hyper-Threading Technology on its processors in 2002. Hyper-threading exposes a single physical processing core as two logical cores, so that two execution threads can share the core's resources and increase system efficiency (see Figure 5). Because few OSs could clearly differentiate between logical and physical processing cores, Intel removed this feature when it introduced multicore CPUs. With the release of OSs such as Windows Vista and Windows 7, which are fully aware of the difference between logical and physical cores, Intel brought hyper-threading back in the Core i7 family of processors.

Hyper-Threading Technology benefits from larger caches and increased memory bandwidth of the Core i7 processors, delivering greater throughput and responsiveness for multithreaded applications.

Figure 5. Hyper-threading allows simultaneous execution of two execution threads on the same physical CPU core.
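One way to see why sharing a core between two threads raises efficiency is a toy issue-slot model: a core that can issue `width` instructions per cycle, running threads that can each supply only `ilp` independent instructions per cycle. The sketch below compares running two such threads one after the other with letting both fill the issue slots every cycle. The 4-wide core, the per-thread ILP limits, the function names, and the rule that thread A gets first pick each cycle are simplifying assumptions; cache and memory effects are ignored.

```python
import math


def cycles_sequential(n_a, n_b, ilp_a, ilp_b, width=4):
    """Without hyper-threading: one thread at a time, min(ilp, width) per cycle."""
    if min(ilp_a, ilp_b) < 1:
        raise ValueError("each thread must issue at least one instruction per cycle")
    return math.ceil(n_a / min(ilp_a, width)) + math.ceil(n_b / min(ilp_b, width))


def cycles_shared(n_a, n_b, ilp_a, ilp_b, width=4):
    """With hyper-threading: both threads fill the same issue slots each cycle."""
    if min(ilp_a, ilp_b) < 1:
        raise ValueError("each thread must issue at least one instruction per cycle")
    rem_a, rem_b, cycles = n_a, n_b, 0
    while rem_a > 0 or rem_b > 0:
        issue_a = min(ilp_a, width, rem_a)          # thread A picks first (simplification)
        issue_b = min(ilp_b, width - issue_a, rem_b)  # thread B uses the leftover slots
        rem_a -= issue_a
        rem_b -= issue_b
        cycles += 1
    return cycles


print(cycles_sequential(100, 100, 2, 2), cycles_shared(100, 100, 2, 2))
print(cycles_sequential(100, 100, 3, 3), cycles_shared(100, 100, 3, 3))
```

With a 4-wide core and two threads of 100 instructions that can each issue 2 per cycle, sequential execution takes 100 cycles and the shared core takes 50; at 3 per cycle it is 68 versus 56, because the threads now compete for the same slots.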

6 – Higher Data-Throughput via PCI Express 2.0 and DDR3 Memory Interface

To meet modern applications' need to move data faster, the Core i7 processors offer higher throughput on the external databus and the memory channels.

The new processors feature the PCI Express 2.0 databus, which doubles the data throughput of PCI Express 1.0 while maintaining full hardware and software compatibility with it. An x16 PCI Express 2.0 link has a maximum throughput of 8 GB/s per direction.

To allow data from the PCI Express 2.0 databus to be stored in system RAM, the Core i7 processors feature multiple DDR3-1333 memory channels (1333 MT/s, often labeled 1333 MHz). A system with two channels of DDR3-1333 RAM has a theoretical memory bandwidth of 21.3 GB/s, which comfortably covers the theoretical maximum throughput of an x16 PCI Express 2.0 link. The NI PXIe-8133 embedded controller uses both of these features to let users stream data at a theoretical 8 GB/s in a PXI Express system.
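The bandwidth figures in this section follow from link rates and bus widths. The sketch below relies on values not stated in this paper: PCI Express 2.0 signals at 5 GT/s per lane with 8b/10b encoding, and a DDR3-1333 channel performs 1333 million transfers per second over a 64-bit (8-byte) bus. The helper names are hypothetical, and the results are theoretical peaks, not sustained rates.

```python
def pcie2_gbps_per_direction(lanes: int, gt_per_s: float = 5.0) -> float:
    # 8b/10b encoding: 8 of every 10 bits on the wire carry payload.
    payload_gbit_per_lane = gt_per_s * 8 / 10
    return lanes * payload_gbit_per_lane / 8  # Gbit/s -> GB/s


def ddr3_gbps(mt_per_s: float, channels: int, bus_bytes: int = 8) -> float:
    # Each transfer moves bus_bytes (64 bits) per channel.
    return mt_per_s * bus_bytes * channels / 1000  # MB/s -> GB/s


pcie = pcie2_gbps_per_direction(16)
ram = ddr3_gbps(1333, channels=2)
print(f"x16 PCIe 2.0: {pcie:.1f} GB/s per direction")
print(f"dual-channel DDR3-1333: {ram:.1f} GB/s")
print(f"memory/PCIe ratio: {ram / pcie:.1f}x")
```

In theory, dual-channel DDR3-1333 offers about 2.7 times the data rate of one direction of an x16 PCI Express 2.0 link, leaving headroom for the CPU's own memory traffic while streaming.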

Certain test and measurement applications – such as high-speed design validation and RF record and playback – that require continuous acquisition or generation of data at extremely high rates benefit greatly from these improvements.

7 – Improved Virtualization Performance

Virtualization is a technology that enables multiple OSs to run side by side on the same processing hardware. In the test, measurement, and control space, engineers and scientists have used it to consolidate discrete computing nodes into a single system. With the Nehalem microarchitecture, Intel added features such as hardware-assisted page-table management and directed I/O to the Core i7 processors and their chipsets, allowing software to perform better in virtualized environments.

These improvements coupled with increases in memory bandwidth and processing performance allow engineers and scientists to build more capable and complex virtualized systems for test, measurement, and control.

8 – Remote Management of Networked Systems with Intel Active Management Technology (AMT)

AMT gives system administrators the ability to remotely monitor, maintain, and update systems. Intel AMT is part of the Intel Management Engine, which is built into the chipset of a Nehalem-based system. It allows administrators to boot systems from remote media, track hardware and software assets, and perform remote troubleshooting and recovery.

Engineers can use this feature to manage deployed automated test or control systems that need high uptime. Test, measurement, and control applications can use AMT to collect data remotely and monitor application status. When an application or system failure occurs, AMT lets the user diagnose the problem remotely and access debug screens, so the problem can be resolved sooner and without physical access to the system. When software updates are required, AMT allows them to be applied remotely, so the system is updated as quickly as possible, since downtime can be very costly. AMT provides many such remote management benefits for PXI systems.

For customers using the NI PXIe-8133, National Instruments offers an NI Labs download that enables AMT capabilities on this embedded controller: Intel Active Management Technology (AMT) for the NI PXIe-8133 Embedded Controller.

Conclusion

The Core i7 family of processors based on the Intel Nehalem microarchitecture offers many new and improved features that benefit a wide variety of applications including test, measurement, and control. Engineers and scientists can expect to see processing performance gains as well as increases in memory and data throughput when comparing this microarchitecture to previous microarchitectures.