An overview of the main types of neural network architecture

What is the architecture of a neural network?

It is the way the neurons in a neural network are organized.

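1. The most common type of architecture in practical applications is the feed-forward neural network. Information comes in at the input units and flows in one direction, through the hidden layers, until it reaches the output units.

2. A very interesting type of architecture is the recurrent neural network, in which information can flow round in cycles. These networks can remember information for a while. They can exhibit all sorts of interesting oscillations, but they are much more difficult to train, in part because they are so much more complicated in what they can do. Recently, however, people have made a lot of progress in training recurrent neural networks, and they can now do some impressive things.

3. The last type of architecture is the symmetrically connected network, in which the weights are the same in both directions between two units.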

Feed-forward neural networks

• These are the commonest type of neural network in practical applications.

– The first layer is the input and the last layer is the output.

– If there is more than one hidden layer, we call them “deep” neural networks.

• They compute a series of transformations between their input and output. So at each layer, you get a new representation of the input in which things that were similar in the previous layer may have become less similar, or things that were dissimilar in the previous layer may have become more similar. In speech recognition, for example, we'd like the same thing said by different speakers to become more similar, and different things said by the same speaker to become less similar, as we go up through the layers of the network.

– In order to achieve this, we need the activities of the neurons in each layer to be a non-linear function of the activities in the layer below.

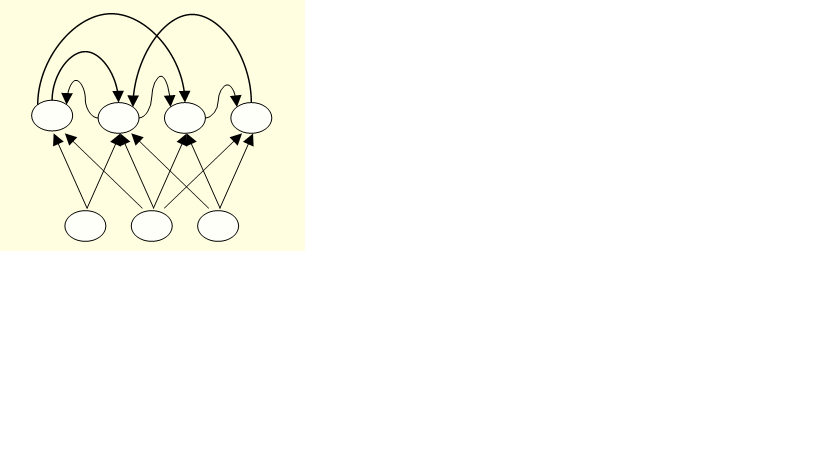
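A minimal sketch of the layer-by-layer computation described above, assuming logistic (sigmoid) units and small hand-picked weights for a 2-2-1 network that computes XOR. The weights, the helper names `layer` and `forward`, and the XOR task itself are illustrative choices, not taken from the lecture. It shows both points made above: each layer is a non-linear function of the layer below, and the inputs (0, 1) and (1, 0), which are far apart in input space, end up with identical hidden representations.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weights, biases):
    """One feed-forward layer: weighted sum of the layer below, then a non-linearity."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hand-picked (illustrative) weights for a 2-2-1 network computing XOR.
HIDDEN_W = [[20.0, 20.0], [-20.0, -20.0]]   # hidden unit 1 ~ OR, hidden unit 2 ~ NAND
HIDDEN_B = [-10.0, 30.0]
OUTPUT_W = [[20.0, 20.0]]                   # output unit ~ AND of the two hidden units
OUTPUT_B = [-30.0]

def forward(x):
    """Return (hidden representation, output activity) for input x."""
    hidden = layer(x, HIDDEN_W, HIDDEN_B)
    output = layer(hidden, OUTPUT_W, OUTPUT_B)
    return hidden, output[0]

for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    hidden, y = forward(x)
    print(x, [round(h, 3) for h in hidden], round(y, 3))

# Dissimilar inputs, identical hidden representations.
print(math.dist([0, 1], [1, 0]))
print(math.dist(forward([0, 1])[0], forward([1, 0])[0]))
```

If the sigmoid were removed, every layer would be linear and the whole stack would collapse into a single linear map, which cannot compute XOR; that is why the non-linearity is needed.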

Recurrent networks

• These are much more powerful than feed-forward networks. They have directed cycles in their connection graph.

– That means you can sometimes get back to where you started by following the arrows.

• They can have complicated dynamics, and this can make them very difficult to train.

– There is a lot of interest at present in finding efficient ways of training recurrent nets.

• They are more biologically realistic.

Recurrent nets with multiple hidden layers are just a special case of a general recurrent neural net that has some of its hidden to hidden connections missing.
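Whether a net is recurrent depends only on its connection graph: does it contain a directed cycle? A minimal sketch, assuming the graph is given as a hypothetical adjacency dict that maps each unit to the units it sends connections to. It runs a depth-first search and reports a cycle when following the arrows leads back to a unit that is still on the current path.

```python
def has_directed_cycle(graph):
    """True if following the arrows can bring you back to where you started."""
    WHITE, GREY, BLACK = 0, 1, 2      # unvisited, on the current DFS path, finished
    colour = {node: WHITE for node in graph}
    for targets in graph.values():
        for t in targets:
            colour.setdefault(t, WHITE)   # units that only receive connections

    def visit(node):
        colour[node] = GREY
        for nxt in graph.get(node, ()):
            if colour[nxt] == GREY:       # back to a unit on the current path: a cycle
                return True
            if colour[nxt] == WHITE and visit(nxt):
                return True
        colour[node] = BLACK
        return False

    return any(colour[n] == WHITE and visit(n) for n in list(colour))

# Hypothetical example graphs.
feed_forward = {"x1": ["h1", "h2"], "x2": ["h1", "h2"], "h1": ["y"], "h2": ["y"]}
recurrent = {"x": ["h1"], "h1": ["h2", "y"], "h2": ["h1"]}   # h1 -> h2 -> h1

print(has_directed_cycle(feed_forward), has_directed_cycle(recurrent))
```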

Recurrent neural networks for modeling sequences

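• Recurrent neural networks are a very natural way to model sequential data. All we need to do is add connections between the hidden units.

– They are equivalent to very deep nets with one hidden layer per time slice: the hidden units behave like a network that is very deep in time.

At each time step, the states of the hidden units determine the states of the hidden units at the next time step.

– Except that they use the same weights at every time slice and they get input at every time slice.

One way they differ from a feed-forward network is that the same weights are used at every time step. In the figure, the red arrows, along which the hidden units determine the hidden units at the next time step, represent a weight matrix that is the same at every time step.

• They have the ability to remember information in their hidden state for a long time.

They also receive input and produce output at every time step, and these connections also use the same weight matrices at every step. They can store information in their hidden units for a long time.

– But it's very hard to train them to use this potential.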
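A minimal sketch of a recurrent net unrolled in time, assuming a plain tanh recurrent layer and hypothetical weight names `W_xh` (input to hidden), `W_hh` (hidden to hidden, the red arrows) and `W_hy` (hidden to output). The same three matrices are reused at every time slice, so the unrolled net is a very deep net with one hidden layer per time step but shared weights. The one-unit example is illustrative only: with a strong self-connection, a single input pulse is remembered in the hidden state long after the input has gone; with no self-connection, it is forgotten at the next step.

```python
import math

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def add(a, b):
    return [x + y for x, y in zip(a, b)]

def rnn_forward(inputs, W_xh, W_hh, W_hy, h0):
    """Run a simple tanh RNN over a sequence; the SAME weights are used at every step."""
    h = h0
    hidden_states, outputs = [], []
    for x in inputs:                       # one layer of the unrolled net per time slice
        h = [math.tanh(z) for z in add(matvec(W_xh, x), matvec(W_hh, h))]
        hidden_states.append(h)
        outputs.append(matvec(W_hy, h))    # an output at every time slice as well
    return hidden_states, outputs

# Illustrative one-unit example: a pulse at t = 0, then silence.
pulse = [[1.0]] + [[0.0]] * 5
latched, latched_out = rnn_forward(pulse, W_xh=[[2.0]], W_hh=[[5.0]], W_hy=[[1.0]], h0=[0.0])
forgotten, _ = rnn_forward(pulse, W_xh=[[2.0]], W_hh=[[0.0]], W_hy=[[1.0]], h0=[0.0])
print(latched[-1], forgotten[-1])
```

Here the weights are set by hand; the hard part mentioned above is getting training to find weights like these.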

An example of what recurrent neural nets can now do

Ilya Sutskever (2011) trained a special type of recurrent neural net to predict the next character in a sequence. Ilya trained it on lots and lots of strings from English Wikipedia: it sees English characters and tries to predict the next English character. He actually used 86 different characters to allow for punctuation, digits, capital letters and so on. After you have trained it, one way of seeing how well it does is to check whether it assigns high probability to the next character that actually occurs. Another way is to get it to generate text. You give it a string of characters and get it to predict probabilities for the next character. Then you pick the next character from that probability distribution. It's no use picking the most likely character: if you do that, after a while it starts saying "the United States of the United States of the United States of the United States of the United States". That tells you something about Wikipedia.
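A minimal sketch of the generation procedure just described, contrasting greedy decoding with sampling. The "model" here is a hypothetical toy table of next-character probabilities conditioned only on the previous character; a real character-level RNN conditions on its whole hidden state, so this only illustrates the decoding step. Because this toy model is deterministic and has finitely many states, always taking the most likely character must eventually repeat itself, much like the "United States" loop; sampling from the distribution avoids getting stuck in one loop.

```python
import random

# Hypothetical toy next-character distributions, keyed by the previous character.
NEXT = {
    "t": {"h": 0.6, "o": 0.4},
    "h": {"e": 0.7, "a": 0.3},
    "e": {" ": 0.6, "n": 0.4},
    " ": {"t": 0.55, "a": 0.45},
    "a": {"t": 0.6, "n": 0.4},
    "n": {" ": 0.6, "d": 0.4},
    "o": {" ": 1.0},
    "d": {" ": 1.0},
}

def greedy(start, length):
    """Always append the single most likely next character."""
    text = start
    for _ in range(length):
        dist = NEXT[text[-1]]
        text += max(dist, key=dist.get)
    return text

def sample(start, length, rng):
    """Append a character drawn from the predicted distribution at each step."""
    text = start
    for _ in range(length):
        chars, probs = zip(*NEXT[text[-1]].items())
        text += rng.choices(chars, weights=probs)[0]
    return text

print(greedy("t", 20))                     # falls into "the the the ..."
print(sample("t", 20, random.Random(0)))   # varies with the seed
```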

Some text generated one character at a time by Ilya Sutskever’s recurrent neural network

In 1974 Northern Denver had been overshadowed by CNL, and several Irish intelligence agencies in the Mediterranean region. However, on the Victoria, Kings Hebrew stated that Charles decided to escape during an alliance. The mansion house was completed in 1882, the second in its bridge are omitted, while closing is the proton reticulum composed below it aims, such that it is the blurring of appearing on any well-paid type of box printer.

Symmetrically connected networks

• These are like recurrent networks, but the connections between units are symmetrical (they have the same weight in both directions).

– John Hopfield (and others) realized that symmetric networks are much easier to analyze than recurrent networks.

– They are also more restricted in what they can do, because they obey an energy function.

– For example, they cannot model cycles.

• Symmetrically connected nets without hidden units are called “Hopfield nets”
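The energy function can be made concrete for a Hopfield net. A minimal sketch, assuming binary ±1 units, no biases, and one pattern stored with the Hebbian rule w_ij = s_i s_j (symmetric, zero diagonal); these choices and all names are illustrative. With energy E(s) = -Σ_{i<j} w_ij s_i s_j, updating one unit at a time to the sign of its total input can never increase E, so the net settles into a minimum instead of cycling. Here it cleans up a corrupted version of the stored pattern.

```python
import random

def hebbian_weights(pattern):
    """Store one +/-1 pattern: w_ij = s_i * s_j for i != j (symmetric, zero diagonal)."""
    n = len(pattern)
    return [[0 if i == j else pattern[i] * pattern[j] for j in range(n)] for i in range(n)]

def energy(w, s):
    """E(s) = -sum over pairs i < j of w_ij * s_i * s_j (no biases in this sketch)."""
    n = len(s)
    return -sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))

def settle(w, s, rng, sweeps=5):
    """Asynchronous updates: one unit at a time takes the sign of its total input."""
    s = list(s)
    energies = [energy(w, s)]
    for _ in range(sweeps):
        for i in rng.sample(range(len(s)), len(s)):   # random update order
            total = sum(w[i][j] * s[j] for j in range(len(s)))
            if total != 0:                            # zero input: leave the unit alone
                s[i] = 1 if total > 0 else -1
            energies.append(energy(w, s))
    return s, energies

stored = [1, -1, 1, 1, -1, -1]
w = hebbian_weights(stored)
noisy = [1, 1, 1, 1, -1, 1]                # two units flipped
recalled, energies = settle(w, noisy, random.Random(0))
print(recalled, energies[0], energies[-1])
```

The guarantee relies on the weights being symmetric; with asymmetric weights, as in a general recurrent net, there is no such energy and the state can cycle.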

Symmetrically connected networks with hidden units

• These are called “Boltzmann machines”.

– They are much more powerful models than Hopfield nets.

– They are less powerful than recurrent neural networks.

– They have a beautifully simple learning algorithm.

• We will cover Boltzmann machines towards the end of the course
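As background, and not derived in this lecture, the standard Boltzmann machine learning rule shows why it is called simple. Each stochastic binary unit s_i turns on with a probability given by the logistic function of its bias b_i plus its weighted input. The weight on a symmetric connection changes in proportion to how much more often the two units are on together when the visible units are clamped to data than when the network runs freely:

```latex
p(s_i = 1) = \frac{1}{1 + \exp\!\left(-b_i - \sum_j s_j w_{ij}\right)}

\Delta w_{ij} \propto \langle s_i s_j \rangle_{\text{data}} - \langle s_i s_j \rangle_{\text{model}}
```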