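When working with sequence datasets, you sometimes run into samples of different lengths. Because the sizes do not match, PyTorch's DataLoader cannot load them with its default behavior, which stacks the samples directly along the batch dimension.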
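A minimal sketch of the problem, assuming a toy dataset of three 1-D tensors (the data is made up for illustration): with the default collate function, fetching a batch fails because the samples cannot be stacked.

```python
import torch
from torch.utils.data import DataLoader

# Three 1-D "sequences" of different lengths; a plain list works as a map-style dataset
sequences = [torch.ones(2), torch.ones(3), torch.ones(5)]

loader = DataLoader(sequences, batch_size=3)  # uses the default collate_fn

try:
    next(iter(loader))
    stacked_ok = True
except RuntimeError as err:
    # the default collate calls torch.stack, which requires tensors of equal size
    stacked_ok = False
    print("default collate failed:", err)
```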

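One way to handle such a dataset is to record each sample's length in advance and pad every sample to the length of the longest sample in the dataset. All samples then have the same length and can be loaded directly. The problem is that, for example when modeling with an RNN, the padded zeros still affect the model (see this article).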
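The pad-everything-upfront approach can be sketched as follows, assuming a small hypothetical list of integer sequences. pad_sequence pads with 0 up to the longest sequence, and the recorded lengths travel alongside the padded data in a TensorDataset, so the default collate function works.

```python
import torch
from torch.nn.utils.rnn import pad_sequence
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical toy data: variable-length integer sequences
raw = [torch.tensor([1, 2]), torch.tensor([3, 4, 5]), torch.tensor([6])]

# Record every original length before padding
lengths = torch.tensor([len(s) for s in raw])
# Pad all samples with 0 up to the longest sequence in the whole dataset
padded = pad_sequence(raw, batch_first=True)  # shape (3, 3)

dataset = TensorDataset(padded, lengths)
loader = DataLoader(dataset, batch_size=2)  # the default collate now works
x, l = next(iter(loader))
```

The lengths are kept so that the padding can be ignored later, for example by packing the batch before it enters an RNN.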

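PyTorch addresses this with torch.nn.utils.rnn.pack_padded_sequence() and torch.nn.utils.rnn.pad_packed_sequence(). pack_padded_sequence() takes the padded batch together with the original length of each sequence and packs it. When an RNN processes the packed input, it knows from the original lengths where each sequence ends, so the padded zeros do not affect the computation. Afterwards, pad_packed_sequence() converts the output back into the padded format used before packing.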
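A minimal sketch of this round trip, assuming a toy batch of three random sequences and a single-layer nn.GRU (both chosen only for illustration):

```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import pack_padded_sequence, pad_packed_sequence

torch.manual_seed(0)

# Padded batch of shape (batch, max_len, features); entries past each length are padding
padded = torch.randn(3, 4, 2)
lengths = torch.tensor([4, 2, 3])  # original length of each sequence
for i, l in enumerate(lengths.tolist()):
    padded[i, l:] = 0

rnn = nn.GRU(input_size=2, hidden_size=5, batch_first=True)

# enforce_sorted=False lets the lengths appear in any order
packed = pack_padded_sequence(padded, lengths, batch_first=True, enforce_sorted=False)
packed_out, h_n = rnn(packed)  # the GRU stops at each sequence's real end

# Convert back to a padded tensor of shape (batch, max_len, hidden_size)
out, out_lengths = pad_packed_sequence(packed_out, batch_first=True)
```

Because of packing, h_n holds each sequence's hidden state at its last real step, and the padded positions of out are filled with zeros.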

collate_fn

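Another approach is to write a custom collate_fn and pass it to DataLoader, which controls how individual samples are merged into a batch. Some examples follow.

This article offers one approach to the problem.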

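Example 1

Background

Goal: use the Dataset and DataLoader classes of the PyTorch framework to assemble variable-length sequences into batches (mainly by padding sequences of different lengths to a common length), handling the variable-length data with a custom collate_fn.

Main idea

Dataset reads individual samples and defines how they are indexed.

DataLoader aggregates samples into batches.

Test environment: Python 3.6, PyTorch 1.2.0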

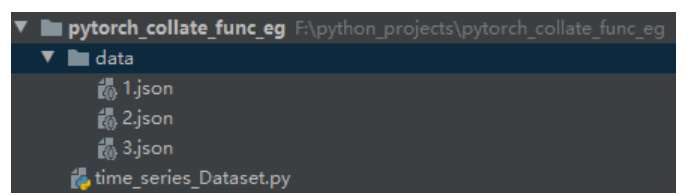

Data path:

The data directory holds the sample files.

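For example, a sample file such as 1.json stores the sequence under the key `feature` and its labels under the key `label`; these are the keys read by `__getitem__` below.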
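To run Example 1 without the original files, a few sample files can be generated. This is a sketch under assumptions: the directory `./data/` comes from the text, but the file contents are made up, and the layout (one JSON object with a `feature` list and a `label` list per file) is inferred from the `__getitem__` code and the `dataset[0]` output.

```python
import json
import os

# Hypothetical toy samples: variable-length feature and label lists
samples = {
    "1": {"feature": [3, 5, 7], "label": [0, 1, 0]},
    "2": {"feature": [17, 14, 16, 18, 14, 16], "label": [0, 0, 0, 0, 1, 0]},
    "3": {"feature": [9, 8], "label": [1, 0]},
}

data_root = "./data/"
os.makedirs(data_root, exist_ok=True)
for name, content in samples.items():
    # each sample is stored as <name>.json, e.g. ./data/1.json
    with open(os.path.join(data_root, name + ".json"), "w", encoding="utf-8") as f:
        json.dump(content, f)
```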

Define the dataset class for indexing the data

Dataset class definition:

```python
import os
import json
import numpy as np
import torch
from torch.utils.data import Dataset


class time_series_dataset(Dataset):
    def __init__(self, data_root):
        """
        :param data_root: path to the dataset directory
        """
        self.data_root = data_root
        file_list = os.listdir(data_root)
        file_prefix = []
        for file in file_list:
            if file.endswith('.json'):
                file_prefix.append(file.split('.')[0])
        file_prefix = list(set(file_prefix))
        self.data = file_prefix

    def __len__(self):
        return len(self.data)

    def __getitem__(self, index):
        prefix = self.data[index]
        # os.path.join works whether or not data_root ends with a slash
        with open(os.path.join(self.data_root, prefix + '.json'), 'r', encoding='utf-8') as f:
            data_dic = json.load(f)
        feature = torch.from_numpy(np.array(data_dic['feature']))
        length = len(data_dic['feature'])  # actual (unpadded) sequence length
        label = torch.from_numpy(np.array(data_dic['label']))
        sample = {'feature': feature, 'label': label, 'id': prefix, 'length': length}
        return sample
```

Here the dataset wraps each sample's features, label, id, and length in a dictionary and returns it.

Instantiating the dataset:

```python
dataset = time_series_dataset("./data/")  # "./data/" is the directory holding the dataset files
```

The actual data returned by this dataset looks like this.

For example, dataset[0]:

```python
{'feature': tensor([17, 14, 16, 18, 14, 16], dtype=torch.int32),
 'label': tensor([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0,
        0], dtype=torch.int32),
 'id': '2',
 'length': 6}
```

Define the collate_fn function and pass it to the DataLoader class

Custom collate_fn code:

```python
import torch
from torch.nn.utils.rnn import pad_sequence


def collate_func(batch_dic):
    batch_len = len(batch_dic)  # batch size
    max_seq_length = max([dic['length'] for dic in batch_dic])  # length of the longest sample in the batch
    mask_batch = torch.zeros((batch_len, max_seq_length))  # mask
    fea_batch = []
    label_batch = []
    id_batch = []
    for i in range(len(batch_dic)):  # extract feature, label, id, and length from each sample
        dic = batch_dic[i]
        fea_batch.append(dic['feature'])
        label_batch.append(dic['label'])
        id_batch.append(dic['id'])
        mask_batch[i, :dic['length']] = 1  # mask
    res = {}
    res['feature'] = pad_sequence(fea_batch, batch_first=True)  # collect the results in the dict res
    res['label'] = pad_sequence(label_batch, batch_first=True)
    res['id'] = id_batch
    res['mask'] = mask_batch
    return res
```

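PyTorch's DataLoader gathers batch_size samples from the Dataset into a list and passes that list to collate_fn, so the input of collate_func is a list of sample dicts.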
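A small sketch that makes this visible, assuming a hypothetical in-memory list of sample dicts shaped like those returned by time_series_dataset; inspect_collate is an illustrative name, and it simply records what it receives.

```python
import torch
from torch.utils.data import DataLoader

# Hypothetical in-memory samples shaped like the dicts from time_series_dataset
samples = [
    {"feature": torch.tensor([1, 2, 3]), "id": "a", "length": 3},
    {"feature": torch.tensor([4]), "id": "b", "length": 1},
]

seen = []


def inspect_collate(batch):
    # batch is a plain Python list with one entry per sample
    seen.append((type(batch).__name__, len(batch)))
    return batch


loader = DataLoader(samples, batch_size=2, collate_fn=inspect_collate)
first = next(iter(loader))
```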

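Note: the mask field records the actual length of each variable-length sequence: padded positions are 0 and positions belonging to the real sequence are 1. Define the format and fields of the returned data according to your own needs.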
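The same 0/1 mask can also be built without a Python loop by broadcasting a position index against the lengths. This is an alternative sketch with hypothetical lengths, not the article's code; it produces a float tensor of shape (batch, max_seq_length), like mask_batch in collate_func.

```python
import torch

# Hypothetical lengths of the samples in one batch
lengths = torch.tensor([3, 1, 2])
max_seq_length = int(lengths.max())

# Position j of sample i is real (1) if j < lengths[i], otherwise padding (0)
mask_batch = (torch.arange(max_seq_length)[None, :] < lengths[:, None]).float()
```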

This loop could arguably be written more concisely with map:

```python
for i in range(len(batch_dic)):
    dic = batch_dic[i]
    fea_batch.append(dic['feature'])
    label_batch.append(dic['label'])
    id_batch.append(dic['id'])
    mask_batch[i, :dic['length']] = 1
```

```python
fea_batch = list(map(lambda x: x['feature'], batch_dic))
label_batch = list(map(lambda x: x['label'], batch_dic))
id_batch = list(map(lambda x: x['id'], batch_dic))
```

Note that the map version does not fill mask_batch, so the mask still has to be built separately.
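A list comprehension is arguably more idiomatic than map with lambda. Below is a sketch of collate_func rewritten that way; it keeps the original fields and the original row-by-row mask filling, since the map version does not build the mask.

```python
import torch
from torch.nn.utils.rnn import pad_sequence


def collate_func(batch_dic):
    # one list comprehension per field instead of list(map(lambda ...))
    fea_batch = [dic['feature'] for dic in batch_dic]
    label_batch = [dic['label'] for dic in batch_dic]
    id_batch = [dic['id'] for dic in batch_dic]
    lengths = [dic['length'] for dic in batch_dic]

    # the mask is still filled row by row: 1 for real positions, 0 for padding
    mask_batch = torch.zeros((len(batch_dic), max(lengths)))
    for i, length in enumerate(lengths):
        mask_batch[i, :length] = 1

    return {
        'feature': pad_sequence(fea_batch, batch_first=True),
        'label': pad_sequence(label_batch, batch_first=True),
        'id': id_batch,
        'mask': mask_batch,
    }
```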

Instantiating the DataLoader:

```python
from torch.utils.data import DataLoader

train_loader = DataLoader(dataset, batch_size=3, num_workers=1, shuffle=True, collate_fn=collate_func)
```

Complete code

```python
import os
import json
import numpy as np
import torch
from torch.utils.data import Dataset
from torch.utils.data import DataLoader
from torch.nn.utils.rnn import pad_sequence  # needed by collate_func
from tqdm import tqdm


class time_series_dataset(Dataset):
    def __init__(self, data_root):
        """
        :param data_root: path to the dataset directory
        """
        self.data_root = data_root
        file_list = os.listdir(data_root)
        file_prefix = []
        for file in file_list:
            if file.endswith('.json'):
                file_prefix.append(file.split('.')[0])
        file_prefix = list(set(file_prefix))
        self.data = file_prefix

    def __len__(self):
        return len(self.data)

    def __getitem__(self, index):
        prefix = self.data[index]
        with open(os.path.join(self.data_root, prefix + '.json'), 'r', encoding='utf-8') as f:
            data_dic = json.load(f)
        feature = torch.from_numpy(np.array(data_dic['feature']))
        length = len(data_dic['feature'])
        label = torch.from_numpy(np.array(data_dic['label']))
        sample = {'feature': feature, 'label': label, 'id': prefix, 'length': length}
        return sample


def collate_func(batch_dic):
    batch_len = len(batch_dic)
    max_seq_length = max([dic['length'] for dic in batch_dic])
    mask_batch = torch.zeros((batch_len, max_seq_length))
    fea_batch = []
    label_batch = []
    id_batch = []
    for i in range(len(batch_dic)):
        dic = batch_dic[i]
        fea_batch.append(dic['feature'])
        label_batch.append(dic['label'])
        id_batch.append(dic['id'])
        mask_batch[i, :dic['length']] = 1
    res = {}
    res['feature'] = pad_sequence(fea_batch, batch_first=True)
    res['label'] = pad_sequence(label_batch, batch_first=True)
    res['id'] = id_batch
    res['mask'] = mask_batch
    return res


if __name__ == "__main__":
    dataset = time_series_dataset("./data/")
    batch_size = 3
    train_loader = DataLoader(dataset, batch_size=batch_size, num_workers=4, shuffle=True, collate_fn=collate_func)
    # len(train_loader) is already the number of batches per epoch
    for batch_idx, batch in tqdm(enumerate(train_loader), total=len(train_loader)):
        inputs, labels, masks, ids = batch['feature'], batch['label'], batch['mask'], batch['id']
        break
```

The code above is only a reference, not a best practice.

Example 2

```python
from torch.nn.utils.rnn import pack_sequence
from torch.utils.data import DataLoader


def my_collate(batch):
    # batch contains a list of tuples of structure (sequence, target)
    data = [item[0] for item in batch]
    data = pack_sequence(data, enforce_sorted=False)
    targets = [item[1] for item in batch]
    return [data, targets]

# ...
# later in your code, when you define your DataLoader, use the custom collate function
loader = DataLoader(dataset,
                    batch_size,
                    shuffle,
                    collate_fn=my_collate,  # use custom collate function here
                    pin_memory=True)
```
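To see the packed batch in use, here is a runnable sketch under assumptions: the dataset is a hypothetical list of (sequence, target) tuples with 2-dimensional features, the model is an nn.GRU, and, unlike the snippet above, targets are converted to a tensor in the collate function.

```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import pack_sequence, pad_packed_sequence
from torch.utils.data import DataLoader


def my_collate(batch):
    # batch is a list of (sequence, target) tuples
    data = pack_sequence([item[0] for item in batch], enforce_sorted=False)
    targets = torch.tensor([item[1] for item in batch])
    return data, targets


# Hypothetical toy dataset: (sequence of shape (length, 2), integer target)
dataset = [(torch.randn(n, 2), n % 2) for n in (5, 2, 4, 3)]
loader = DataLoader(dataset, batch_size=4, shuffle=False, collate_fn=my_collate)

rnn = nn.GRU(input_size=2, hidden_size=8, batch_first=True)
packed, targets = next(iter(loader))
packed_out, h_n = rnn(packed)  # the GRU consumes the PackedSequence directly
out, lengths = pad_packed_sequence(packed_out, batch_first=True)
```

Because of enforce_sorted=False, out and h_n come back in the original batch order, so no manual sorting is needed.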

Example 3

Padding along an arbitrary dimension

I wrote a simple code that maybe someone here can re-use. I wanted to make something that pads a generic dim, and I don’t use an RNN of any type so PackedSequence was a bit of overkill for me. It’s simple, but it works for me.

```python
import torch


def pad_tensor(vec, pad, dim):
    """
    args:
        vec - tensor to pad
        pad - the size to pad to
        dim - dimension to pad

    return:
        a new tensor padded to 'pad' in dimension 'dim'
    """
    pad_size = list(vec.shape)
    pad_size[dim] = pad - vec.size(dim)
    # new_zeros keeps vec's dtype and device, so torch.cat does not mix types
    return torch.cat([vec, vec.new_zeros(pad_size)], dim=dim)


class PadCollate:
    """
    a variant of collate_fn that pads according to the longest sequence in
    a batch of sequences
    """

    def __init__(self, dim=0):
        """
        args:
            dim - the dimension to be padded (dimension of time in sequences)
        """
        self.dim = dim

    def pad_collate(self, batch):
        """
        args:
            batch - list of (tensor, label)

        return:
            xs - a tensor of all examples in 'batch' after padding
            ys - a LongTensor of all labels in batch
        """
        # find longest sequence
        max_len = max(x.shape[self.dim] for x, _ in batch)
        # pad according to max_len (Python 3 cannot unpack tuples in lambda arguments)
        batch = [(pad_tensor(x, pad=max_len, dim=self.dim), y) for x, y in batch]
        # stack all; lists are used because map() returns an iterator in Python 3
        xs = torch.stack([x for x, _ in batch], dim=0)
        ys = torch.tensor([y for _, y in batch], dtype=torch.long)
        return xs, ys

    def __call__(self, batch):
        return self.pad_collate(batch)
```

to be used with the data loader:

```python
train_loader = DataLoader(ds, ..., collate_fn=PadCollate(dim=0))
```
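As an alternative to building a zero tensor by hand, torch.nn.functional.pad can pad any dimension directly. The helper name pad_to is hypothetical; it is a sketch that pads with zeros at the end of the chosen dimension, like pad_tensor.

```python
import torch
import torch.nn.functional as F


def pad_to(vec, pad, dim):
    """Zero-pad `vec` at the end of dimension `dim` so that its size becomes `pad`."""
    dim = dim % vec.dim()  # accept negative dims
    # F.pad takes (left, right) pairs starting from the LAST dimension
    pad_spec = [0, 0] * (vec.dim() - dim - 1) + [0, pad - vec.size(dim)]
    return F.pad(vec, pad_spec)
```

For a tensor of shape (3, 4), padding dim 0 to 5 gives shape (5, 4) with two zero rows appended.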

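Example 4

If you are going to pack your padded sequences later, you can also sort each batch from the longest sequence to the shortest right away. This is quite practical, since packing usually follows. (pack_padded_sequence() requires this order by default; since PyTorch 1.1 it also accepts enforce_sorted=False, which sorts internally.)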

```python
import numpy as np
import torch


def sort_batch(batch, targets, lengths):
    """
    Sort a minibatch by the length of the sequences with the longest sequences first.
    Return the sorted batch, targets, and sequence lengths,
    so that the output can be used by pack_padded_sequence(...).
    """
    seq_lengths, perm_idx = lengths.sort(0, descending=True)
    seq_tensor = batch[perm_idx]
    target_tensor = targets[perm_idx]
    return seq_tensor, target_tensor, seq_lengths


def pad_and_sort_batch(DataLoaderBatch):
    """
    DataLoaderBatch should be a list of (sequence, target, length) tuples.
    Returns a padded tensor of sequences sorted from longest to shortest,
    together with the matching targets and lengths.
    """
    batch_size = len(DataLoaderBatch)
    batch_split = list(zip(*DataLoaderBatch))

    seqs, targs, lengths = batch_split[0], batch_split[1], batch_split[2]
    max_length = max(lengths)

    # zero-padded array of shape (batch_size, max_length)
    padded_seqs = np.zeros((batch_size, max_length))
    for i, l in enumerate(lengths):
        padded_seqs[i, 0:l] = seqs[i][0:l]

    return sort_batch(torch.tensor(padded_seqs), torch.tensor(targs).view(-1, 1), torch.tensor(lengths))
```

Assuming your Dataset looks like this:

```python
def __getitem__(self, idx):
    return self.sequences[idx], torch.tensor(self.targets[idx]), self.sequence_lengths[idx]
```

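Then pass pad_and_sort_batch to the DataLoader as its collate_fn:

```python
import torch.utils.data as Data  # the snippet refers to the module as Data

train_gen = Data.DataLoader(train_data, batch_size=128, shuffle=True, collate_fn=pad_and_sort_batch)
```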
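Sorting reorders the batch, so anything that is not sorted along with it (for example sample ids) no longer lines up with the model output. A sketch of undoing the permutation, assuming a hypothetical random batch and an nn.GRU: argsort of perm_idx gives the inverse permutation.

```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import pack_padded_sequence, pad_packed_sequence

# Hypothetical padded batch (batch, max_len, features) and its unsorted lengths
padded = torch.randn(3, 4, 2)
lengths = torch.tensor([2, 4, 3])

# Sort longest-first, as sort_batch does
seq_lengths, perm_idx = lengths.sort(0, descending=True)
sorted_batch = padded[perm_idx]

# With sorted lengths, the default enforce_sorted=True is satisfied
packed = pack_padded_sequence(sorted_batch, seq_lengths, batch_first=True)
rnn = nn.GRU(input_size=2, hidden_size=5, batch_first=True)
out, _ = pad_packed_sequence(rnn(packed)[0], batch_first=True)

# argsort of a permutation is its inverse: it puts rows back in the original order
unperm_idx = perm_idx.argsort()
out_original_order = out[unperm_idx]
```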

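Example 5

```python
import torch
from torch.nn.utils.rnn import pad_sequence


def collate_fn_padd(batch):
    '''
    Pads a batch of variable-length sequences.

    note: it converts things to tensors manually here since the ToTensor transform
    assumes it takes in images rather than arbitrary tensors.
    '''
    ## get sequence lengths
    lengths = torch.tensor([t.shape[0] for t in batch])
    ## pad: the result has shape (max_len, batch_size, ...) because batch_first=False by default
    batch = pad_sequence([torch.as_tensor(t, dtype=torch.float32) for t in batch])
    ## compute mask from the lengths: True at real positions, shape (max_len, batch_size);
    ## unlike (batch != 0), this stays correct when real data contains zeros
    mask = torch.arange(batch.shape[0])[:, None] < lengths[None, :]
    ## tensors stay on the CPU here; moving them to the GPU in the training loop
    ## avoids CUDA work inside DataLoader worker processes
    return batch, lengths, mask
```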

References:

https://blog.csdn.net/lrs1353281004/article/details/106129660

https://discuss.pytorch.org/t/dataloader-for-various-length-of-data/6418