pytorch中如何自适应调整学习率?

pytorch中torch.optim.lr_scheduler提供了一些基于epochs数目的自适应学习率调整方法。

torch.optim.lr_scheduler.ReduceLROnPlateau基于一些验证集误差测量实现动态学习率缩减。

1.torch.optim.lr_scheduler.LambdaLR(optimizer,lr_lambda,last_epoch=-1)

根据epoch,将每个参数组(parameter group)的学习速率设置为初始lr乘以一个给定的函数(epoch为自变量)。当last_epoch = -1时,将初始lr设置为lr。

lr_lambda (function or list) – A function which computes a multiplicative factor given an integer parameter epoch, or a list of such functions, one for each group in optimizer.param_groups.

函数或列表,一个给定epoch计算乘积系数的函数,或这样的函数的一个list,list中的每一个函数对应于optimizer.param_groups中的一个参数组。

last_epoch (int) – The index of last epoch. Default: -1.

Example:

>>> # Assuming optimizer has two groups. >>> lambda1 = lambda epoch: epoch // 30 >>> lambda2 = lambda epoch: 0.95 ** epoch >>> scheduler = LambdaLR(optimizer, lr_lambda=[lambda1, lambda2]) >>> for epoch in range(100): >>> scheduler.step() >>> train(...) >>> validate(...)

state_dict():以dict的形式返回 scheduler的状态。

It contains an entry for every variable in self.__dict__ which is not the optimizer.

The learning rate lambda functions will only be saved if they are callable objects and not if they are functions or lambdas.

load_state_dict(state_dict):加载scheduler的状态。

参数:state_dict(dict) - scheduler的状态。 应该是对state_dict()的调用返回的对象。

2.torch.optim.lr_scheduler.StepLR(optimizer, step_size, gamma=0.1, last_epoch=-1)

将每个参数组的学习速率设置为每隔step_size epochs,衰减gamma倍。 当last_epoch = -1时,将初始lr设置为lr。

step_size (int) – Period of learning rate decay. 衰减周期

gamma (float) – Multiplicative factor of learning rate decay. Default: 0.1. 衰减系数

last_epoch (int) – The index of last epoch. Default: -1.

Example

>>> # Assuming optimizer uses lr = 0.05 for all groups >>> # lr = 0.05 if epoch < 30 >>> # lr = 0.005 if 30 <= epoch < 60 >>> # lr = 0.0005 if 60 <= epoch < 90 >>> # ... >>> scheduler = StepLR(optimizer, step_size=30, gamma=0.1) >>> for epoch in range(100): >>> scheduler.step() >>> train(...) >>> validate(...)

3.torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones, gamma=0.1, last_epoch=-1)

一旦epochs数达到其中一个里程碑,将每个参数组的学习速率衰减gamma倍。 当last_epoch = -1时,将初始lr设置为lr。

- milestones (list) – List of epoch indices. Must be increasing. 衰减点,必须递增。

- gamma (float) – Multiplicative factor of learning rate decay. Default: 0.1. 衰减系数。

- last_epoch (int) – The index of last epoch. Default: -1.

Example

>>> # Assuming optimizer uses lr = 0.05 for all groups >>> # lr = 0.05 if epoch < 30 >>> # lr = 0.005 if 30 <= epoch < 80 >>> # lr = 0.0005 if epoch >= 80 >>> scheduler = MultiStepLR(optimizer, milestones=[30,80], gamma=0.1) >>> for epoch in range(100): >>> scheduler.step() >>> train(...) >>> validate(...)

4. torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma, last_epoch=-1)

将每个参数组的学习率设置为每个epoch衰减gamma倍。 当last_epoch = -1时,将初始lr设置为lr。

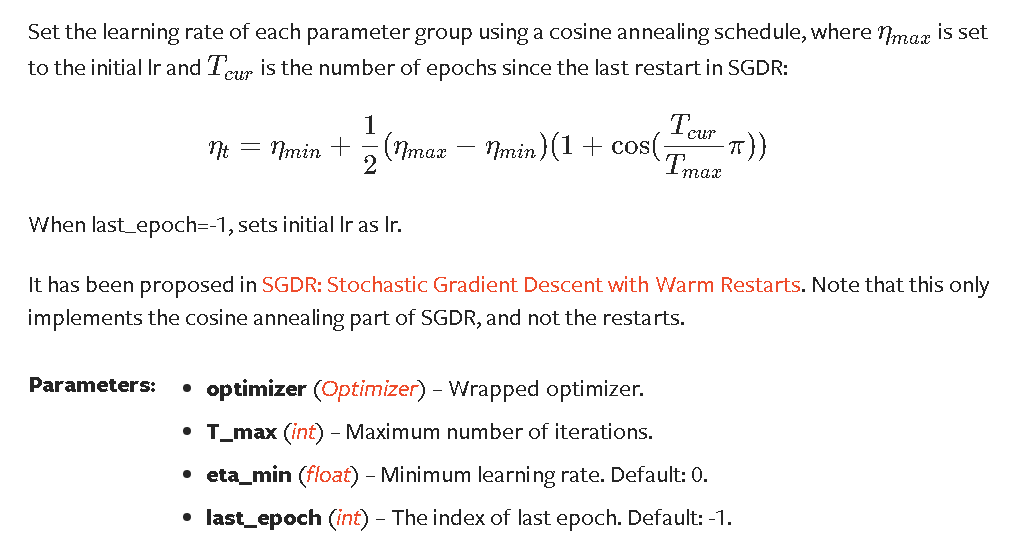

5. torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max, eta_min=0, last_epoch=-1)

采用余弦退火机制设置每个参数组的学习率,

余弦退火与热重启

6. torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode='min', factor=0.1, patience=10, verbose=False, threshold=0.0001, threshold_mode='rel', cooldown=0, min_lr=0, eps=1e-08)

当指标停止改进时降低学习率。一旦学习停滞,模型通常会将学习率降低2-10倍。该调度程序读取度量指标,如果对“patience”数量的epochs没有看到改进,则学习速率降低。

- mode (str) – One of min, max. In min mode, lr will be reduced when the quantity monitored has stopped decreasing; in max mode it will be reduced when the quantity monitored has stopped increasing. Default: ‘min’. 指定指标是增长还是减小,默认减小min。

- factor (float) – Factor by which the learning rate will be reduced. new_lr = lr * factor. Default: 0.1. 衰减系数

- patience (int) – Number of epochs with no improvement after which learning rate will be reduced. For example, if patience = 2, then we will ignore the first 2 epochs with no improvement, and will only decrease the LR after the 3rd epoch if the loss still hasn’t improved then. Default: 10. 耐心值

- verbose (bool) – If

True, prints a message to stdout for each update. Default:False. - threshold (float) – Threshold for measuring the new optimum, to only focus on significant changes. Default: 1e-4.

- threshold_mode (str) – One of rel, abs. In rel mode, dynamic_threshold = best * ( 1 + threshold ) in ‘max’ mode or best * ( 1 - threshold ) in min mode. In abs mode, dynamic_threshold = best + threshold in max mode or best - threshold in min mode. Default: ‘rel’.

- cooldown (int) – Number of epochs to wait before resuming normal operation after lr has been reduced. Default: 0. 机制冷却时间

- min_lr (float or list) – A scalar or a list of scalars. A lower bound on the learning rate of all param groups or each group respectively. Default: 0. 最小学习率

- eps (float) – Minimal decay applied to lr. If the difference between new and old lr is smaller than eps, the update is ignored. Default: 1e-8. 最小衰减量,如果衰减前后lr小于eps,则忽略衰减。