urlopen: fetching a web page

Fetching a page

read(): reading the content

read(), readline(), readlines(), fileno(), close(): these methods are used exactly as they are on a file object

from urllib import request

ret = request.urlopen("http://www.baidu.com")

print(ret.read()) # read() returns the whole page body as bytes
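The file-like methods listed above can be tried without a network connection. The sketch below is only an illustration: it assumes a `data:` URL as a stand-in for a real page (urlopen handles that scheme locally), and the three text lines are made up.

```python
from urllib import request

# A data: URL stands in for a real page so the sketch runs offline.
url = "data:text/plain;charset=utf-8,first%20line%0Asecond%20line%0Athird%20line"

with request.urlopen(url) as ret:   # the with block closes the response
    first = ret.readline()          # one line, returned as bytes
    rest = ret.readlines()          # the remaining lines as a list of bytes

print(first)
print(rest)
```

Every value comes back as bytes, so call decode() before treating the content as text.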

urlretrieve: writing to a file

Writes the page you are fetching directly to a local file

import urllib.request

ret = urllib.request.urlretrieve("URL to fetch", "local path to save to")
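As a rough illustration of what urlretrieve returns, the sketch below copies a page to a second file and reads it back. It assumes a temporary local file served through a `file://` URL in place of a real website; the file names `page.html` and `copy.html` are hypothetical.

```python
import tempfile
from pathlib import Path
from urllib import request

with tempfile.TemporaryDirectory() as tmp:
    # A local file plays the role of the remote page (assumption for the demo).
    source = Path(tmp) / "page.html"
    source.write_text("<h1>hello</h1>", encoding="utf-8")
    target = Path(tmp) / "copy.html"

    # urlretrieve returns a (saved path, headers) tuple.
    saved_path, headers = request.urlretrieve(source.as_uri(), str(target))
    saved_text = Path(saved_path).read_text(encoding="utf-8")

print(saved_text)
```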

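urlcleanup: clearing the cache

Removes the temporary files left behind by request.urlretrieve

print(urllib.request.urlcleanup()) # prints None; the call only cleans up and returns nothing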

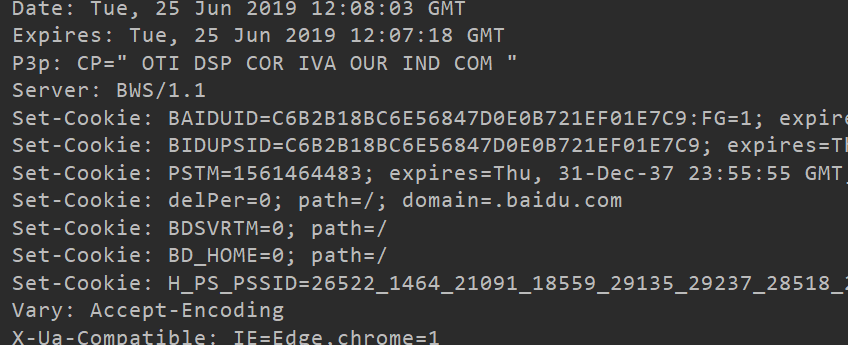
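The effect of urlcleanup is easiest to see when urlretrieve is called without a save path, because it then stores the download in a temporary file. The following is a minimal sketch under that assumption, again using a `data:` URL as a stand-in for a real page.

```python
import os
from urllib import request

# Without a second argument, urlretrieve saves to a temporary file.
temp_path, _headers = request.urlretrieve("data:text/plain,cached")
existed_before = os.path.exists(temp_path)

request.urlcleanup()                       # deletes those temporary files
exists_after = os.path.exists(temp_path)

print(existed_before, exists_after)
```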

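info: showing the response headers

info() returns an http.client.HTTPMessage object (httplib.HTTPMessage in Python 2) that holds the header information returned by the remote server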

ret = request.urlopen("http://www.baidu.com")

print(ret.info())

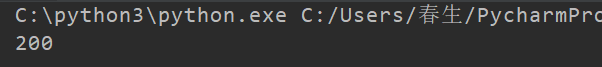
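Individual headers can be read from the object that info() returns. This sketch assumes a `data:` URL so that it runs offline; for that scheme the headers are a plain email message object rather than http.client.HTTPMessage, but the lookup methods used here are shared by both.

```python
from urllib import request

# Stand-in page with a declared content type and charset.
url = "data:text/html;charset=utf-8,%3Cp%3Ehello%3C%2Fp%3E"

with request.urlopen(url) as ret:
    headers = ret.info()                      # same object as ret.headers
    content_type = headers["Content-Type"]    # lookup ignores letter case
    media_type = headers.get_content_type()   # type without parameters
    charset = headers.get_content_charset()   # charset parameter, if any

print(content_type, media_type, charset)
```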

getcode: getting the status code

ret = request.urlopen("http://www.baidu.com")

print(ret.getcode()) # 200 means the request succeeded

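geturl: getting the URL that was actually fetched

ret = request.urlopen("http://www.baidu.com")

print(ret.geturl()) # geturl is a method and must be called; it reports the final URL after any redirects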
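getcode() and geturl() are more informative when the server redirects or returns an error. The sketch below is one possible way to observe that, assuming a small local test server: `DemoHandler` and `probe` are hypothetical names, and the opener is replaced only so that proxy settings cannot interfere with the local connection.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib import error, request


class DemoHandler(BaseHTTPRequestHandler):
    """Hypothetical test server: / is OK, /old redirects to /, the rest is 404."""

    def do_GET(self):
        if self.path == "/":
            self.send_response(200)
        elif self.path == "/old":
            self.send_response(302)
            self.send_header("Location", "/")
        else:
            self.send_response(404)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, *args):
        pass  # keep the demo output quiet


def probe(url, timeout=5):
    """Return (status code, final URL); HTTP errors are reported, not raised."""
    try:
        with request.urlopen(url, timeout=timeout) as ret:
            return ret.getcode(), ret.geturl()
    except error.HTTPError as exc:
        # urlopen raises HTTPError for 4xx and 5xx answers.
        return exc.code, url


# Ignore proxy settings so the local server is contacted directly.
request.install_opener(request.build_opener(request.ProxyHandler({})))

server = HTTPServer(("127.0.0.1", 0), DemoHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
base = "http://127.0.0.1:%d" % server.server_port

ok = probe(base + "/")
moved = probe(base + "/old")        # redirected, so geturl() differs from the input
missing = probe(base + "/missing")

server.shutdown()
server.server_close()
print(ok, moved, missing)
```

A status other than 2xx or a followed redirect never reaches getcode() directly, which is why the error branch reads the code from the exception.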

Setting a timeout

timeout is measured in seconds

ret = request.urlopen("http://www.baidu.com", timeout=5) # timeout must be a number; 5 seconds is only an example value

print(ret.read())
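When the timeout expires, urlopen raises an exception rather than returning, so a crawler usually wraps the call. The sketch below shows one way to do that; `fetch_with_timeout` is a hypothetical helper, and a local socket that accepts connections but never answers is assumed as a stand-in for a slow server.

```python
import socket
from urllib import error, request


def fetch_with_timeout(url, seconds):
    """Return the body as bytes, or None if the request fails or times out."""
    try:
        with request.urlopen(url, timeout=seconds) as ret:
            return ret.read()
    except (error.URLError, socket.timeout):
        # Connection problems arrive as URLError; a stalled response
        # can surface as socket.timeout directly.
        return None


# A listening socket that never replies imitates an unresponsive server.
silent = socket.socket()
silent.bind(("127.0.0.1", 0))
silent.listen(1)
port = silent.getsockname()[1]

result = fetch_with_timeout("http://127.0.0.1:%d/" % port, 0.5)
silent.close()
print(result)
```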

Simulating a GET request

from urllib import request

name = "python" # the keyword you want to search for

url = "http://www.baidu.com/s?wd=%s"%name

ret_url = request.Request(url) # build the request object

ret = request.urlopen(ret_url) # send the request

print(ret.read().decode("utf-8"))

print(ret.geturl())
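Instead of formatting the query string by hand, it can be built with urllib.parse.urlencode, which also escapes special characters. This is a small sketch that only constructs the request and sends nothing; `build_search_url` is a hypothetical helper name.

```python
from urllib import parse, request


def build_search_url(keyword):
    """Return the search URL for keyword (hypothetical helper)."""
    query = parse.urlencode({"wd": keyword})  # escapes spaces and non-ASCII text
    return "http://www.baidu.com/s?" + query


url = build_search_url("python")
spaced = build_search_url("hello world")

req = request.Request(url)     # no data argument, so this will be sent as GET
method = req.get_method()

print(url)
print(spaced)
print(method)
```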

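Handling Chinese characters: request.quote

from urllib import request

name = "春生" # the keyword you want to search for

url = "http://www.baidu.com/s?wd=%s"%name # the URL now contains non-ASCII characters

ret_url = request.Request(url) # build the request object

ret = request.urlopen(ret_url) # send the request; fails with UnicodeEncodeError because the keyword is not encoded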

print(ret.read().decode("utf-8"))

print(ret.geturl())

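The fix: request.quote

name = "春生" # the keyword you want to search for

name = request.quote(name) # percent-encode the non-ASCII characters (quote is defined in urllib.parse and is also reachable as request.quote)

url = "http://www.baidu.com/s?wd=%s"%name

ret_url = request.Request(url) # build the request object

ret = request.urlopen(ret_url) # send the request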

print(ret.read().decode("utf-8"))
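To see what quote actually produces, the sketch below encodes the same keyword, decodes it again, and shows the effect of the `safe` parameter. It uses urllib.parse directly and performs no network access; the `a/b c` string is an invented example.

```python
from urllib import parse

keyword = "春生"
encoded = parse.quote(keyword)            # UTF-8 bytes written as %XX escapes
decoded = parse.unquote(encoded)          # reverses the encoding

url = "http://www.baidu.com/s?wd=%s" % encoded
ascii_bytes = url.encode("ascii")         # succeeds now that the URL is pure ASCII

# By default "/" is left untouched; pass safe="" to escape it as well.
path_default = parse.quote("a/b c")
path_strict = parse.quote("a/b c", safe="")

print(encoded, decoded)
print(path_default, path_strict)
```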

Simulating a POST request

from urllib import request, parse

url = "https://www.iqianyue.com/mypost"

mydata = parse.urlencode({

"name":"哈哈",

"pass":"123"

}).encode("utf-8")

ret_url = request.Request(url, mydata) # build the request; passing data makes it a POST

ret = request.urlopen(ret_url) # send the request

print(ret.geturl()) # print the URL that was fetched

print(ret.read().decode("utf-8"))

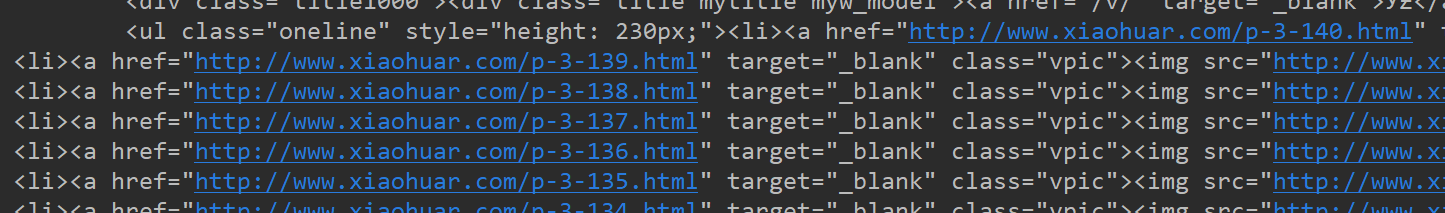
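The POST request can be inspected before it is sent, which helps when a form submission does not behave as expected. The sketch below only builds the request for the same form fields and sends nothing.

```python
from urllib import parse, request

form = {"name": "哈哈", "pass": "123"}
body = parse.urlencode(form).encode("utf-8")   # form fields as URL-encoded bytes

req = request.Request("https://www.iqianyue.com/mypost", data=body)
method = req.get_method()                      # POST, because data is set

# Decoding the body again shows what the server would receive.
received = parse.parse_qs(body.decode("ascii"))

print(method)
print(body)
print(received)
```

The data argument must be bytes, which is why the encoded string is passed through encode() first.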

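Simulating a browser: sending request headers

request.Request(url, headers=headers) attaches request headers so that the request looks like it comes from a browser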

from urllib import request, parse

url = "http://www.xiaohuar.com/"

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36"

}

ret_url = request.Request(url, headers=headers) # build the request with the browser headers

ret = request.urlopen(ret_url)

print(ret.geturl())

print(ret.read().decode("gbk"))

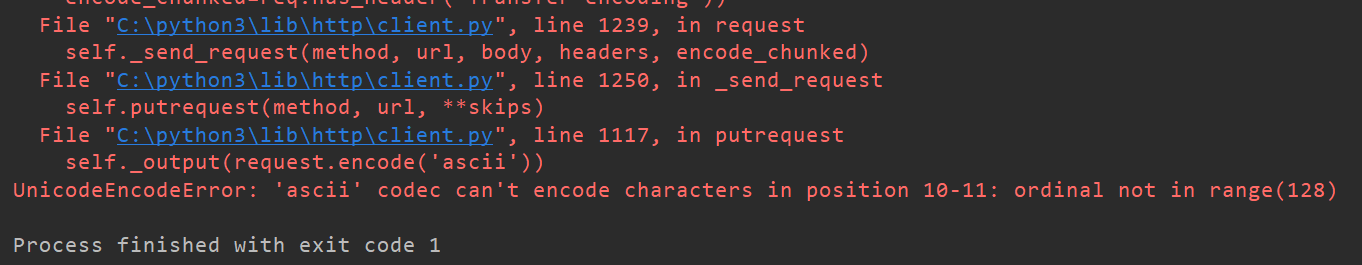

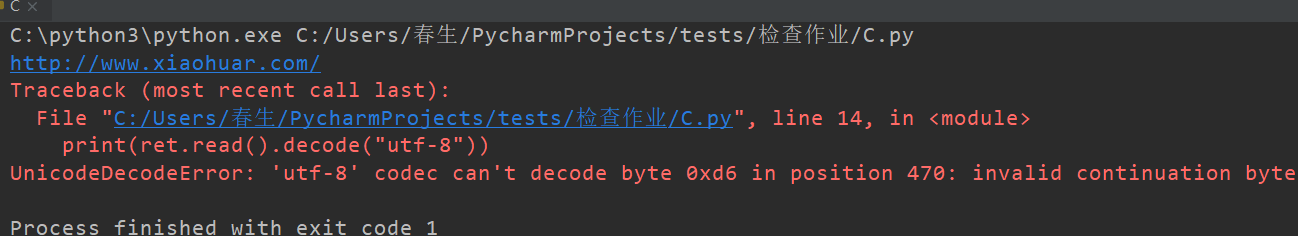
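Headers can also be added one at a time and checked before the request goes out. In this sketch the shortened User-Agent string and the Referer value are assumptions chosen for illustration, and nothing is sent.

```python
from urllib import request

headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"}
req = request.Request("http://www.xiaohuar.com/", headers=headers)

# Extra headers can be attached after construction (hypothetical value).
req.add_header("Referer", "http://www.xiaohuar.com/")

# Request stores header names capitalized, e.g. "User-agent".
ua = req.get_header("User-agent")
sent = dict(req.header_items())

print(ua)
print(sent)
```

Because the names are stored in capitalized form, looking them up with get_header needs that same spelling.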

Encoding errors and how to fix them

The problem

from urllib import request, parse

url = "http://www.xiaohuar.com/"

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36"

}

ret_url = request.Request(url, headers=headers) # build the request with the browser headers

ret = request.urlopen(ret_url)

print(ret.geturl())

print(ret.read().decode("utf-8")) # raises UnicodeDecodeError when the page is not valid UTF-8

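The fix

decode("utf-8","ignore")

Passing "ignore" as the second argument makes decode() skip the bytes it cannot decode instead of raising an error. The skipped characters are lost, so when the real encoding is known (the earlier example decodes this page as "gbk") it is better to decode with that encoding.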
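The difference between the error handlers can be checked on a few bytes without fetching anything. The sketch below assumes a short GBK-encoded sample in place of a downloaded page.

```python
raw = "春生".encode("gbk")          # bytes as a GBK page would deliver them

try:
    raw.decode("utf-8")             # strict mode is the default
    strict_failed = False
except UnicodeDecodeError:
    strict_failed = True

ignored = raw.decode("utf-8", "ignore")     # undecodable bytes are dropped
replaced = raw.decode("utf-8", "replace")   # undecodable bytes become U+FFFD
correct = raw.decode("gbk")                 # the matching codec keeps the text

print(strict_failed, repr(ignored), repr(replaced), correct)
```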

from urllib import request, parse

url = "http://www.xiaohuar.com/"

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36"

}

ret_url = request.Request(url, headers=headers) # build the request with the browser headers

ret = request.urlopen(ret_url)

print(ret.geturl())

print(ret.read().decode("utf-8","ignore"))
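An alternative to guessing the codec is to use the charset the server declares in its Content-Type header, falling back to a default when none is given. This is a minimal sketch: `read_text` is a hypothetical helper, and a `data:` URL carrying GBK bytes is assumed in place of the real site.

```python
from urllib import parse, request


def read_text(ret, fallback="utf-8"):
    """Decode a response using its declared charset (hypothetical helper)."""
    charset = ret.headers.get_content_charset() or fallback
    # "replace" keeps going on bad bytes but marks them visibly.
    return ret.read().decode(charset, errors="replace")


# Stand-in page: GBK-encoded text with a matching charset declaration.
payload = parse.quote("春生".encode("gbk"))
url = "data:text/html;charset=gbk," + payload

with request.urlopen(url) as ret:
    text = read_text(ret)

print(text)
```

Some pages declare no charset, or declare the wrong one, so the fallback and the "replace" handler remain a safety net rather than a guarantee.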