Installation with kubeadm. Versions used: Docker 19.03.8, Kubernetes 1.17.3, KubeSphere 3.0

1. Install Docker

https://docs.docker.com/engine/install/centos

Docker and Kubernetes version compatibility: https://github.com/kubernetes/kubernetes/releases

    # Remove old versions
    sudo yum remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-engine
    # Configure the yum repo; the Aliyun mirror also works: http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    sudo yum install -y yum-utils
    sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
    # Install
    sudo yum install -y docker-ce-19.03.8 docker-ce-cli-19.03.8 containerd.io
    # Start now and enable at boot
    sudo systemctl start docker
    sudo systemctl enable docker

Configure registry mirrors

    sudo mkdir -p /etc/docker
    sudo tee /etc/docker/daemon.json << EOF
    {
      "registry-mirrors": ["https://82m9ar63.mirror.aliyuncs.com", "https://hub-mirror.c.163.com"],
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF
    sudo systemctl daemon-reload
    sudo systemctl restart docker
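Since kubelet and Docker need to agree on the cgroup driver, it can be worth confirming that the restart actually picked up the `systemd` setting. The following is a minimal sketch, not part of the original procedure; both function names are hypothetical helpers, and it assumes `docker info --format '{{.CgroupDriver}}'` is available in this Docker version.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the given daemon.json selects the
# systemd cgroup driver (defaults to the path used above).
daemon_json_uses_systemd() {
  file="${1:-/etc/docker/daemon.json}"
  grep -q 'native.cgroupdriver=systemd' "$file"
}

# Hypothetical helper: prints the cgroup driver reported by the running daemon.
docker_cgroup_driver() {
  docker info --format '{{.CgroupDriver}}'
}
```

Running `daemon_json_uses_systemd && docker_cgroup_driver` after the restart should print `systemd` if the configuration took effect.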

2. Install kubeadm

https://developer.aliyun.com/mirror/kubernetes

https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/install-kubeadm

Preparation

    # Disable the firewall
    systemctl stop firewalld.service
    systemctl disable firewalld.service
    # Switch to the Aliyun yum repo
    mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup
    curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
    sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo
    yum makecache
    # Set SELinux to permissive mode (effectively disabling it)
    setenforce 0
    sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
    # Turn off swap
    sed -ri 's/.*swap.*/#&/' /etc/fstab
    swapoff -a
    # Swap should now show 0
    free -g
    # Let iptables see bridged traffic
    cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
    br_netfilter
    EOF
    # modules-load.d only applies at boot, so load the module now as well
    sudo modprobe br_netfilter
    cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    EOF
    sysctl --system
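The preparation steps can be checked before moving on. This is a rough sketch with hypothetical function names, not part of the original notes. It assumes a Linux host where `/proc/swaps` lists active swap areas after one header line, and where `getenforce` prints the SELinux mode.

```shell
#!/bin/sh
# Succeeds if no swap area is active. /proc/swaps has one header line;
# any further line is an active swap device or file.
swap_is_off() {
  swaps_file="${1:-/proc/swaps}"
  awk 'NR > 1 { found = 1 } END { exit found + 0 }' "$swaps_file"
}

# Succeeds unless SELinux reports "Enforcing".
selinux_not_enforcing() {
  [ "$(getenforce 2>/dev/null)" != "Enforcing" ]
}

# Succeeds if bridged traffic is passed to iptables (needs br_netfilter loaded).
bridge_nf_enabled() {
  sysctl_file="${1:-/proc/sys/net/bridge/bridge-nf-call-iptables}"
  [ -r "$sysctl_file" ] && [ "$(cat "$sysctl_file")" = "1" ]
}

# Runs all checks and reports every failure instead of stopping at the first.
preflight() {
  rc=0
  swap_is_off || { echo "swap is still enabled"; rc=1; }
  selinux_not_enforcing || { echo "SELinux is still enforcing"; rc=1; }
  bridge_nf_enabled || { echo "bridge-nf-call-iptables is not 1"; rc=1; }
  return "$rc"
}
```

Calling `preflight` on each node prints nothing and returns 0 when all three settings are in place.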

Installation

    # Add the Aliyun yum repo, then install kubeadm, kubelet and kubectl
    cat << EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    exclude=kubelet kubeadm kubectl
    EOF
    # Install
    # The upstream site offers no official sync method, so the index GPG check may fail.
    # In that case install with: yum install -y --nogpgcheck kubelet kubeadm kubectl
    # yum list | grep kube
    yum install -y kubelet-1.17.3 kubeadm-1.17.3 kubectl-1.17.3 --disableexcludes=kubernetes
    # Enable at boot and start now
    systemctl enable --now kubelet
    # Check kubelet status (it keeps restarting until kubeadm init has run; that is expected)
    systemctl status kubelet
    # Check kubelet version
    kubelet --version

Restart kubelet

    sudo systemctl daemon-reload
    sudo systemctl restart kubelet

3. Install Kubernetes with kubeadm

https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm

Pull the images manually first

    vim images.sh

File contents:

    #!/bin/bash
    images=(
      kube-apiserver:v1.17.3
      kube-proxy:v1.17.3
      kube-controller-manager:v1.17.3
      kube-scheduler:v1.17.3
      coredns:1.6.5
      etcd:3.4.3-0
      pause:3.1
    )
    for imageName in "${images[@]}" ; do
      docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
    done

Run it with bash, since the script uses arrays:

    bash images.sh
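Instead of hard-coding the image list, kubeadm can be asked which images it expects for a given version and repository. The sketch below is an alternative under that assumption, not the original author's script. The function names are hypothetical; `kubeadm config images list` and its `--kubernetes-version` and `--image-repository` flags are real kubeadm options.

```shell
#!/bin/sh
REPO="registry.cn-hangzhou.aliyuncs.com/google_containers"
VERSION="v1.17.3"

# Print the control-plane images kubeadm expects, one per line.
list_images() {
  kubeadm config images list --kubernetes-version "$VERSION" --image-repository "$REPO"
}

# Pull every listed image; stop at the first failure.
pull_images() {
  images=$(list_images) || return 1
  # Image references contain no whitespace, so word splitting is safe here.
  for image in $images; do
    docker pull "$image" || return 1
  done
}
```

`kubeadm config images pull` with the same two flags should achieve the same result in a single step; the loop form is mainly useful when individual pulls need retrying or logging.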

Then initialize Kubernetes

init parameters: https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm-init

Replace the value of --apiserver-advertise-address with the IP of the master host

    kubeadm init \
      --apiserver-advertise-address=10.70.19.33 \
      --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
      --kubernetes-version v1.17.3 \
      --service-cidr=10.96.0.0/16 \
      --pod-network-cidr=192.168.0.0/16

Follow the instructions printed on success

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 10.70.19.33:6443 --token tqaitp.3imn92ur339n4olo \
        --discovery-token-ca-cert-hash sha256:fb3da80b6f1dd5ce6f78cb304bc1d42f775fdbbdc80773ff7c59acb46e11341a

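Allow pods to be scheduled on the master node

    # Allow pods on the master node by removing its taint
    kubectl taint nodes --all node-role.kubernetes.io/master-
    # To forbid scheduling on the master again
    kubectl taint nodes master1 node-role.kubernetes.io/master=:NoSchedule
    # Taint effects:
    #   NoSchedule: pods are never scheduled onto the node
    #   PreferNoSchedule: the scheduler avoids the node where possible
    #   NoExecute: no new pods are scheduled, and pods already on the node are evicted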

Only one Pod network can be installed per cluster.

After installing the Pod network, run kubectl get pods --all-namespaces and check that the CoreDNS Pod is Running to confirm the network works. Once the CoreDNS Pod is up and running, nodes can be joined.

    # Install the network add-on on the master node
    # kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml
    kubectl get ns
    # List pods in all namespaces
    # kubectl get pods --all-namespaces
    kubectl get pod -o wide -A
    # List pods in a specific namespace
    kubectl get pods -n kube-system
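Rather than polling `kubectl get pods` by hand, the CoreDNS check can be scripted. A minimal sketch, assuming the Deployment is named `coredns` in `kube-system` (the kubeadm default); the function name is a hypothetical helper, and the 300s default is an arbitrary choice.

```shell
#!/bin/sh
# Block until the CoreDNS Deployment reports all replicas ready, or time out.
# The first argument is a kubectl duration such as 60s or 5m.
wait_for_coredns() {
  timeout="${1:-300s}"
  kubectl -n kube-system rollout status deployment/coredns --timeout="$timeout"
}
```

For example, `wait_for_coredns 600s && echo "ready to join nodes"` returns a non-zero status if CoreDNS is still not ready after ten minutes.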

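Join the other nodes to the master

    # Run on each of the other nodes; note that the token expires
    kubeadm join 10.70.19.33:6443 --token tqaitp.3imn92ur339n4olo \
        --discovery-token-ca-cert-hash sha256:fb3da80b6f1dd5ce6f78cb304bc1d42f775fdbbdc80773ff7c59acb46e11341a
    # If the token has expired (the default TTL is 24 hours) or was lost,
    # print a new join command with a fresh token
    kubeadm token create --print-join-command
    # Create a token that never expires
    kubeadm token create --ttl 0 --print-join-command
    # On the master, watch pod progress; wait 3-10 minutes and continue once everything is Running
    watch kubectl get pod -n kube-system -o wide
    # Wait until every node's status becomes Ready
    kubectl get nodes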
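If a token is still known but the `--discovery-token-ca-cert-hash` value was lost, it can be recomputed from the cluster CA certificate on the master. The sketch below wraps the openssl pipeline from the kubeadm documentation in a hypothetical function; it assumes an RSA CA key (the kubeadm default) and the default certificate path.

```shell
#!/bin/sh
# Print the SHA-256 hash of the CA certificate's public key (64 hex digits).
# Prefix the result with "sha256:" when passing it to kubeadm join.
ca_cert_hash() {
  ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the master, `echo "sha256:$(ca_cert_hash)"` prints the value in the form that `kubeadm join` expects.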

4. Install a StorageClass (sc) first; NFS is used here

https://kubernetes.io/zh/docs/concepts/storage/storage-classes

https://kubernetes.io/zh/docs/concepts/storage/dynamic-provisioning

Install the NFS server

    yum install -y nfs-utils
    # Create /etc/exports with the following content
    echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports
    # Create the shared directory
    mkdir -p /nfs/data
    # Enable and start the NFS services
    systemctl enable rpcbind
    systemctl enable nfs-server
    systemctl start rpcbind
    systemctl start nfs-server
    exportfs -r
    # Check that the export is active
    exportfs
    # Expected output:
    # /nfs/data <world>

Test mounting NFS from a Pod (optional)

    vim /opt/pod.yaml

File contents:

    apiVersion: v1
    kind: Pod
    metadata:
      name: vol-nfs
      namespace: default
    spec:
      volumes:
        - name: html
          nfs:
            path: /nfs/data   # 1000G
            server: <NFS server address>
      containers:
        - name: myapp
          image: nginx
          volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html/

Deploy and inspect it:

    # Deploy the pod
    kubectl apply -f /opt/pod.yaml
    # Inspect
    kubectl describe pod vol-nfs

Set up the NFS client

    # The server firewall must allow TCP and UDP on ports 111, 662, 875, 892 and 2049,
    # otherwise remote clients cannot connect.
    # Install the client tools
    yum install -y nfs-utils
    # Check that the NFS server exports the shared directory
    showmount -e <NFS server IP>
    # Expected output:
    # Export list for 172.26.165.243:
    # /nfs/data *
    # Mount the shared directory from the NFS server at /root/nfsmount
    mkdir /root/nfsmount
    mount -t nfs <NFS server IP>:/nfs/data /root/nfsmount
    # Write a test file
    echo "hello nfs server" > /root/nfsmount/test.txt
    # On the NFS server, verify that the file was written
    cat /nfs/data/test.txt

Create the provisioner

    # Create the RBAC authorization first
    vim nfs-rbac.yaml

File contents:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-provisioner
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["watch", "create", "update", "patch"]
      - apiGroups: [""]
        resources: ["services", "endpoints"]
        verbs: ["get", "create", "list", "watch", "update"]
      - apiGroups: ["extensions"]
        resources: ["podsecuritypolicies"]
        resourceNames: ["nfs-provisioner"]
        verbs: ["use"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-provisioner
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-provisioner-runner
      apiGroup: rbac.authorization.k8s.io

Apply it:

    kubectl apply -f nfs-rbac.yaml

Create the nfs-client provisioner Deployment

    vim nfs-deployment.yaml

File contents:

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: nfs-client-provisioner
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccount: nfs-provisioner
          containers:
            - name: nfs-client-provisioner
              image: lizhenliang/nfs-client-provisioner
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME   # name of the provisioner
                  value: storage.pri/nfs   # any name works, but later references must match it
                - name: NFS_SERVER
                  value: 10.70.19.33
                - name: NFS_PATH
                  value: /nfs/data
          volumes:
            - name: nfs-client-root
              nfs:
                server: 10.70.19.33
                path: /nfs/data
    # In this image the volume mountPath defaults to /persistentvolumes and must not be changed,
    # otherwise the container fails at runtime

Apply it:

    kubectl apply -f nfs-deployment.yaml

Create the StorageClass

    vim storageclass-nfs.yaml

File contents:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: storage-nfs
    provisioner: storage.pri/nfs   # important: must match PROVISIONER_NAME in the Deployment
    reclaimPolicy: Delete

Apply it and make it the default:

    kubectl apply -f storageclass-nfs.yaml
    # Inspect
    kubectl get sc
    # Mark it as the default; kubectl get sc then shows (default) next to it
    kubectl patch storageclass storage-nfs -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
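Before installing KubeSphere it may help to confirm that dynamic provisioning really works, because the installer depends on a working default StorageClass. The following sketch writes a small test claim against `storage-nfs`. The function name, file name, claim name `test-nfs-claim` and the 10Mi size are all hypothetical choices made for illustration.

```shell
#!/bin/sh
# Write a minimal PersistentVolumeClaim manifest that requests storage
# from the "storage-nfs" StorageClass created above.
write_test_pvc() {
  out="${1:-test-pvc.yaml}"
  cat > "$out" <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-nfs-claim   # hypothetical name
spec:
  storageClassName: storage-nfs
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Mi
EOF
}
```

After `write_test_pvc`, running `kubectl apply -f test-pvc.yaml` followed by `kubectl get pvc test-nfs-claim` should show the claim as `Bound` once the provisioner has created a directory under `/nfs/data`. Delete the claim afterwards with `kubectl delete -f test-pvc.yaml`.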

5. Minimal KubeSphere installation

https://kubesphere.io/zh/docs/quick-start/minimal-kubesphere-on-k8s

Install metrics-server (optional)

    # The image and configuration below are already adjusted and can be used as is.
    # This makes pod and node resource usage visible (only CPU and memory metrics by default;
    # anything more thorough needs Prometheus).
    vim mes.yaml

File contents:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: system:aggregated-metrics-reader
      labels:
        rbac.authorization.k8s.io/aggregate-to-view: "true"
        rbac.authorization.k8s.io/aggregate-to-edit: "true"
        rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rules:
      - apiGroups: ["metrics.k8s.io"]
        resources: ["pods", "nodes"]
        verbs: ["get", "list", "watch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: metrics-server:system:auth-delegator
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:auth-delegator
    subjects:
      - kind: ServiceAccount
        name: metrics-server
        namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: metrics-server-auth-reader
      namespace: kube-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: extension-apiserver-authentication-reader
    subjects:
      - kind: ServiceAccount
        name: metrics-server
        namespace: kube-system
    ---
    apiVersion: apiregistration.k8s.io/v1beta1
    kind: APIService
    metadata:
      name: v1beta1.metrics.k8s.io
    spec:
      service:
        name: metrics-server
        namespace: kube-system
      group: metrics.k8s.io
      version: v1beta1
      insecureSkipTLSVerify: true
      groupPriorityMinimum: 100
      versionPriority: 100
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: metrics-server
      namespace: kube-system
      labels:
        k8s-app: metrics-server
    spec:
      selector:
        matchLabels:
          k8s-app: metrics-server
      template:
        metadata:
          name: metrics-server
          labels:
            k8s-app: metrics-server
        spec:
          serviceAccountName: metrics-server
          volumes:
            # mount in tmp so we can safely use from-scratch images and/or read-only containers
            - name: tmp-dir
              emptyDir: {}
          containers:
            - name: metrics-server
              image: mirrorgooglecontainers/metrics-server-amd64:v0.3.6
              imagePullPolicy: IfNotPresent
              args:
                - --cert-dir=/tmp
                - --secure-port=4443
                - --kubelet-insecure-tls
                - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
              ports:
                - name: main-port
                  containerPort: 4443
                  protocol: TCP
              securityContext:
                readOnlyRootFilesystem: true
                runAsNonRoot: true
                runAsUser: 1000
              volumeMounts:
                - name: tmp-dir
                  mountPath: /tmp
          nodeSelector:
            kubernetes.io/os: linux
            kubernetes.io/arch: "amd64"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: metrics-server
      namespace: kube-system
      labels:
        kubernetes.io/name: "Metrics-server"
        kubernetes.io/cluster-service: "true"
    spec:
      selector:
        k8s-app: metrics-server
      ports:
        - port: 443
          protocol: TCP
          targetPort: main-port
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: system:metrics-server
    rules:
      - apiGroups:
          - ""
        resources:
          - pods
          - nodes
          - nodes/stats
          - namespaces
          - configmaps
        verbs:
          - get
          - list
          - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: system:metrics-server
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:metrics-server
    subjects:
      - kind: ServiceAccount
        name: metrics-server
        namespace: kube-system

Apply it and check the result:

    kubectl apply -f mes.yaml
    # Inspect
    kubectl top nodes

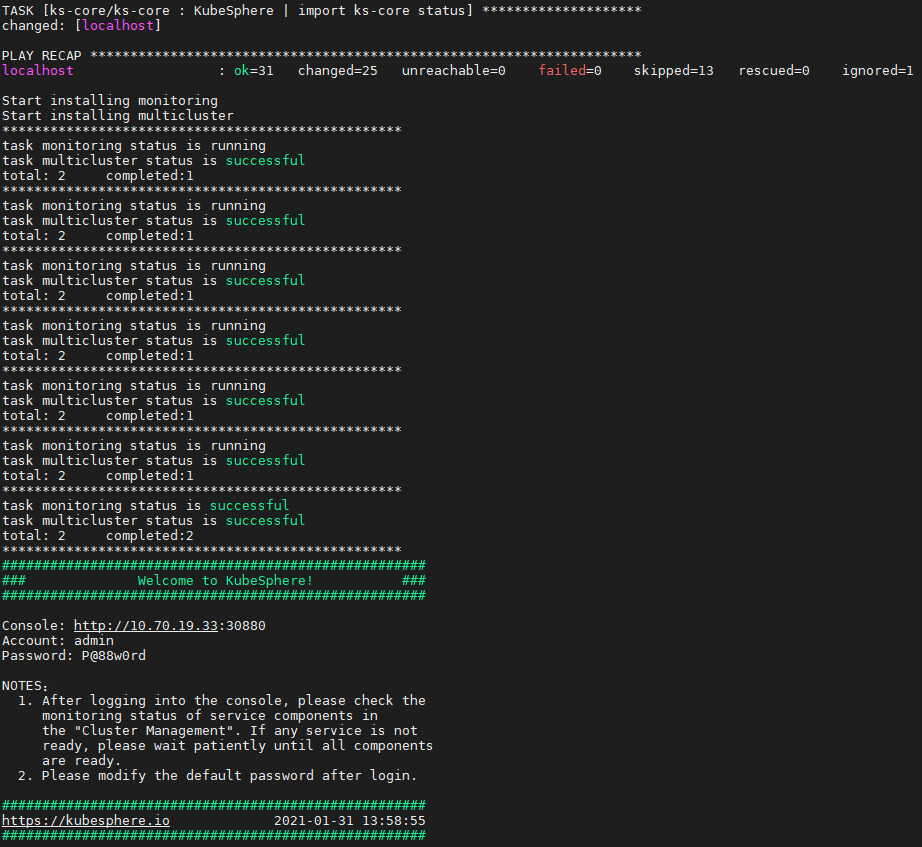

Install KubeSphere

    kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/kubesphere-installer.yaml
    # In the minimal install metrics_server defaults to false; we already installed it ourselves above
    kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/cluster-configuration.yaml
    # Follow the installation log
    kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

If some pod never starts, check whether the etcd monitoring certificates are missing.

The certificates are at the following paths:

--etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt

--etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt

--etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key

Create the secret from the certificates:

    kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs \
      --from-file=etcd-client-ca.crt=/etc/kubernetes/pki/etcd/ca.crt \
      --from-file=etcd-client.crt=/etc/kubernetes/pki/apiserver-etcd-client.crt \
      --from-file=etcd-client.key=/etc/kubernetes/pki/apiserver-etcd-client.key

After creation, the kube-etcd-client-certs secret should be visible. The certificate paths can be read from the kube-apiserver command line:

    ps -ef | grep kube-apiserver

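The installation log should not show any failed tasks.

Use kubectl get pod --all-namespaces to check that all Pods in the KubeSphere namespaces are running normally. If they are, check the console port (30880 by default) with the following command:

    kubectl get svc/ks-console -n kubesphere-system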

Make sure port 30880 is open in the security group, then access the web console through the NodePort (IP:30880) with the default account and password (admin/P@88w0rd).
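The NodePort can also be read programmatically rather than assumed to be 30880. A small sketch with hypothetical function names; it assumes the console port is the first entry in the `ks-console` Service's port list and that the console is served over plain HTTP.

```shell
#!/bin/sh
# Print the NodePort assigned to the ks-console Service.
console_node_port() {
  kubectl get svc ks-console -n kubesphere-system \
    -o jsonpath='{.spec.ports[0].nodePort}'
}

# Print the console URL for the node IP given as the first argument.
console_url() {
  echo "http://$1:$(console_node_port)"
}
```

For example, `console_url 10.70.19.33` prints the address to open in a browser for the master used in these notes.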

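After logging in to the console, you can check the status of the individual components under Service Components. Some components may need time to start before the related services can be used.

Enable pluggable components: https://kubesphere.io/zh/docs/pluggable-components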

6. Uninstall

    kubeadm reset
    rm -rf /etc/cni/net.d/
    rm -rf ~/.kube/config
    docker container stop $(docker container ls -a -q)
    docker system prune --all --force --volumes

Kuboard is a similar product: https://kuboard.cn