I. Introduction to Heapster

Heapster is a container-cluster monitoring and performance-analysis tool with native support for Kubernetes and CoreOS.

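cAdvisor is the well-known monitoring agent in Kubernetes. The open-source cAdvisor (Container Advisor) monitors the running state of the containers on the node where it runs. It is built into the kubelet by default and listens on TCP port 4194 (this applies to older Kubernetes releases; newer kubelets no longer expose this port). In large container clusters, a Heapster + InfluxDB + Grafana stack is commonly used to collect, store, and display cluster performance data.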

cAdvisor web UI: http://< Node-IP >:4194

cAdvisor also provides a RESTful API: https://github.com/google/cadvisor/blob/master/docs/api.md
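The cAdvisor REST API can be called from any HTTP client. As a minimal Python sketch, the snippet below builds the URL of the v1.3 `machine` endpoint and extracts the core count and memory capacity from its JSON response. It assumes the node still exposes cAdvisor on port 4194 and that the response contains the `num_cores` and `memory_capacity` fields; the helper names and the sample JSON are hypothetical.

```python
import json
from urllib.request import urlopen

# Assumption: cAdvisor listens on port 4194 of the node (older kubelet default).
CADVISOR_PORT = 4194


def machine_url(node_ip: str, port: int = CADVISOR_PORT) -> str:
    """Build the URL of cAdvisor's machine-info endpoint (API version 1.3)."""
    return f"http://{node_ip}:{port}/api/v1.3/machine"


def summarize_machine(raw: str) -> dict:
    """Pick the core count and the memory capacity (converted to GiB) out of the JSON body."""
    info = json.loads(raw)
    return {
        "cores": info["num_cores"],
        "memory_gib": info["memory_capacity"] / 2**30,
    }


def fetch_machine(node_ip: str) -> dict:
    """Query a live node; this needs network access to the cAdvisor port."""
    with urlopen(machine_url(node_ip), timeout=5) as resp:
        return summarize_machine(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Hand-written sample shaped like the endpoint's response (not real output).
    sample = '{"num_cores": 4, "memory_capacity": 8589934592}'
    print(summarize_machine(sample))
```

Keeping the parsing separate from the HTTP call lets the parsing be checked without a cluster.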

II. Environment and How It Works

1. Basic environment

Kubernetes + Heapster + InfluxDB + Grafana

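2. How it works

Heapster: collects and aggregates the cAdvisor data from every node in the cluster. It calls the kubelet API on each node, and the kubelet in turn calls the cAdvisor API to gather performance data for all containers on that node. Heapster aggregates this data and writes the results to a backend storage system; several backends are supported, such as memory and InfluxDB.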
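The aggregation step can be pictured with a toy model: per-container CPU and memory samples, as cAdvisor would report them, are summed per Pod and per node. This Python sketch only illustrates the idea and is not Heapster's implementation; the sample layout and the function name are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# One sample per container: (node, namespace, pod, container, cpu_millicores, memory_bytes).
# This layout is hypothetical; it only mirrors what cAdvisor reports per container.
Sample = Tuple[str, str, str, str, int, int]


def aggregate(samples: Iterable[Sample]):
    """Roll container-level samples up to Pod level and node level by summing."""
    pods: Dict[Tuple[str, str], list] = defaultdict(lambda: [0, 0])
    nodes: Dict[str, list] = defaultdict(lambda: [0, 0])
    for node, namespace, pod, _container, cpu, mem in samples:
        # Each sample contributes to exactly one Pod bucket and one node bucket.
        for bucket in (pods[(namespace, pod)], nodes[node]):
            bucket[0] += cpu
            bucket[1] += mem
    # Convert the mutable [cpu, mem] accumulators into tuples for the caller.
    return (
        {key: tuple(val) for key, val in pods.items()},
        {key: tuple(val) for key, val in nodes.items()},
    )


if __name__ == "__main__":
    data = [
        ("kubenode1", "kube-system", "heapster", "heapster", 20, 50_000_000),
        ("kubenode1", "default", "web", "app", 100, 200_000_000),
        ("kubenode1", "default", "web", "sidecar", 10, 30_000_000),
    ]
    pod_totals, node_totals = aggregate(data)
    print(pod_totals[("default", "web")])  # (110, 230000000)
    print(node_totals["kubenode1"])        # (130, 280000000)
```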

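InfluxDB: an open-source time-series database (every record carries a timestamp), used mainly for real-time data collection, time tracking, and storing chart data and raw data. InfluxDB provides a REST API for storing and querying data.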
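To illustrate that REST API, here is a Python sketch that formats a point in InfluxDB's line protocol and builds the `/write` and `/query` URLs of the InfluxDB 1.x HTTP API. The host reuses the monitoring-influxdb Service address from this article; the database name `k8s`, the measurement and the tag names in the example are assumptions, so check them against your own InfluxDB instance (for example with `SHOW MEASUREMENTS`).

```python
from urllib.parse import urlencode

# Assumptions (not from the original setup): InfluxDB 1.x HTTP API on port 8086,
# reached through the monitoring-influxdb Service, and a database named "k8s".
INFLUX = "http://monitoring-influxdb.kube-system.svc:8086"
DB = "k8s"


def _escape_measurement(name: str) -> str:
    """Measurements only need commas and spaces escaped in line protocol."""
    return name.replace(",", r"\,").replace(" ", r"\ ")


def _escape_tag(text: str) -> str:
    """Tag keys and values additionally need '=' escaped."""
    return text.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def line_protocol(measurement: str, tags: dict, value: float, ts_ns: int) -> str:
    """Format one point as 'measurement,tag=v,... value=<float> <nanosecond timestamp>'."""
    parts = [_escape_measurement(measurement)]
    parts += [f"{_escape_tag(k)}={_escape_tag(v)}" for k, v in sorted(tags.items())]
    return f"{','.join(parts)} value={float(value)} {ts_ns}"


def write_url(base: str = INFLUX, db: str = DB) -> str:
    """POST line-protocol text to this URL to store points."""
    return f"{base}/write?{urlencode({'db': db})}"


def query_url(influxql: str, base: str = INFLUX, db: str = DB) -> str:
    """GET this URL to run an InfluxQL query; InfluxDB answers with JSON."""
    return f"{base}/query?{urlencode({'db': db, 'q': influxql})}"


if __name__ == "__main__":
    # Hypothetical measurement and tags, for illustration only.
    print(line_protocol("cpu/usage_rate", {"nodename": "kubenode1", "type": "node"}, 250, 1600000000000000000))
    print(query_url("SHOW MEASUREMENTS"))
```

Tags are sorted before formatting, which InfluxDB documents as a write-performance recommendation rather than a requirement.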

Grafana: renders the time-series data stored in InfluxDB as charts and graphs on dashboards, making it easy to check the state of the cluster.

Heapster, InfluxDB, and Grafana all run as Pods.

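III. Deploying Kubernetes Cluster Performance Monitoring

1. Prepare the images

Image pulls can time out while Kubernetes deploys the services. To avoid this, pull the required images to every relevant node in advance (kubenode1 is used as the example below), or set up a local image registry.

The images that would normally be pulled from gcr.io have been built in a personal Docker Hub account using Docker Hub's "Create Auto-Build GitHub" feature (Docker Hub builds the images from Dockerfiles hosted on GitHub), so they can be pulled directly.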

# heapster

[root@kubenode1 ~]# docker pull netonline/heapster-amd64:v1.5.1

# influxdb

[root@kubenode1 ~]# docker pull netonline/heapster-influxdb-amd64:v1.3.3

# grafana

[root@kubenode1 ~]# docker pull netonline/heapster-grafana-amd64:v4.4.3

2. Download the YAML templates

# Release download page: https://github.com/kubernetes/heapster/releases
# The YAML templates in a release are sometimes newer than those at https://github.com/kubernetes/heapster/tree/master/deploy/kube-config/influxdb, but the differences are small

[root@kubenode1 ~]# cd /usr/local/src/

[root@kubenode1 src]# wget -O heapster-v1.5.1.tar.gz https://github.com/kubernetes/heapster/archive/v1.5.1.tar.gz

# The YAML templates are in the heapster/deploy/kube-config/influxdb directory; there is also a heapster-rbac.yaml in heapster/deploy/kube-config/rbac. The directory layout is the same as on GitHub

[root@kubenode1 src]# tar -zxvf heapster-v1.5.1.tar.gz -C /usr/local/

[root@kubenode1 src]# ln -s /usr/local/heapster-1.5.1 /usr/local/heapster

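3. heapster-rbac.yaml

# Heapster requests the node list from the Kubernetes master, so it needs the corresponding permissions;

# heapster-rbac.yaml normally needs no changes: it creates a ClusterRoleBinding to the ClusterRole system:heapster, which ships with Kubernetes, to grant those permissions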

[root@kubenode1 ~]# cd /usr/local/heapster/deploy/kube-config/rbac/

[root@kubenode1 rbac]# vi heapster-rbac.yaml

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: heapster
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:heapster
subjects:
- kind: ServiceAccount
  name: heapster
  namespace: kube-system

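4. heapster.yaml

heapster.yaml consists of 3 parts: ServiceAccount, Deployment, and Service.

1) ServiceAccount

The ServiceAccount part normally needs no changes: it creates the ServiceAccount resource, which receives the permissions defined in the RBAC file.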

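2) Deployment

# Change: line 23, replace the image name;

# --source: the metrics source; Heapster calls the Kubernetes cluster API over the secure port;

# --sink: the backend storage, here InfluxDB; the address uses the InfluxDB Service name, which requires working cluster DNS. If no DNS service is configured, use the Service's ClusterIP instead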
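The addressing rule for --sink can be expressed as a small Python helper: with cluster DNS, the InfluxDB Service is addressed as `<service>.<namespace>.svc`; otherwise its ClusterIP is used. The function name and the ClusterIP in the example are hypothetical.

```python
import ipaddress
from typing import Optional


def influxdb_sink(service: str = "monitoring-influxdb", namespace: str = "kube-system",
                  cluster_ip: Optional[str] = None, port: int = 8086) -> str:
    """Return Heapster's --sink flag for an InfluxDB Service.

    With cluster DNS, the Service is reachable as <service>.<namespace>.svc;
    without DNS, pass the Service's ClusterIP instead.
    """
    if cluster_ip is not None:
        addr = ipaddress.ip_address(cluster_ip)  # raises ValueError for a malformed address
        # IPv6 literals must be wrapped in brackets inside a URL.
        host = f"[{addr}]" if addr.version == 6 else str(addr)
    else:
        host = f"{service}.{namespace}.svc"
    return f"--sink=influxdb:http://{host}:{port}"


if __name__ == "__main__":
    print(influxdb_sink())                         # DNS form, as used in heapster.yaml
    print(influxdb_sink(cluster_ip="10.96.0.50"))  # hypothetical ClusterIP
```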

[root@kubenode1 ~]# cd /usr/local/heapster/deploy/kube-config/influxdb/

[root@kubenode1 influxdb]# sed -i 's|gcr.io/google_containers/heapster-amd64:v1.5.1|netonline/heapster-amd64:v1.5.1|g' heapster.yaml

[root@kubenode1 influxdb]# vi heapster.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: heapster
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: netonline/heapster-amd64:v1.5.1
        imagePullPolicy: IfNotPresent
        command:
        - /heapster
        - --source=kubernetes:https://kubernetes.default
        - --sink=influxdb:http://monitoring-influxdb.kube-system.svc:8086
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster

3) Service

The Service part normally needs no changes.
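A Service finds its Pods purely by label matching: every key/value pair in the Service's `selector` must appear in the Pod template labels of the Deployment. If the Deployment labels its Pods `app: heapster` while the Service selects `k8s-app: heapster`, the Service gets no endpoints. A minimal Python sketch of that rule, covering equality-based selectors only (the function name is hypothetical):

```python
from typing import Dict


def service_selects(selector: Dict[str, str], pod_labels: Dict[str, str]) -> bool:
    """Equality-based selection: every selector key must exist on the Pod with the same value."""
    if not selector:
        # A Service without a selector gets no automatically managed endpoints.
        return False
    return all(pod_labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    heapster_service = {"k8s-app": "heapster"}
    print(service_selects(heapster_service, {"task": "monitoring", "k8s-app": "heapster"}))  # True
    print(service_selects(heapster_service, {"task": "monitoring", "app": "heapster"}))      # False
```

Extra Pod labels such as `task: monitoring` do not matter; only the selector's keys are compared.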

5. influxdb.yaml

influxdb.yaml consists of 2 parts: Deployment and Service.

1) Deployment

# Change: line 16, replace the image name;

[root@kubenode1 influxdb]# sed -i 's|gcr.io/google_containers/heapster-influxdb-amd64:v1.3.3|netonline/heapster-influxdb-amd64:v1.3.3|g' influxdb.yaml

[root@kubenode1 influxdb]# vi influxdb.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-influxdb
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: influxdb
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: influxdb
    spec:
      containers:
      - name: influxdb
        image: netonline/heapster-influxdb-amd64:v1.3.3
        volumeMounts:
        - mountPath: /data
          name: influxdb-storage
      volumes:
      - name: influxdb-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-influxdb
  name: monitoring-influxdb
  namespace: kube-system
spec:
  ports:
  - port: 8086
    targetPort: 8086
  selector:
    k8s-app: influxdb

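2) Service

The Service part normally needs no changes; just make sure its name, monitoring-influxdb, matches the InfluxDB address used in heapster.yaml (--sink) and grafana.yaml (INFLUXDB_HOST).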

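6. grafana.yaml

grafana.yaml consists of 2 parts: Deployment and Service.

1) Deployment

# Change: line 16, replace the image name;

# Change: line 43, uncomment it; once "GF_SERVER_ROOT_URL" is set to this value, Grafana can only be accessed through the API Server proxy;

# Change: line 44, comment it out;

# Set the INFLUXDB_HOST value to the InfluxDB Service name, which relies on cluster DNS, or use the ClusterIP directly

# Note: the manifest shown below keeps "value: /", which is what the NodePort access in section VI relies on; if Grafana will be accessed through the NodePort, skip the two sed commands for lines 43 and 44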
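The proxy path in the commented-out GF_SERVER_ROOT_URL value follows the API server's general Service-proxy pattern `/api/v1/namespaces/<namespace>/services/<service>[:<port>]/proxy`. A small Python sketch that builds it (the function name is hypothetical); prefixing the result with your API server address gives the browser URL.

```python
def apiserver_proxy_path(service: str, namespace: str = "kube-system", port: str = "") -> str:
    """Build the API server path that proxies HTTP traffic to a Service.

    An optional port name or number is appended as '<service>:<port>'.
    """
    target = f"{service}:{port}" if port else service
    return f"/api/v1/namespaces/{namespace}/services/{target}/proxy"


if __name__ == "__main__":
    # Matches the commented-out GF_SERVER_ROOT_URL value in grafana.yaml.
    print(apiserver_proxy_path("monitoring-grafana"))
```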

[root@kubenode1 influxdb]# sed -i 's|gcr.io/google_containers/heapster-grafana-amd64:v4.4.3|netonline/heapster-grafana-amd64:v4.4.3|g' grafana.yaml

[root@kubenode1 influxdb]# sed -i '43s|# value:|value:|g' grafana.yaml

[root@kubenode1 influxdb]# sed -i '44s|value:|# value:|g' grafana.yaml

[root@kubenode1 influxdb]# vi grafana.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-grafana
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: grafana
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      containers:
      - name: grafana
        image: netonline/heapster-grafana-amd64:v4.4.3
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certificates
          readOnly: true
        - mountPath: /var
          name: grafana-storage
        env:
        - name: INFLUXDB_HOST
          value: monitoring-influxdb
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
          # The following env variables are required to make Grafana accessible via
          # the kubernetes api-server proxy. On production clusters, we recommend
          # removing these env variables, setup auth for grafana, and expose the grafana
          # service using a LoadBalancer or a public IP.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          # If you're only using the API Server proxy, set this value instead:
          # value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy
          value: /
      volumes:
      - name: ca-certificates
        hostPath:
          path: /etc/ssl/certs
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: kube-system
spec:
  # In a production setup, we recommend accessing Grafana through an external Loadbalancer
  # or through a public IP.
  # type: LoadBalancer
  # You could also use NodePort to expose the service at a randomly-generated port
  type: NodePort
  ports:
  - port: 80
    targetPort: 3000
  selector:
    k8s-app: grafana

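2) Service

The Service part normally needs no changes; just make sure the Service name, monitoring-grafana, is referenced consistently (for example in the API Server proxy path).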

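IV. Verifying Kubernetes Cluster Performance Monitoring

1. Start the monitoring services

# Copy heapster-rbac.yaml into the influxdb/ directory so that "kubectl create -f ." creates every resource in one step;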

[root@kubenode1 ~]# cd /usr/local/heapster/deploy/kube-config/influxdb/

[root@kubenode1 influxdb]# cp /usr/local/heapster/deploy/kube-config/rbac/heapster-rbac.yaml .

[root@kubenode1 influxdb]# kubectl create -f .

2. Check the services

[root@kubernetes-master-001 /usr/local/heapster/deploy/kube-config/influxdb]# kubectl get deployments.apps -n kube-system |grep -E 'heapster|monitoring'

heapster              1/1     1            1           7m57s
monitoring-grafana    1/1     1            1           12m
monitoring-influxdb   1/1     1            1           4m31s

[root@kubernetes-master-001 /usr/local/heapster/deploy/kube-config/influxdb]# kubectl get pods -n kube-system |grep -E 'heapster|monitoring'

heapster-5b94686b4d-dn2gf              1/1     Running   0          8m9s
monitoring-grafana-f65bfb89-d6fr5      1/1     Running   0          11m
monitoring-influxdb-7b75748dfd-2xf67   1/1     Running   0          60s
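Instead of eyeballing the Pod list above, the check can be scripted. The Python sketch below parses `kubectl get pods` text (assuming the default NAME, READY, STATUS, RESTARTS, AGE columns) and reports monitoring Pods that are not fully ready or not Running; the function name and the name prefixes are hypothetical.

```python
from typing import List, Sequence


def not_ready_pods(kubectl_output: str,
                   prefixes: Sequence[str] = ("heapster", "monitoring")) -> List[str]:
    """Return matching Pods whose READY count is incomplete or whose STATUS is not Running."""
    problems = []
    for line in kubectl_output.strip().splitlines():
        cols = line.split()
        # Skip the header row, short lines and Pods outside the monitoring stack.
        if len(cols) < 3 or cols[0] == "NAME" or not cols[0].startswith(tuple(prefixes)):
            continue
        name, ready, status = cols[0], cols[1], cols[2]
        up, total = ready.split("/")
        if status != "Running" or up != total:
            problems.append(name)
    return problems


if __name__ == "__main__":
    output = """\
heapster-5b94686b4d-dn2gf 1/1 Running 0 8m9s
monitoring-grafana-f65bfb89-d6fr5 1/1 Running 0 11m
monitoring-influxdb-7b75748dfd-2xf67 1/1 Running 0 60s"""
    print(not_ready_pods(output))  # []
```

An empty list means every matching Pod is Running with all containers ready.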

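V. Accessing the Dashboard

Open the Dashboard in a browser: https://192.168.13.100:30028/#/pod?namespace=default (this requires the Kubernetes Dashboard to be installed)

Note: if the Dashboard is not connected to the Heapster monitoring platform, it cannot display CPU and memory metric graphs for Node and Pod resources

Node resource CPU/memory metric graphs:

Pod resource CPU/memory metric graphs: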

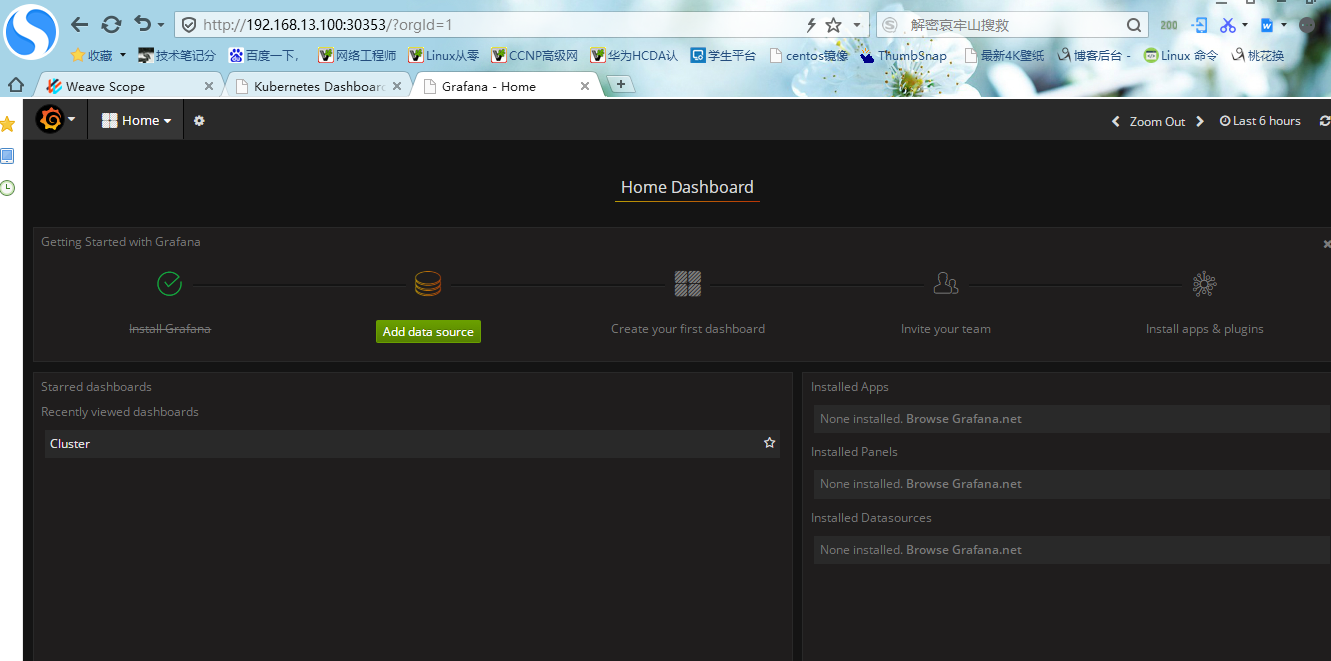

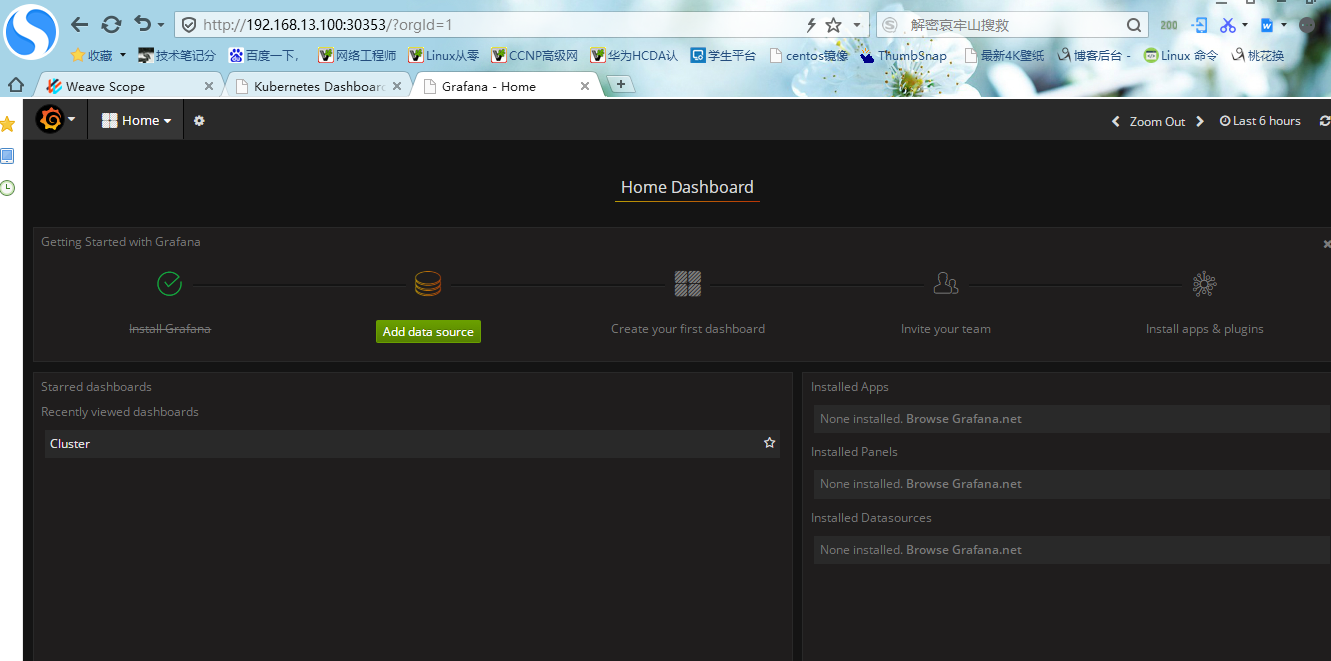

VI. Accessing Grafana

1. Find Grafana's external access port

[root@kubernetes-master-001 /usr/local/heapster/deploy/kube-config/influxdb]# kubectl get svc -n kube-system

NAME                 TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
monitoring-grafana   NodePort   10.106.49.124   <none>        80:30353/TCP   118m

2. Access from a browser

Open in a browser: http://192.168.13.100:30353/?orgId=1
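The browser address is simply a node IP plus the NodePort shown in the PORT(S) column: `80:30353/TCP` means Service port 80 is exposed on node port 30353. A small Python sketch of that mapping; the function name is hypothetical and only `<port>:<nodePort>/<protocol>` entries are handled.

```python
import re


def nodeport_url(node_ip: str, ports_column: str, service_port: int = 80) -> str:
    """Turn a PORT(S) value such as '80:30353/TCP' into 'http://<node_ip>:<nodePort>/'."""
    for entry in ports_column.split(","):
        match = re.fullmatch(r"(\d+):(\d+)/(TCP|UDP|SCTP)", entry.strip())
        if match and int(match.group(1)) == service_port:
            return f"http://{node_ip}:{match.group(2)}/"
    raise ValueError(f"no NodePort mapping for port {service_port} in {ports_column!r}")


if __name__ == "__main__":
    print(nodeport_url("192.168.13.100", "80:30353/TCP"))  # http://192.168.13.100:30353/
```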

3. Cluster node information

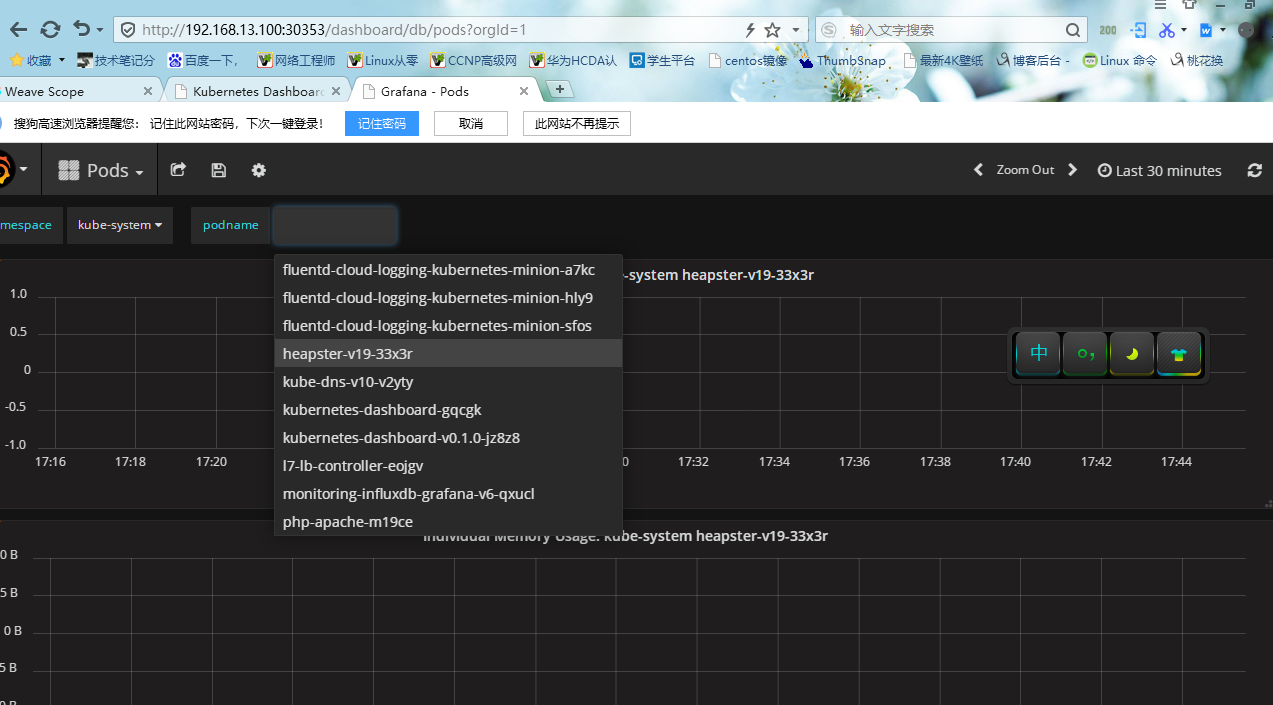

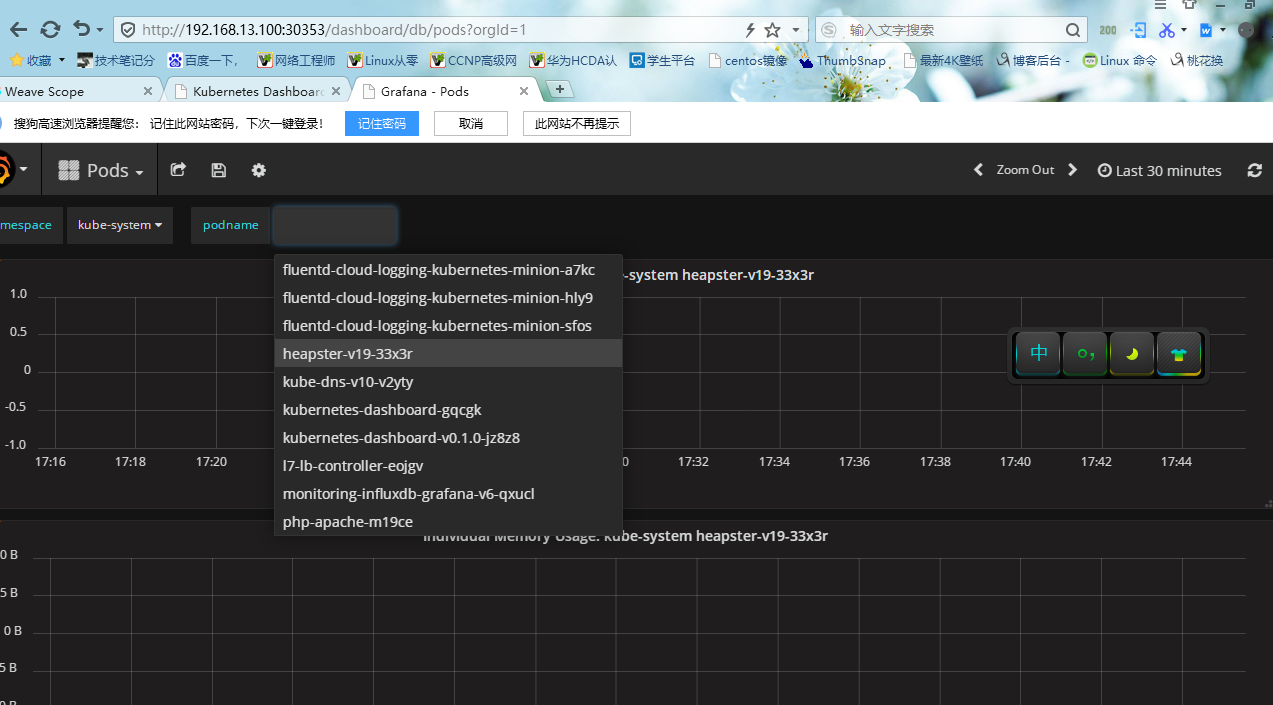

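4. Pod information

VII. The K8S Monitoring Component metrics-server

1. Grant permissions

metrics-server needs to read data from Kubernetes, so it needs an identity with the corresponding permissions. Note that the command below does not create a dedicated user: it binds the cluster-admin ClusterRole to system:anonymous, which gives full administrator rights to every unauthenticated request to the API server (when anonymous authentication is enabled, which is the default). The manifest in the next step separately grants the metrics-server ServiceAccount its permissions through RBAC, so avoid this anonymous binding outside test environments.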

[root@kubernetes-master-001 ~]# kubectl create clusterrolebinding system:anonymous --clusterrole=cluster-admin --user=system:anonymous

clusterrolebinding.rbac.authorization.k8s.io/system:anonymous created

2. Create the configuration file

[root@kubernetes-master-001 ~]# vi components.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:aggregated-metrics-reader
  labels:
    rbac.authorization.k8s.io/aggregate-to-view: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
rules:
- apiGroups: ["metrics.k8s.io"]
  resources: ["pods", "nodes"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
spec:
  service:
    name: metrics-server
    namespace: kube-system
  group: metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      - name: ca-ssl
        hostPath:
          path: /etc/kubernetes/ssl
      containers:
      - name: metrics-server
        image: registry.cn-hangzhou.aliyuncs.com/k8sos/metrics-server:v0.4.1
        imagePullPolicy: IfNotPresent
        args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --metric-resolution=30s
        - --kubelet-insecure-tls
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --requestheader-username-headers=X-Remote-User
        - --requestheader-group-headers=X-Remote-Group
        - --requestheader-extra-headers-prefix=X-Remote-Extra-
        ports:
        - name: main-port
          containerPort: 4443
          protocol: TCP
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp
        - name: ca-ssl
          mountPath: /etc/kubernetes/ssl
      nodeSelector:
        kubernetes.io/os: linux
---
apiVersion: v1
kind: Service
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    kubernetes.io/name: "Metrics-server"
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: metrics-server
  ports:
  - port: 443
    protocol: TCP
    targetPort: main-port
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

3. Create the resources

[root@kubernetes-master-001 ~]# kubectl apply -f components.yaml

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
serviceaccount/metrics-server created
deployment.apps/metrics-server created
service/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
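Once the metrics-server Pod is running, the `metrics.k8s.io` API it registers can be read with `kubectl top nodes`, or as raw JSON with `kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes`. The Python sketch below assumes that raw JSON as input and converts each node's CPU and memory quantities to cores and MiB. The function names and the sample document are hypothetical, and only the quantity suffixes listed in the code are handled.

```python
import json
from decimal import Decimal

# Multipliers for the Kubernetes quantity suffixes handled by this sketch.
_SUFFIX = {
    "n": Decimal("1e-9"), "u": Decimal("1e-6"), "m": Decimal("1e-3"),
    "Ki": Decimal(2**10), "Mi": Decimal(2**20), "Gi": Decimal(2**30), "Ti": Decimal(2**40),
    "k": Decimal(10**3), "M": Decimal(10**6), "G": Decimal(10**9),
}
# Two-letter binary suffixes are checked before the single-letter ones.
_ORDER = ("Ki", "Mi", "Gi", "Ti", "n", "u", "m", "k", "M", "G")


def parse_quantity(q: str) -> Decimal:
    """Convert a quantity string such as '250m', '123456789n' or '1024Ki' to a plain number."""
    for suffix in _ORDER:
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * _SUFFIX[suffix]
    return Decimal(q)


def node_usage(raw: str) -> dict:
    """Map node name -> (CPU cores, memory MiB) from a NodeMetricsList JSON document."""
    result = {}
    for item in json.loads(raw)["items"]:
        usage = item["usage"]
        cores = parse_quantity(usage["cpu"])
        mib = parse_quantity(usage["memory"]) / Decimal(2**20)
        result[item["metadata"]["name"]] = (cores, mib)
    return result


if __name__ == "__main__":
    # Hand-written sample in the NodeMetricsList shape (not real cluster output).
    sample = ('{"kind": "NodeMetricsList", "items": [{"metadata": {"name": "kubernetes-master-001"},'
              ' "usage": {"cpu": "250m", "memory": "2048Mi"}}]}')
    for node, (cores, mib) in node_usage(sample).items():
        print(f"{node}: {cores} cores, {mib} MiB")
```

`Decimal` is used instead of `float` so that values such as `123456789n` convert without rounding error.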