The source code is as follows:

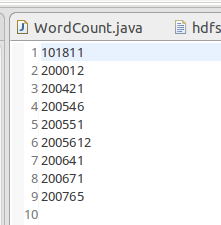

    package mapreduce;

    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class WordCount {

        public static void main(String[] args)
                throws IOException, ClassNotFoundException, InterruptedException {
            Job job = Job.getInstance();
            job.setJobName("WordCount");
            job.setJarByClass(WordCount.class);
            job.setMapperClass(doMapper.class);
            job.setReducerClass(doReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // Input file and output directory in HDFS; the output directory must not exist yet.
            Path in = new Path("hdfs://localhost:9000/user/hadoop/mymapreduce1/in/buyer_favorite1.txt");
            Path out = new Path("hdfs://localhost:9000/user/hadoop/mymapreduce1/out");
            FileInputFormat.addInputPath(job, in);
            FileOutputFormat.setOutputPath(job, out);

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }

        public static class doMapper extends Mapper<Object, Text, Text, IntWritable> {
            public static final IntWritable one = new IntWritable(1);
            public static Text word = new Text();

            @Override
            protected void map(Object key, Text value, Context context)
                    throws IOException, InterruptedException {
                StringTokenizer tokenizer = new StringTokenizer(value.toString(), " ");
                // Skip blank lines; nextToken() would otherwise throw NoSuchElementException.
                if (!tokenizer.hasMoreTokens()) {
                    return;
                }
                // Only the first field of each line is used as the key.
                word.set(tokenizer.nextToken());
                context.write(word, one);
            }
        }

        public static class doReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
            private IntWritable result = new IntWritable();

            @Override
            protected void reduce(Text key, Iterable<IntWritable> values, Context context)
                    throws IOException, InterruptedException {
                // Sum all the 1s emitted for this key.
                int sum = 0;
                for (IntWritable value : values) {
                    sum += value.get();
                }
                result.set(sum);
                context.write(key, result);
            }
        }
    }
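To see what the job computes without starting a cluster, the mapper and reducer logic can be mimicked in plain Java. This is only a rough local sketch, not part of the original program: the class name `LocalWordCount`, the method `countFirstTokens`, and the sample records are all made up for illustration, and blank lines are skipped as an assumption.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical helper class; it does not use Hadoop at all.
class LocalWordCount {

    // Mimics doMapper + doReducer: emit (firstToken, 1) per line, then sum per key.
    static Map<String, Integer> countFirstTokens(List<String> lines) {
        // TreeMap keeps keys sorted, similar to the sorted keys a reducer receives.
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            StringTokenizer tokenizer = new StringTokenizer(line, " ");
            if (!tokenizer.hasMoreTokens()) {
                continue; // assumption: blank lines are ignored
            }
            String word = tokenizer.nextToken();  // "map": the key is the first field
            counts.merge(word, 1, Integer::sum);  // "reduce": add up the 1s per key
        }
        return counts;
    }

    public static void main(String[] args) {
        // Made-up sample records; not taken from buyer_favorite1.txt.
        List<String> lines = Arrays.asList(
            "10181 1000481 2010-04-04",
            "20001 1001597 2010-04-07",
            "10181 1000486 2010-04-08");
        System.out.println(countFirstTokens(lines)); // prints {10181=2, 20001=1}
    }
}
```

Because only the first field of each line becomes the key, the result is the number of lines per first field rather than a count of every word in the file.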

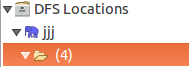

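Running the program requires a plugin named hadoop-eclipse-plugin-2.8.3.jar. The plugin can also be used to browse the files in HDFS, as shown in the figure below:

However, files cannot be added or modified through this plugin; I used shell commands to add files to HDFS.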

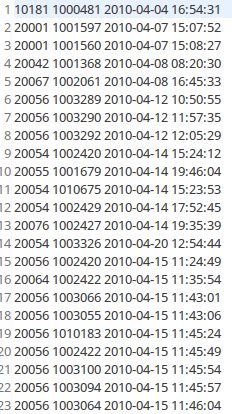

Part of the data file looks like this:

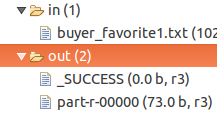

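A screenshot of the run is shown below:

Notes:

1. Hadoop must be started before operating on HDFS or running the program.

2. I could not open localhost:50070 in a browser, but this did not affect file operations on HDFS through shell commands.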

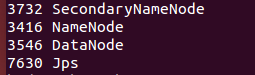

3. Running the jps command on the command line should show the following processes:

None of them may be missing.
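The check in note 3 can also be done programmatically. The following is a minimal sketch under stated assumptions: the class `JpsCheck`, the method `missingDaemons`, and the sample output are hypothetical, and the required daemon list is assumed from a typical pseudo-distributed Hadoop 2.x setup rather than taken from the original screenshot, which remains the authoritative list.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical helper; it parses text that was already captured from `jps`.
class JpsCheck {

    // Assumption: daemons of a typical pseudo-distributed Hadoop 2.x installation.
    static final List<String> REQUIRED = Arrays.asList(
        "NameNode", "DataNode", "SecondaryNameNode", "ResourceManager", "NodeManager");

    // jps prints one "<pid> <name>" pair per line; return the required names that are absent.
    static List<String> missingDaemons(String jpsOutput) {
        Set<String> running = new HashSet<>();
        for (String line : jpsOutput.split("\\R")) {
            String[] parts = line.trim().split("\\s+");
            if (parts.length >= 2) {
                running.add(parts[1]);
            }
        }
        List<String> missing = new ArrayList<>();
        for (String name : REQUIRED) {
            if (!running.contains(name)) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Made-up jps output in which the YARN daemons are not running.
        String sample = "2801 NameNode\n2935 DataNode\n3120 SecondaryNameNode\n3500 Jps\n";
        System.out.println(missingDaemons(sample)); // prints [ResourceManager, NodeManager]
    }
}
```

An empty list means every daemon in the assumed list is running; any name that is returned points at a service that still needs to be started.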