1. Helm

1.1 What is Helm

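Before Helm, deploying an application to Kubernetes meant creating the Deployment, Service, and other resources one by one, which is tedious. As more projects move to microservices, deploying and managing complex applications in containers gets harder still. Helm packages an application and supports versioned releases, which greatly simplifies deploying and managing Kubernetes applications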

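In essence, Helm makes Kubernetes application resources (Deployment, Service, and so on) configurable and dynamically generated. It renders the resource manifest files (deployment.yaml, service.yaml) from templates and then deploys them to the cluster through the Kubernetes API (in Helm v2, via Tiller), so no manual kubectl steps are needed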

Helm is a package manager for Kubernetes, similar to YUM, that encapsulates the deployment workflow. Helm has two key concepts: chart and release

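- chart: the collection of information needed to create an application, including configuration templates for the various Kubernetes objects, parameter definitions, dependencies, and documentation. A chart is the self-contained logical unit of application deployment. Think of a chart as a software package in apt or yum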

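- release: a running instance of a chart, representing a running application. Installing a chart into a Kubernetes cluster creates a release. The same chart can be installed into one cluster many times, and each installation is a separate release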

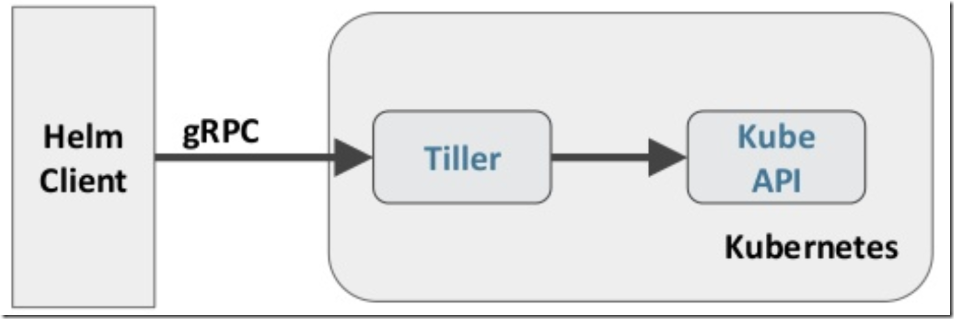

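Helm v2, the version used here, has two components, the Helm client and the Tiller server, as shown in the figure (Helm v3 later removed Tiller)

The Helm client creates and manages charts and releases and talks to Tiller. The Tiller server runs inside the Kubernetes cluster, handles requests from the Helm client, and interacts with the Kubernetes API server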

1.2 Deploying Helm

1) Download the Helm package

[root@k8s-master01 helm]# wget https://storage.googleapis.com/kubernetes-helm/helm-v2.13.1-linux-amd64.tar.gz

[root@k8s-master01 helm]# ls

helm-v2.13.1-linux-amd64.tar.gz

[root@k8s-master01 helm]# tar xf helm-v2.13.1-linux-amd64.tar.gz

[root@k8s-master01 helm]# ls

helm-v2.13.1-linux-amd64.tar.gz linux-amd64

[root@k8s-master01 helm]# cp linux-amd64/helm /usr/local/bin/

[root@k8s-master01 helm]# chmod +x /usr/local/bin/helm

2) The Kubernetes API server has RBAC enabled, so Tiller needs its own service account, named tiller, with a suitable role bound to it. Create the file rbac-config.yaml:

[root@k8s-master01 helm]# cat rbac-config.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system

[root@k8s-master01 helm]# kubectl create -f rbac-config.yaml

serviceaccount/tiller created

clusterrolebinding.rbac.authorization.k8s.io/tiller created

# Initialize Helm

[root@k8s-master01 helm]# helm init --service-account tiller --skip-refresh

Creating /root/.helm

Creating /root/.helm/repository

Creating /root/.helm/repository/cache

Creating /root/.helm/repository/local

Creating /root/.helm/plugins

Creating /root/.helm/starters

Creating /root/.helm/cache/archive

Creating /root/.helm/repository/repositories.yaml

Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com

Adding local repo with URL: http://127.0.0.1:8879/charts

$HELM_HOME has been configured at /root/.helm.

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.

To prevent this, run `helm init` with the --tiller-tls-verify flag.

For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation

Happy Helming!

[root@k8s-master01 helm]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5c98db65d4-6vgp6 1/1 Running 4 4d

coredns-5c98db65d4-8zbqt 1/1 Running 4 4d

etcd-k8s-master01 1/1 Running 4 4d

kube-apiserver-k8s-master01 1/1 Running 4 4d

kube-controller-manager-k8s-master01 1/1 Running 4 4d

kube-flannel-ds-amd64-dqgj6 1/1 Running 0 17h

kube-flannel-ds-amd64-mjzxt 1/1 Running 0 17h

kube-flannel-ds-amd64-z76v7 1/1 Running 3 4d

kube-proxy-4g57j 1/1 Running 3 3d23h

kube-proxy-qd4xm 1/1 Running 4 4d

kube-proxy-x66cd 1/1 Running 3 3d23h

kube-scheduler-k8s-master01 1/1 Running 4 4d

tiller-deploy-58565b5464-lrsmr 0/1 Running 0 5s

# Check the Helm version

[root@k8s-master01 helm]# helm version

Client: &version.Version{SemVer:"v2.13.1", GitCommit:"618447cbf203d147601b4b9bd7f8c37a5d39fbb4", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.13.1", GitCommit:"618447cbf203d147601b4b9bd7f8c37a5d39fbb4", GitTreeState:"clean"}

1.3 Helm repository

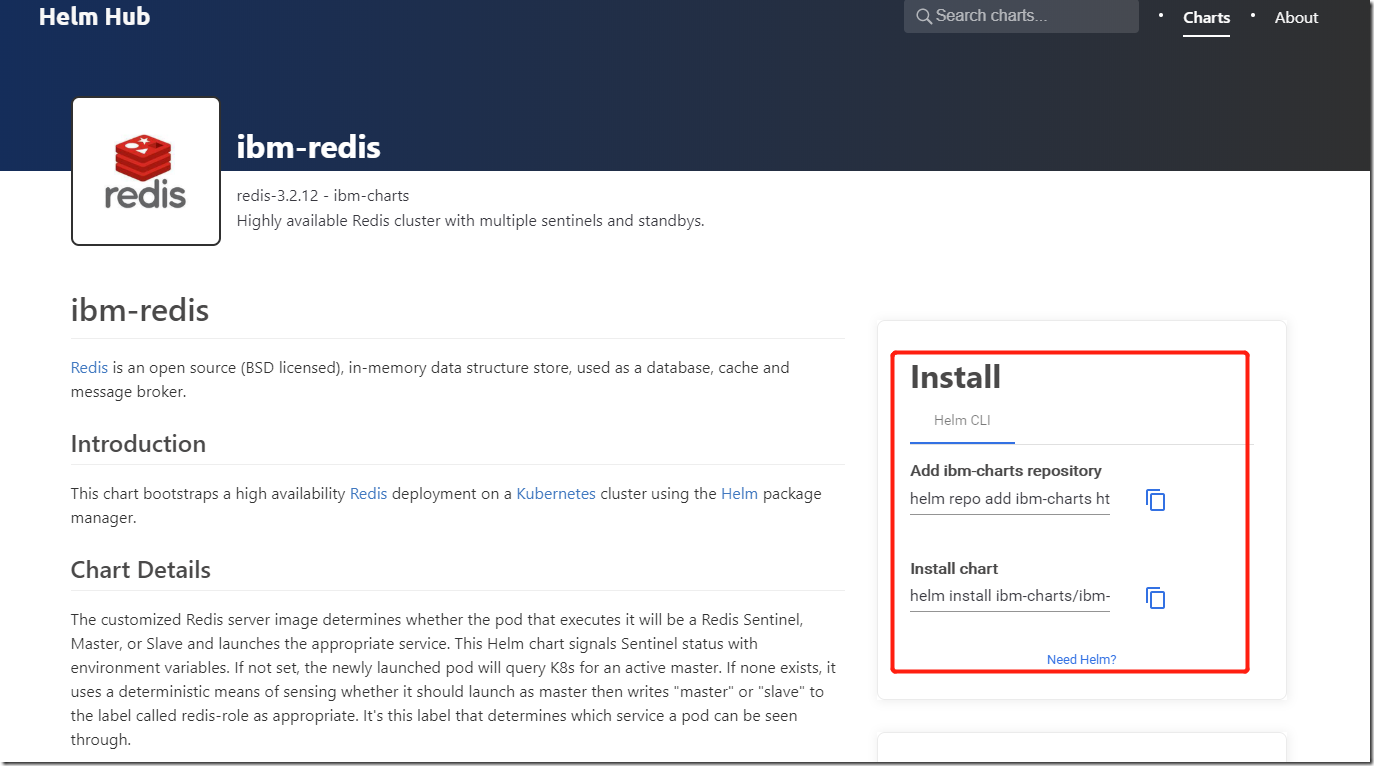

Repository address: https://hub.helm.sh/

1.4 Custom Helm templates

# Create a directory

[root@k8s-master01 helm]# mkdir hello-world

[root@k8s-master01 helm]# cd hello-world/

# Create the self-describing file Chart.yaml; it must define name and version

[root@k8s-master01 hello-world]# cat Chart.yaml

name: hello-world

version: 1.0.0

# Create the template files used to generate the Kubernetes resource manifests

[root@k8s-master01 hello-world]# mkdir templates

[root@k8s-master01 hello-world]# cd templates/

[root@k8s-master01 templates]# cat deployment.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: hello-world
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: hello-world
    spec:
      containers:
        - name: hello-world
          image: {{ .Values.image.repository }}:{{ .Values.image.tag }}
          ports:
            - containerPort: 80
              protocol: TCP

[root@k8s-master01 templates]# cat service.yaml

apiVersion: v1
kind: Service
metadata:
  name: hello-world
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
  selector:
    app: hello-world

# Create values.yaml

[root@k8s-master01 templates]# cd ../

[root@k8s-master01 hello-world]# cat values.yaml

image:
  repository: hub.dianchou.com/library/myapp
  tag: 'v1'

# Use helm install RELATIVE_PATH_TO_CHART to create a release

[root@k8s-master01 hello-world]# helm install .

NAME: icy-zebu

LAST DEPLOYED: Thu Feb 6 16:15:35 2020

NAMESPACE: default

STATUS: DEPLOYED

RESOURCES:

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

hello-world-7676d54884-xzv2v 0/1 ContainerCreating 0 1s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hello-world NodePort 10.108.3.115 <none> 80:31896/TCP 1s

==> v1beta1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

hello-world 0/1 1 0 1s

[root@k8s-master01 hello-world]# helm ls

NAME REVISION UPDATED STATUS CHART APP VERSION NAMESPACE

icy-zebu 1 Thu Feb 6 16:15:35 2020 DEPLOYED hello-world-1.0.0 default

[root@k8s-master01 hello-world]# kubectl get pod

NAME READY STATUS RESTARTS AGE

hello-world-7676d54884-xzv2v 1/1 Running 0 33s

Upgrading the version:

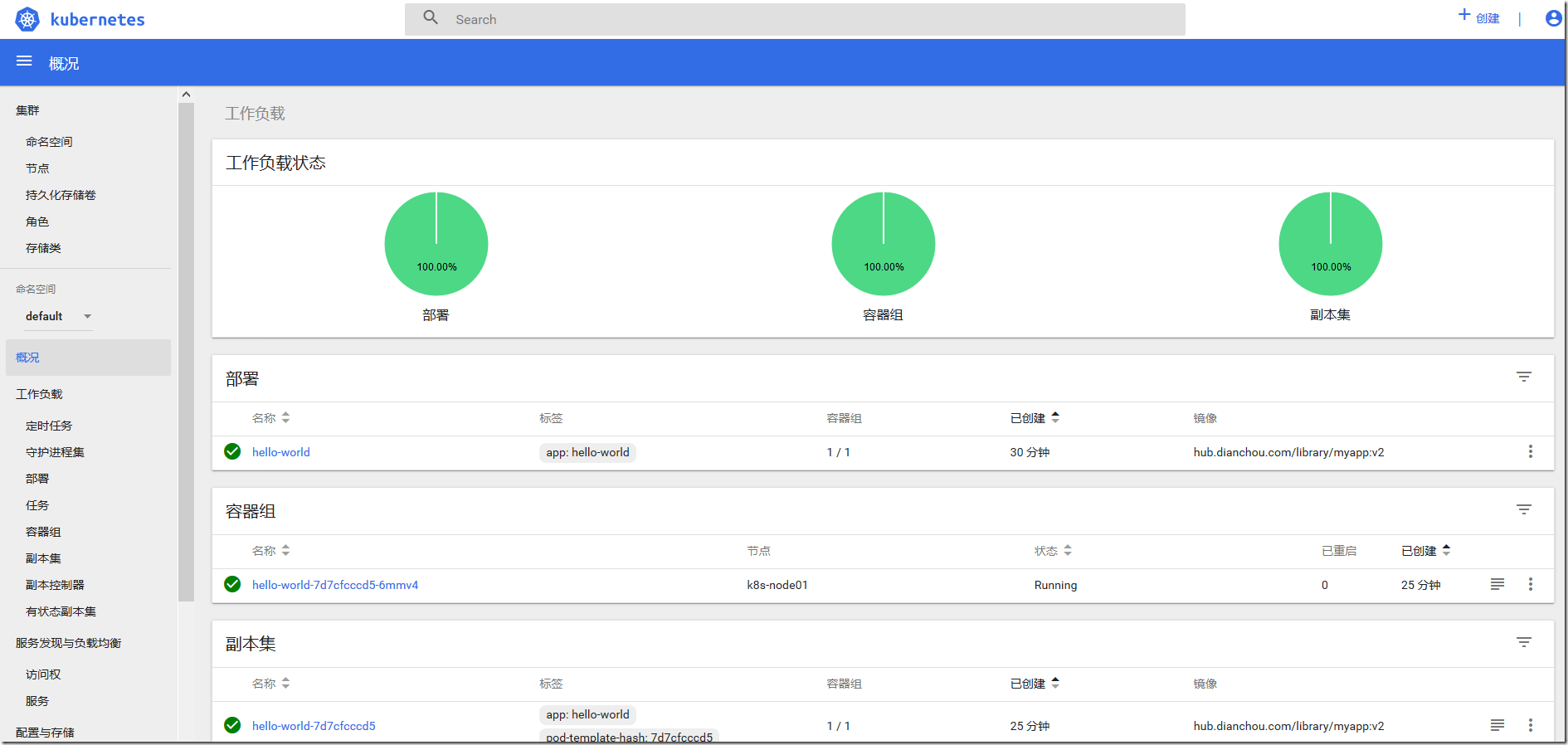

# Values in values.yaml can be overridden when deploying a release with --values YAML_FILE_PATH or --set key1=value1,key2=value2

# Install with a specific image tag

helm install --set image.tag='latest' .

# Upgrade a release (here named test) from the chart in the current directory

helm upgrade -f values.yaml test .

-------------------------------------------------------------------

[root@k8s-master01 hello-world]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hello-world NodePort 10.108.3.115 <none> 80:31896/TCP 3m19s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 4d2h

[root@k8s-master01 hello-world]# curl 10.0.0.11:31896

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

# Upgrade

[root@k8s-master01 hello-world]# helm ls

NAME REVISION UPDATED STATUS CHART APP VERSION NAMESPACE

icy-zebu 1 Thu Feb 6 16:15:35 2020 DEPLOYED hello-world-1.0.0 default

[root@k8s-master01 hello-world]# helm upgrade icy-zebu --set image.tag='v2' .

[root@k8s-master01 hello-world]# curl 10.0.0.11:31896

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
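The --values route mentioned above can be sketched as follows. This is a minimal example, assuming the release is still named icy-zebu and the chart sits in the current directory; the file name values-v2.yaml is hypothetical. Only the keys present in the override file replace the defaults from values.yaml, so image.repository is left alone.

```shell
# Hypothetical override file: it changes image.tag and nothing else.
cat > values-v2.yaml <<'EOF'
image:
  tag: 'v2'
EOF

# Preview the upgrade without touching the cluster (needs a Helm v2 client and a reachable Tiller).
if command -v helm >/dev/null 2>&1; then
  helm upgrade icy-zebu . -f values-v2.yaml --dry-run --debug \
    || echo "dry run failed; check that Tiller is reachable" >&2
fi
```

Dropping --dry-run --debug performs the actual upgrade. Keeping overrides in a file rather than in --set flags makes them easier to review and to keep under version control.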

1.5 Helm command summary

# List deployed releases

$ helm ls

# Query the status of a specific release

$ helm status RELEASE_NAME

# Remove all Kubernetes resources associated with the release

$ helm delete cautious-shrimp

# Roll back: helm rollback RELEASE_NAME REVISION_NUMBER

$ helm rollback cautious-shrimp 1

# Use helm delete --purge RELEASE_NAME to remove all Kubernetes resources associated with the release, along with every record of the release

$ helm delete --purge cautious-shrimp

$ helm ls --deleted

# Because the manifests are generated dynamically from templates, it is very useful to preview the result first.

# Use --dry-run --debug to print the generated manifests without deploying them

$ helm install . --dry-run --debug --set image.tag=latest

2. Installing the dashboard

# Update the Helm repositories

[root@k8s-master01 dashboard]# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Skip local chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈ Happy Helming!⎈

# Download the dashboard chart

[root@k8s-master01 dashboard]# helm fetch stable/kubernetes-dashboard

[root@k8s-master01 dashboard]# ls

kubernetes-dashboard-1.10.1.tgz

[root@k8s-master01 dashboard]# tar xf kubernetes-dashboard-1.10.1.tgz

[root@k8s-master01 dashboard]# ls

kubernetes-dashboard kubernetes-dashboard-1.10.1.tgz

[root@k8s-master01 dashboard]# cd kubernetes-dashboard/

[root@k8s-master01 kubernetes-dashboard]# ls

Chart.yaml README.md templates values.yaml

# Create kubernetes-dashboard.yaml

[root@k8s-master01 kubernetes-dashboard]# cat kubernetes-dashboard.yaml

image:
  repository: k8s.gcr.io/kubernetes-dashboard-amd64
  tag: v1.10.1
ingress:
  enabled: true
  hosts:
    - k8s.frognew.com
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
  tls:
    - secretName: frognew-com-tls-secret
      hosts:
        - k8s.frognew.com
rbac:
  clusterAdminRole: true

# Install with Helm

[root@k8s-master01 kubernetes-dashboard]# helm install -n kubernetes-dashboard --namespace kube-system -f kubernetes-dashboard.yaml .

# Change the Service type to NodePort so it can be reached from outside the cluster

[root@k8s-master01 kubernetes-dashboard]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 4d2h

kubernetes-dashboard ClusterIP 10.110.55.113 <none> 443/TCP 5m10s

tiller-deploy ClusterIP 10.97.87.192 <none> 44134/TCP 114m

[root@k8s-master01 kubernetes-dashboard]# kubectl edit svc kubernetes-dashboard -n kube-system

service/kubernetes-dashboard edited # changed type to NodePort

[root@k8s-master01 kubernetes-dashboard]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 4d2h

kubernetes-dashboard NodePort 10.110.55.113 <none> 443:31147/TCP 6m54s

tiller-deploy ClusterIP 10.97.87.192 <none> 44134/TCP 116m
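The interactive kubectl edit step can also be done non-interactively. The following is a minimal sketch, assuming the Service is named kubernetes-dashboard in kube-system as in the listing above; kubectl patch with a merge patch changes only the type field and lets Kubernetes pick the node port.

```shell
# Patch body: switch the Service type and leave every other field untouched.
PATCH='{"spec":{"type":"NodePort"}}'

if command -v kubectl >/dev/null 2>&1; then
  kubectl -n kube-system patch svc kubernetes-dashboard -p "$PATCH" \
    || echo "patch failed; check cluster access" >&2
  # Print the node port that was assigned to the first Service port.
  kubectl -n kube-system get svc kubernetes-dashboard \
    -o jsonpath='{.spec.ports[0].nodePort}' || true
fi
```

A patch is easier to script and repeat than an editor session, which helps when the same setup is rebuilt on another cluster.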

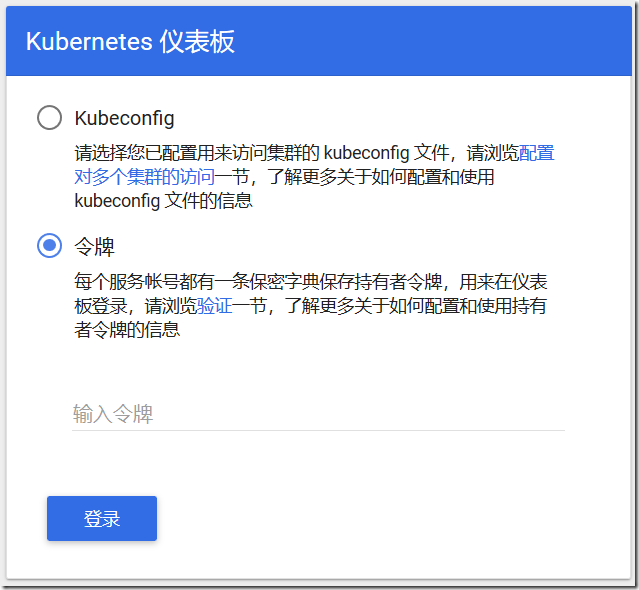

# Access in a browser

https://10.0.0.11:31147

Look up the token and paste it to log in:

[root@k8s-master01 kubernetes-dashboard]# kubectl -n kube-system get secret | grep kubernetes-dashboard-token

kubernetes-dashboard-token-kcn7d kubernetes.io/service-account-token 3 11m

[root@k8s-master01 kubernetes-dashboard]# kubectl describe secret kubernetes-dashboard-token-kcn7d -n kube-system

Name: kubernetes-dashboard-token-kcn7d

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard

kubernetes.io/service-account.uid: 261d33a1-ab30-47fc-a759-4f0402ccf33d

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1rY243ZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjI2MWQzM2ExLWFiMzAtNDdmYy1hNzU5LTRmMDQwMmNjZjMzZCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.IJTrscaOCfoUMQ4k_fDoVaGoaSVwhR7kmtmAT1ej36ABx7UQwYiYN4pur36l_nSwAIsAlF38hivYX_bp-wf13VWTl9_eospOFSd2lTnvPZjjgQQCpf-voZfpS4P4hTntDaPVQ_cql_2xs1uX4VaL8LpLsVZlrL_Y1MRjvEsCq-Od_ChD4jKA0xMfNUNr8HTN3cTmijYSNGJ_5FkkezRb00NGs0S1ANPyoFb8KbaqmyP9ZF_rVxl6tuolEUGkYNQ6AUJstmcoxnF5Dt5LyE6dsyT6XNDH9GvmCrDV6NiXbxrZtlVFWwgpORTvyN12d-UeNdSc8JEq2yrFB7KiJ8Xwkw

3. Installing Prometheus

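Prometheus GitHub repository: https://github.com/coreos/kube-prometheus

3.1 Components

1) MetricServer: an aggregator of resource usage data for the Kubernetes cluster. It collects data for consumers inside the cluster such as kubectl, HPA, and the scheduler.

2) PrometheusOperator: deploys and manages Prometheus, a system monitoring and alerting toolkit that stores the monitoring data.

3) NodeExporter: exposes the key metrics describing the state of each node.

4) KubeStateMetrics: collects data about the resource objects in the Kubernetes cluster, on which alerting rules can be built.

5) Prometheus: collects data from the apiserver, scheduler, controller-manager, and kubelet using a pull model over HTTP.

6) Grafana: a platform for visualizing statistics and monitoring data.

3.2 Build log

1) Download the configuration files

[root@k8s-master01 prometheus]# git clone https://github.com/coreos/kube-prometheus.git

[root@k8s-master01 prometheus]# cd kube-prometheus/manifests/

2) Edit grafana-service.yaml to expose Grafana through a NodePort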

[root@k8s-master01 manifests]# vim grafana-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: grafana
  name: grafana
  namespace: monitoring
spec:
  type: NodePort # added
  ports:
    - name: http
      port: 3000
      targetPort: http
      nodePort: 30100 # added
  selector:
    app: grafana

3) Edit prometheus-service.yaml and switch it to NodePort

[root@k8s-master01 manifests]# vim prometheus-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    prometheus: k8s
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort # added
  ports:
    - name: web
      port: 9090
      targetPort: web
      nodePort: 30200 # added
  selector:
    app: prometheus
    prometheus: k8s
  sessionAffinity: ClientIP

4) Edit alertmanager-service.yaml and switch it to NodePort

[root@k8s-master01 manifests]# vim alertmanager-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    alertmanager: main
  name: alertmanager-main
  namespace: monitoring
spec:
  type: NodePort # added
  ports:
    - name: web
      port: 9093
      targetPort: web
      nodePort: 30300 # added
  selector:
    alertmanager: main
    app: alertmanager
  sessionAffinity: ClientIP

5) Run the pods

[root@k8s-master01 manifests]# pwd

/root/k8s/prometheus/kube-prometheus/manifests

[root@k8s-master01 manifests]# kubectl apply -f . # run it more than once; the first pass can fail before the CRDs are registered

[root@k8s-master01 manifests]# kubectl apply -f .

[root@k8s-master01 manifests]# kubectl get pod -n monitoring

NAME READY STATUS RESTARTS AGE

alertmanager-main-0 2/2 Running 0 51s

alertmanager-main-1 2/2 Running 0 43s

alertmanager-main-2 2/2 Running 0 35s

grafana-7dc5f8f9f6-79zc8 1/1 Running 0 64s

kube-state-metrics-5cbd67455c-7s7qr 4/4 Running 0 31s

node-exporter-gbwqq 2/2 Running 0 64s

node-exporter-n4rvn 2/2 Running 0 64s

node-exporter-r894g 2/2 Running 0 64s

prometheus-adapter-668748ddbd-t8wk6 1/1 Running 0 64s

prometheus-k8s-0 3/3 Running 1 46s

prometheus-k8s-1 3/3 Running 1 46s

prometheus-operator-7447bf4dcb-tf4jc 1/1 Running 0 65s

[root@k8s-master01 manifests]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master01 196m 9% 1174Mi 62%

k8s-node01 138m 13% 885Mi 47%

k8s-node02 104m 10% 989Mi 52%

[root@k8s-master01 manifests]# kubectl top pod

NAME CPU(cores) MEMORY(bytes)

hello-world-7d7cfcccd5-6mmv4 0m 1Mi

3.3 Testing access to Prometheus

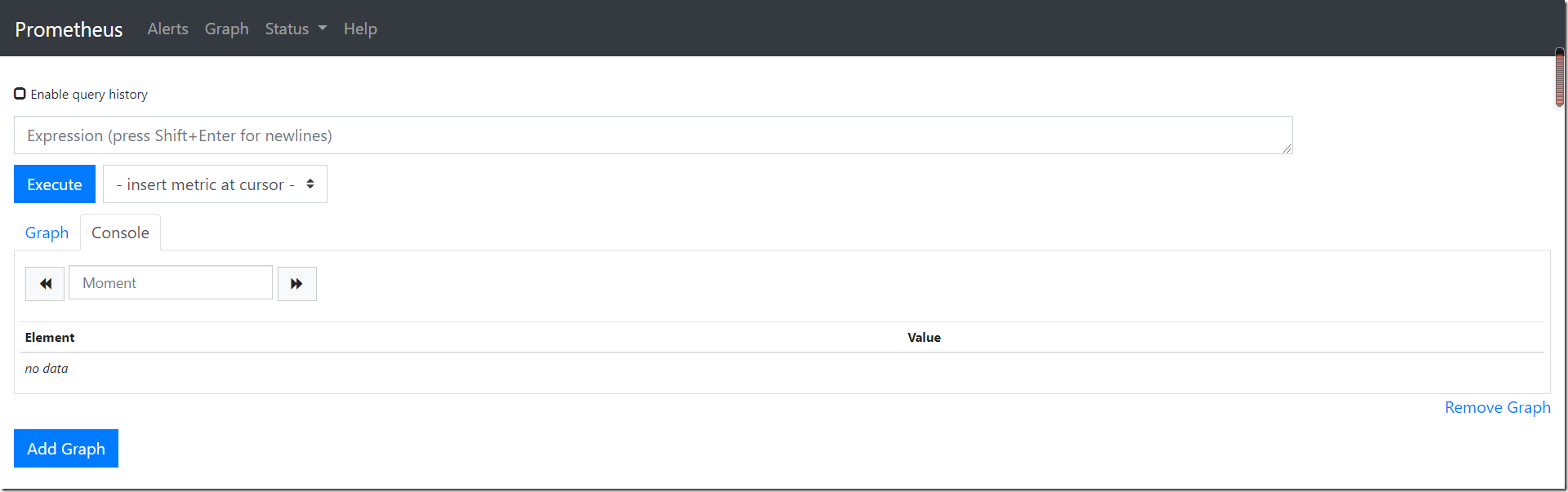

Prometheus is exposed on NodePort 30200; open http://MasterIP:30200

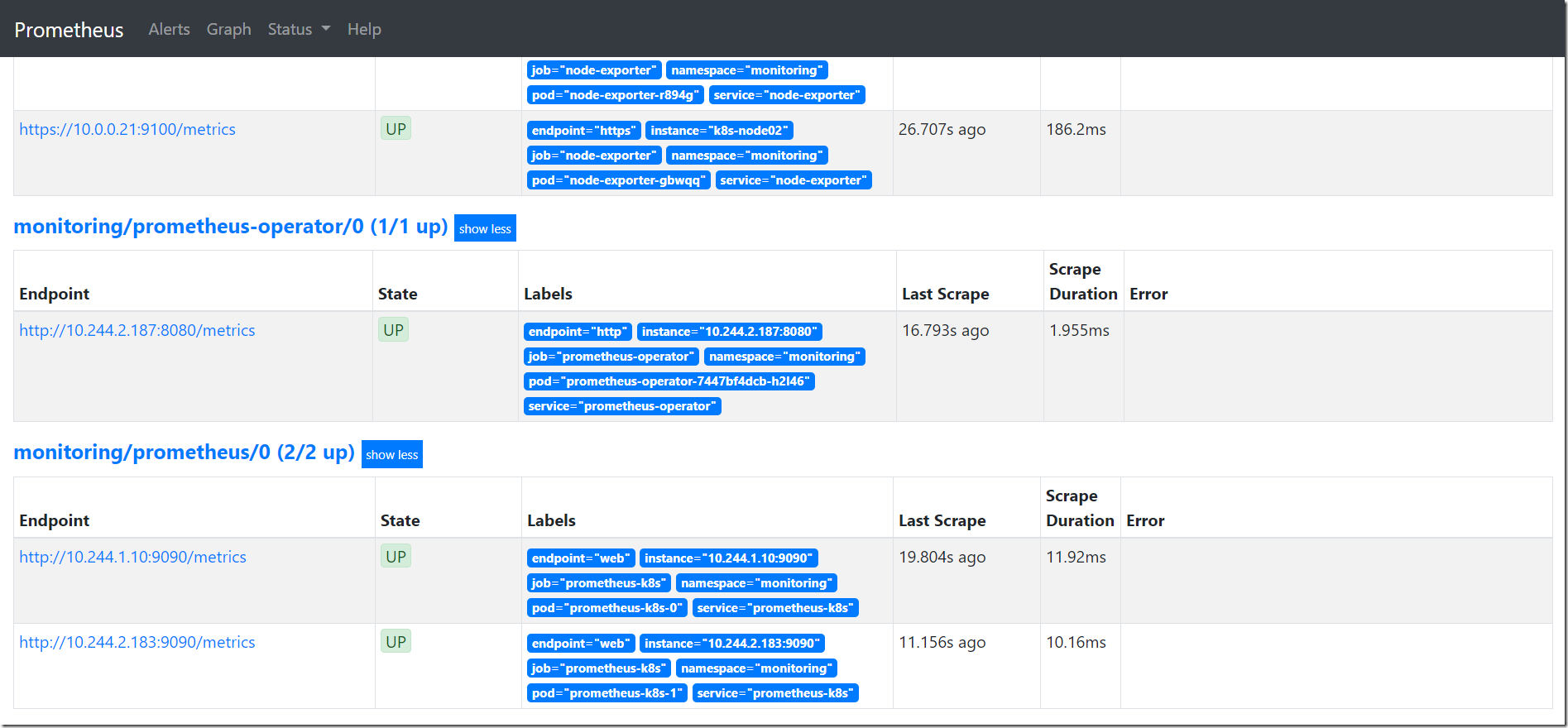

Opening http://MasterIP:30200/targets shows that Prometheus has successfully connected to the Kubernetes apiserver

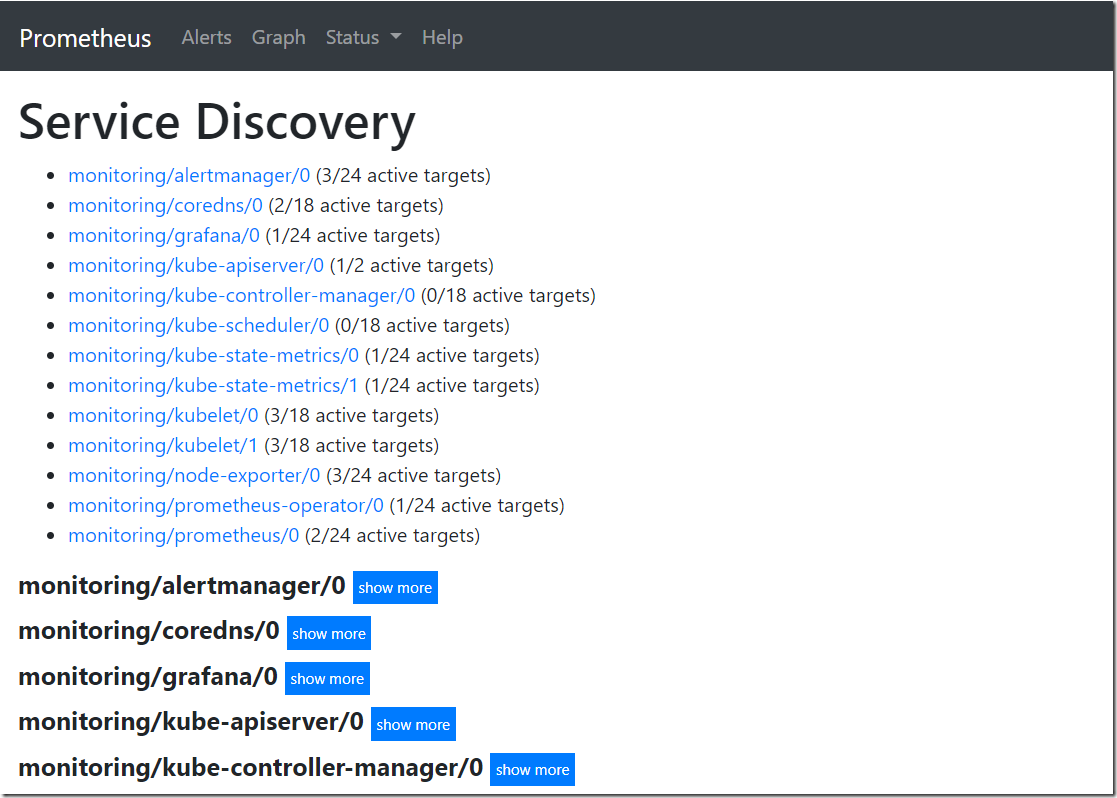

View service discovery

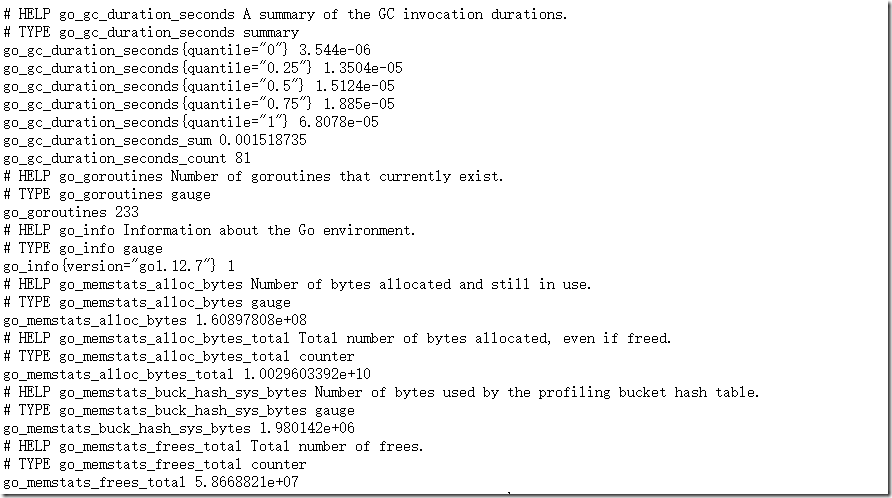

Prometheus's own metrics:

http://10.0.0.11:30200/metrics

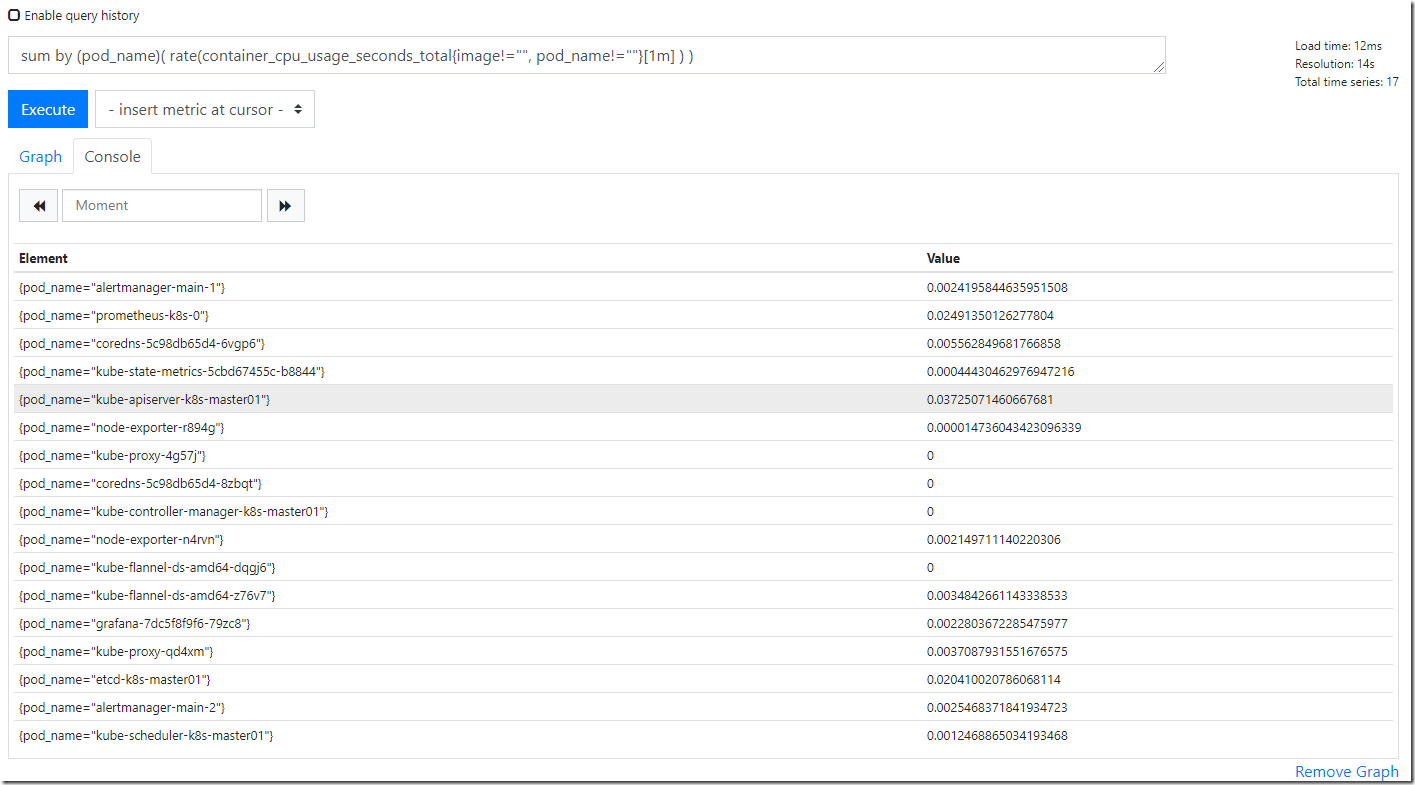

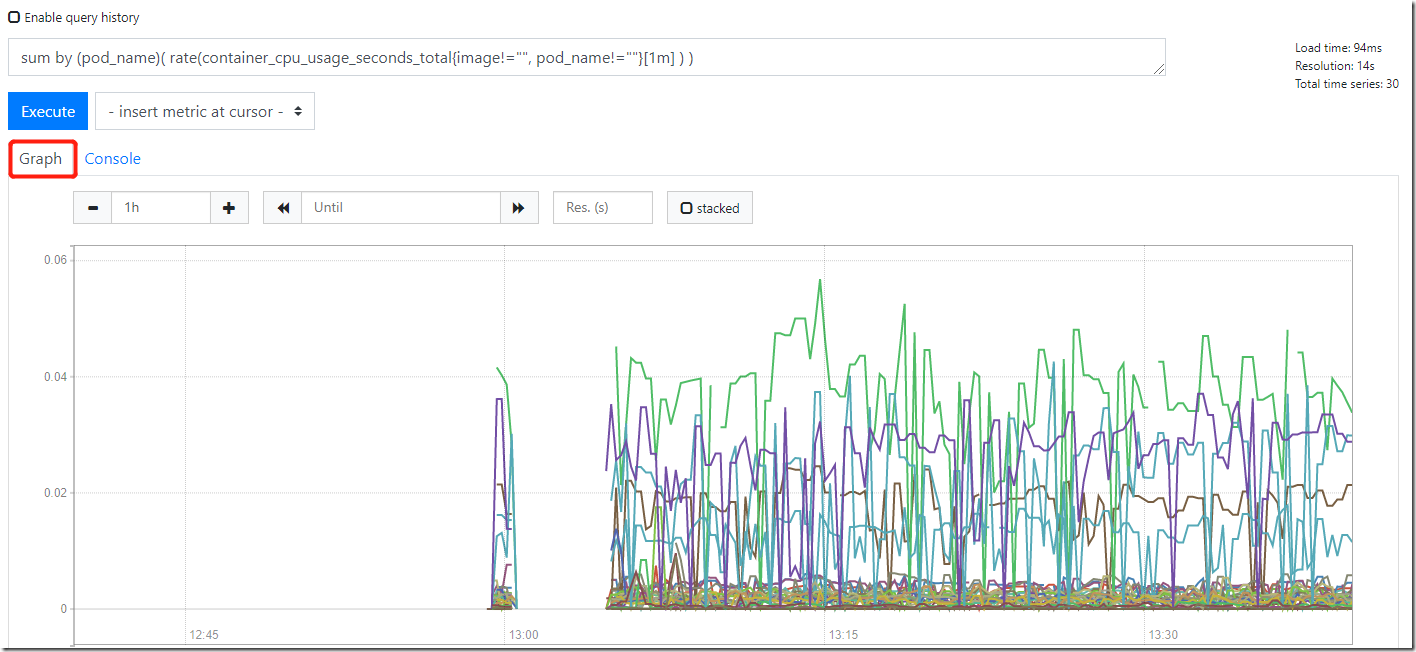

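The Prometheus web UI supports basic queries, such as the CPU usage of each pod in the Kubernetes cluster. The query is:

sum by (pod_name)( rate(container_cpu_usage_seconds_total{image!="", pod_name!=""}[1m] ) )

3.4 Testing access to Grafana

http://10.0.0.11:30100/login, user admin, password admin (the default password must be changed on first login)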

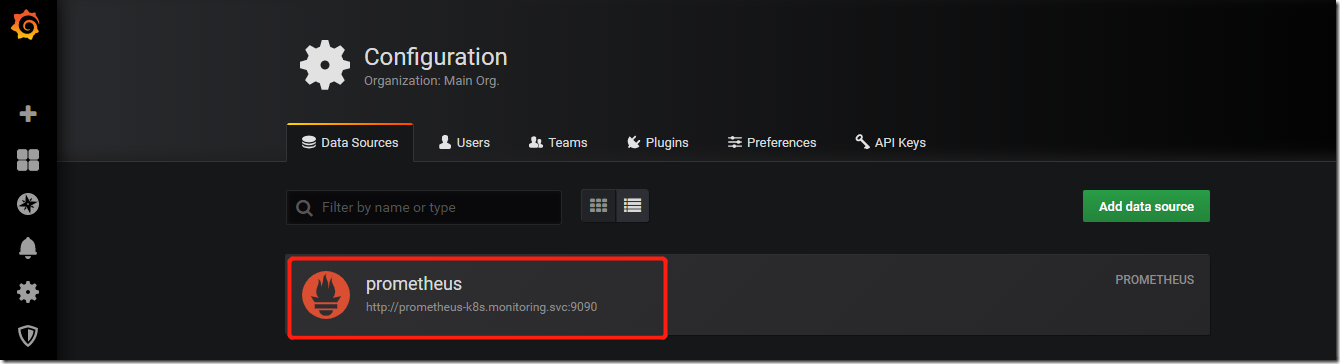

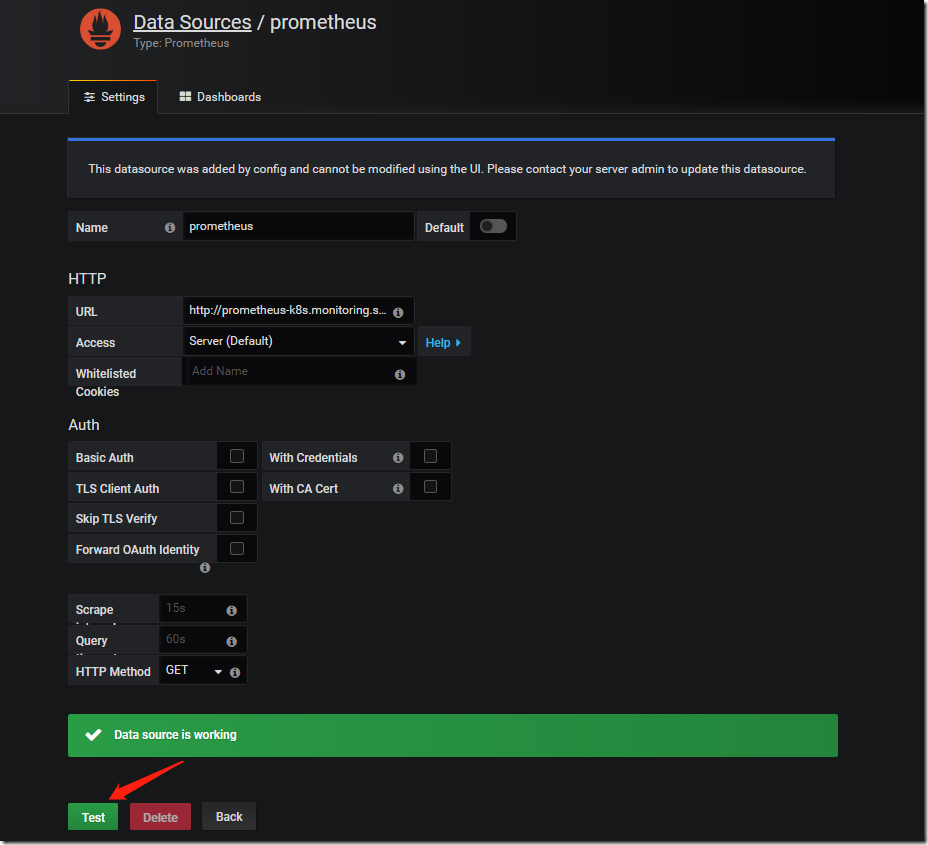

Add a data source: one is already configured by default

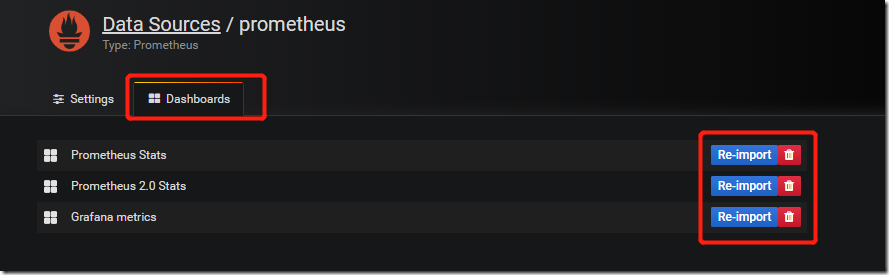

Import a dashboard template:

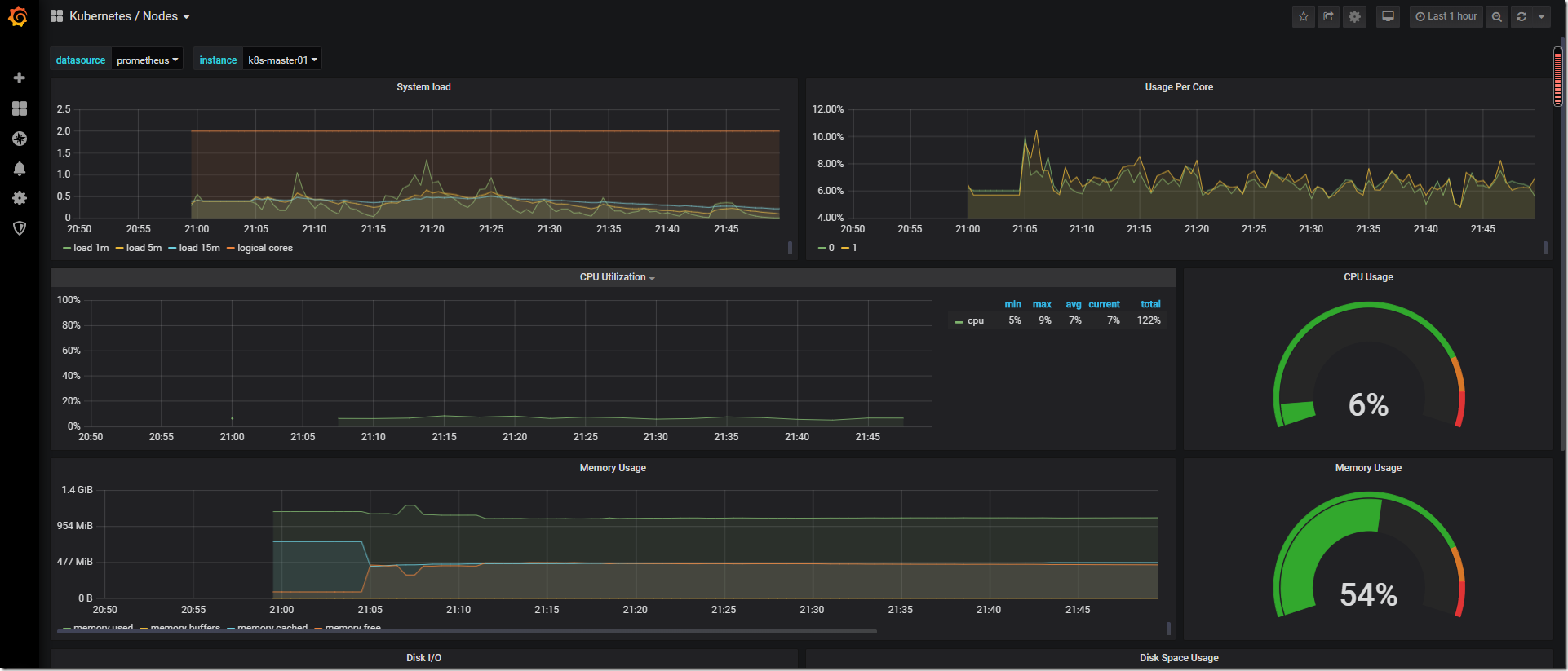

Check the node status:

4. HPA (Horizontal Pod Autoscaling)

Horizontal Pod Autoscaling automatically scales the number of pods in a Replication Controller, Deployment, or Replica Set based on CPU utilization

# Create the pod

[root@k8s-master01 ~]# kubectl run php-apache --image=gcr.io/google_containers/hpa-example:latest --requests=cpu=200m --expose --port=80

[root@k8s-master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

php-apache-59546868d4-s5xsx 1/1 Running 0 4s

[root@k8s-master01 ~]# kubectl top pod php-apache-59546868d4-s5xsx

NAME CPU(cores) MEMORY(bytes)

php-apache-59546868d4-s5xsx 0m 6Mi

# Create the HPA controller

[root@k8s-master01 ~]# kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10

horizontalpodautoscaler.autoscaling/php-apache autoscaled

[root@k8s-master01 ~]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache <unknown>/50% 1 10 0 13s

[root@k8s-master01 ~]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache 0%/50% 1 10 1 5m7s

# Generate load and watch the replica count

[root@k8s-master01 ~]# while true; do wget -q -O- http://10.244.1.24/; done

OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!

[root@k8s-master01 ~]# kubectl get hpa -w

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache 200%/50% 1 10 1 32m

php-apache Deployment/php-apache 200%/50% 1 10 4 32m

php-apache Deployment/php-apache 442%/50% 1 10 4 32m

php-apache Deployment/php-apache 442%/50% 1 10 8 33m

...

[root@k8s-master01 ~]# kubectl get pod -o wide # the machine is underpowered, so the effect is not very pronounced
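The replica counts in the watch output can be reproduced by hand. The documented HPA rule is desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization). The sketch below also assumes that the controller of this Kubernetes generation caps each scale-up step at max(2 × currentReplicas, 4); that cap is my reading of the controller's behavior, not something the original text states. The function name desired_replicas is hypothetical, and the controller's tolerance band around the target is left out.

```shell
# desired_replicas CURRENT CURRENT_UTIL TARGET_UTIL MIN MAX
# Utilization values are integer percentages of the pod's CPU request.
desired_replicas() {
  hpa_cur=$1; hpa_util=$2; hpa_target=$3; hpa_min=$4; hpa_max=$5

  # ceil(current * utilization / target) using integer arithmetic
  hpa_want=$(( (hpa_cur * hpa_util + hpa_target - 1) / hpa_target ))

  # Assumed per-step scale-up cap: max(2 * current, 4)
  hpa_cap=$(( hpa_cur * 2 ))
  if [ "$hpa_cap" -lt 4 ]; then hpa_cap=4; fi
  if [ "$hpa_want" -gt "$hpa_cap" ]; then hpa_want=$hpa_cap; fi

  # Clamp to the --min and --max passed to kubectl autoscale
  if [ "$hpa_want" -lt "$hpa_min" ]; then hpa_want=$hpa_min; fi
  if [ "$hpa_want" -gt "$hpa_max" ]; then hpa_want=$hpa_max; fi

  echo "$hpa_want"
}

desired_replicas 1 200 50 1 10   # 1 replica at 200% against a 50% target
desired_replicas 4 442 50 1 10   # 4 replicas at 442% against a 50% target
```

The two calls print 4 and 8, matching the REPLICAS column in the log. In the second case the raw formula asks for 36 replicas, so the step cap (8) and then --max (10) are what bound the result.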

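5. Pod resource limits

Kubernetes enforces resource limits through cgroups. A cgroup is a set of properties attached to a container that control how the kernel runs its processes. There are cgroups for memory, CPU, and various devices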

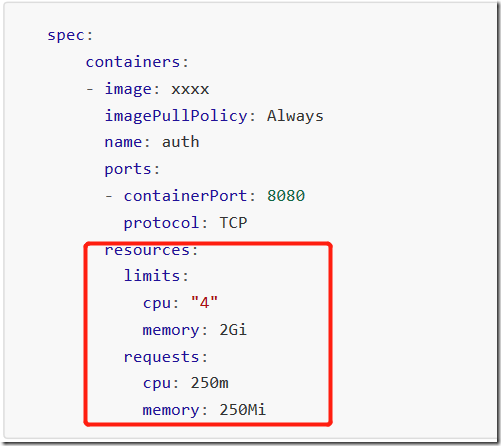

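By default, a pod runs with no CPU or memory limits, which means any pod in the system can consume as much CPU and memory as the node it runs on can provide. In practice, the pods of certain applications are given resource limits through the requests and limits fields under resources

requests is the amount of resources to allocate, and limits is the maximum the container may use. Think of them simply as the initial value and the maximum value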

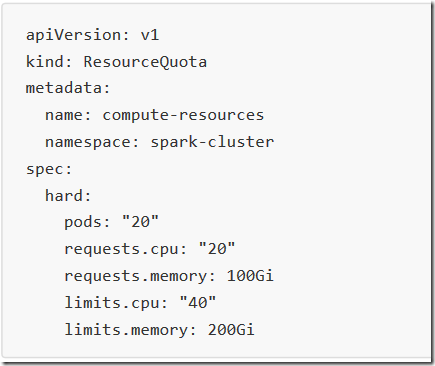
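The figure is not reproduced as text here, so the following is only a sketch of what a requests/limits block can look like, not necessarily what the figure shows. The pod name resource-demo, the file name, and all of the numbers are hypothetical; the image is the one used in the hello-world chart earlier.

```shell
# Write a pod manifest whose container requests 250m CPU / 64Mi memory
# and is capped at 500m CPU / 128Mi memory.
cat > pod-resources.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: resource-demo
spec:
  containers:
    - name: app
      image: hub.dianchou.com/library/myapp:v1
      resources:
        requests:
          cpu: 250m
          memory: 64Mi
        limits:
          cpu: 500m
          memory: 128Mi
EOF

# Apply it with: kubectl apply -f pod-resources.yaml
```

The scheduler places the pod using the requests values, while the limits values are what the cgroup settings enforce at runtime.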

5.1 Resource limits - namespaces

1) Compute resource quotas

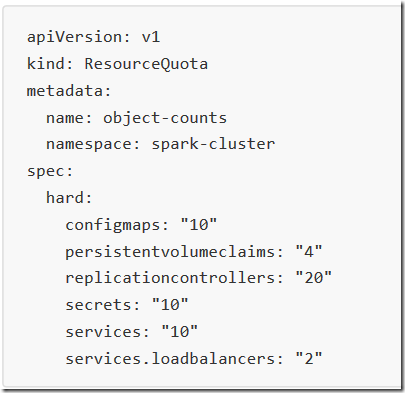
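As a hedged illustration of a compute resource quota (the figure's exact contents are not available as text), a ResourceQuota object caps the total requests and limits of all pods in one namespace. The namespace quota-demo, the object name, and every number below are assumptions made for the example.

```shell
# Write a ResourceQuota that bounds aggregate CPU and memory in the namespace.
cat > compute-quota.yaml <<'EOF'
apiVersion: v1
kind: ResourceQuota
metadata:
  name: compute-resources
  namespace: quota-demo
spec:
  hard:
    pods: "20"
    requests.cpu: "20"
    requests.memory: 100Gi
    limits.cpu: "40"
    limits.memory: 200Gi
EOF

# The namespace must exist first: kubectl create namespace quota-demo
# Then apply with: kubectl apply -f compute-quota.yaml
```

Once a quota on requests or limits is active in a namespace, pods that do not declare those values are rejected unless a LimitRange supplies defaults.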

2) Object count quotas

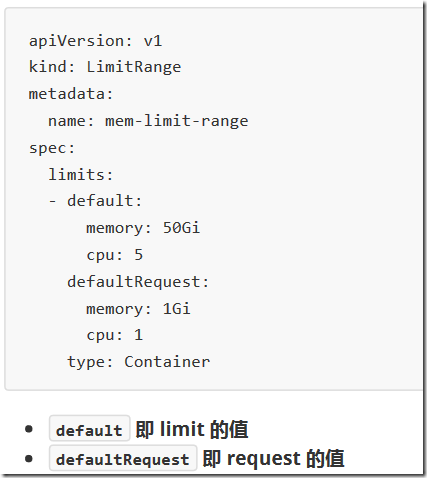
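An object count quota uses the same ResourceQuota kind but limits how many objects of each type may exist in the namespace. This is a sketch under assumptions: the namespace quota-demo, the object name, and the counts are all hypothetical and may differ from the figure.

```shell
# Write a ResourceQuota that limits the number of objects per type.
cat > object-quota.yaml <<'EOF'
apiVersion: v1
kind: ResourceQuota
metadata:
  name: object-counts
  namespace: quota-demo
spec:
  hard:
    configmaps: "10"
    persistentvolumeclaims: "4"
    replicationcontrollers: "20"
    secrets: "10"
    services: "10"
    services.loadbalancers: "2"
EOF

# Apply with: kubectl apply -f object-quota.yaml
# Inspect usage with: kubectl describe quota object-counts -n quota-demo
```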

3) CPU and memory LimitRange
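A LimitRange sets per-container defaults in a namespace, so that containers created without a resources block still get requests and limits. The sketch below is an assumption-laden example: the namespace quota-demo, the object name, and the values are hypothetical.

```shell
# Write a LimitRange that supplies default requests and limits for containers.
cat > limit-range.yaml <<'EOF'
apiVersion: v1
kind: LimitRange
metadata:
  name: mem-cpu-limit-range
  namespace: quota-demo
spec:
  limits:
    - type: Container
      default:
        cpu: 500m
        memory: 512Mi
      defaultRequest:
        cpu: 250m
        memory: 256Mi
EOF

# Apply with: kubectl apply -f limit-range.yaml
```

Here default fills in limits and defaultRequest fills in requests for any container that omits them, which also lets such pods pass a compute ResourceQuota in the same namespace.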