Boosting algorithms

Perceptrons: Early Deep Learning Algorithms

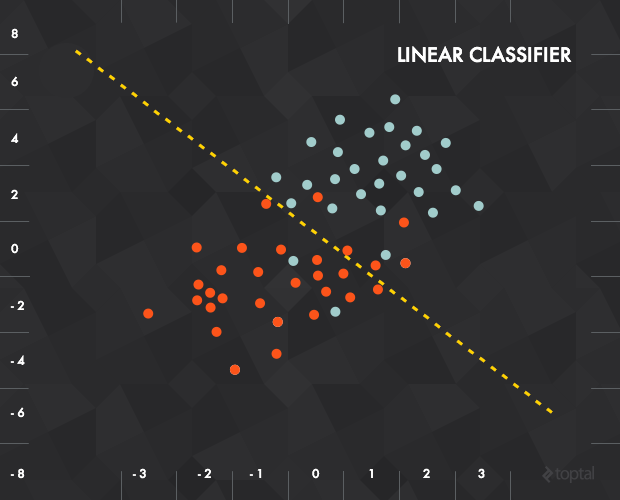

One of the earliest supervised training algorithms is the perceptron, a basic neural network building block.
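Here is a minimal sketch of how a single perceptron can be trained. It uses the classic perceptron learning rule, `w <- w + lr * (target - prediction) * x`, with a step activation. The function names, the learning rate `lr`, and the AND dataset are illustrative assumptions and do not come from the original notes.

```python
# Hypothetical helpers illustrating the perceptron learning rule.

def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum plus bias is positive."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, lr=0.1, epochs=100):
    """Train on (inputs, target) pairs until a full pass makes no errors."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error != 0:
                errors += 1
                # Move the decision boundary toward classifying x correctly.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:
            break
    return weights, bias


AND = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(AND)
print([predict(w, b, x) for x, _ in AND])  # [0, 0, 0, 1]
```

AND is linearly separable, so the loop stops once a full pass makes no mistakes. On a non-separable target such as XOR, it would run out of epochs without ever classifying every sample correctly.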

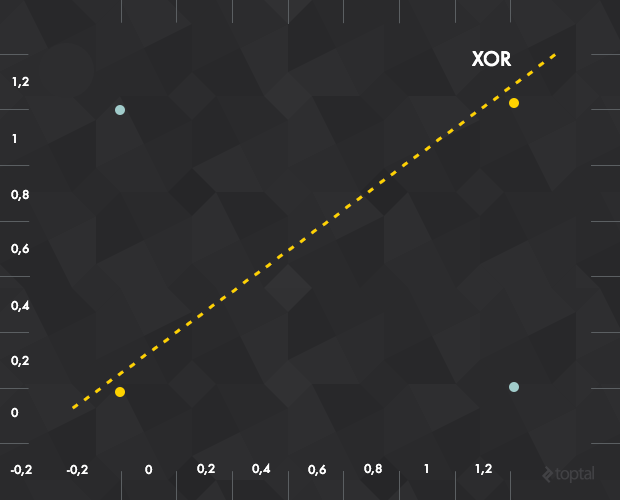

Simple Perceptron Drawbacks

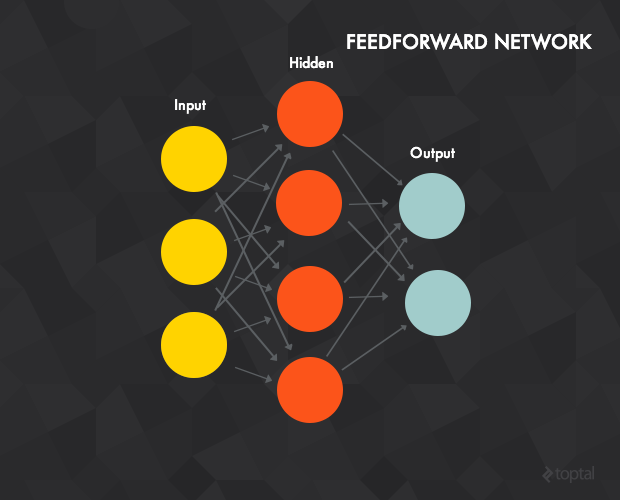

A single perceptron can only learn linearly separable functions, so it cannot represent, for example, XOR. To solve this problem, we need a multilayer perceptron, also known as a feedforward neural network.
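To see why an extra layer helps, here is a minimal sketch of a two-layer network of step perceptrons that computes XOR. The weights are picked by hand for illustration and are not learned values. One hidden unit acts as OR, the other acts as AND, and the output unit fires when OR is true but AND is not.

```python
def step(z):
    """Heaviside step activation used by the classic perceptron."""
    return 1 if z > 0 else 0


def perceptron(weights, bias, x):
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)


def xor_mlp(x):
    # Hidden layer: hand-picked weights (illustrative, not trained).
    h_or = perceptron([1, 1], -0.5, x)   # fires if at least one input is 1
    h_and = perceptron([1, 1], -1.5, x)  # fires only if both inputs are 1
    # Output layer: "OR and not AND", i.e. exactly one input is 1.
    return perceptron([1, -1], -0.5, [h_or, h_and])


for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, xor_mlp(x))
```

The hidden layer remaps the four inputs into a space where the output unit only has to draw a single straight line.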

Feedforward Neural Networks for Deep Learning

A neural network is really just a composition of perceptrons, connected in different ways and using different activation functions.
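The idea of a network as a composition of perceptron layers maps directly onto function composition. In this minimal Python sketch, `layer` and `compose` are hypothetical helpers. The sigmoid hidden layer and linear output layer are just one assumed choice of activation functions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer(weights, biases, activation):
    """One layer of perceptrons: each row of `weights` is one unit."""
    def apply(x):
        return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(weights, biases)]
    return apply


def compose(*layers):
    """Feed the output of each layer into the next one."""
    def network(x):
        for f in layers:
            x = f(x)
        return x
    return network


hidden = layer([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], sigmoid)
output = layer([[1.0, 1.0]], [0.0], lambda z: z)  # linear output unit
net = compose(hidden, output)
print(net([0.0, 0.0]))  # [1.0], since sigmoid(0) = 0.5 for both hidden units
```

Changing how layers are wired, or which activation each one uses, changes the network without changing the building block.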

Training Perceptrons

The most common deep learning algorithm for the supervised training of multilayer perceptrons is known as backpropagation.
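This is a minimal sketch of backpropagation for a network with one sigmoid hidden layer. The network is trained by full-batch gradient descent on a squared-error loss. The network size, the XOR data, the learning rate, and all function names are illustrative assumptions. The error terms `d2` and `d1` are the chain-rule quantities that backpropagation passes from the output back toward the inputs.

```python
import math
import random

XOR = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(p, x):
    """Return the hidden activations and the scalar output."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(p["W1"], p["b1"])]
    y = sigmoid(sum(w * hj for w, hj in zip(p["W2"], h)) + p["b2"])
    return h, y


def loss(p, data):
    """Mean of 0.5 * (output - target)^2 over the dataset."""
    return sum(0.5 * (forward(p, x)[1] - t) ** 2 for x, t in data) / len(data)


def gradients(p, data):
    """Backpropagation: gradient of `loss` with respect to every parameter."""
    n_hidden = len(p["W2"])
    g = {"W1": [[0.0] * len(row) for row in p["W1"]], "b1": [0.0] * n_hidden,
         "W2": [0.0] * n_hidden, "b2": 0.0}
    for x, t in data:
        h, y = forward(p, x)
        # Output error term dL/dz_out, using sigmoid'(z) = y * (1 - y).
        d2 = (y - t) * y * (1 - y) / len(data)
        g["b2"] += d2
        for j in range(n_hidden):
            g["W2"][j] += d2 * h[j]
            # Hidden error term: propagate d2 back through weight W2[j].
            d1 = d2 * p["W2"][j] * h[j] * (1 - h[j])
            g["b1"][j] += d1
            for i, xi in enumerate(x):
                g["W1"][j][i] += d1 * xi
    return g


def train(p, data, lr=0.5, epochs=2000):
    """Plain full-batch gradient descent using the backpropagated gradients."""
    for _ in range(epochs):
        g = gradients(p, data)
        for j in range(len(p["W2"])):
            p["W2"][j] -= lr * g["W2"][j]
            p["b1"][j] -= lr * g["b1"][j]
            for i in range(len(p["W1"][j])):
                p["W1"][j][i] -= lr * g["W1"][j][i]
        p["b2"] -= lr * g["b2"]
    return p


params = init_params(2, 3)
before = loss(params, XOR)
train(params, XOR)
print(before, loss(params, XOR))
```

A finite-difference check, `(L(w + eps) - L(w - eps)) / (2 * eps)`, is a common way to confirm hand-written gradients like these. With only three hidden units and a single seed, the network is not guaranteed to fit XOR perfectly, so the print only shows that the loss goes down.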

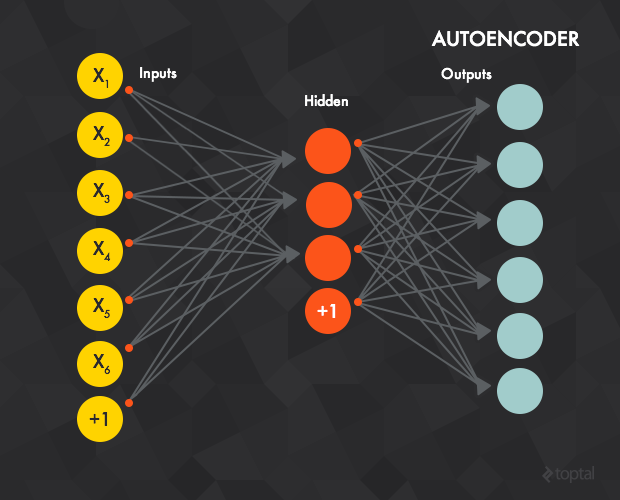

Autoencoders

An autoencoder is typically a feedforward neural network that aims to learn a compressed, distributed representation (encoding) of a dataset.
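This is a minimal linear autoencoder sketch. An encoder maps each 2-D point to a single number (the compressed code), and a decoder maps it back. Both are trained by gradient descent to minimize reconstruction error. The linear layers, the toy line-shaped dataset, and the hyperparameters are assumptions chosen for clarity. Practical autoencoders usually use nonlinear activations and more layers.

```python
# Hypothetical toy data: 2-D points on the line x2 = 2 * x1, so a
# 1-D code is enough to reconstruct them exactly.
DATA = [(t, 2 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]


def encode(e, x):
    """Compress a 2-D point into a single number (the code)."""
    return e[0] * x[0] + e[1] * x[1]


def decode(d, z):
    """Expand the 1-D code back into a 2-D reconstruction."""
    return (d[0] * z, d[1] * z)


def reconstruction_loss(e, d, data):
    total = 0.0
    for x in data:
        xh = decode(d, encode(e, x))
        total += 0.5 * ((xh[0] - x[0]) ** 2 + (xh[1] - x[1]) ** 2)
    return total / len(data)


def train_autoencoder(data, lr=0.05, epochs=2000):
    e = [0.3, -0.1]  # encoder weights (arbitrary small starting values)
    d = [0.2, 0.4]   # decoder weights
    n = len(data)
    for _ in range(epochs):
        ge, gd = [0.0, 0.0], [0.0, 0.0]
        for x in data:
            z = encode(e, x)
            r = [d[0] * z - x[0], d[1] * z - x[1]]  # reconstruction residual
            dz = r[0] * d[0] + r[1] * d[1]           # gradient w.r.t. the code
            for k in range(2):
                gd[k] += r[k] * z / n
                ge[k] += dz * x[k] / n
        e = [ek - lr * gk for ek, gk in zip(e, ge)]
        d = [dk - lr * gk for dk, gk in zip(d, gd)]
    return e, d


e, d = train_autoencoder(DATA)
print(reconstruction_loss(e, d, DATA))  # a value very close to 0
```

The bottleneck (one number per point) forces the network to discover the single direction the data varies along. That direction is the compressed representation.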

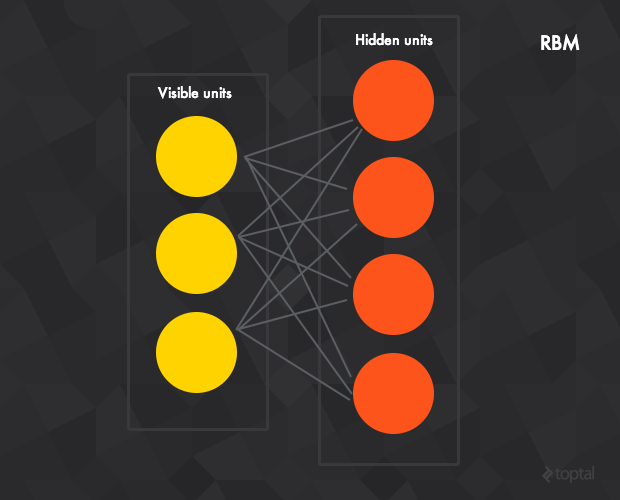

Restricted Boltzmann Machines

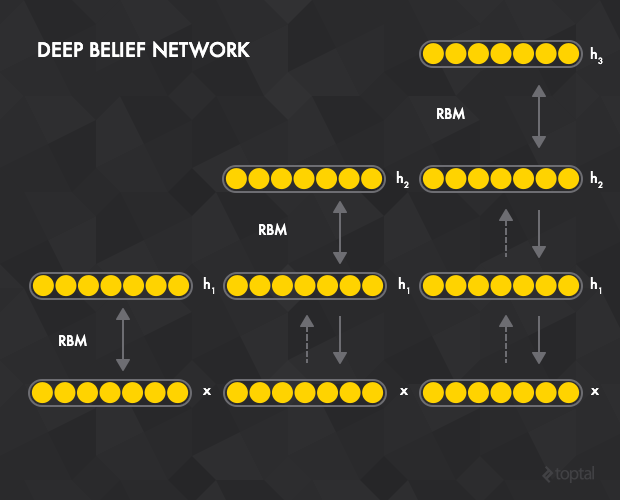

Deep Belief Networks

As with autoencoders, we can also stack restricted Boltzmann machines to create a class of models known as deep belief networks (DBNs).
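This minimal sketch shows greedy layer-wise stacking. It assumes binary restricted Boltzmann machines trained with one step of contrastive divergence (CD-1), a common choice that the notes above do not specify. Each RBM is trained on the hidden-unit probabilities produced by the layer below it. The function names, layer sizes, training patterns, and hyperparameters are all hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def hidden_probs(W, c, v):
    """p(h_j = 1 | v) = sigmoid(c_j + sum_i W[j][i] * v_i)."""
    return [sigmoid(cj + sum(w * vi for w, vi in zip(row, v))) for row, cj in zip(W, c)]


def visible_probs(W, b, h):
    """p(v_i = 1 | h) = sigmoid(b_i + sum_j W[j][i] * h_j)."""
    return [sigmoid(bi + sum(W[j][i] * h[j] for j in range(len(h))))
            for i, bi in enumerate(b)]


def train_rbm(data, n_hidden, lr=0.1, epochs=50, seed=0):
    """Train one binary RBM with CD-1 (a single Gibbs step per update)."""
    rng = random.Random(seed)
    n_visible = len(data[0])
    W = [[rng.gauss(0, 0.01) for _ in range(n_visible)] for _ in range(n_hidden)]
    b = [0.0] * n_visible  # visible biases
    c = [0.0] * n_hidden   # hidden biases
    for _ in range(epochs):
        for v0 in data:
            ph0 = hidden_probs(W, c, v0)
            h0 = [1.0 if rng.random() < p else 0.0 for p in ph0]  # sample hiddens
            pv1 = visible_probs(W, b, h0)                         # reconstruction
            ph1 = hidden_probs(W, c, pv1)
            # Positive phase (data) minus negative phase (reconstruction).
            for j in range(n_hidden):
                for i in range(n_visible):
                    W[j][i] += lr * (ph0[j] * v0[i] - ph1[j] * pv1[i])
                c[j] += lr * (ph0[j] - ph1[j])
            for i in range(n_visible):
                b[i] += lr * (v0[i] - pv1[i])
    return W, b, c


def train_dbn(data, layer_sizes, **kwargs):
    """Greedy stacking: each RBM learns from the layer below's hidden probabilities."""
    layers, x = [], data
    for n_hidden in layer_sizes:
        W, b, c = train_rbm(x, n_hidden, **kwargs)
        layers.append((W, b, c))
        x = [hidden_probs(W, c, v) for v in x]
    return layers, x


PATTERNS = [[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1],
            [1, 1, 0, 0, 0, 0], [0, 0, 0, 0, 1, 1]]
layers, top = train_dbn(PATTERNS, [4, 2], epochs=30)
print(top)  # one 2-unit code per pattern
```

After this unsupervised pretraining, a DBN is often fine-tuned with backpropagation. That step is not shown here.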

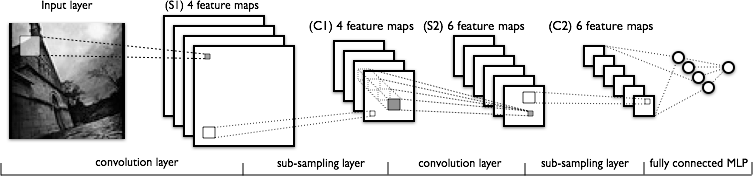

Convolutional Networks

Examples

You can see an example of a convolutional network trained (with backpropagation) on the MNIST data set (grayscale images of handwritten digits).

There's also a nice JavaScript visualization of a similar network here.
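The core operations of a convolutional layer fit in a few lines of Python. A small kernel slides over the image, a ReLU is applied, and max pooling downsamples the result. As in most deep learning libraries, the sliding step is implemented as cross-correlation, without flipping the kernel. The function names, the "valid" padding, and the hand-made edge-detecting kernel are illustrative assumptions. In a trained network such as the MNIST example, the kernel values would be learned with backpropagation.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation: no padding, stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def relu(fmap):
    return [[max(0, v) for v in row] for row in fmap]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling; incomplete edge windows are dropped."""
    return [[max(fmap[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(fmap[0]) - size + 1, size)]
            for r in range(0, len(fmap) - size + 1, size)]


image = [[0, 0, 1, 1]] * 4          # dark left half, bright right half
kernel = [[-1, 1], [-1, 1]]         # responds to dark-to-bright vertical edges
print(relu(conv2d(image, kernel)))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

Because the same kernel is reused at every position, the layer needs only four weights here, however large the image is.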

Unknown Words

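| Term | Pronunciation | Meaning |
|---|---|---|
| BP | | backpropagation |
| quantile | ['kwontail] | 分位数 (quantile) |
| feedforward network | | 前馈神经网络 (feedforward neural network) |
| composition | [kompe'zition] | 组合 (combination) |
| grayscale | | 灰度级 (gray level) |
| perceptron | [pe'septron] | 感知机 (perceptron) |
| resurgence | [ri'se:rgens] | 复苏, 复活 (recovery, revival) |
| acquisition | [ae kwi'zition] | 成果 (result, achievement) |
| composition | [ka:mpe'zition] | 构图 (composition of an image) |
| resume | [ri'zju:m] | 恢复 (to resume); 简历 (résumé, CV) |
| propagation | [prope'geition] | 传播, 传输 (propagation, transmission) |
| sigmoid | | S形的, S形 (S-shaped) |
| logistic | [lo'dgistik] | 逻辑的 (literally "logical"; the usual rendering of logistic, as in 逻辑回归, logistic regression) |
| region proposal | | 候选区域 (candidate region) |
| proposal | [pre'pouzl] | 建议 (proposal, suggestion) |
| FPS | | Frames Per Second; 每秒传输帧数 (frames transmitted per second) |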