(1) Tomcat + Nginx load balancing with Docker Compose

1. Project structure

2. Nginx configuration file

```nginx
upstream tomcats {
    server cat1:8080;  # hostname:port
    server cat2:8080;  # Tomcat listens on 8080 by default
    server cat3:8080;  # round-robin is the default strategy
}

server {
    listen 2420;
    server_name localhost;

    location / {
        proxy_pass http://tomcats;  # forward requests to the tomcats upstream
    }
}
```
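With no weights set, nginx hands requests to the upstream servers in turn. The Python sketch below is a simplified model of that default round-robin, assuming all three backends are healthy and ignoring failures and per-worker state. Real nginx keeps run-time state per worker process unless a `zone` is configured, so the order you observe may differ slightly.

```python
from itertools import cycle

# Upstream servers from the nginx config above (hostname:port).
UPSTREAM = ["cat1:8080", "cat2:8080", "cat3:8080"]


def round_robin(servers, n_requests):
    """Return the server chosen for each of n_requests, in plain round-robin order."""
    picker = cycle(servers)
    return [next(picker) for _ in range(n_requests)]


if __name__ == "__main__":
    for i, server in enumerate(round_robin(UPSTREAM, 6), start=1):
        print(f"request {i} -> {server}")
```

This model predicts that the 10-request test in section 4.1 should cycle through the three backends.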

3. docker-compose.yml

```yaml
version: "3.8"
services:
  nginx:
    image: nginx
    container_name: zngx
    ports:
      - "80:2420"
    volumes:
      - ./nginx/default.conf:/etc/nginx/conf.d/default.conf  # mount the config file
    depends_on:
      - tomcat01
      - tomcat02
      - tomcat03

  tomcat01:
    image: tomcat
    container_name: cat1
    volumes:
      - ./tomcat1:/usr/local/tomcat/webapps/ROOT  # mount the web directory

  tomcat02:
    image: tomcat
    container_name: cat2
    volumes:
      - ./tomcat2:/usr/local/tomcat/webapps/ROOT

  tomcat03:
    image: tomcat
    container_name: cat3
    volumes:
      - ./tomcat3:/usr/local/tomcat/webapps/ROOT
```

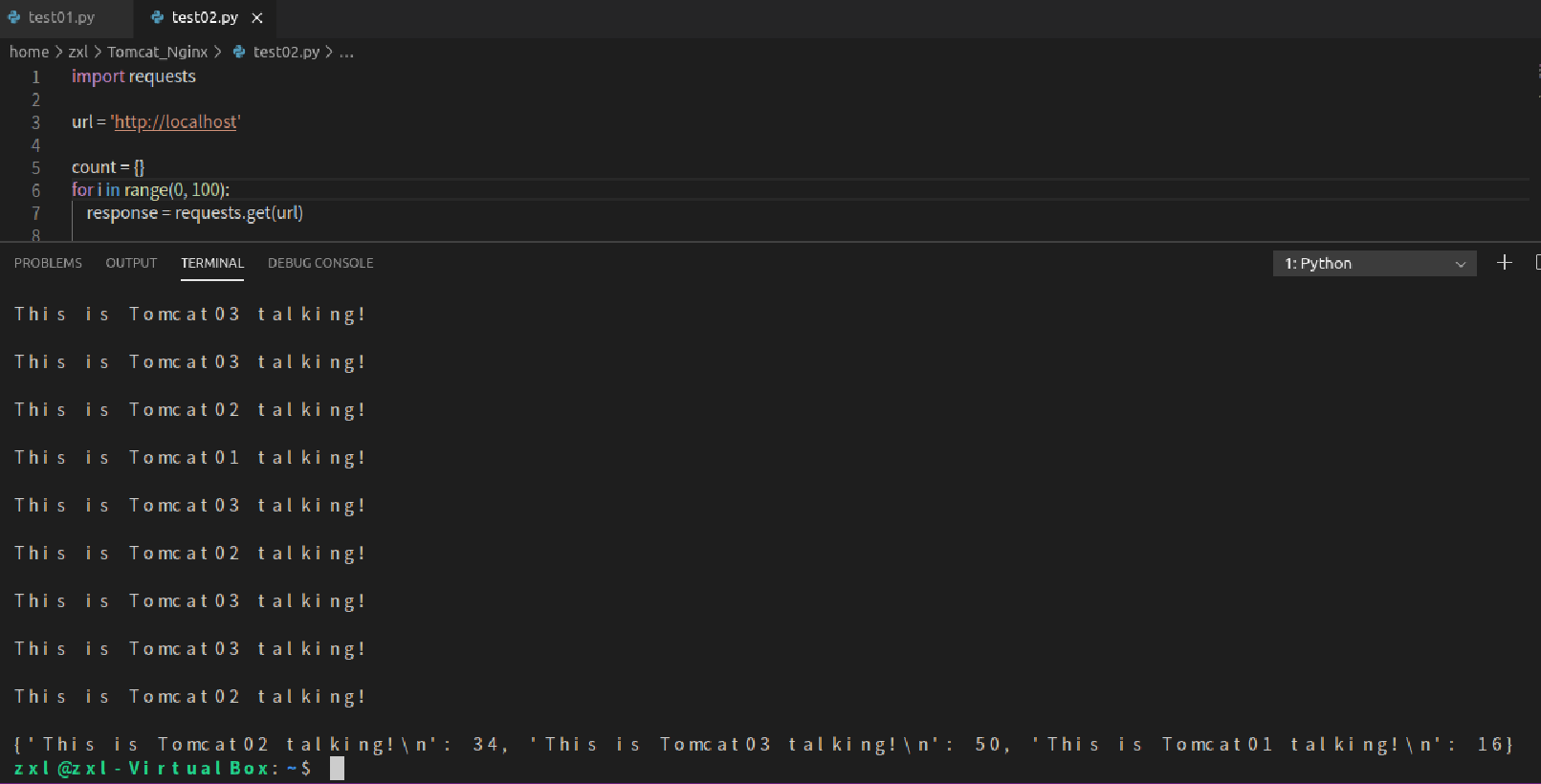
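The `server cat1:8080` lines in default.conf only work if nginx can resolve those names. On the network Compose creates, Docker's DNS resolves both service names and `container_name` values. If a name is wrong, nginx typically refuses to start with a "host not found in upstream" error. Below is a small Python consistency check, a sketch that uses a regex over the config text rather than a real nginx parser. The hard-coded name set is copied by hand from the compose file above.

```python
import re

NGINX_CONF = """
upstream tomcats {
    server cat1:8080;
    server cat2:8080;
    server cat3:8080;
}
server {
    listen 2420;
    server_name localhost;
}
"""

# Names resolvable on the Compose network: service names plus container_name values.
COMPOSE_NAMES = {"nginx", "tomcat01", "tomcat02", "tomcat03", "zngx", "cat1", "cat2", "cat3"}


def upstream_hosts(conf_text):
    """Extract the host part of every 'server host:port' line (server_name and 'server {' are skipped)."""
    return re.findall(r"^\s*server\s+([\w.-]+):\d+", conf_text, flags=re.MULTILINE)


def missing_hosts(conf_text, names):
    """Return upstream hosts that do not match any known Compose name."""
    return [h for h in upstream_hosts(conf_text) if h not in names]


if __name__ == "__main__":
    print(missing_hosts(NGINX_CONF, COMPOSE_NAMES) or "all upstream hosts resolve to Compose names")
```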

4. Load-balancing test

4.1 Round-robin test

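```python
import requests

url = 'http://localhost'  # nginx is published on host port 80
for i in range(10):
    response = requests.get(url)
    # If each Tomcat's ROOT page is different, the output shows which backend answered
    print(response.text)
```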

4.2 Weighted strategy

Change the server weights in default.conf, then restart the containers.

```python
import requests

url = 'http://localhost'
count = {}
for i in range(100):
    response = requests.get(url)
    # Tally how many times each backend's page was returned
    if response.text in count:
        count[response.text] += 1
    else:
        count[response.text] = 1  # the first response from a backend counts as 1
    print(response.text)
print(count)
```


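(2) Deploying a Java web environment with Docker Compose

Requirements:

- Build separate image services for Tomcat, the database, and so on;

- Successfully deploy a Java web program that includes simple database operations;

- Add an nginx reverse-proxy service to the environment above to provide load balancing.

1. docker-compose.yml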

```yaml
version: "3.3"  # higher versions did not seem to be well supported
services:
  mysql:
    image: mysql_sp
    container_name: spring_mysql
    build:
      context: ./mysql
      dockerfile: Dockerfile
    volumes:
      - ./mysql/setup.sh:/mysql/setup.sh
      - ./mysql/schema.sql:/mysql/schema.sql
      - ./mysql/privileges.sql:/mysql/privileges.sql
    ports:
      - "8083:3306"  # convenient for querying from outside
  nginx:
    image: nginx
    container_name: tn_nginx
    ports:
      - "8082:80"
    volumes:
      - ./nginx/default.conf:/etc/nginx/conf.d/default.conf  # mount the config file
    depends_on:
      - tomcat01
      - tomcat02
      - tomcat03
  tomcat01:
    hostname: tomcat01
    image: tomcat
    container_name: tomcat1
    volumes:
      - ./webapps:/usr/local/tomcat/webapps  # mount the web directory
  tomcat02:
    hostname: tomcat02
    image: tomcat
    container_name: tomcat2
    volumes:
      - ./webapps:/usr/local/tomcat/webapps  # mount the web directory
  tomcat03:
    hostname: tomcat03
    image: tomcat
    container_name: tomcat3
    volumes:
      - ./webapps:/usr/local/tomcat/webapps  # mount the web directory
```

2. MySQL Dockerfile

```dockerfile
FROM mysql:5.7
# Allow passwordless login
ENV MYSQL_ALLOW_EMPTY_PASSWORD=yes
# Set the root password
ENV MYSQL_ROOT_PASSWORD=123456
# Command executed when the container starts
CMD ["sh", "/mysql/setup.sh"]
# Expose the MySQL port
EXPOSE 3306
```

3. setup.sh

```shell
#!/bin/bash
set -e

# Show the MySQL service status (for debugging; this line can be removed)
echo "$(service mysql status)"

echo '1. Starting mysql....'
service mysql start
sleep 3
echo "$(service mysql status)"

echo '2. Importing data....'
mysql < /mysql/schema.sql
echo '3. Data import finished....'
sleep 3
echo "$(service mysql status)"

# Add the user spring
mysql < /mysql/privileges.sql
echo 'User spring added'
#sleep 3
echo "$(service mysql status)"
echo 'mysql container started and data imported successfully'

# Keep the container running in the foreground
tail -f /dev/null
```
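setup.sh waits a fixed `sleep 3` after `service mysql start`, which may be too short on a slow machine. A more robust pattern is to poll until the server accepts connections. The Python sketch below shows the idea. It is not part of the original setup: the mysql:5.7 image may not ship Python, so treat it as something to run from a machine or container that can reach the MySQL port. Inside the container itself, a shell loop around `mysqladmin ping` serves the same purpose. A successful TCP connection only shows that mysqld is listening, not that schema.sql has been imported.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Poll until a TCP connection to (host, port) succeeds or `timeout` seconds pass.

    Returns True if the port accepted a connection, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    # Example: from another container on the same Compose network, the service name is "mysql".
    print(wait_for_port("mysql", 3306, timeout=60))
```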

4. schema.sql

```sql
create database `springtest` default character set utf8 collate utf8_general_ci;
use springtest;

DROP TABLE IF EXISTS `_User`;
CREATE TABLE `_User` (
  `userId` int(11) NOT NULL AUTO_INCREMENT,
  `userName` char(10) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `userSex` char(2) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,
  `contactType` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL COMMENT 'Contact type: QQ/TEL',
  `contactDetail` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL COMMENT 'Contact number',
  `openid` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL COMMENT 'Unique WeChat identifier',
  `grade` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL COMMENT 'School year',
  `creditIndex` int(255) NOT NULL DEFAULT 60 COMMENT 'Defaults to 60; increases when a task is completed',
  PRIMARY KEY (`userId`) USING BTREE,
  UNIQUE INDEX `UserName`(`userName`) USING BTREE,
  UNIQUE INDEX `openid`(`openid`) USING BTREE
) ENGINE = InnoDB AUTO_INCREMENT = 182 CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Compact;
```
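The "simple database operations" requirement rests on two properties of this table. `creditIndex` defaults to 60, and `userName` is unique while `openid` may stay NULL for many rows. The Python sketch below demonstrates both against an in-memory SQLite stand-in instead of MySQL, so the column types are simplified and only a subset of columns is kept. The user names `alice` and `bob` are hypothetical sample data. The Java web app itself would use its own MySQL driver.

```python
import sqlite3

# Simplified SQLite stand-in for the MySQL _User table (subset of columns).
SCHEMA = """
CREATE TABLE _User (
    userId      INTEGER PRIMARY KEY AUTOINCREMENT,
    userName    TEXT UNIQUE,
    userSex     TEXT NOT NULL,
    openid      TEXT UNIQUE,
    creditIndex INTEGER NOT NULL DEFAULT 60
);
"""


def demo():
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    insert = "INSERT INTO _User (userName, userSex) VALUES (?, ?)"
    conn.execute(insert, ("alice", "F"))
    conn.execute(insert, ("bob", "M"))  # both rows have openid NULL, which UNIQUE allows
    credit = conn.execute(
        "SELECT creditIndex FROM _User WHERE userName = ?", ("alice",)
    ).fetchone()[0]
    try:
        conn.execute(insert, ("alice", "M"))  # violates the unique userName index
        duplicate_rejected = False
    except sqlite3.IntegrityError:
        duplicate_rejected = True
    rows = conn.execute("SELECT COUNT(*) FROM _User").fetchone()[0]
    conn.close()
    return credit, duplicate_rejected, rows


if __name__ == "__main__":
    print(demo())
```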

5. privileges.sql

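```sql
use mysql;
select host, user from user;
create user spring identified by '123456';
-- Grant all privileges on the springtest database to the new user spring, password 123456:
grant all on springtest.* to spring@'%' identified by '123456' with grant option;
-- Reload the privilege tables (strictly needed only after editing grant tables directly, but harmless here)
flush privileges;
```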

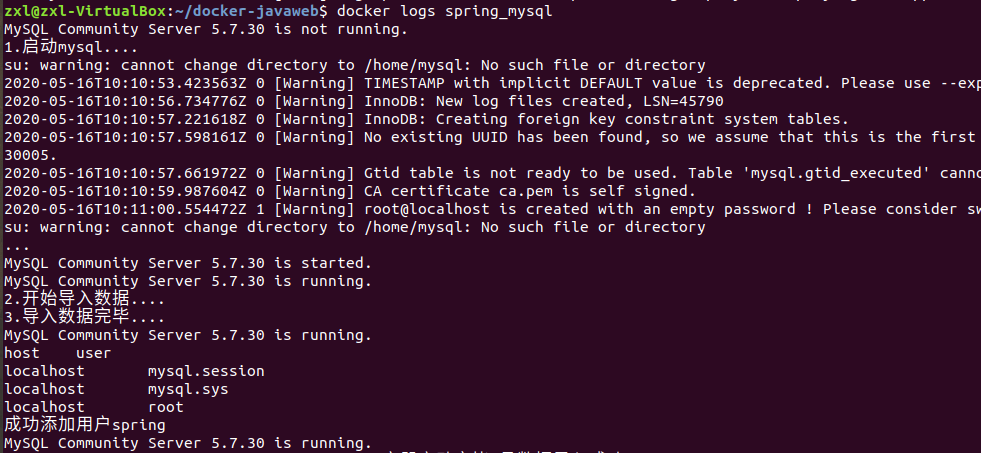

6. Run docker-compose and check the container logs

```shell
docker-compose up -d --build
docker logs spring_mysql
docker logs tomcat1
```

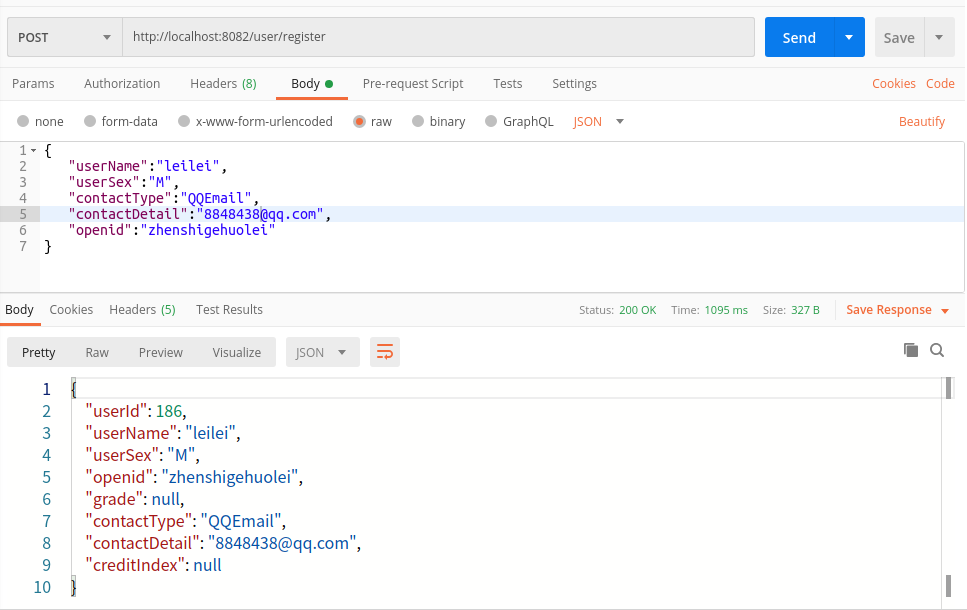

7. API test

8. Verify in the database

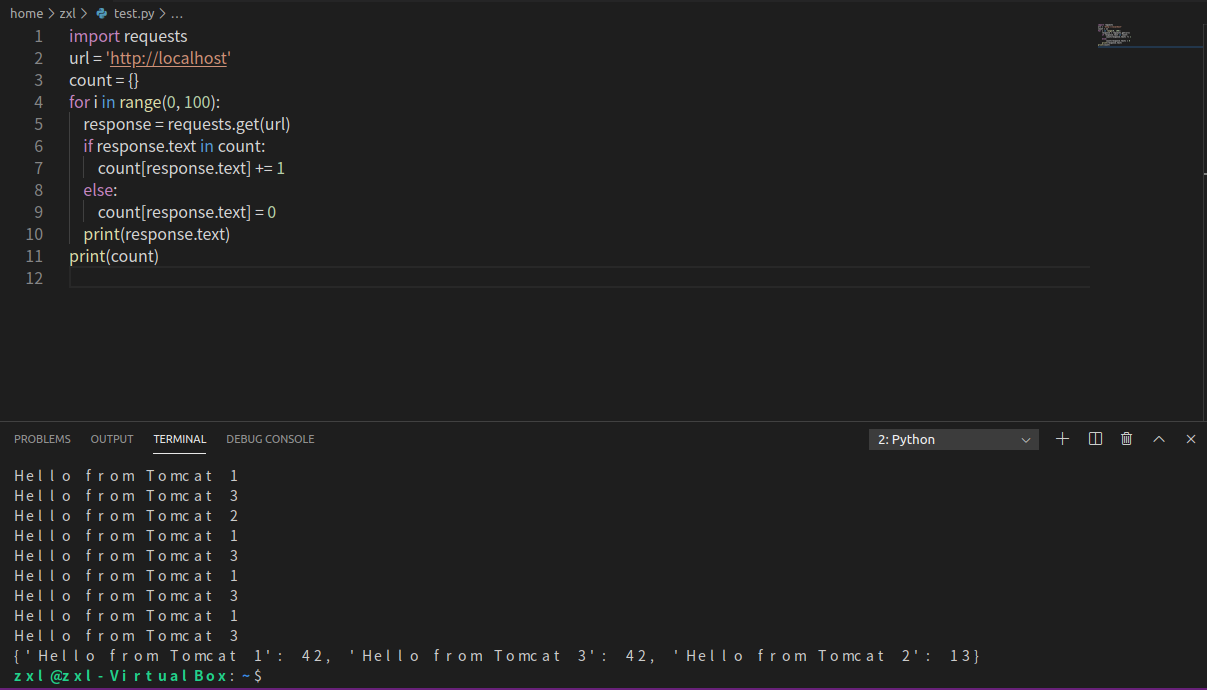

9. Load-balancing configuration test

```nginx
# default.conf
upstream tomcats {
    server tomcat1:8080 weight=3;
    server tomcat2:8080 weight=1;
    server tomcat3:8080 weight=3;
}

server {
    listen 80;
    server_name localhost;

    location / {
        proxy_pass http://tomcats;
    }
}
```
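With weights 3:1:3, nginx's weighted round-robin should send roughly 3/7, 1/7 and 3/7 of requests to tomcat1, tomcat2 and tomcat3. Out of 100 requests that is about 43, 14 and 43. nginx uses a "smooth" weighted round-robin that interleaves picks instead of sending bursts to one server. The Python sketch below models that algorithm, assuming a single worker, all servers up, and no `max_fails` handling.

```python
def smooth_weighted_rr(weights, n_requests):
    """Simulate smooth weighted round-robin (the scheme nginx uses for `weight=`).

    weights: dict mapping server name -> weight, in config order.
    Returns the list of servers picked for n_requests.
    """
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n_requests):
        # Every server gains its weight; the highest running score wins (first one on ties).
        for name, w in weights.items():
            current[name] += w
        best = max(current, key=current.get)
        # The winner pays back the total, so heavier servers win proportionally more often.
        current[best] -= total
        picks.append(best)
    return picks


if __name__ == "__main__":
    w = {"tomcat1:8080": 3, "tomcat2:8080": 1, "tomcat3:8080": 3}
    print(smooth_weighted_rr(w, 7))
```

After every full cycle of `sum(weights)` picks the scores return to zero, so the counts match the weights exactly over each cycle.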


(3) Building a big-data cluster environment with Docker

I. Setting up the Hadoop environment

1. Get the Ubuntu image

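```shell
docker pull ubuntu:20.04   # 20.04 is "focal", matching the mirror list in step 2
mkdir build                # created in /home/zxl, the directory mounted below
sudo docker run -it -v /home/zxl/build:/root/build --name ubuntu ubuntu:20.04
```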

2. Switch the APT mirror inside the container

```shell
# "> file" overwrites the file; use ">> file" to append instead
cat <<EOF > /etc/apt/sources.list
deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse
deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse
deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse
deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse
# deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse
EOF
```
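The mirror list repeats one pattern for four suites (release, -updates, -backports, -security), with `deb-src` lines commented out. The Python sketch below generates the same lines for any codename. This helps if the base image changes, for example to `jammy` for Ubuntu 22.04. The function name and defaults are illustrative, and the rarely used `-proposed` suite is left out.

```python
MIRROR = "http://mirrors.tuna.tsinghua.edu.cn/ubuntu/"
COMPONENTS = "main restricted universe multiverse"


def sources_list(codename="focal", mirror=MIRROR, with_src=False):
    """Build an apt sources.list for an Ubuntu codename.

    deb-src lines are emitted commented out unless with_src is True.
    """
    lines = []
    for suffix in ("", "-updates", "-backports", "-security"):
        suite = codename + suffix
        lines.append(f"deb {mirror} {suite} {COMPONENTS}")
        prefix = "" if with_src else "# "
        lines.append(f"{prefix}deb-src {mirror} {suite} {COMPONENTS}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print it, then redirect into /etc/apt/sources.list as root if it looks right.
    print(sources_list("focal"), end="")
```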

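3. Initialize the container

```shell
apt-get update
apt-get install vim      # install vim
apt-get install ssh      # install sshd
/etc/init.d/ssh start    # run the init script to start the sshd server
# To start sshd automatically, add this line at the end of ~/.bashrc (vim ~/.bashrc):
/etc/init.d/ssh start
```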

4. Passwordless SSH setup

```shell
ssh-keygen -t rsa                   # press Enter three or four times; this also creates ~/.ssh
cd ~/.ssh
cat id_rsa.pub >> authorized_keys
```
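The summary at the end reports `Permission denied` when master SSHes into a slave. One common reason key-based login silently falls back to a password prompt is file permissions; this is not necessarily the step the author missed. With sshd's default `StrictModes yes`, the home directory, `~/.ssh` and `authorized_keys` must not be writable by group or others. The Python sketch below flags those cases. It checks modes only, not ownership.

```python
import os
import stat


def ssh_permission_problems(home):
    """List permission issues that make sshd (StrictModes) ignore authorized_keys."""
    problems = []
    ssh_dir = os.path.join(home, ".ssh")
    keys = os.path.join(ssh_dir, "authorized_keys")
    for path in (home, ssh_dir, keys):
        if not os.path.exists(path):
            problems.append(f"missing: {path}")
            continue
        mode = stat.S_IMODE(os.stat(path).st_mode)
        if mode & (stat.S_IWGRP | stat.S_IWOTH):
            problems.append(f"group/other-writable: {path} ({oct(mode)})")
    return problems


if __name__ == "__main__":
    print(ssh_permission_problems(os.path.expanduser("~")) or "permissions look fine")
```

Typical fixes are `chmod 700 ~/.ssh` and `chmod 600 ~/.ssh/authorized_keys`.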

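5. Install JDK 8 (newer versions reportedly cause dependency problems)

```shell
apt-get install openjdk-8-jdk
# Append these two lines to ~/.bashrc (e.g. with vim ~/.bashrc):
export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/
export PATH=$PATH:$JAVA_HOME/bin
# Then reload it so the changes take effect:
source ~/.bashrc
```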

6. Commit the ubuntu container to create the image ubuntu/jdk8

```shell
sudo docker commit <container-id> ubuntu/jdk8
# Mount ~/build so files are shared with the host
sudo docker run -it -v /home/zxl/build:/root/build --name ubuntu-jdk8 ubuntu/jdk8
```

7. Install Hadoop

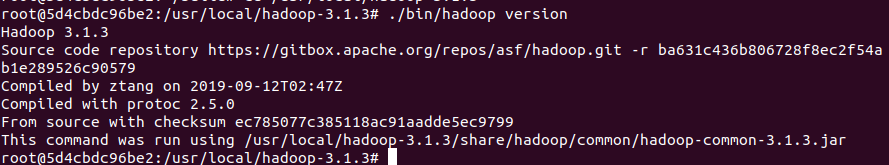

```shell
cd /root/build
tar -zxvf hadoop-3.1.3.tar.gz -C /usr/local
cd /usr/local/hadoop-3.1.3
./bin/hadoop version   # verify the installation
```

II. Configuring the Hadoop cluster

1. hadoop-env.sh

```shell
cd /usr/local/hadoop-3.1.3/etc/hadoop   # directory holding the configuration files
vim hadoop-env.sh
# Add this line anywhere in the file:
export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/
```

2. core-site.xml

```xml
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://master:9000</value>
    </property>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/usr/local/hadoop-3.1.3/tmp</value>
        <description>A base for other temporary directories.</description>
    </property>
</configuration>
```
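All four `*-site.xml` files share the same `<configuration>/<property>/<name>/<value>` shape. The Python sketch below uses the standard library's ElementTree to render such a file from a dict and to read one back, which is handy for checking which keys a file actually sets. It omits the XML declaration and stylesheet line found in Hadoop's shipped templates. `ET.indent` needs Python 3.9 or newer.

```python
import xml.etree.ElementTree as ET


def hadoop_config_xml(props):
    """Render a dict of Hadoop properties as a <configuration> XML string."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    ET.indent(root)  # pretty-print with two-space indentation
    return ET.tostring(root, encoding="unicode")


def read_hadoop_config(xml_text):
    """Parse a *-site.xml string back into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


if __name__ == "__main__":
    core = {
        "fs.defaultFS": "hdfs://master:9000",
        "hadoop.tmp.dir": "file:/usr/local/hadoop-3.1.3/tmp",
    }
    print(hadoop_config_xml(core))
```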

3. hdfs-site.xml

```xml
<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/usr/local/hadoop-3.1.3/tmp/dfs/name</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/usr/local/hadoop-3.1.3/tmp/dfs/data</value>
    </property>
</configuration>
```

4. mapred-site.xml

```xml
<configuration>
    <property>
        <!-- Run MapReduce programs on YARN -->
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
    <property>
        <!-- JobHistory server address, host:port -->
        <name>mapreduce.jobhistory.address</name>
        <value>master:10020</value>
    </property>
    <property>
        <!-- JobHistory web UI address, host:port -->
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>master:19888</value>
    </property>
    <property>
        <!-- Classpath for MapReduce applications -->
        <name>mapreduce.application.classpath</name>
        <value>/usr/local/hadoop-3.1.3/share/hadoop/mapreduce/lib/*,/usr/local/hadoop-3.1.3/share/hadoop/mapreduce/*</value>
    </property>
</configuration>
```

5. yarn-site.xml

```xml
<configuration>
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>master</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.nodemanager.vmem-pmem-ratio</name>
        <value>2.5</value>
    </property>
</configuration>
```
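`yarn.nodemanager.vmem-pmem-ratio` sets how much virtual memory a container may use per unit of physical memory allocated to it. Hadoop's default is 2.1; the config above raises it to 2.5. The short Python calculation below shows the arithmetic. The 1024 MB allocation and 2300 MB usage are made-up figures, and the limit applies only while `yarn.nodemanager.vmem-check-enabled` is on, which is the default.

```python
DEFAULT_RATIO = 2.1     # Hadoop's default yarn.nodemanager.vmem-pmem-ratio
CONFIGURED_RATIO = 2.5  # value set in yarn-site.xml above


def vmem_limit_mb(pmem_mb, ratio):
    """Virtual-memory ceiling for a container: physical allocation times the ratio."""
    return pmem_mb * ratio


def exceeds_vmem(pmem_mb, vmem_used_mb, ratio):
    """True if the container's virtual memory use is over the ceiling (it would be killed)."""
    return vmem_used_mb > vmem_limit_mb(pmem_mb, ratio)


if __name__ == "__main__":
    for ratio in (DEFAULT_RATIO, CONFIGURED_RATIO):
        print(ratio, vmem_limit_mb(1024, ratio), exceeds_vmem(1024, 2300, ratio))
```

With the default ratio, a 1024 MB container is capped at about 2150 MB of virtual memory. At 2.5 the cap becomes 2560 MB, which is why raising the ratio can stop "running beyond virtual memory limits" kills.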

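6. Edit start-dfs.sh and stop-dfs.sh (in sbin/) and add the following variables. For steps 6 and 7, it is best to place them after the function definition near the top of each script.

```shell
HDFS_DATANODE_USER=root
HADOOP_SECURE_DN_USER=hdfs
HDFS_NAMENODE_USER=root
HDFS_SECONDARYNAMENODE_USER=root
```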

7. Edit start-yarn.sh and stop-yarn.sh and add the following variables:

```shell
YARN_RESOURCEMANAGER_USER=root
HADOOP_SECURE_DN_USER=yarn
YARN_NODEMANAGER_USER=root
```

8. Commit the container to get the ubuntu/hadoop image

```shell
sudo docker commit <container-id> ubuntu/hadoop
```

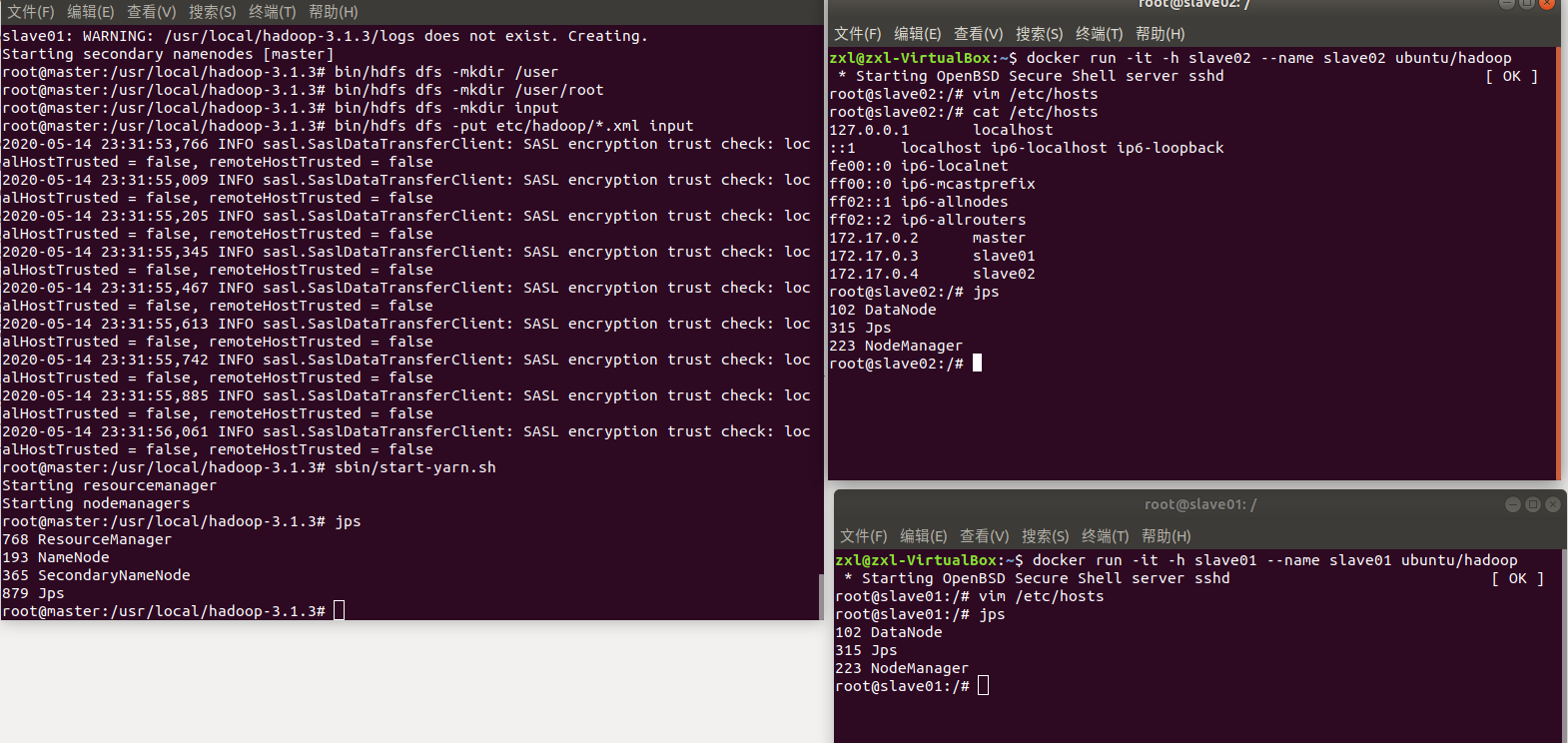

III. Running the Hadoop cluster

1. Open three terminals and start a container from the ubuntu/hadoop image in each

```shell
# Terminal 1
sudo docker run -it -h master --name master ubuntu/hadoop
# Terminal 2
sudo docker run -it -h slave01 --name slave01 ubuntu/hadoop
# Terminal 3
sudo docker run -it -h slave02 --name slave02 ubuntu/hadoop
```

2. Edit /etc/hosts in each container

```
172.17.0.2 master
172.17.0.3 slave01
172.17.0.4 slave02
```
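These addresses assume the three containers received consecutive IPs on Docker's default bridge in the order they were started. That is an assumption, so confirm the actual address with `docker inspect -f '{{.NetworkSettings.IPAddress}}' master` (and likewise for the slaves). Once you know the first address, the Python sketch below generates the hosts lines. It only formats text and does not query Docker.

```python
import ipaddress


def hosts_entries(first_ip, hostnames):
    """Map hostnames to consecutive IPv4 addresses starting at first_ip, /etc/hosts style."""
    start = ipaddress.IPv4Address(first_ip)
    return "\n".join(f"{start + i} {name}" for i, name in enumerate(hostnames))


if __name__ == "__main__":
    print(hosts_entries("172.17.0.2", ["master", "slave01", "slave02"]))
```

The same hostname list, minus `master`, is what goes into the workers file in step 4.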

3. SSH test

```shell
ssh slave01
ssh slave02
```

4. Edit the workers file (etc/hadoop/workers), replacing localhost with:

```
slave01
slave02
```

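IV. Testing the Hadoop cluster

1. Environment setup (run from /usr/local/hadoop-3.1.3 on master)

```shell
bin/hdfs namenode -format   # format HDFS before the first start
sbin/start-all.sh           # start all services
```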

2. Create HDFS test directories

```shell
bin/hdfs dfs -mkdir /user
bin/hdfs dfs -mkdir /user/root
bin/hdfs dfs -mkdir input   # relative path, i.e. /user/root/input
```

3. Upload a test sample from the master terminal

4. Run the wordcount program

```shell
bin/hadoop jar /usr/local/hadoop-3.1.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount input output
```

5. View the results

```shell
./bin/hdfs dfs -cat output/*
```
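To sanity-check the job's output, you can count the same sample file locally. The Python sketch below approximates the example WordCount job under simple assumptions: tokens are split on whitespace, case and punctuation are kept, and lines come out sorted by word as `word<TAB>count` (as with the default single reducer). The sample text is made up; read your own test file to compare with the output shown by `-cat`.

```python
from collections import Counter


def local_wordcount(text):
    """Count whitespace-separated tokens and format them like WordCount output."""
    counts = Counter(text.split())
    # One line per word, sorted by word, formatted as "word<TAB>count".
    return "\n".join(f"{word}\t{n}" for word, n in sorted(counts.items()))


if __name__ == "__main__":
    print(local_wordcount("hello hadoop\nhello docker"))
```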


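(4) Summary

1. If you need to download packages inside a container, switch to a faster mirror first. I learned two ways to do this.

1.1 From outside the container (in a Dockerfile)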

```dockerfile
COPY ./sources.list /etc/apt/sources.list
```

where `./sources.list` contains:

```
# Source-code (deb-src) mirrors are commented out by default to speed up apt update; uncomment them if needed
deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse
deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse
deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse
deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse

# Pre-release (proposed) repository, not recommended
# deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse
```

1.2 Inside the container

Use the same heredoc as in Part (3), Section I, step 2: `cat <<EOF > /etc/apt/sources.list`, followed by the same mirror lines, then `EOF`. Note that `>` overwrites the file, while `>>` appends to it.

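2. When master SSHes into a slave, it asks for a password, and after one is entered it fails with "Permission denied, please try again."

The cause was a step I had skipped during the earlier passwordless-SSH setup, so key-based login did not work. Also, inside the container the password is not the root password you might expect; you can set it with the passwd command.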

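3. This assignment took about three or four days, interleaved with labs for other courses. Fortunately, most of the problems I ran into could be found with a Baidu search, and some excellent classmates' blogs were also worth learning from.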