Official installation guide: https://www.elastic.co/guide/en/elasticsearch/reference/current/zip-targz.html

Official hardware and configuration recommendations: https://www.elastic.co/guide/en/elasticsearch/guide/master/hardware.html

Logstash pipeline: event ---> input ---> codec ---> filter ---> codec ---> output

2. Environment setup

System: CentOS 7.4; IP address: 11.11.11.30; JDK 1.8; Elasticsearch 6.4.3; Logstash 6.4.0; Kibana 6.4.0

Note: as of November 8, 2018, the latest release was 6.4.3.

iptables -F

2.1 Preparation

1. Install the JDK

JDK download page: https://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

[root@localhost ~]# tar zxvf jdk-8u152-linux-x64.tar.gz -C /usr/local/
# After extracting, configure the global environment variables
[root@localhost ~]# vi /etc/profile.d/jdk.sh
# The archive extracts to jdk1.8.0_152; adjust the path if you renamed the directory
JAVA_HOME=/usr/local/jdk1.8.0_152
CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib
PATH=$JAVA_HOME/bin:$PATH
export JAVA_HOME CLASSPATH PATH
[root@localhost ~]# source /etc/profile.d/jdk.sh
[root@localhost ~]# java -version
java version "1.8.0_152"
Java(TM) SE Runtime Environment (build 1.8.0_152-b16)
Java HotSpot(TM) 64-Bit Server VM (build 25.152-b16, mixed mode)

Note: Java can also be installed directly with yum.

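2. Adjust system-wide parameters

vi /etc/sysctl.conf
vm.max_map_count=655360
sysctl -p
vi /etc/security/limits.conf
* soft nproc 65536
* hard nproc 65536
* soft nofile 65536
* hard nofile 65536

# The next two settings are not strictly required. They were added because Elasticsearch on the second ELK host failed at startup with a JVM "cannot allocate memory" error. They also allow bootstrap.memory_lock: true (set in section 3.1) to lock the heap.

* soft memlock unlimited
* hard memlock unlimited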
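After editing these files it helps to confirm that the running values are high enough. The following is a minimal sketch, not part of the original procedure: the helper names `meets_minimum` and `report_setting` are hypothetical, and the 262144 floor for `vm.max_map_count` is the minimum enforced by the Elasticsearch bootstrap checks.

```shell
#!/bin/sh
# Hypothetical helper: succeed when a current value meets a required minimum.
# Both arguments must be integers ("unlimited" is not handled here).
meets_minimum() {
    current=$1
    required=$2
    [ "$current" -ge "$required" ]
}

# Hypothetical helper: print an OK/LOW line for one named setting.
report_setting() {
    name=$1
    current=$2
    required=$3
    if meets_minimum "$current" "$required"; then
        echo "OK   $name=$current (>= $required)"
    else
        echo "LOW  $name=$current (< $required)"
    fi
}
```

On the ELK host this could be called as `report_setting vm.max_map_count "$(sysctl -n vm.max_map_count)" 262144` and, as the elk user, `report_setting nofile "$(ulimit -n)" 65536`.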

3. Create the elk user and download the required files

# Create the user that runs ELK
useradd elk
passwd elk
# Create the directories that hold the software
[root@elk ~]# su - elk
[elk@elk ~]$ mkdir /home/elk/{Application,Data,Log}

Note:
Software installation directory: /home/elk/Application
Software data directory: /home/elk/Data
Software log directory: /home/elk/Log

# Download Kibana, Elasticsearch, and Logstash and extract them into /home/elk/Application
Download page: https://www.elastic.co/start
# The latest release was slow to download, so archives that a colleague had already downloaded are used here
# Extract the archives
[elk@elk ~]$ cd /usr/local/src/
[elk@elk src]$ ll
total 530732
-rw-r--r--. 1 root root  97872736 Nov  7 14:55 elasticsearch-6.4.3.tar.gz
-rw-r--r--. 1 root root  10813704 Nov  7 15:41 filebeat-6.4.0-x86_64.rpm
-rw-r--r--. 1 root root  55751827 Nov  7 15:41 kafka_2.11-2.0.0.tgz
-rw-r--r--. 1 root root 187936225 Nov  7 15:39 kibana-6.4.0-linux-x86_64.tar.gz
-rw-r--r--. 1 root root 153887188 Nov  7 15:41 logstash-6.4.0.tar.gz
-rw-r--r--. 1 root root  37191810 Nov  7 15:41 zookeeper-3.4.13.tar.gz

[elk@elk src]$ tar xf elasticsearch-6.4.3.tar.gz -C /home/elk/Application/
[elk@elk src]$ tar xf kibana-6.4.0-linux-x86_64.tar.gz -C /home/elk/Application/
[elk@elk src]$ tar xf logstash-6.4.0.tar.gz -C /home/elk/Application/

[elk@elk src]$ ll /home/elk/Application/
total 0
drwxr-xr-x.  8 elk elk 143 Oct 31 07:22 elasticsearch-6.4.3
drwxrwxr-x. 11 elk elk 229 Aug 18 07:50 kibana-6.4.0-linux-x86_64
drwxrwxr-x. 12 elk elk 255 Nov  7 15:46 logstash-6.4.0
[elk@elk src]$

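3. Service configuration

3.1 Configure Elasticsearch

# Adjust the heap size to match the available memory
[elk@elk Application]$ vi elasticsearch/config/jvm.options
-Xms8g
-Xmx8g
[elk@elk Application]$ vi elasticsearch/config/elasticsearch.yml
# Cluster name, shared by every node in the cluster
cluster.name: my-es
# Name of this node
node.name: node-1
# Data directory; separate multiple directories with commas
#path.data: /data/es-data
path.data: /home/elk/Data/elasticsearch
# Log directory
path.logs: /home/elk/Log/elasticsearch
# Lock the process memory so that it is never written to swap
bootstrap.memory_lock: true
# Address to listen on
network.host: 11.11.11.30
# Initial list of master-eligible nodes, used to discover nodes that join the cluster
discovery.zen.ping.unicast.hosts: ["11.11.11.30", "11.11.11.31"]
# HTTP port
http.port: 9200
# TCP port for node-to-node communication within the cluster (default 9300)
transport.tcp.port: 9300
# Enable CORS so that the head plugin can reach Elasticsearch
http.cors.enabled: true
http.cors.allow-origin: "*"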

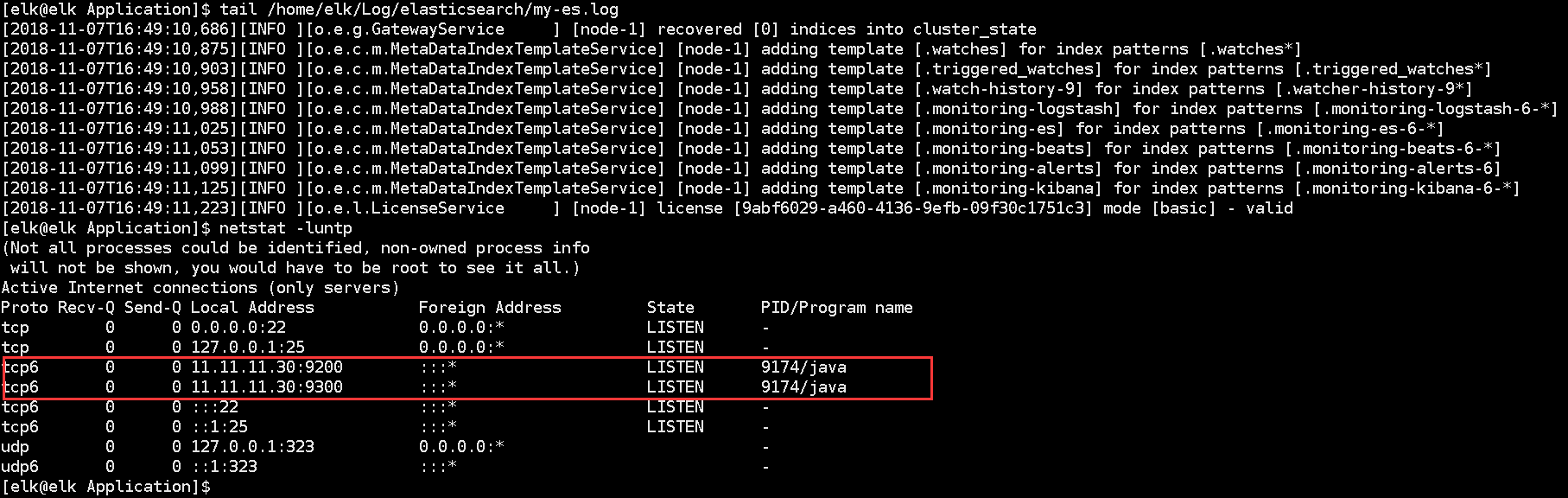

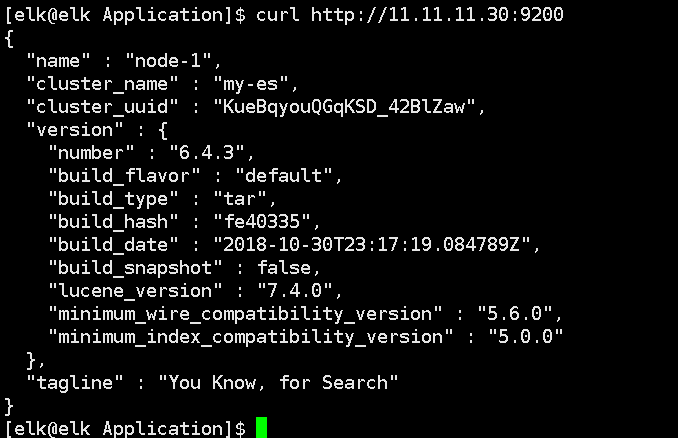

# Review the settings you changed
[elk@elk Application]$ grep '^[a-zA-Z]' elasticsearch/config/elasticsearch.yml
# Start Elasticsearch (it must be started as the elk user)
nohup /home/elk/Application/elasticsearch/bin/elasticsearch >> /home/elk/Log/elasticsearch/elasticsearch.log 2>&1 &
# Log file location
[elk@elk Application]$ tail /home/elk/Log/elasticsearch/my-es.log

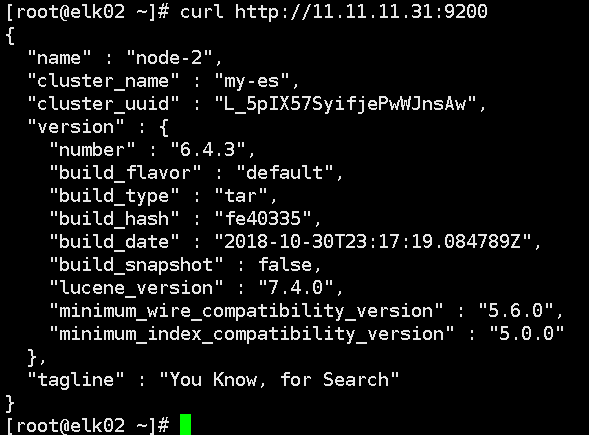

3.1.1 Deploy the second ELK host. Only the settings below differ; everything else matches the first host.

[elk@elk02 Application]$ grep '^[a-zA-Z]' elasticsearch/config/elasticsearch.yml
# cluster.name must match the first host
cluster.name: my-es
# node.name must differ from the first host
node.name: node-2
path.data: /home/elk/Data/elasticsearch
path.logs: /home/elk/Log/elasticsearch
bootstrap.memory_lock: true
network.host: 11.11.11.31
http.port: 9200
transport.tcp.port: 9300
http.cors.enabled: true
http.cors.allow-origin: "*"

# Create the log directory
mkdir /home/elk/Log/elasticsearch/

# Start Elasticsearch
nohup /home/elk/Application/elasticsearch/bin/elasticsearch >> /home/elk/Log/elasticsearch/elasticsearch.log 2>&1 &

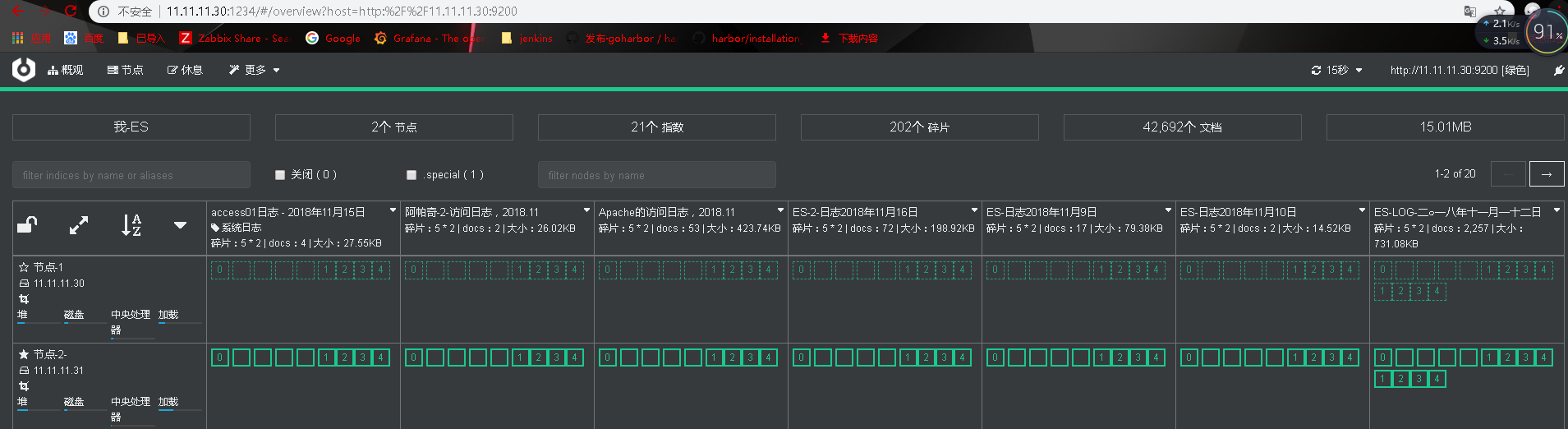

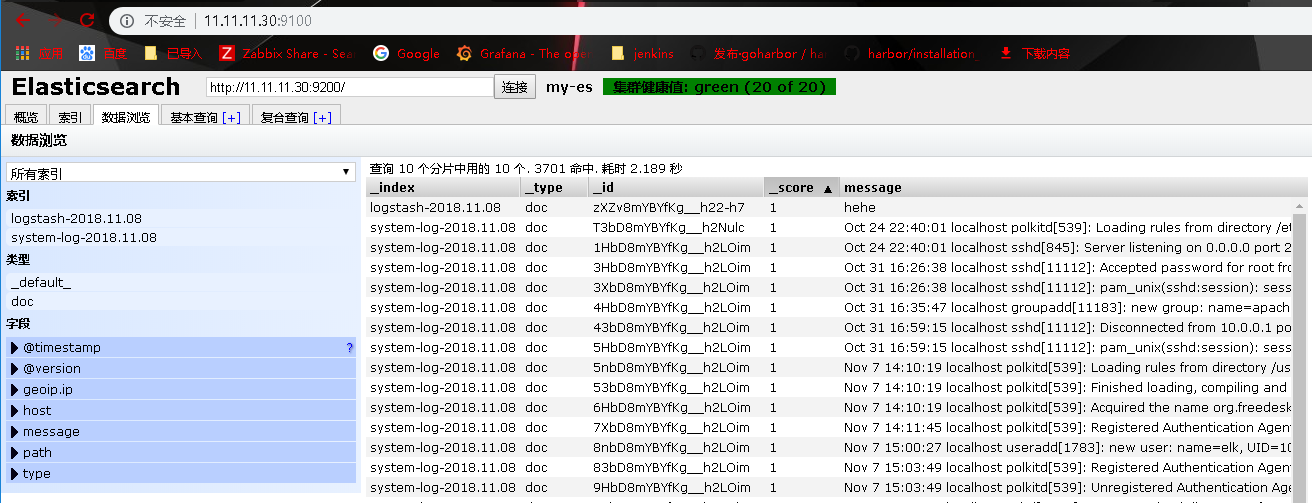

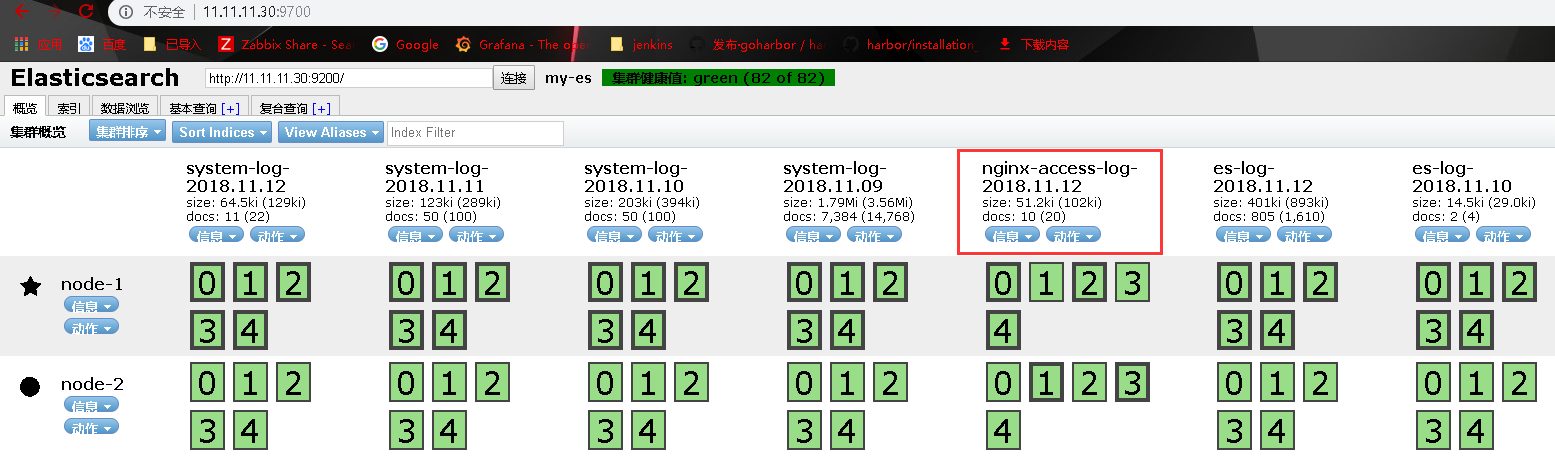

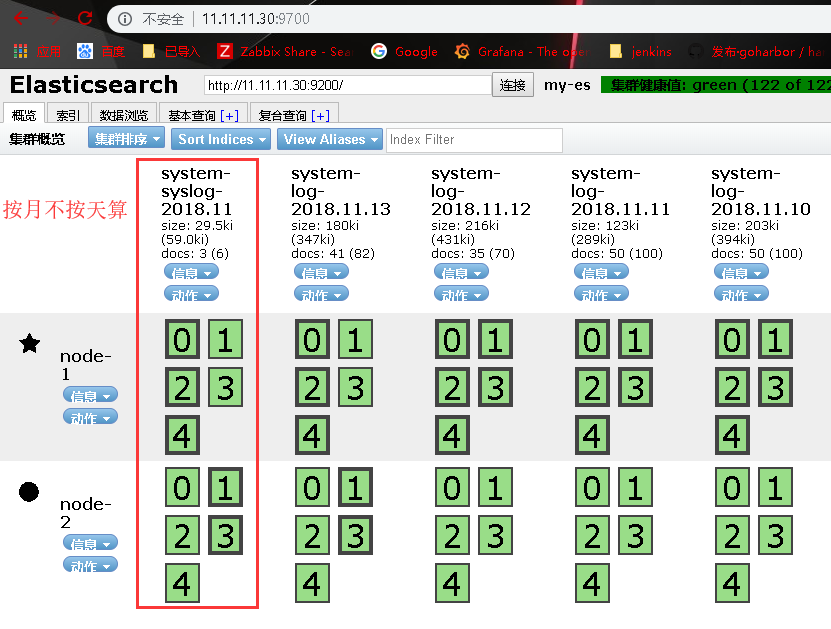

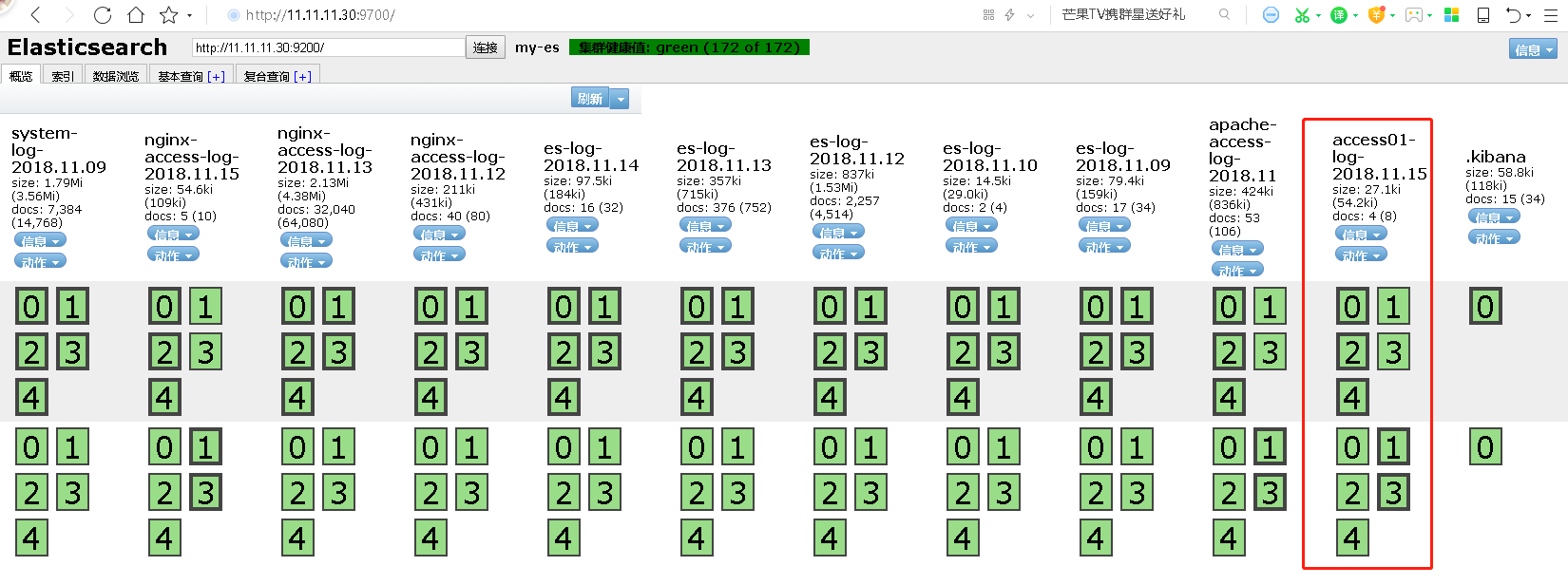

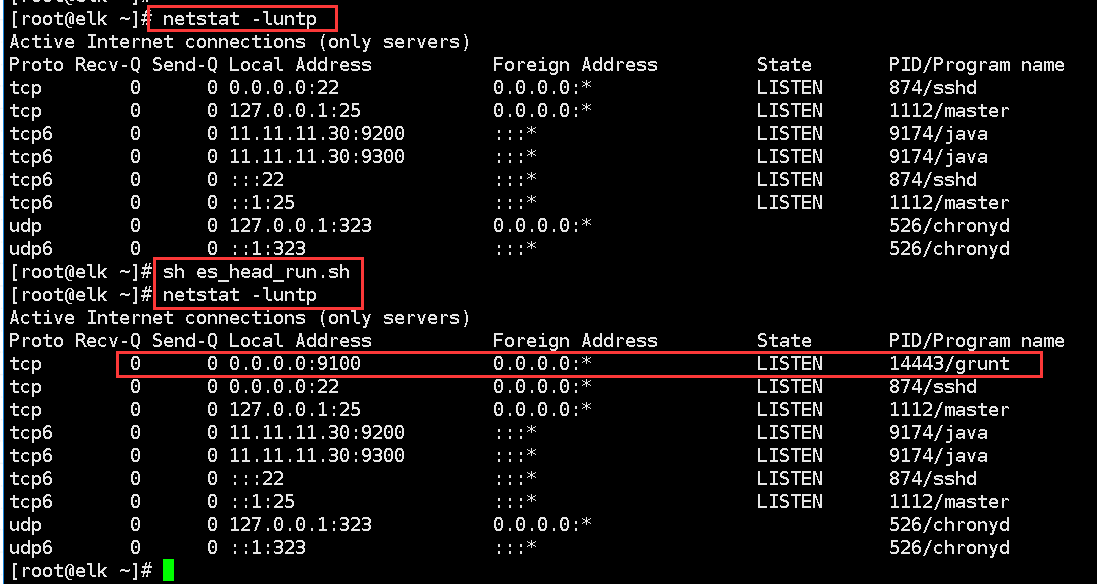

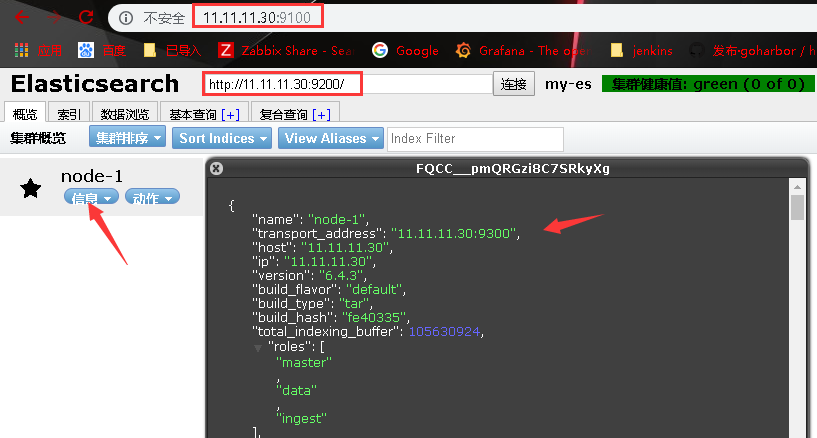

3.1.2 After installing head as described in appendix 1, the result looks like the screenshot below

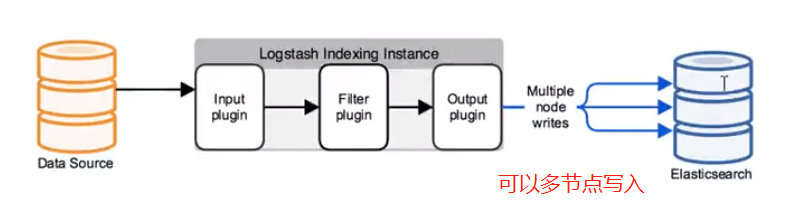

3.2 Configure Logstash (see appendix 3 for further tests)

Logstash collects data, filters the logs, and forwards the result.

Logstash introduction: https://www.elastic.co/guide/en/logstash/current/introduction.html

Input plugins: https://www.elastic.co/guide/en/logstash/current/input-plugins.html

Output plugins: https://www.elastic.co/guide/en/logstash/current/output-plugins.html

[elk@elk Application]$ vi logstash/config/jvm.options
-Xms4g
-Xmx4g
[elk@elk Application]$ vi logstash/config/logstash.yml
# Number of pipeline worker threads; defaults to the number of CPU cores
pipeline.workers: 8
# Maximum number of events a worker collects in one batch
pipeline.batch.size: 1000
# How long to wait for a batch to fill, in milliseconds (default 50)
pipeline.batch.delay: 50

Example:

# Pipeline configuration file that Logstash reads at startup
[elk@elk config]$ cat logstash-sample.conf
# Where the data comes from
input {
  file {
    path => ["/var/log/messages","/var/log/secure"]
    type => "system-log"
    start_position => "beginning"
  }
}
# Filter the incoming events
filter {
}
# Where the filtered data is sent
output {
  elasticsearch {
    hosts => ["http://11.11.11.30:9200"]
    index => "system-log-%{+YYYY.MM}"
    #user => "elastic"
    #password => "changeme"
  }
}

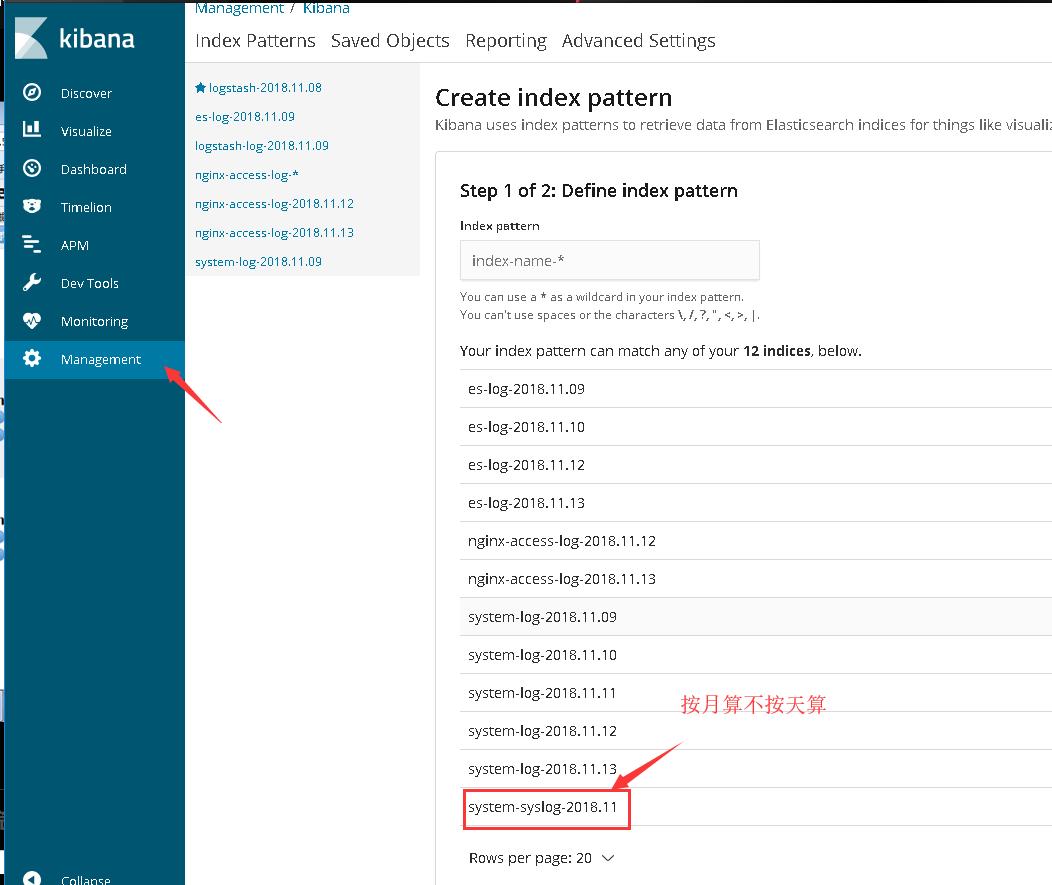

Note: when the log volume is small, avoid daily indices (+YYYY.MM.dd); the resulting number of indices is tedious to add in Kibana later.
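To see what the two naming schemes produce, the sketch below prints the index name for a given event time. It is an illustration only: `index_for` is a hypothetical helper, and `date -d @SECONDS` assumes GNU date. Logstash formats `%{+...}` from `@timestamp`, which is in UTC, so the helper uses `-u`.

```shell
#!/bin/sh
# Hypothetical helper: print the index an event would be written to.
# $1 = index prefix, $2 = event time in Unix epoch seconds, $3 = "month" or "day".
index_for() {
    prefix=$1
    epoch=$2
    granularity=$3
    case $granularity in
        month) fmt='%Y.%m' ;;      # same shape as %{+YYYY.MM}
        day)   fmt='%Y.%m.%d' ;;   # same shape as %{+YYYY.MM.dd}
        *)     echo "unknown granularity: $granularity" >&2; return 1 ;;
    esac
    # -u because @timestamp is UTC; "-d @SECONDS" is GNU date syntax (assumption).
    echo "$prefix-$(date -u -d "@$epoch" "+$fmt")"
}
```

With monthly naming, a year of logs yields 12 indices per log type; with daily naming it yields about 365, which is the overhead the note above warns about.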

# Start Logstash

[elk@elk ~]$ mkdir /home/elk/Log/logstash -p

nohup /home/elk/Application/logstash/bin/logstash -f /home/elk/Application/logstash/config/logstash-sample.conf --config.reload.automatic >> /home/elk/Log/logstash/logstash.log 2>&1 &

# Check that it is running

[elk@elk config]$ jobs -l

[1]+ 16289 Running nohup /home/elk/Application/logstash/bin/logstash -f /home/elk/Application/logstash/config/logstash-sample.conf --config.reload.automatic >> /home/elk/Log/logstash/logstash.log 2>&1 &

[elk@elk Application]$ vi logstash/config/logstash.conf
input {
  kafka {
    bootstrap_servers => "192.168.2.6:9090"
    topics => ["test"]
  }
}
filter {
  json {
    source => "message"
  }
  ruby {
    code => "event.set('index_day_hour', event.get('[@timestamp]').time.localtime.strftime('%Y.%m.%d-%H'))"
  }
  mutate {
    rename => { "[host][name]" => "host" }
  }
  mutate {
    lowercase => ["host"]
  }
}
output {
  elasticsearch {
    index => "%{host}-%{app_id}-%{index_day_hour}"
    hosts => ["http://192.168.2.7:9200"]
  }
}

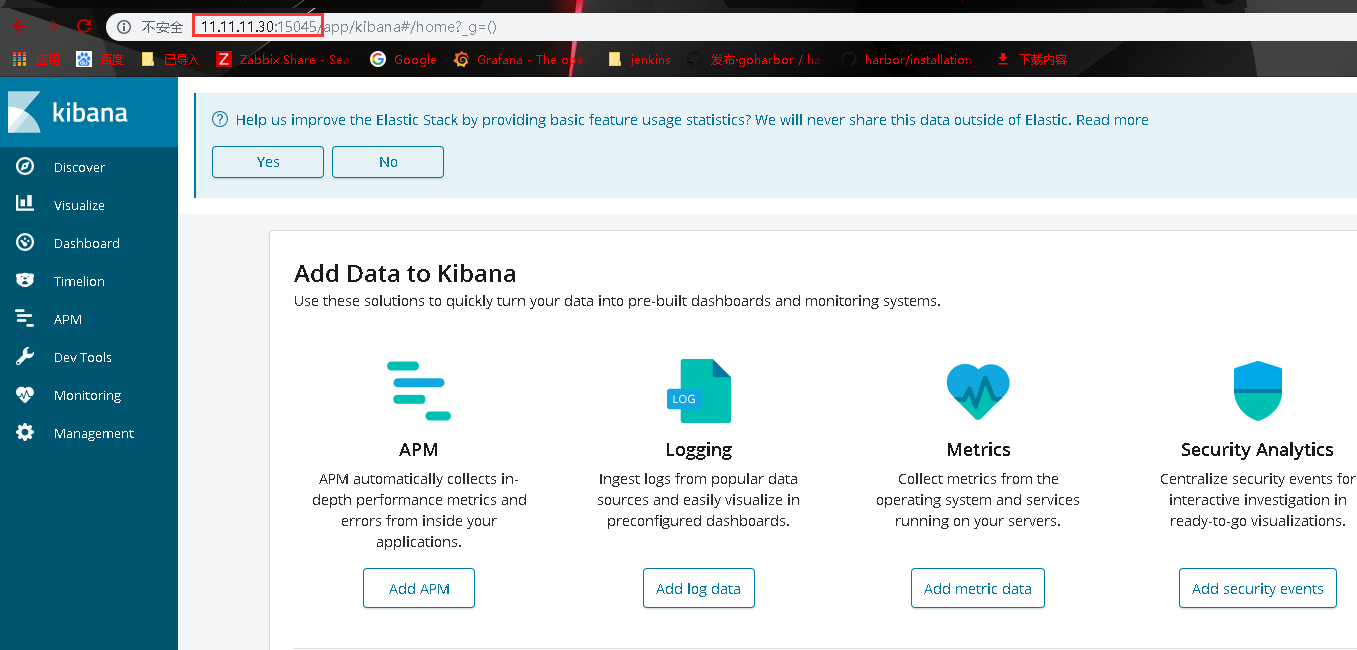

3.3 Configure Kibana

[elk@elk Application]$ vi kibana/config/kibana.yml
# Port for the web UI
server.port: 15045
# Address to listen on
server.host: "11.11.11.30"
elasticsearch.url: "http://11.11.11.30:9200"
elasticsearch.pingTimeout: 1500

[root@elk ~]# grep '^[a-zA-Z]' /home/elk/Application/kibana/config/kibana.yml
# Port the service listens on
server.port: 15045
# Listen address
server.host: "11.11.11.30"
# Elasticsearch address and port; these must match the Elasticsearch settings
elasticsearch.url: "http://11.11.11.30:9200"
# Name of the Elasticsearch index in which Kibana stores its saved objects; it is visible in head
kibana.index: ".kibana"
elasticsearch.pingTimeout: 1500
[root@elk ~]#

[root@elk ~]# mkdir /home/elk/Log/kibana -p

[root@elk ~]# nohup /home/elk/Application/kibana/bin/kibana >> /home/elk/Log/kibana/kibana.log 2>&1 &

[root@elk ~]# netstat -luntp

tcp 0 0 11.11.11.30:15045 0.0.0.0:* LISTEN 16437/node

tcp6 0 0 :::9100 :::* LISTEN 14477/grunt

tcp6 0 0 11.11.11.30:9200 :::* LISTEN 15451/java

tcp6 0 0 11.11.11.30:9300 :::* LISTEN 15451/java

tcp6 0 0 127.0.0.1:9600 :::* LISTEN 16289/java

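Note: in head, a star marks the master node and a circle marks a non-master node.

After the node-2 host was rebooted and Elasticsearch was started again, node-2 did not show up in head; restarting Elasticsearch on node-1 made it appear.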

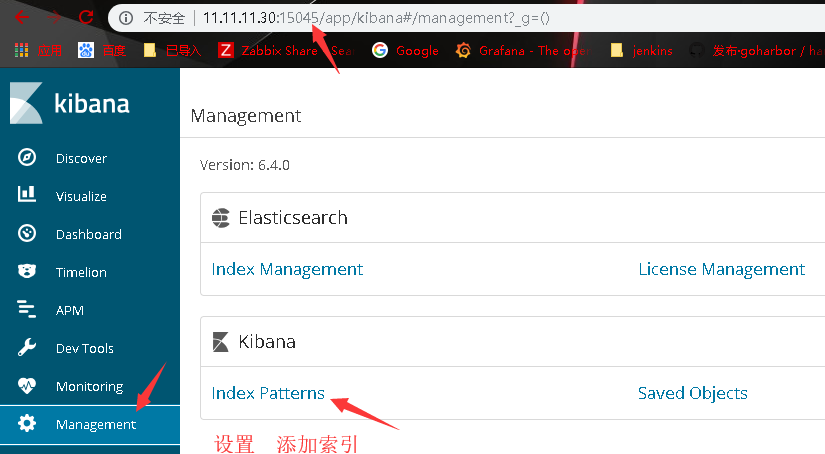

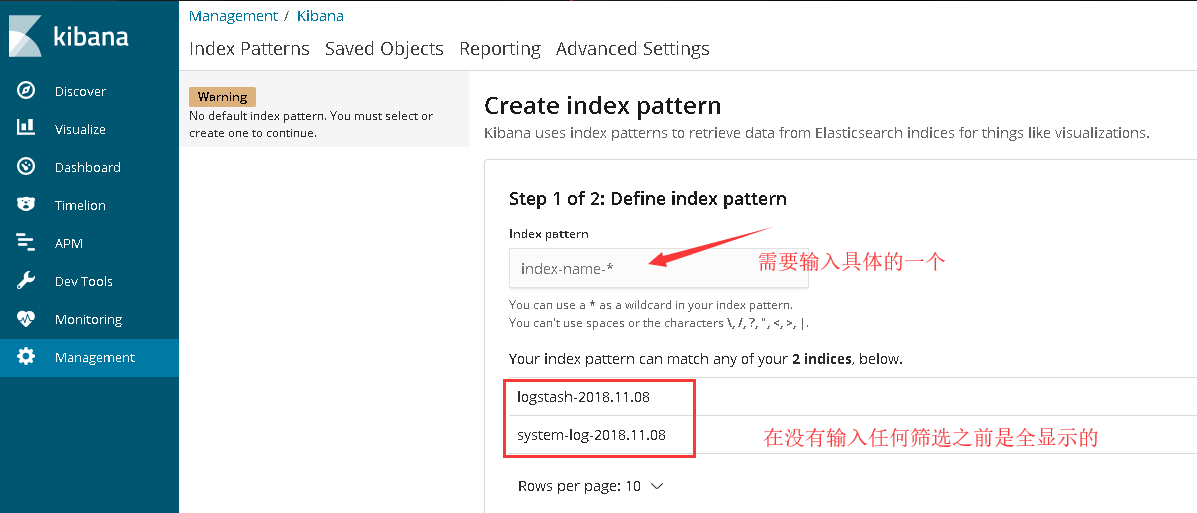

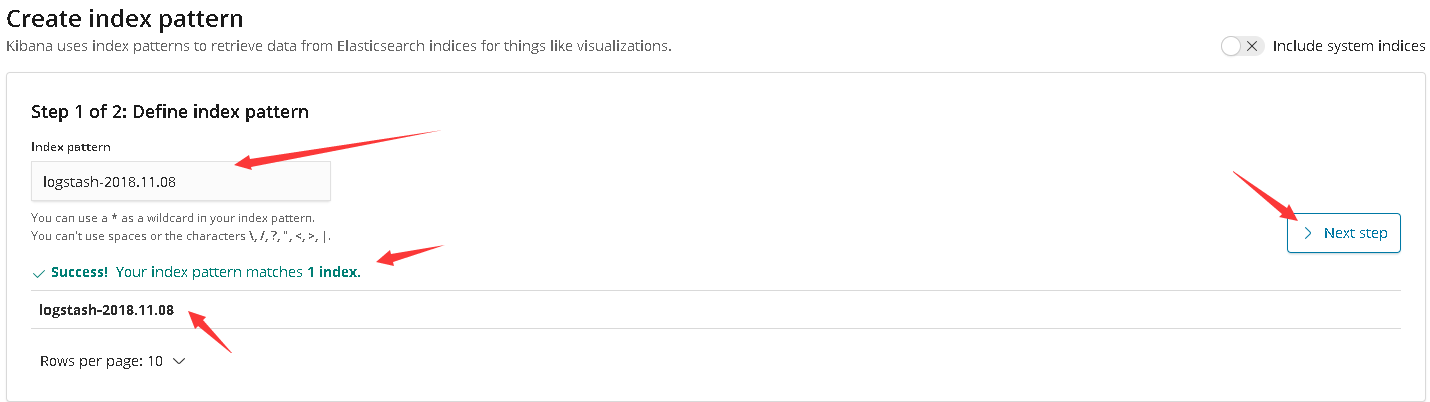

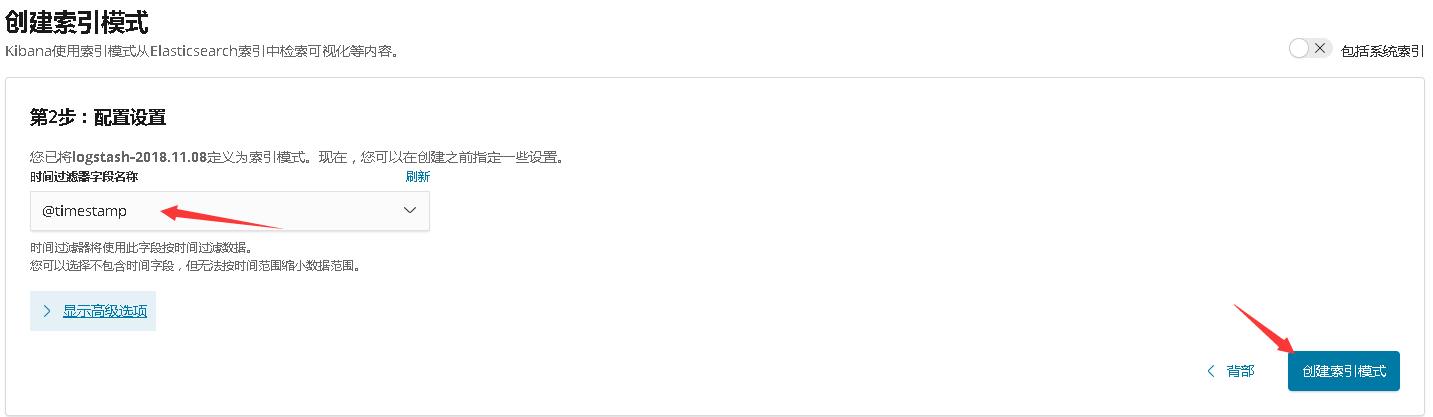

4. Usage

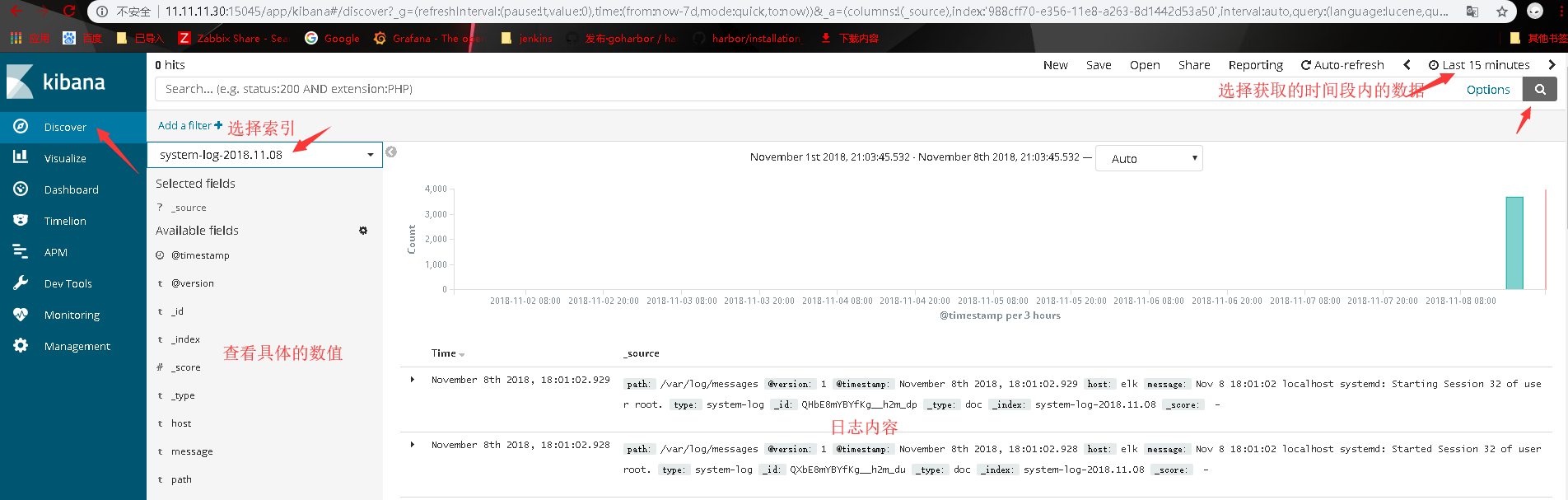

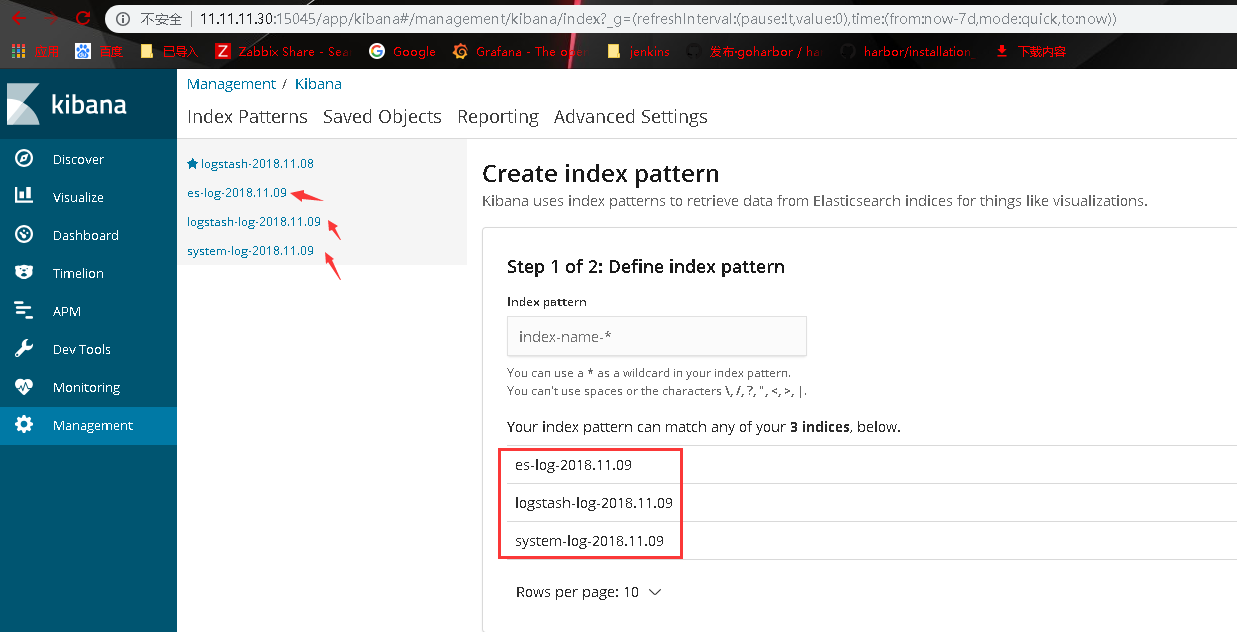

4.1 Add an index

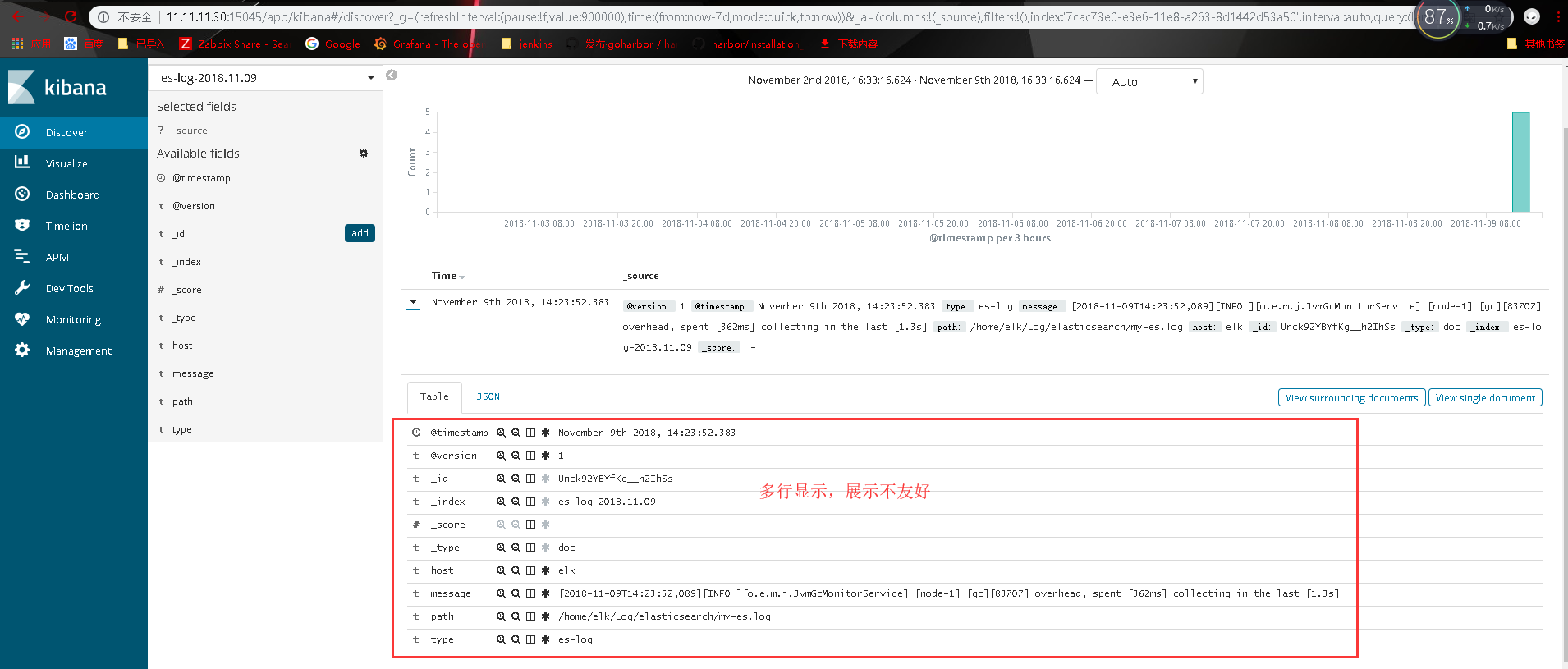

4.2 Filtering Java logs with Logstash

[root@elk ~]# cat /home/elk/Application/logstash/config/logstash.conf
input {
  file {
    path => "/var/log/messages"
    type => "system-log"
    start_position => "beginning"
  }
  file {
    path => "/home/elk/Log/elasticsearch/my-es.log"
    type => "es-log"
  }
  file {
    path => "/home/elk/Log/logstash/logstash.log"
    type => "logstash-log"
  }
}
output {
  if [type] == "system-log" {
    elasticsearch {
      hosts => ["http://11.11.11.30:9200"]
      index => "system-log-%{+YYYY.MM}"
      #user => "elastic"
      #password => "changeme"
    }
  }
  if [type] == "es-log" {
    elasticsearch {
      hosts => ["http://11.11.11.30:9200"]
      index => "es-log-%{+YYYY.MM}"
    }
  }
  if [type] == "logstash-log" {
    elasticsearch {
      hosts => ["http://11.11.11.30:9200"]
      index => "logstash-log-%{+YYYY.MM}"
    }
  }
}
[root@elk ~]#
[root@elk ~]# nohup /home/elk/Application/logstash/bin/logstash -f /home/elk/Application/logstash/config/logstash.conf --config.reload.automatic >> /home/elk/Log/logstash/logstash.log 2>&1 &
[root@elk ~]# netstat -luntp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address      Foreign Address  State   PID/Program name
tcp        0      0 0.0.0.0:22         0.0.0.0:*        LISTEN  874/sshd
tcp        0      0 127.0.0.1:25       0.0.0.0:*        LISTEN  1112/master
tcp        0      0 11.11.11.30:15045  0.0.0.0:*        LISTEN  16437/node
tcp6       0      0 :::9100            :::*             LISTEN  14477/grunt
tcp6       0      0 11.11.11.30:9200   :::*             LISTEN  15451/java
tcp6       0      0 11.11.11.30:9300   :::*             LISTEN  15451/java
tcp6       0      0 :::22              :::*             LISTEN  874/sshd
tcp6       0      0 ::1:25             :::*             LISTEN  1112/master
tcp6       0      0 127.0.0.1:9600     :::*             LISTEN  20746/java
udp        0      0 127.0.0.1:323      0.0.0.0:*                526/chronyd
udp6       0      0 ::1:323            :::*                     526/chronyd
[root@elk ~]#

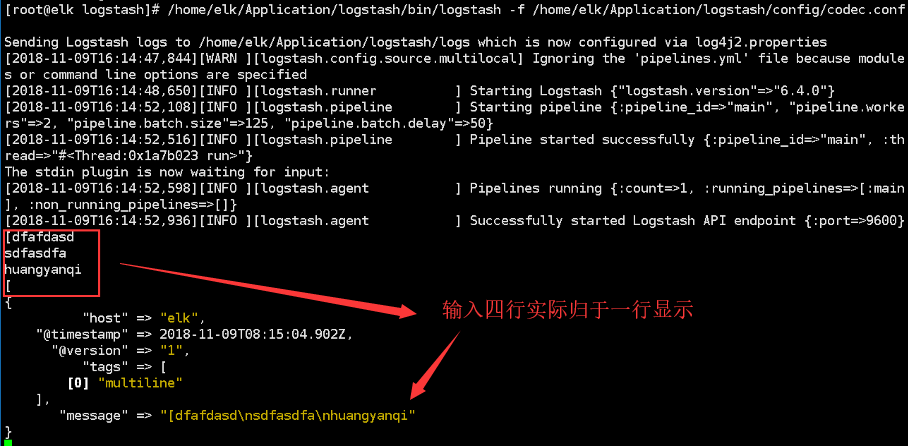

4.2.1 Handling Java logs (merging multiple lines into one event)

Multiline codec documentation: https://www.elastic.co/guide/en/logstash/current/plugins-codecs-multiline.html

Test the rule first. The pattern must escape the bracket ("^\["); an unescaped "^[" is not a valid regular expression.

[elk@elk config]$ cat codec.conf
input {
  stdin {
    codec => multiline {
      pattern => "^\["
      negate => true
      what => "previous"
    }
  }
}
filter {
}
output {
  stdout {
    codec => rubydebug
  }
}
[elk@elk config]$
[root@elk logstash]# /home/elk/Application/logstash/bin/logstash -f /home/elk/Application/logstash/config/codec.conf
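The effect of this rule (pattern matching a leading "[", negate => true, what => "previous") can be previewed outside Logstash. The sketch below is a rough awk emulation, not the codec itself: `join_multiline` is a hypothetical helper, and it joins lines with a literal `\n` marker so that each merged event stays on one output line, whereas Logstash joins them with a real newline.

```shell
#!/bin/sh
# Hypothetical helper: read log lines on stdin, print one merged event per line.
# A line starting with "[" opens a new event; any other line is appended to the
# previous event, mirroring negate => true with what => "previous".
join_multiline() {
    awk '
        /^\[/ {
            if (have_event) print event   # flush the event collected so far
            event = $0
            have_event = 1
            next
        }
        {
            # Continuation line: append with a visible "\n" marker.
            if (have_event) event = event "\\n" $0
            else { event = $0; have_event = 1 }
        }
        END { if (have_event) print event }
    '
}
```

Running `join_multiline < /home/elk/Log/elasticsearch/my-es.log | head` shows whether stack traces collapse into the log line that precedes them.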

Apply the rule to the real Logstash configuration:

# Delete the existing sincedb files so that the logs are collected again from the start
[root@elk ~]# find / -name '.sincedb*'
/home/elk/Application/logstash/data/plugins/inputs/file/.sincedb_97cbda73a2aaa9193a01a3b39eb761f3
/home/elk/Application/logstash/data/plugins/inputs/file/.sincedb_5227a954e2d5a4a3f157592cbe63c166
/home/elk/Application/logstash/data/plugins/inputs/file/.sincedb_452905a167cf4509fd08acb964fdb20c
[root@elk ~]# rm -rf /home/elk/Application/logstash/data/plugins/inputs/file/.sincedb*
# The modified file looks like this
[elk@elk config]$ cat logstash.conf
input {
  file {
    path => "/var/log/messages"
    type => "system-log"
    start_position => "beginning"
  }
  file {
    path => "/home/elk/Log/elasticsearch/my-es.log"
    type => "es-log"
    start_position => "beginning"
    codec => multiline {
      pattern => "^\["
      negate => true
      what => "previous"
    }
  }
}
output {
  if [type] == "system-log" {
    elasticsearch {
      hosts => ["http://11.11.11.30:9200"]
      index => "system-log-%{+YYYY.MM}"
      #user => "elastic"
      #password => "changeme"
    }
  }
  if [type] == "es-log" {
    elasticsearch {
      hosts => ["http://11.11.11.30:9200"]
      index => "es-log-%{+YYYY.MM}"
    }
  }
  if [type] == "logstash-log" {
    elasticsearch {
      hosts => ["http://11.11.11.30:9200"]
      index => "logstash-log-%{+YYYY.MM}"
    }
  }
}
[elk@elk config]$
# Start Logstash as root
[root@elk config]# nohup /home/elk/Application/logstash/bin/logstash -f /home/elk/Application/logstash/config/logstash.conf >> /home/elk/Log/logstash/logstash.log 2>&1 &
# Refresh head

# Check the result in the Elasticsearch UI

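5. Collecting nginx logs (as JSON)

The key point is that the logs Logstash collects must already be in JSON format.

Method 1: configure the nginx log format to emit JSON, then ship the log to Elasticsearch

log_format access_log_json '{"user_ip":"$http_x_real_ip","lan_ip":"$remote_addr","log_time":"$time_iso8601","user_req":"$request","http_code":"$status","body_bytes_sent":"$body_bytes_sent","req_time":"$request_time","user_ua":"$http_user_agent"}';
access_log /var/log/nginx/access_json.log access_log_json;

Method 2: read the file as-is and push it to Redis, then use a Python script to read from Redis, convert the entries to JSON, and write them to Elasticsearch

5.1 Install nginx and generate access logs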

[root@elk02 ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
[root@elk02 ~]# yum -y install nginx
[root@elk02 ~]# systemctl start nginx.service
[root@elk02 ~]# netstat -luntp
# 1000 requests with a concurrency of 1
[root@elk02 ~]# ab -n 1000 -c 1 http://11.11.11.31/
[root@elk02 ~]# tail -5 /var/log/nginx/access.log
11.11.11.31 - - [09/Nov/2018:18:09:08 +0800] "GET / HTTP/1.0" 200 3700 "-" "ApacheBench/2.3" "-"
11.11.11.31 - - [09/Nov/2018:18:09:08 +0800] "GET / HTTP/1.0" 200 3700 "-" "ApacheBench/2.3" "-"
11.11.11.31 - - [09/Nov/2018:18:09:08 +0800] "GET / HTTP/1.0" 200 3700 "-" "ApacheBench/2.3" "-"
11.11.11.31 - - [09/Nov/2018:18:09:08 +0800] "GET / HTTP/1.0" 200 3700 "-" "ApacheBench/2.3" "-"
11.11.11.31 - - [09/Nov/2018:18:09:08 +0800] "GET / HTTP/1.0" 200 3700 "-" "ApacheBench/2.3" "-"
[root@elk02 ~]#

Note: the fields in the default format are, in order: client IP, remote user, time, request, status code, bytes sent, referer, user agent, and the client IP forwarded by a proxy.

[root@elk02 nginx]# vim nginx.conf

# Add the following log format rule

log_format access_log_json '{"user_ip":"$http_x_real_ip","lan_ip":"$remote_addr","log_time":"$time_iso8601","user_req":"$request","http_code":"$status","body_bytes_sent":"$body_bytes_sent","req_time":"$request_time","user_ua":"$http_user_agent"}';

access_log /var/log/nginx/access_json.log access_log_json;

[root@elk02 nginx]# systemctl reload nginx
[root@elk02 ~]# ab -n 1000 -c 1 http://11.11.11.31/
[root@elk02 nginx]# tailf /var/log/nginx/access_json.log
{"user_ip":"-","lan_ip":"11.11.11.31","log_time":"2018-11-09T20:18:12+08:00","user_req":"GET / HTTP/1.0","http_code":"200","body_bytes_sent":"3700","req_time":"0.000","user_ua":"ApacheBench/2.3"}
{"user_ip":"-","lan_ip":"11.11.11.31","log_time":"2018-11-09T20:18:12+08:00","user_req":"GET / HTTP/1.0","http_code":"200","body_bytes_sent":"3700","req_time":"0.000","user_ua":"ApacheBench/2.3"}
{"user_ip":"-","lan_ip":"11.11.11.31","log_time":"2018-11-09T20:18:12+08:00","user_req":"GET / HTTP/1.0","http_code":"200","body_bytes_sent":"3700","req_time":"0.000","user_ua":"ApacheBench/2.3"}
{"user_ip":"-","lan_ip":"11.11.11.31","log_time":"2018-11-09T20:18:12+08:00","user_req":"GET / HTTP/1.0","http_code":"200","body_bytes_sent":"3700","req_time":"0.000","user_ua":"ApacheBench/2.3"}

5.2 Collection and analysis

5.2.1 Collection test

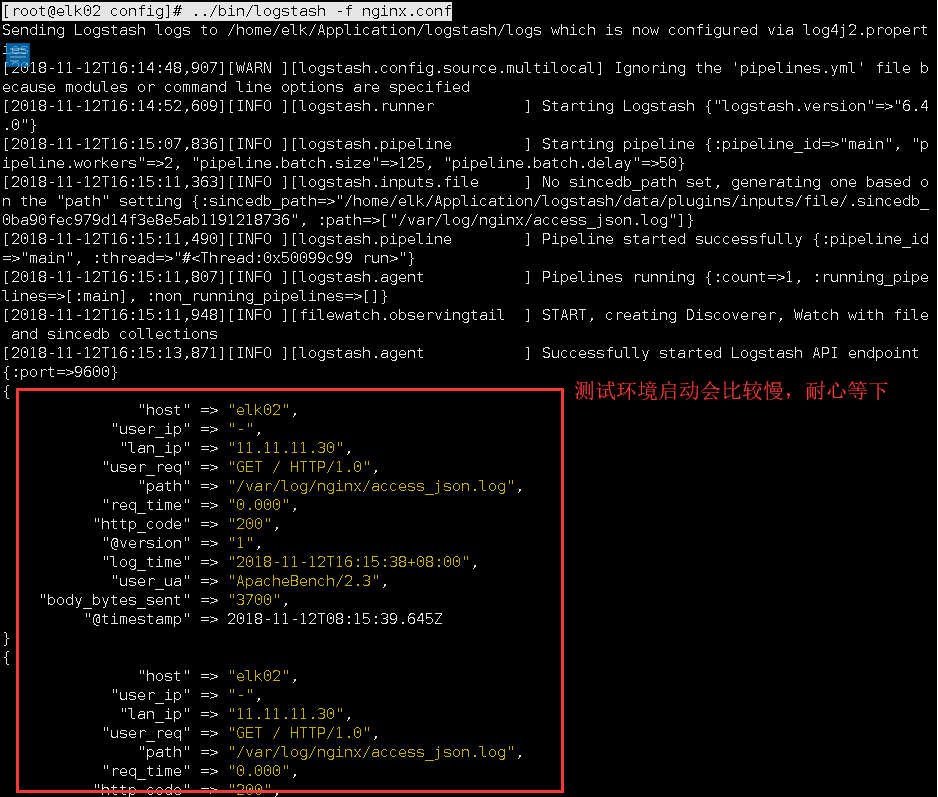

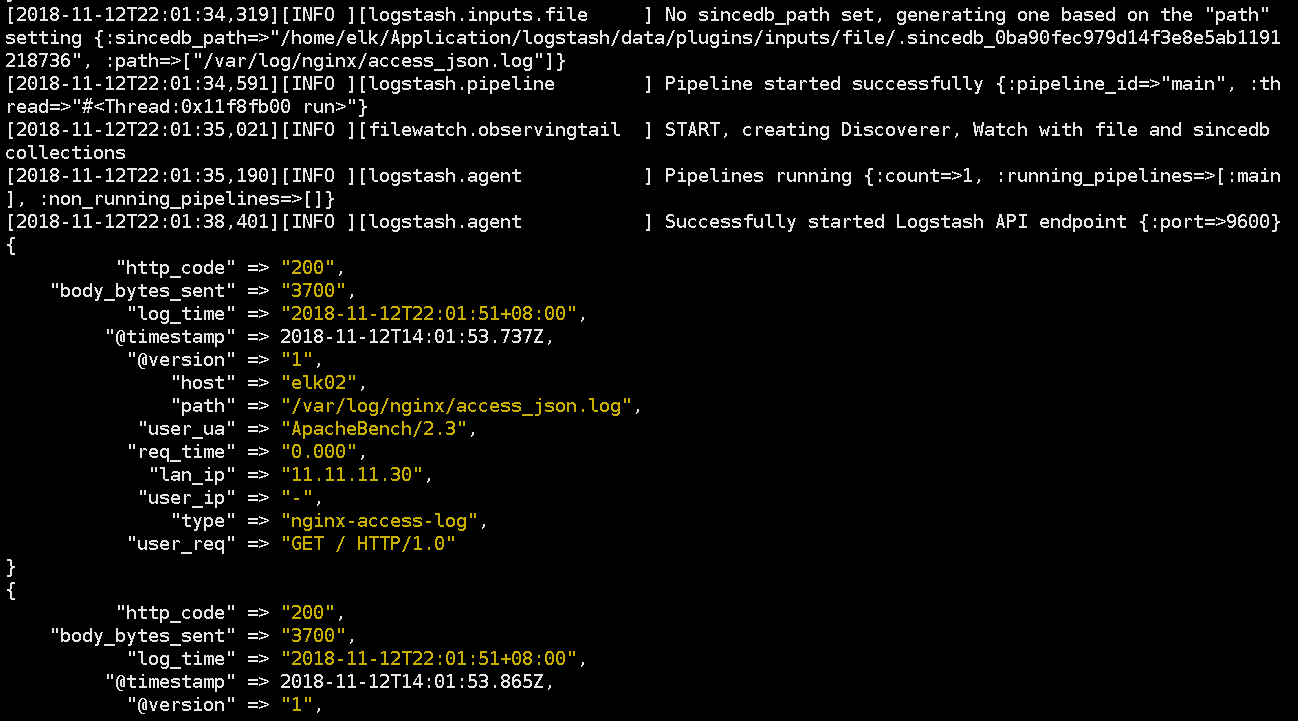

# The Logstash API listens on port 9600. If an instance is already running, kill it, then start Logstash with this nginx pipeline file
[root@elk02 config]# pwd
/home/elk/Application/logstash/config
[root@elk02 config]# vim nginx.conf
input {
  file {
    path => "/var/log/nginx/access_json.log"
    codec => "json"
  }
}
output {
  stdout {
    codec => rubydebug
  }
}
[root@elk02 config]# ../bin/logstash -f nginx.conf
# Then run an ab load test from the 11.30 server
[root@elk config]# ab -n 10 -c 1 http://11.11.11.31/
# The parsed events then appear on elk02 (11.31)

5.2.2 Write the events to Elasticsearch

For convenience the nginx.conf pipeline file is edited directly here; in practice it is better to put this into logstash.conf.

[root@elk02 config]# pwd
/home/elk/Application/logstash/config
[root@elk02 config]# cat nginx.conf
input {
  file {
    type => "nginx-access-log"
    path => "/var/log/nginx/access_json.log"
    codec => "json"
  }
}
output {
  elasticsearch {
    hosts => ["http://11.11.11.30:9200"]
    index => "nginx-access-log-%{+YYYY.MM}"
  }
}
[root@elk02 config]#

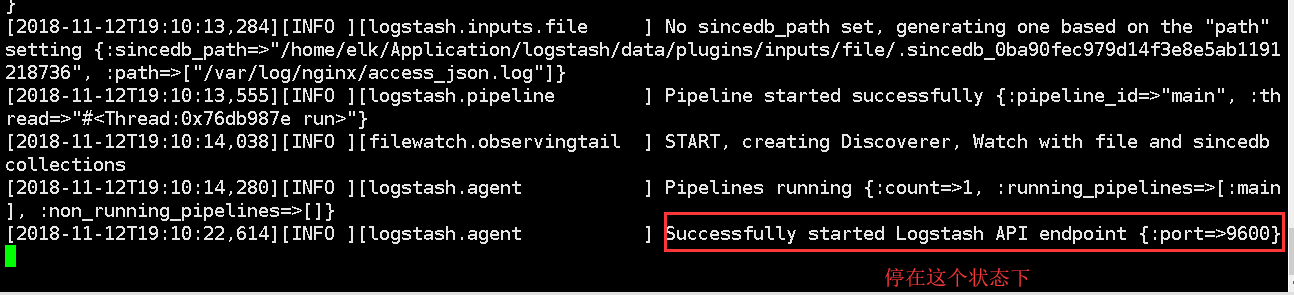

# Run Logstash with the nginx.conf pipeline file
[root@elk02 config]# ../bin/logstash -f nginx.conf

# New log entries must be generated before anything shows up, because the file input starts reading at the end of the file
[root@elk ~]# ab -n 10 -c 1 http://11.11.11.31/

If nothing shows up, try the following:

1. Add a stdout output to the existing nginx.conf so that events are also printed to the console:

input {
  file {
    type => "nginx-access-log"
    path => "/var/log/nginx/access_json.log"
    codec => "json"
  }
}
output {
  elasticsearch {
    hosts => ["http://11.11.11.30:9200"]
    index => "nginx-access-log-%{+YYYY.MM}"
  }
  stdout {
    codec => rubydebug
  }
}

[root@elk02 config]# ../bin/logstash -f nginx.conf

[root@elk ~]# ab -n 10 -c 1 http://11.11.11.31/

2. Find the sincedb file, delete it, and collect the data again

[root@elk02 config]# find / -name '.sincedb*'

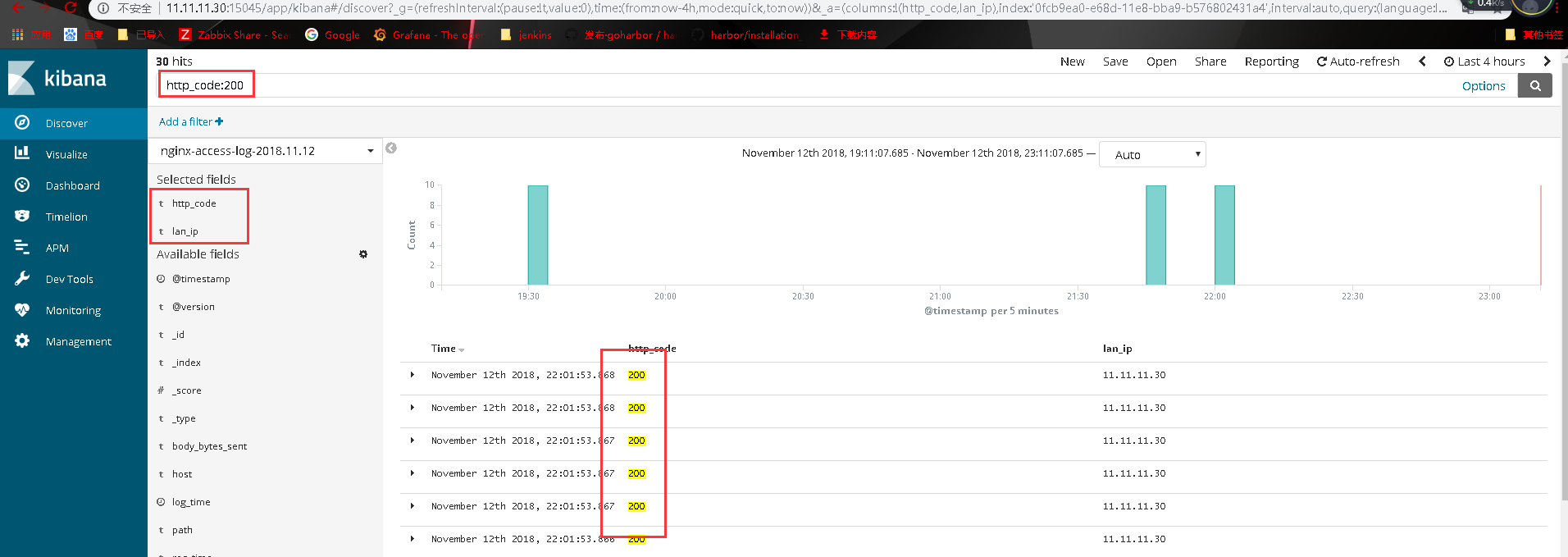

6、web页面kibana的使用

6.1、搜索关键值

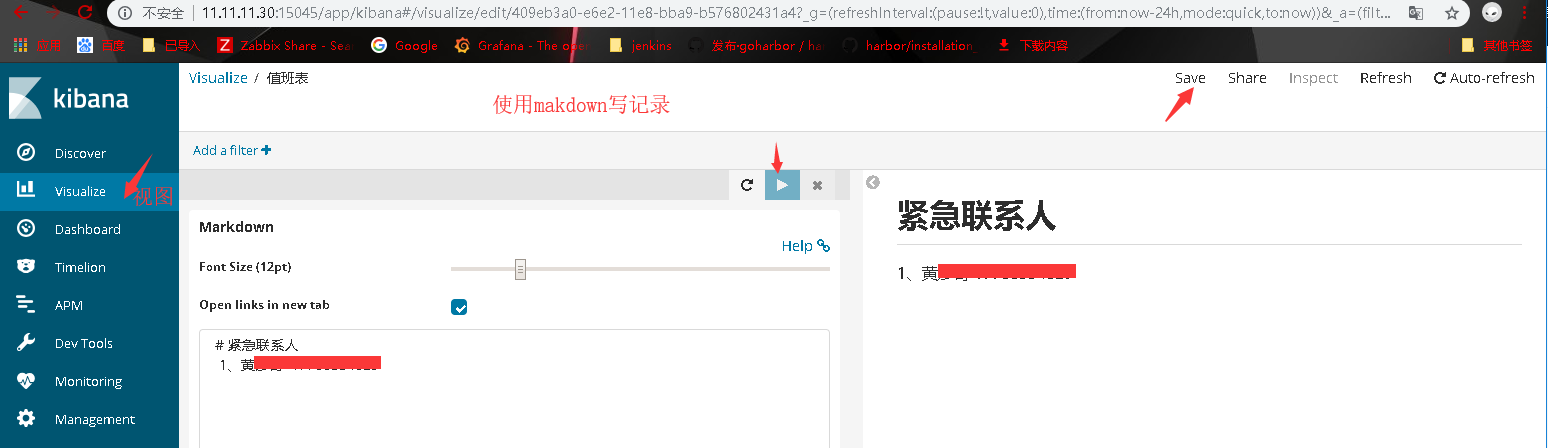

6.2、视图的用法

6.2.1、makdown的使用方法

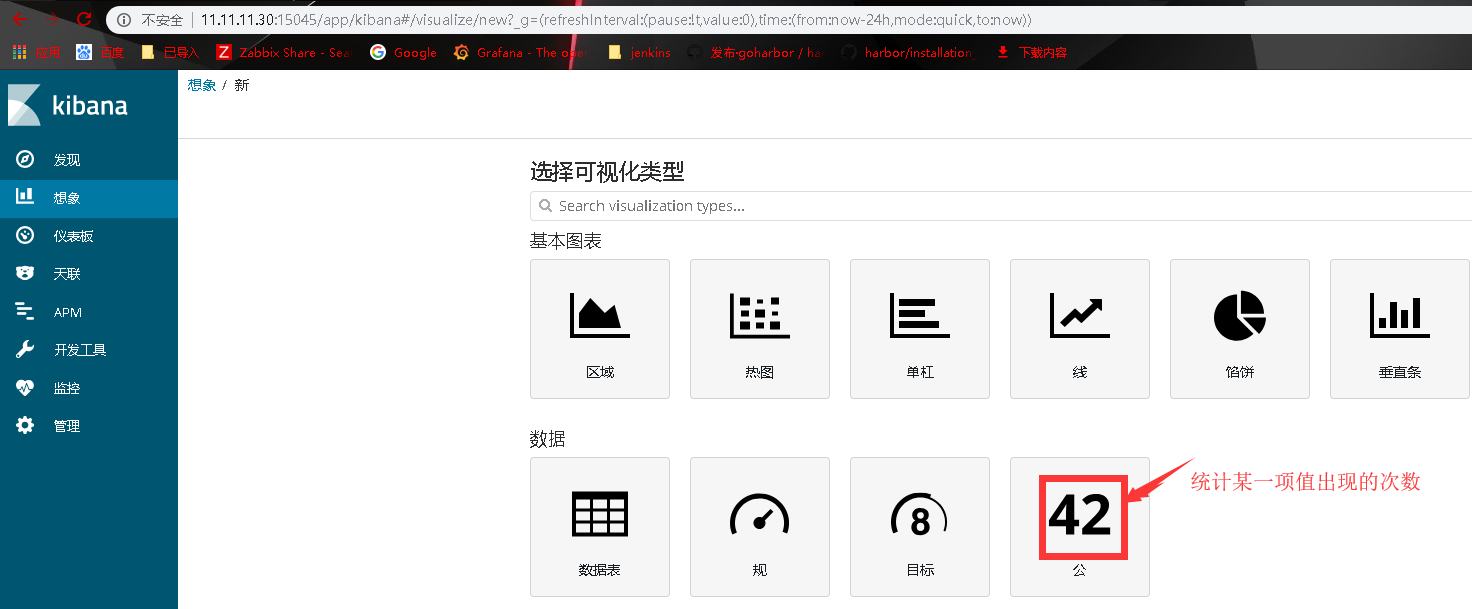

6.2.2、统计某一值得方法

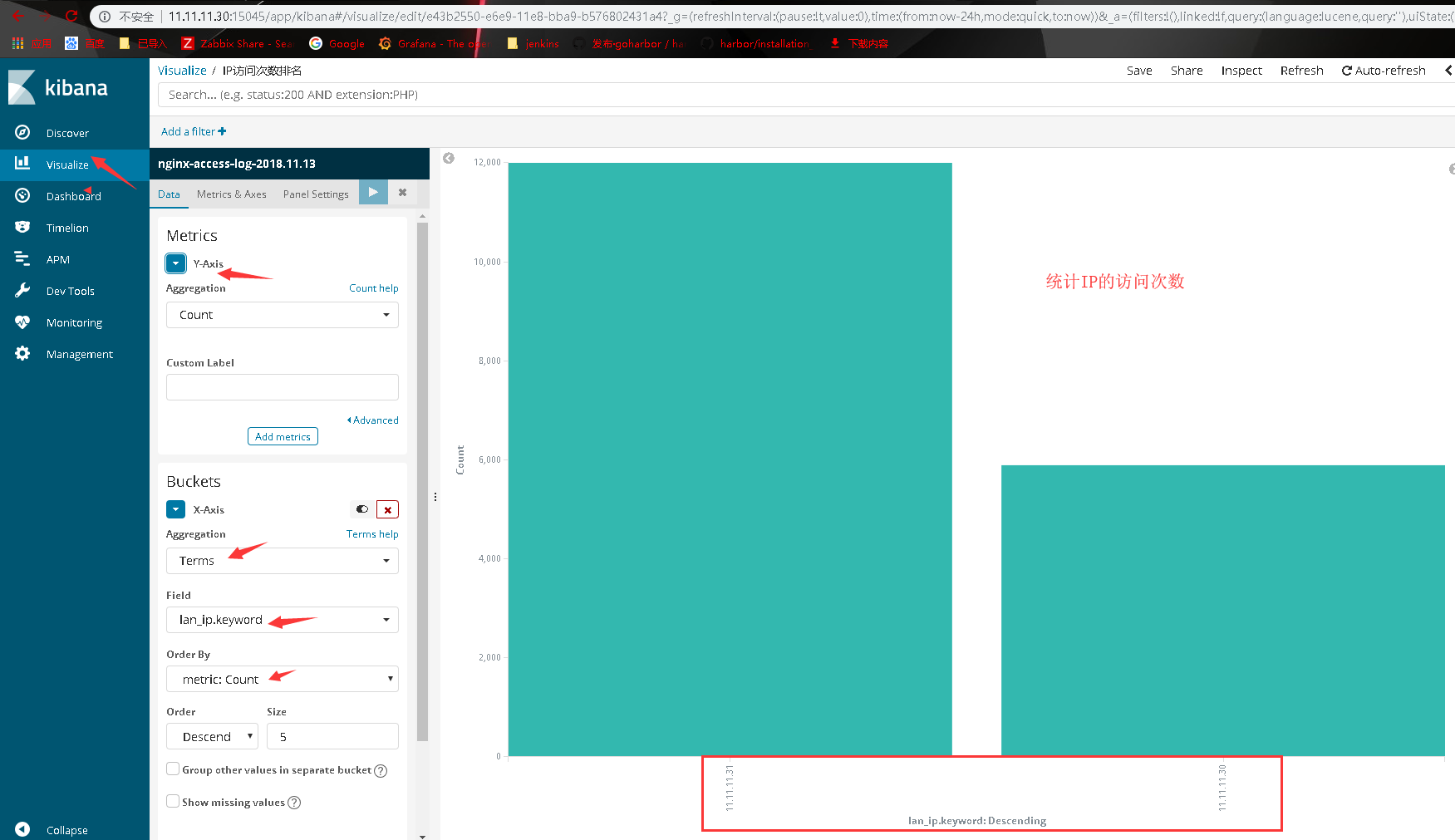

6.2.3、统计IP的访问次数

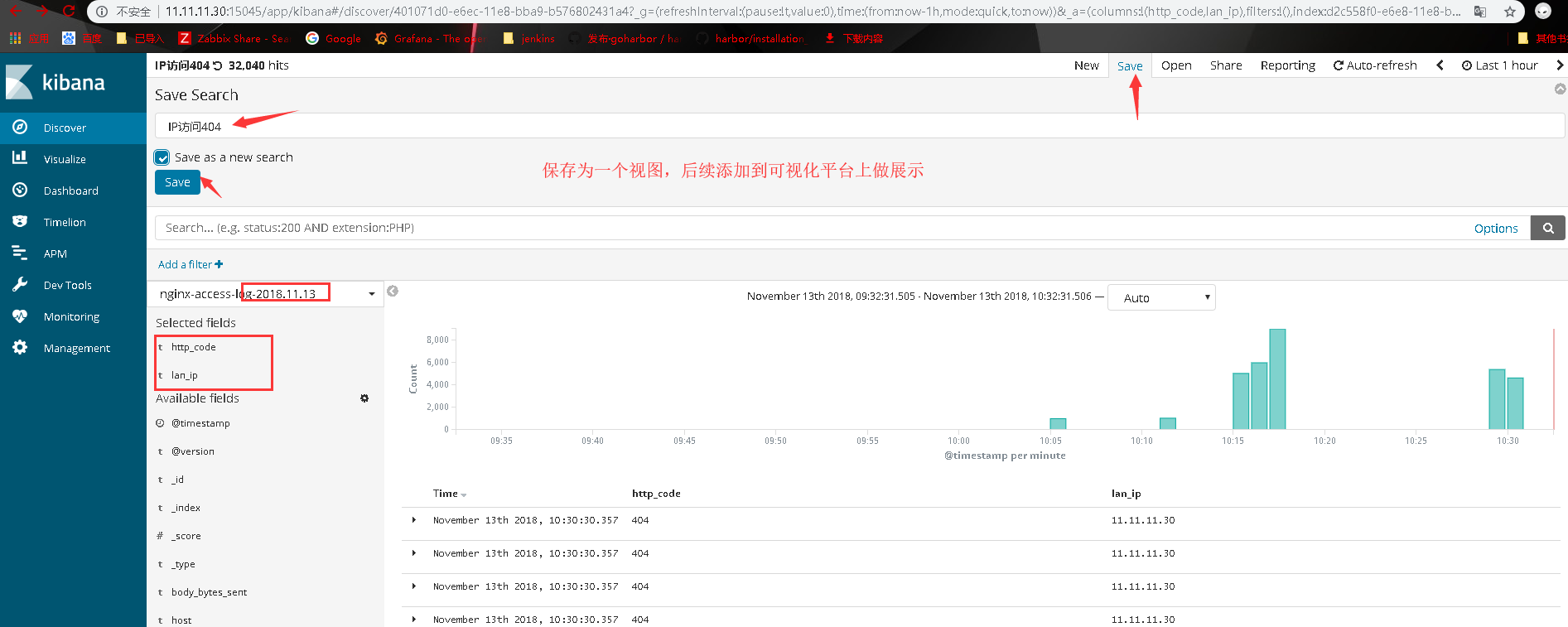

6.2.4、可视化平台展示

7、日志收集

官方参考地址:https://www.elastic.co/guide/en/logstash/current/plugins-inputs-syslog.html

7.1、rsyslog系统日志

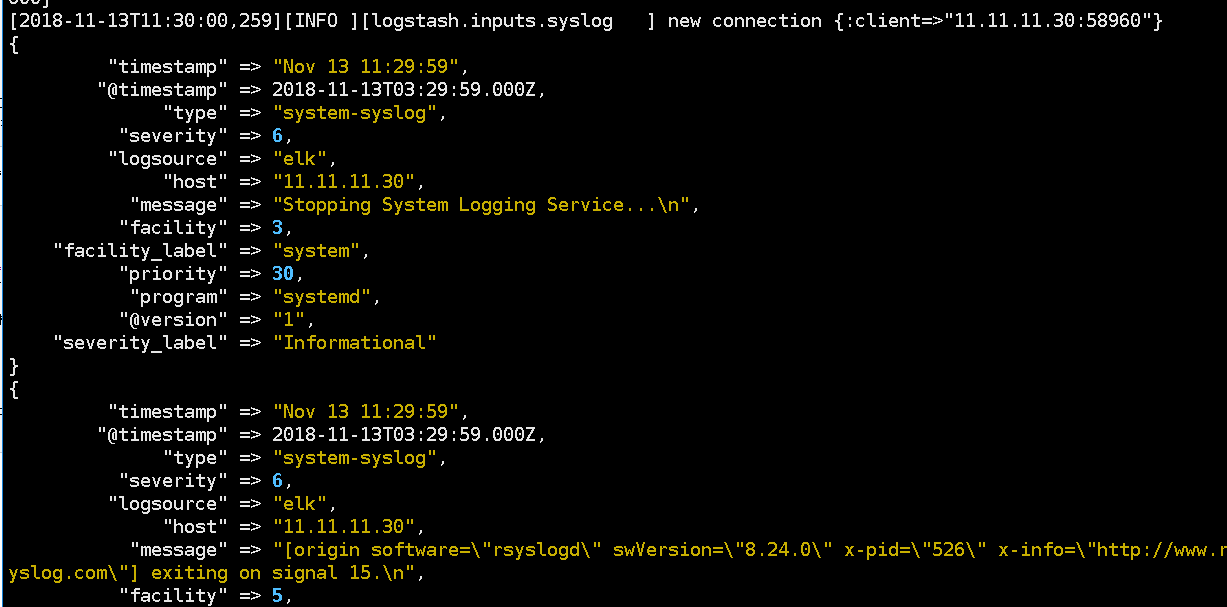

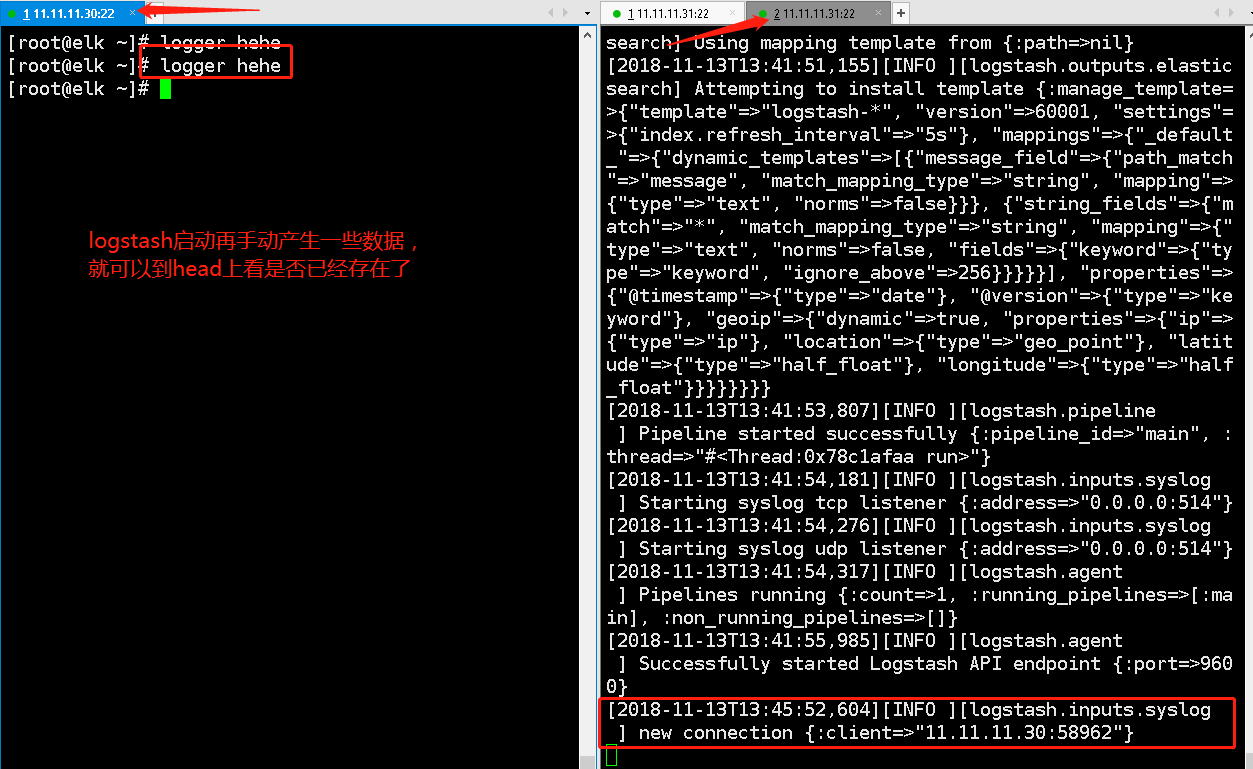

1. Test whether syslog messages can be collected (on 11.31)

[root@elk02 config]# cat syslog.conf
input {
  syslog {
    type => "system-syslog"
    port => 514
  }
}
output {
  stdout {
    codec => rubydebug
  }
}
[root@elk02 config]#

2. Run the configuration and check that the port is open (on 11.31)

[root@elk02 config]# pwd
/home/elk/Application/logstash/config
[root@elk02 config]# ../bin/logstash -f syslog.conf
Sending Logstash logs to /home/elk/Application/logstash/logs which is now configured via log4j2.properties
[2018-11-13T11:19:49,206][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified
[2018-11-13T11:19:53,753][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.4.0"}
[2018-11-13T11:20:08,746][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}
[2018-11-13T11:20:10,815][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#<Thread:0x64bb4375 run>"}
[2018-11-13T11:20:12,403][INFO ][logstash.inputs.syslog ] Starting syslog tcp listener {:address=>"0.0.0.0:514"}
[2018-11-13T11:20:12,499][INFO ][logstash.inputs.syslog ] Starting syslog udp listener {:address=>"0.0.0.0:514"}
[2018-11-13T11:20:12,749][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}
[2018-11-13T11:20:14,078][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
# Check that ports 9600 and 514 are listening
[root@elk02 config]# netstat -luntp

3. Edit /etc/rsyslog.conf (on 11.30)

# Append the forwarding rule at the end of the file
[root@elk ~]# tail -2 /etc/rsyslog.conf
*.* @@11.11.11.31:514
# ### end of the forwarding rule ###
[root@elk ~]#
Note: in rsyslog.conf some file paths are prefixed with a hyphen. It means new messages are buffered in memory first and written to the file later, instead of being synced after every message.
# Restart the rsyslog service
[root@elk ~]# systemctl restart rsyslog.service
# After the restart, new messages appear in the Logstash output on 11.31
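In the forwarding rule, `@@` selects TCP and a single `@` selects UDP. The sketch below builds the rule line from its parts so the difference is explicit. `forward_rule` is a hypothetical helper written for this illustration, not an rsyslog tool.

```shell
#!/bin/sh
# Hypothetical helper: print an rsyslog rule that forwards every facility and
# priority (*.*) to a remote collector.
# $1 = "tcp" or "udp", $2 = collector host, $3 = collector port.
forward_rule() {
    proto=$1
    host=$2
    port=$3
    case $proto in
        tcp) prefix='@@' ;;   # double @ means TCP
        udp) prefix='@' ;;    # single @ means UDP
        *)   echo "protocol must be tcp or udp" >&2; return 1 ;;
    esac
    echo "*.* ${prefix}${host}:${port}"
}
```

For example, `forward_rule tcp 11.11.11.31 514 >> /etc/rsyslog.conf` appends the rule used in this section. After restarting rsyslog, running `logger "forwarding test"` on 11.30 should produce an event in the Logstash output on 11.31.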

Check that the messages appear in real time.

7.1.1 Deploying syslog collection

[root@elk02 config]# cat syslog.conf
input {
  syslog {
    type => "system-syslog"
    port => 514
  }
}
output {
  elasticsearch {
    hosts => ["http://11.11.11.31:9200"]
    index => "system-syslog-%{+YYYY.MM}"
  }
}
[root@elk02 config]# pwd
/home/elk/Application/logstash/config
[root@elk02 config]#

[root@elk02 config]# ../bin/logstash -f syslog.conf

Note: afterwards, check that port 514 is open on 11.31 to receive the data.

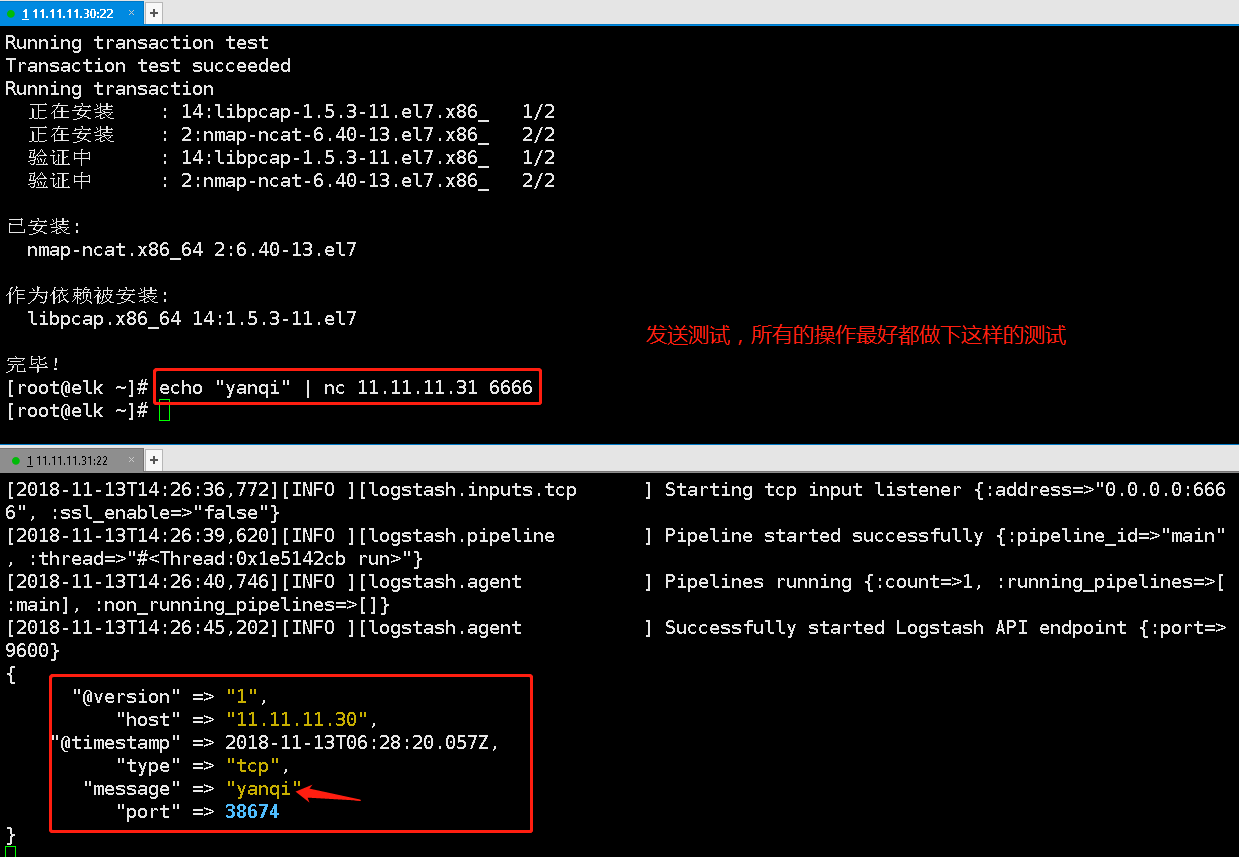

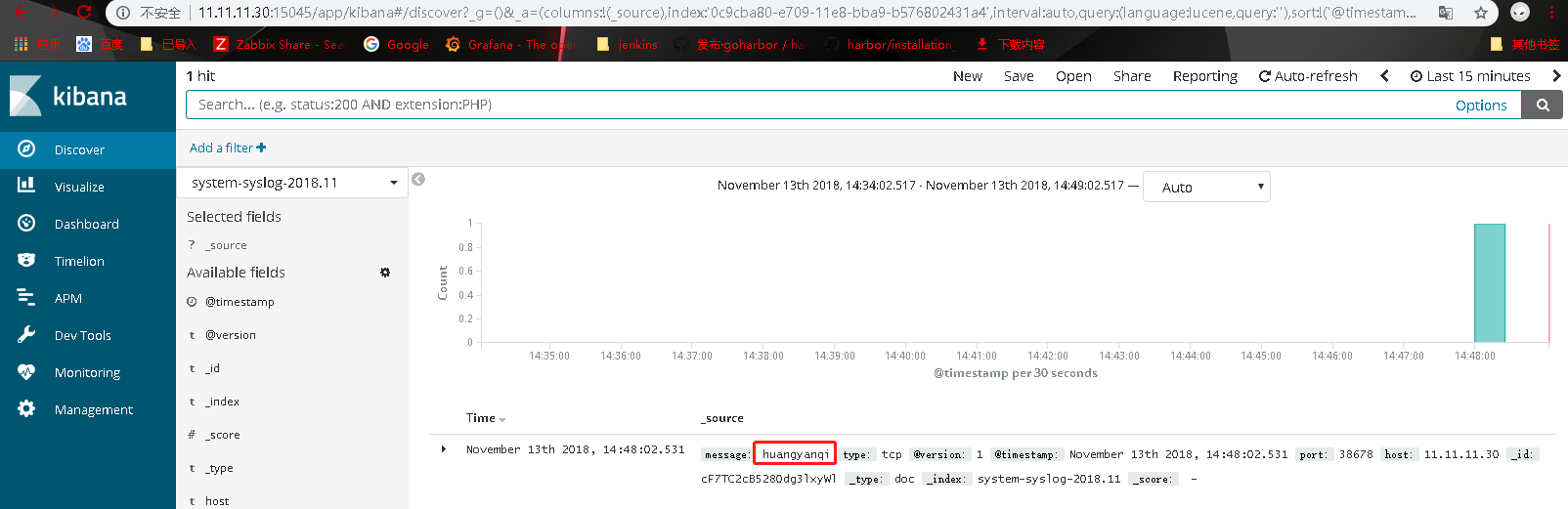

7.2 TCP logs

Test that it works

[root@elk02 config]# cat tcp.conf
input {
  tcp {
    type => "tcp"
    port => "6666"
    mode => "server"
  }
}
output {
  stdout {
    codec => rubydebug
  }
}
[root@elk02 config]# pwd
/home/elk/Application/logstash/config
[root@elk02 config]#

[root@elk02 config]# ../bin/logstash -f tcp.conf

Note: at this point, check whether port 6666 is listening; if it is, the input has started.

Write the events to Elasticsearch

[root@elk02 config]# cat tcp.conf
input {
  tcp {
    type => "tcp"
    port => "6666"
    mode => "server"
  }
}
output {
  elasticsearch {
    hosts => ["http://11.11.11.31:9200"]
    index => "system-syslog-%{+YYYY.MM}"
  }
}
[root@elk02 config]# pwd
/home/elk/Application/logstash/config
[root@elk02 config]#

8. grok (httpd logs)

Reference: https://www.elastic.co/guide/en/logstash/current/plugins-filters-grok.html

# Look at the httpd log format

[root@elk ~]# yum -y install httpd
[root@elk ~]# systemctl start httpd.service
[root@elk conf]# pwd
/etc/httpd/conf
[root@elk conf]# vim httpd.conf
LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combined
LogFormat "%h %l %u %t \"%r\" %>s %b" common

Note: the leading fields are the client IP address (%h), the remote logname, usually "-" (%l), the user (%u), and the timestamp (%t). The full list is at:

http://httpd.apache.org/docs/current/mod/mod_log_config.html

8.1 Location of the bundled grok patterns:

They are under /home/elk/Application/logstash/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns
[root@elk patterns]# ll
total 112
-rw-r--r--. 1 elk elk  1831 Aug 18 08:23 aws
-rw-r--r--. 1 elk elk  4831 Aug 18 08:23 bacula
-rw-r--r--. 1 elk elk   260 Aug 18 08:23 bind
-rw-r--r--. 1 elk elk  2154 Aug 18 08:23 bro
-rw-r--r--. 1 elk elk   879 Aug 18 08:23 exim
-rw-r--r--. 1 elk elk 10095 Aug 18 08:23 firewalls
-rw-r--r--. 1 elk elk  5338 Aug 18 08:23 grok-patterns
-rw-r--r--. 1 elk elk  3251 Aug 18 08:23 haproxy
-rw-r--r--. 1 elk elk   987 Aug 18 08:23 httpd
-rw-r--r--. 1 elk elk  1265 Aug 18 08:23 java
-rw-r--r--. 1 elk elk  1087 Aug 18 08:23 junos
-rw-r--r--. 1 elk elk  1037 Aug 18 08:23 linux-syslog
-rw-r--r--. 1 elk elk    74 Aug 18 08:23 maven
-rw-r--r--. 1 elk elk    49 Aug 18 08:23 mcollective
-rw-r--r--. 1 elk elk   190 Aug 18 08:23 mcollective-patterns
-rw-r--r--. 1 elk elk   614 Aug 18 08:23 mongodb
-rw-r--r--. 1 elk elk  9597 Aug 18 08:23 nagios
-rw-r--r--. 1 elk elk   142 Aug 18 08:23 postgresql
-rw-r--r--. 1 elk elk   845 Aug 18 08:23 rails
-rw-r--r--. 1 elk elk   224 Aug 18 08:23 redis
-rw-r--r--. 1 elk elk   188 Aug 18 08:23 ruby
-rw-r--r--. 1 elk elk   404 Aug 18 08:23 squid
[root@elk patterns]#

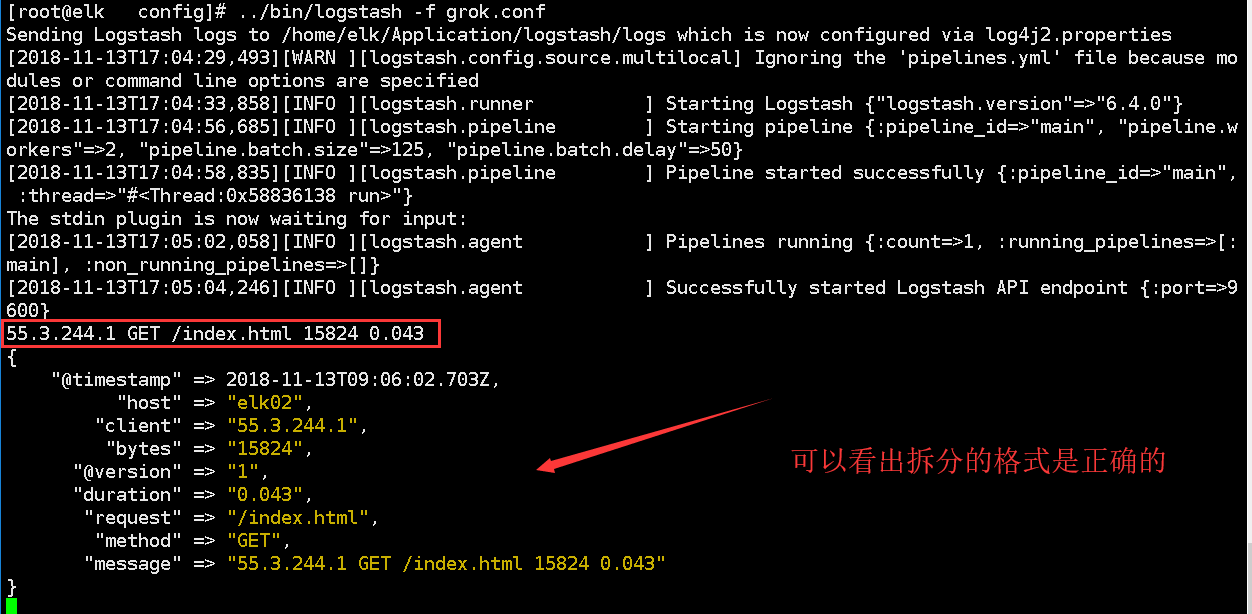

8.2 Test splitting a line into fields (see the httpd pattern file in the directory above and the official grok documentation)

This example follows the official grok documentation page.

[root@elk config]# pwd
/home/elk/Application/logstash/config
[root@elk config]# cat grok.conf
input {
  stdin {}
}
filter {
  grok {
    match => { "message" => "%{IP:client} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes} %{NUMBER:duration}" }
  }
}
output {
  stdout {
    codec => rubydebug
  }
}
[root@elk config]#

8.3 Testing against real logs

httpd uses its default log format here.

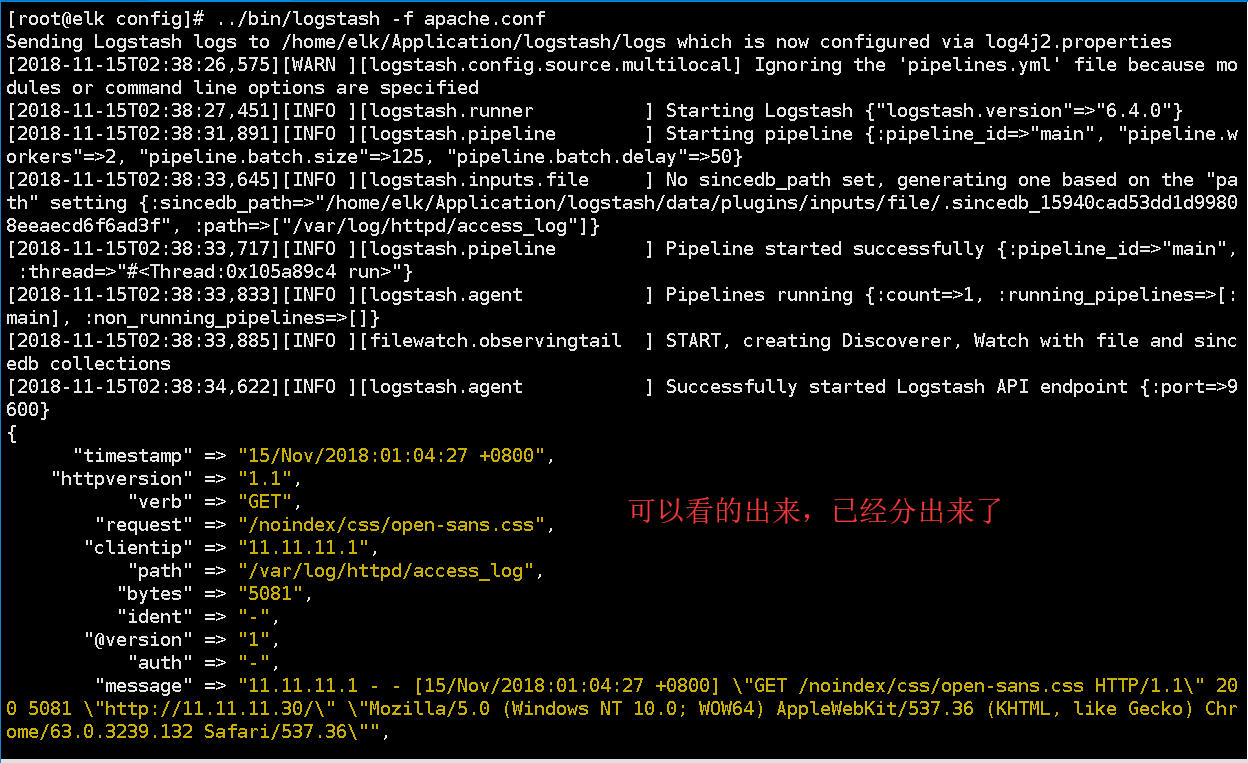

[root@elk config]# cat apache.conf
input {
  file {
    path => "/var/log/httpd/access_log"
    start_position => "beginning"
  }
}
filter {
  grok {
    match => { "message" => "%{HTTPD_COMMONLOG}" }
  }
}
output {
  stdout {
    codec => rubydebug
  }
}
[root@elk config]# pwd
/home/elk/Application/logstash/config
[root@elk config]#

Note: HTTPD_COMMONLOG is taken from the httpd pattern file. The pattern is already defined there, so it can be referenced by name.

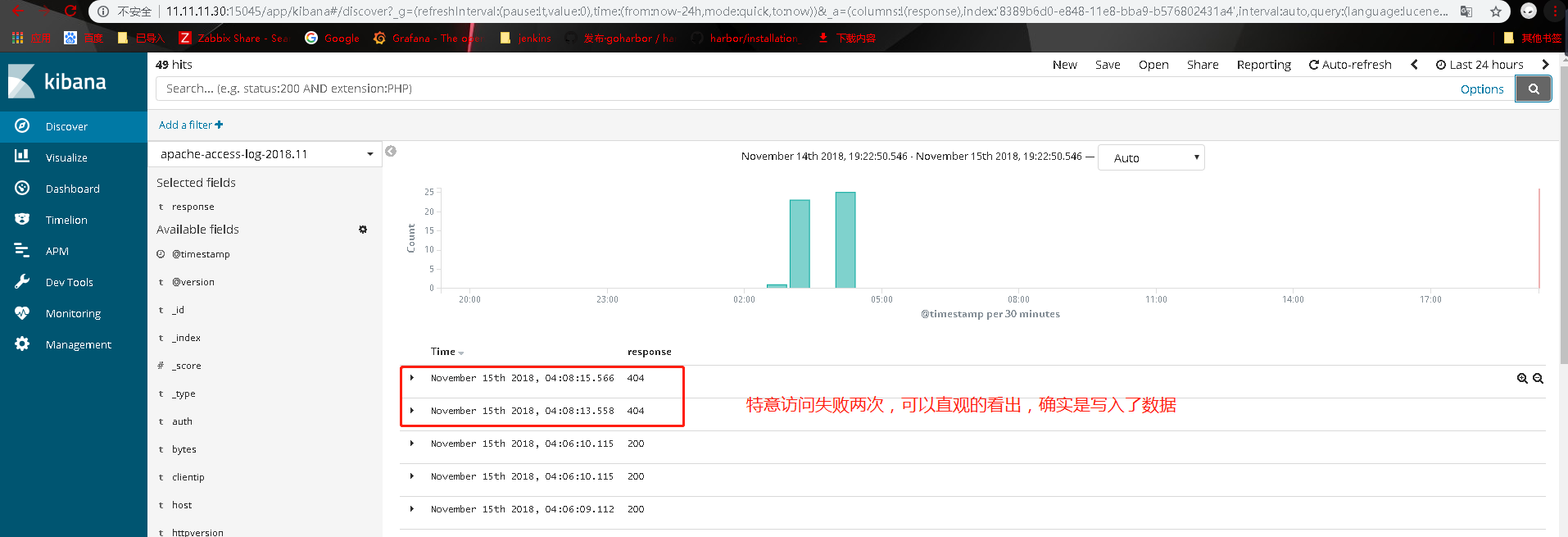

8.4 Final version (Apache)

[root@elk config]# cat apache.conf
input {
  file {
    path => "/var/log/httpd/access_log"
    start_position => "beginning"
    type => "apache-access-log"
  }
}
filter {
  grok {
    match => { "message" => "%{HTTPD_COMMONLOG}" }
  }
}
output {
  elasticsearch {
    hosts => ["http://11.11.11.30:9200"]
    index => "apache-access-log-%{+YYYY.MM}"
  }
}
[root@elk config]# pwd
/home/elk/Application/logstash/config
[root@elk config]#

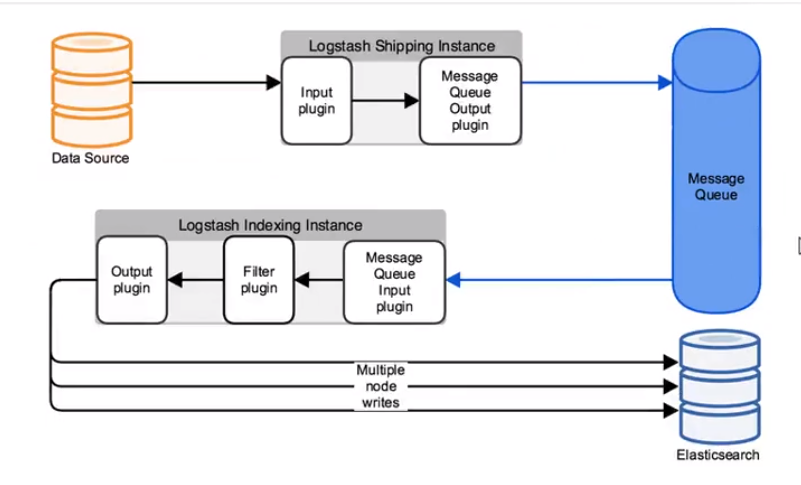

9. Message queue (loose coupling)

Official guide to redundant architectures: https://www.elastic.co/guide/en/logstash/2.3/deploying-and-scaling.html#deploying-scaling

Redis output plugin for the message queue: https://www.elastic.co/guide/en/logstash/current/plugins-outputs-redis.html

9.1 Test writing from Logstash to Redis

[root@elk02 ~]# yum -y install redis
[root@elk02 ~]# vim /etc/redis.conf
# Listen address
bind 11.11.11.31
# Run in the background
daemonize yes
systemctl start redis
systemctl enable redis
[root@elk02 config]# netstat -luntp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address     Foreign Address  State   PID/Program name
tcp        0      0 11.11.11.31:6379  0.0.0.0:*        LISTEN  130702/redis-server
tcp        0      0 0.0.0.0:22        0.0.0.0:*        LISTEN  871/sshd
tcp        0      0 127.0.0.1:25      0.0.0.0:*        LISTEN  1107/master
tcp6       0      0 11.11.11.31:9200  :::*             LISTEN  1309/java
tcp6       0      0 11.11.11.31:9300  :::*             LISTEN  1309/java
tcp6       0      0 :::22             :::*             LISTEN  871/sshd
tcp6       0      0 ::1:25            :::*             LISTEN  1107/master
udp        0      0 127.0.0.1:323     0.0.0.0:*                533/chronyd
udp6       0      0 ::1:323           :::*                     533/chronyd
[root@elk02 ~]# cd /home/elk/Application/logstash/config/
[root@elk02 config]# vim radis.conf
input {
  stdin {}
}
output {
  redis {
    host => "11.11.11.31"
    port => "6379"
    db => "6"
    data_type => "list"
    key => "demo"
  }
}
[root@elk02 config]# ../bin/logstash -f radis.conf
Sending Logstash logs to /home/elk/Application/logstash/logs which is now configured via log4j2.properties
[2018-11-14T19:05:53,506][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified
[2018-11-14T19:05:57,477][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.4.0"}
[2018-11-14T19:06:14,992][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}
[2018-11-14T19:06:16,366][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#<Thread:0x6c86dc5a run>"}
The stdin plugin is now waiting for input:
[2018-11-14T19:06:16,772][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}
[2018-11-14T19:06:18,719][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
heheh01
hehe02
[root@elk02 config]# redis-cli -h 11.11.11.31
11.11.11.31:6379> set name huangyanqi
OK
11.11.11.31:6379> info
# Keyspace
db0:keys=1,expires=0,avg_ttl=0
db6:keys=1,expires=0,avg_ttl=0
# Switch to db6
11.11.11.31:6379> SELECT 6
OK
# List the keys
11.11.11.31:6379[6]> keys *
1) "demo"
# Type of the demo key
11.11.11.31:6379[6]> type demo
list
# Number of messages in the list
11.11.11.31:6379[6]> llen demo
(integer) 2
# Show the last entry
11.11.11.31:6379[6]> lindex demo -1
"{"message":" heheh01","@timestamp":"2018-11-14T11:06:46.034Z","@version":"1","host":"elk02"}"
11.11.11.31:6379[6]>

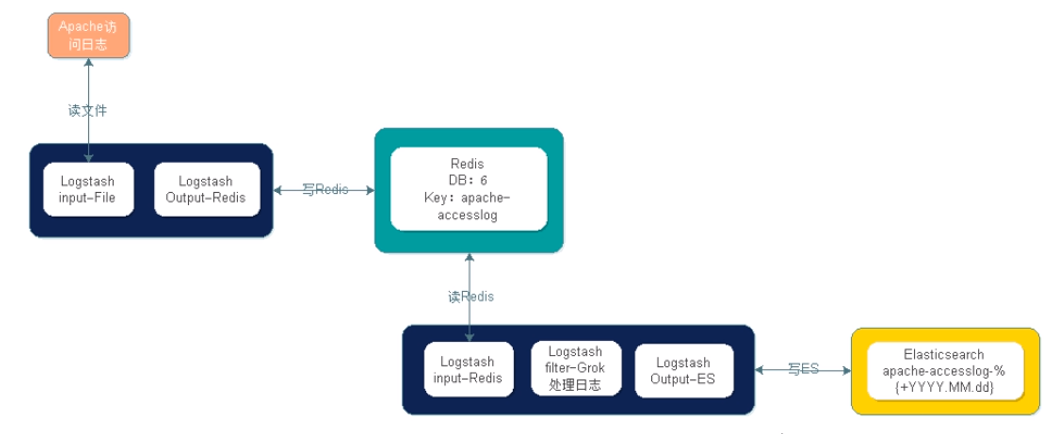

9.2 Writing Apache logs to Redis

Because httpd is already installed on elk (11.30), that host is used for this test; Redis is installed on elk02 (11.31).

[root@elk ~]# cd /home/elk/Application/logstash/config/
[root@elk config]# vim apache.conf
input {
  file {
    path => "/var/log/httpd/access_log"
    start_position => "beginning"
  }
}
output {
  redis {
    host => "11.11.11.31"
    port => "6379"
    db => "6"
    data_type => "list"
    key => "apache-access-log"
  }
}
# Now access the httpd service on 11.30 to generate new log entries, so that Redis receives them
# Then inspect Redis
# Log in to Redis
[root@elk02 ~]# redis-cli -h 11.11.11.31
# Switch to database 6
11.11.11.31:6379> select 6
OK
# List the keys; never list all keys on a production instance
11.11.11.31:6379[6]> keys *
1) "apache-access-log"
2) "demo"
# Type of the key
11.11.11.31:6379[6]> type apache-access-log
list
# Length of the key, that is, the number of messages
11.11.11.31:6379[6]> llen apache-access-log
(integer) 3
# Last entry of this key
11.11.11.31:6379[6]> lindex apache-access-log -1
"{"message":"11.11.11.1 - - [16/Nov/2018:04:45:07 +0800] \"GET / HTTP/1.1\" 200 688 \"-\" \"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36\"","@timestamp":"2018-11-15T20:45:08.705Z","@version":"1","path":"/var/log/httpd/access_log","host":"elk"}"
11.11.11.31:6379[6]>

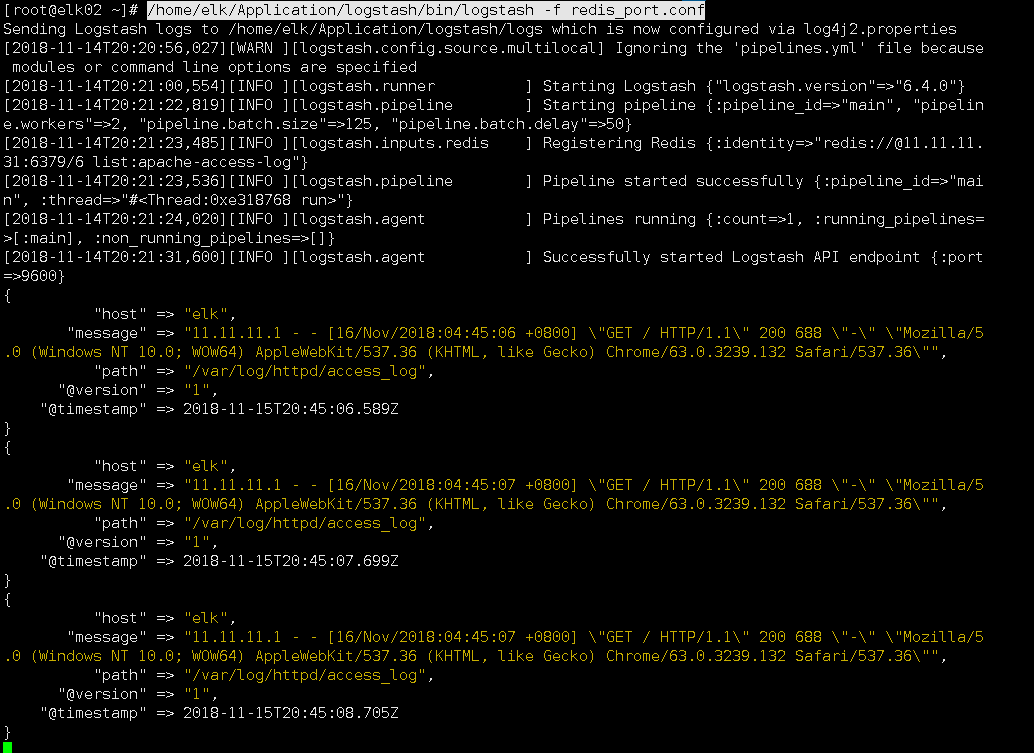

9.3 Reading the logs from Redis into Logstash

Redis input plugin for the message queue: https://www.elastic.co/guide/en/logstash/current/plugins-inputs-redis.html

[root@elk02 ~]# cat redis_port.conf
input {
  redis {
    host => "11.11.11.31"
    port => "6379"
    db => "6"
    data_type => "list"
    key => "apache-access-log"
  }
}
filter {
  grok {
    match => { "message" => "%{HTTPD_COMMONLOG}" }
  }
}
output {
  stdout {
    codec => rubydebug
  }
}
[root@elk02 ~]#
[root@elk02 ~]# /home/elk/Application/logstash/bin/logstash -f redis_port.conf

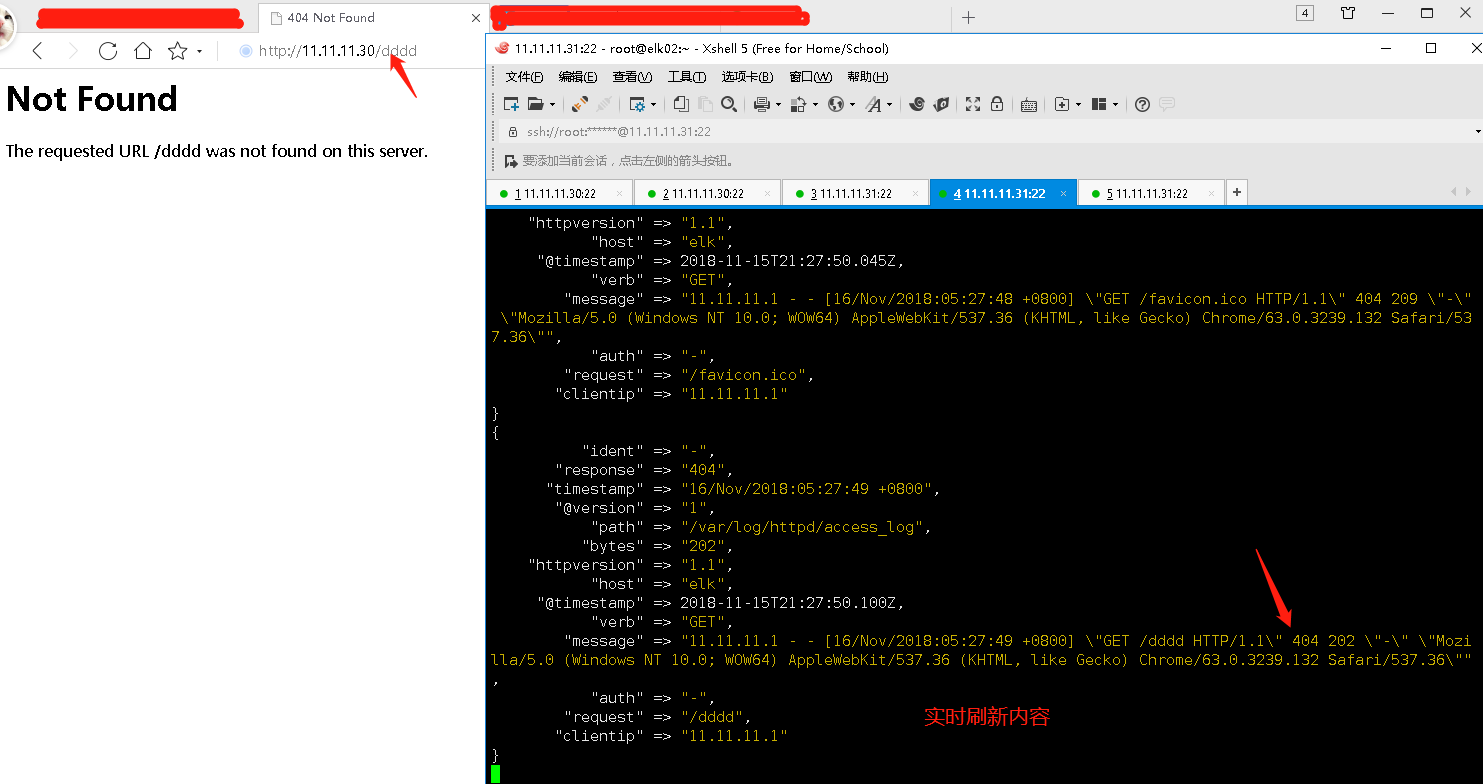

9.4 End-to-end test

elk (11.30) runs httpd, Logstash, Elasticsearch, Kibana, and head.

elk02 (11.31) runs Logstash, Elasticsearch, and Redis.

Flow: httpd runs on 11.30, where Logstash collects its log and ships it to Redis on 11.31; Logstash on 11.31 then reads from Redis and writes to Elasticsearch on 11.30.

[root@elk config]# cat apache.conf
input {
  file {
    path => "/var/log/httpd/access_log"
    start_position => "beginning"
  }
}
output {
  redis {
    host => "11.11.11.31"
    port => "6379"
    db => "6"
    data_type => "list"
    key => "apache-access-log"
  }
}
[root@elk config]# pwd
/home/elk/Application/logstash/config
[root@elk config]#
[root@elk config]# ../bin/logstash -f apache.conf
[root@elk02 ~]# cat redis_port.conf
input {
  redis {
    host => "11.11.11.31"
    port => "6379"
    db => "6"
    data_type => "list"
    key => "apache-access-log"
  }
}
output {
  elasticsearch {
    hosts => ["http://11.11.11.30:9200"]
    index => "access01-log-%{+YYYY.MM.dd}"
  }
}
[root@elk02 ~]#
[root@elk02 ~]# /home/elk/Application/logstash/bin/logstash -f redis_port.conf

10. Production recommendations and setup

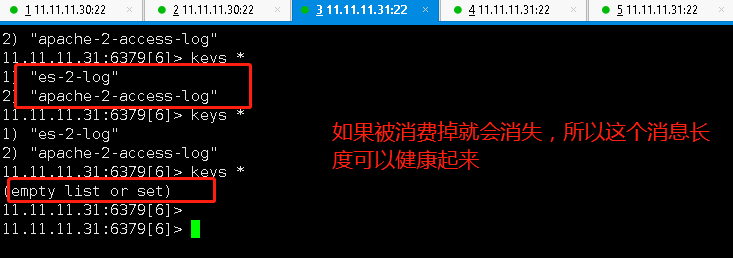

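Requirements analysis:
Access logs: Apache, nginx, and Tomcat access logs; file input, then filter
Error logs: error logs and Java logs; collect them directly, Java exceptions need multiline handling
System logs: /var/log/*; syslog, rsyslog
Runtime logs: written by the application; file input, JSON format
Network logs: firewall, switch, and router logs; syslog

Standardization:
Where logs are stored (/data/logs/)
Which format they use (JSON)
Naming convention (three directories: access_log, error_log, runtime_log)
How logs are rotated (daily or hourly; access and error logs are rotated by crontab)
rsync all raw text logs elsewhere, then delete logs older than three days

Tooling:
Decide how Logstash collects each kind of log
If a Redis list serves as the message queue of the ELK stack, monitor the length of every list key with llen key_name; choose the threshold for your environment, for example alert when a list exceeds 100,000 entries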
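The queue-length check described above can be sketched as a small shell function. This is an illustration under stated assumptions: `queue_status` is a hypothetical helper, the 100,000 default mirrors the figure in the text, and the list length is passed in as an argument so that the function itself needs no Redis connection.

```shell
#!/bin/sh
# Hypothetical helper: compare a Redis list length against an alert threshold.
# $1 = key name, $2 = current list length, $3 = threshold (default 100000).
# Prints a status line and returns non-zero when the threshold is exceeded.
queue_status() {
    key=$1
    length=$2
    threshold=${3:-100000}
    if [ "$length" -gt "$threshold" ]; then
        echo "ALERT $key length=$length threshold=$threshold"
        return 1
    fi
    echo "OK $key length=$length threshold=$threshold"
}
```

A cron job could feed it the live value, for example `queue_status apache-access-log "$(redis-cli -h 11.11.11.31 -n 6 llen apache-access-log)"`, and send a notification when the exit status is non-zero. A growing list usually means the Logstash instance reading from Redis has stopped or cannot keep up.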

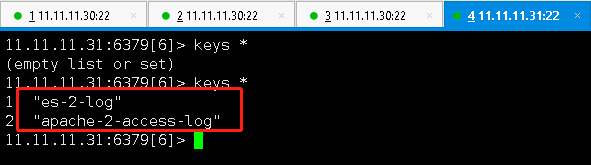

# Configure 11.11.11.30 to ship its logs to Redis on 11.31.

[root@elk config]# pwd
/home/elk/Application/logstash/config
[root@elk config]# cat apache1.conf
input {
  file {
    path => "/var/log/httpd/access_log"
    start_position => "beginning"
    type => "apache-2-access-log"
  }
  file {
    path => "/home/elk/Log/elasticsearch/my-es.log"
    type => "es-2-log"
    start_position => "beginning"
    codec => multiline {
      pattern => "^\["
      negate => true
      what => "previous"
    }
  }
}
filter {
  grok {
    match => { "message" => "%{HTTPD_COMMONLOG}" }
  }
}
output {
  if [type] == "apache-2-access-log" {
    redis {
      host => "11.11.11.31"
      port => "6379"
      db => "6"
      data_type => "list"
      key => "apache-2-access-log"
    }
  }
  if [type] == "es-2-log" {
    redis {
      host => "11.11.11.31"
      port => "6379"
      db => "6"
      data_type => "list"
      key => "es-2-log"
    }
  }
}
[root@elk config]#

[root@elk config]# ../bin/logstash -f apache1.conf

[root@elk02 ~]# cat redis_port.conf
input {
  syslog {
    type => "system-2-syslog"
    port => 514
  }
  redis {
    type => "apache-2-access-log"
    host => "11.11.11.31"
    port => "6379"
    db => "6"
    data_type => "list"
    key => "apache-2-access-log"
  }
  redis {
    type => "es-2-log"
    host => "11.11.11.31"
    port => "6379"
    db => "6"
    data_type => "list"
    key => "es-2-log"
  }
}
filter {
  if [type] == "apache-2-access-log" {
    grok {
      match => { "message" => "%{HTTPD_COMMONLOG}" }
    }
  }
}
output {
  if [type] == "apache-2-access-log" {
    elasticsearch {
      hosts => ["http://11.11.11.30:9200"]
      index => "apache-2-access-log-%{+YYYY.MM}"
    }
  }
  if [type] == "es-2-log" {
    elasticsearch {
      hosts => ["http://11.11.11.30:9200"]
      index => "es-2-log-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "system-2-syslog" {
    elasticsearch {
      hosts => ["http://11.11.11.30:9200"]
      index => "system-2-syslog-%{+YYYY.MM}"
    }
  }
}
[root@elk02 ~]#

Appendix:

1. Plugins installed after starting Elasticsearch in section 3.1 (procedure)

https://www.cnblogs.com/Onlywjy/p/Elasticsearch.html

1.1 Install the head plugin, after ./elasticsearch has been started

- Download the head plugin

wget -O /usr/local/src/master.zip https://github.com/mobz/elasticsearch-head/archive/master.zip

- Install Node.js

wget https://npm.taobao.org/mirrors/node/latest-v4.x/node-v4.4.7-linux-x64.tar.gz

Node.js download location: http://nodejs.org/dist/v4.4.7/

tar -zxvf node-v4.4.7-linux-x64.tar.gz

- Configure the environment variables by adding the following to /etc/profile

#set node environment

export NODE_HOME=/usr/local/src/node-v4.4.7-linux-x64
export PATH=$PATH:$NODE_HOME/bin
export NODE_PATH=$NODE_HOME/lib/node_modules

Then run source /etc/profile

- Install grunt

grunt is a Node.js-based build tool that handles packaging, compression, testing, running tasks, and so on. The head plugin is started through grunt.

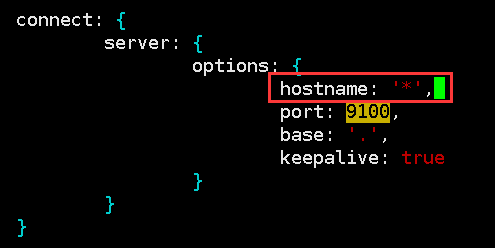

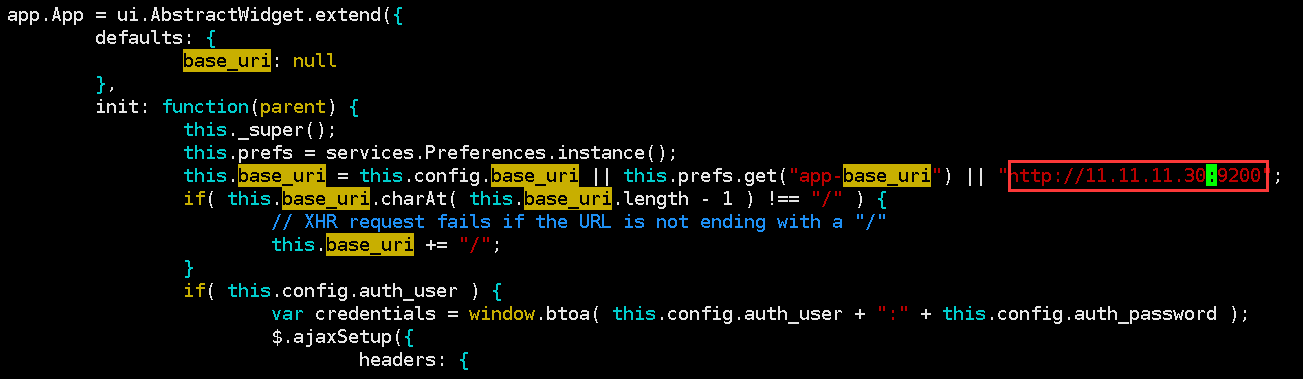

unzip master.zip
[root@elk src]# cd elasticsearch-head-master/
# Install grunt-cli; a node_modules directory is created afterwards
[root@elk elasticsearch-head-master]# npm install -g grunt-cli
npm WARN engine atob@2.1.2: wanted: {"node":">= 4.5.0"} (current: {"node":"4.4.7","npm":"2.15.8"})
/usr/local/src/node-v4.4.7-linux-x64/bin/grunt -> /usr/local/src/node-v4.4.7-linux-x64/lib/node_modules/grunt-cli/bin/grunt
grunt-cli@1.3.2 /usr/local/src/node-v4.4.7-linux-x64/lib/node_modules/grunt-cli
├── grunt-known-options@1.1.1
├── interpret@1.1.0
├── v8flags@3.1.1 (homedir-polyfill@1.0.1)
├── nopt@4.0.1 (abbrev@1.1.1, osenv@0.1.5)
└── liftoff@2.5.0 (flagged-respawn@1.0.0, extend@3.0.2, rechoir@0.6.2, is-plain-object@2.0.4, object.map@1.0.1, resolve@1.8.1, fined@1.1.0, findup-sync@2.0.0)
[root@elk elasticsearch-head-master]# grunt -version
grunt-cli v1.3.2
grunt v1.0.1

Modify the head plugin source
# Change the server listen address in Gruntfile.js

# Change the connection address

[root@elk elasticsearch-head-master]# vim _site/app.js

# Start head at boot

[root@elk ~]# cat es_head_run.sh

# PATH entries must be directories, so add the Node.js bin directory rather than the grunt binary itself
PATH=$PATH:$HOME/bin:/usr/local/src/node-v4.4.7-linux-x64/bin

export PATH

cd /usr/local/src/elasticsearch-head-master/

nohup npm run start >/usr/local/src/elasticsearch-head-master/nohup.out 2>&1 &

[root@elk ~]# vim /etc/rc.d/rc.local

/usr/bin/sh /root/es_head_run.sh 2>&1

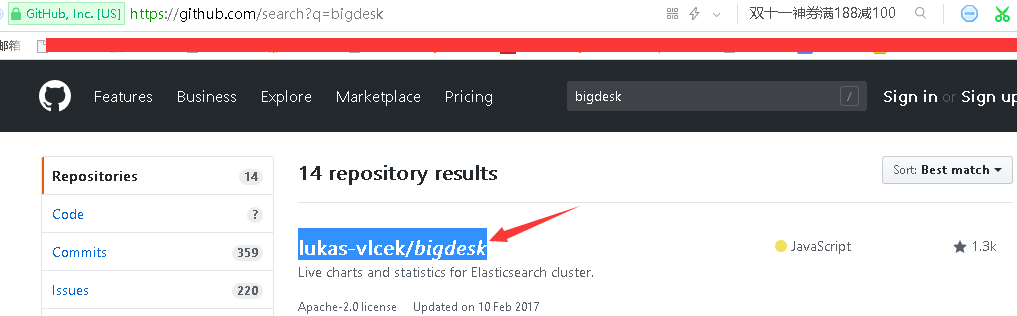

1. Installing plugins offline: https://www.elastic.co/guide/en/marvel/current/installing-marvel.html#offline-installation

2. Finding other plugins: kopf is another option (check its version compatibility first)

[root@elk elasticsearch]# ./bin/elasticsearch-plugin install lukas-vlcek/bigdesk

2. Checking cluster health (status)

[elk@elk ~]$ curl -XGET 'http://11.11.11.30:9200/_cluster/health?pretty=true'
{
  "cluster_name" : "my-es",
  "status" : "green",
  "timed_out" : false,
  "number_of_nodes" : 2,
  "number_of_data_nodes" : 2,
  "active_primary_shards" : 0,
  "active_shards" : 0,
  "relocating_shards" : 0,
  "initializing_shards" : 0,
  "unassigned_shards" : 0,
  "delayed_unassigned_shards" : 0,
  "number_of_pending_tasks" : 0,
  "number_of_in_flight_fetch" : 0,
  "task_max_waiting_in_queue_millis" : 0,
  "active_shards_percent_as_number" : 100.0
}
[elk@elk ~]$

A more convenient health check is the cat API: https://www.elastic.co/guide/en/elasticsearch/guide/current/_cat_api.html

[elk@elk ~]$ curl -XGET 'http://11.11.11.30:9200/_cat/health?pretty=true'
1541662928 15:42:08 my-es green 2 2 0 0 0 0 0 0 - 100.0%
[elk@elk ~]$
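Because the `_cat/health` output is a single whitespace-separated line, it is easy to use in scripts. The sketch below extracts the status column; `health_status` and `is_green` are hypothetical helper names, and the column position (fourth field) is taken from the sample output above.

```shell
#!/bin/sh
# Hypothetical helper: read _cat/health output on stdin and print the cluster
# status, which is the fourth column (epoch, time, cluster name, status, ...).
health_status() {
    awk 'NF >= 4 { print $4; exit }'
}

# Hypothetical helper: succeed only when the given status is "green".
is_green() {
    [ "$1" = "green" ]
}
```

A monitoring script might run `status=$(curl -s 'http://11.11.11.30:9200/_cat/health' | health_status)` and raise an alert when `is_green "$status"` fails, since yellow means some replica shards are unassigned and red means some primary shards are.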

3. Further Logstash tests

Reference: https://www.elastic.co/guide/en/logstash/current/plugins-outputs-elasticsearch.html

Input plugins: https://www.elastic.co/guide/en/logstash/current/input-plugins.html

Output plugins: https://www.elastic.co/guide/en/logstash/current/output-plugins.html

The elasticsearch output plugin is used here: https://www.elastic.co/guide/en/logstash/current/plugins-outputs-elasticsearch.html

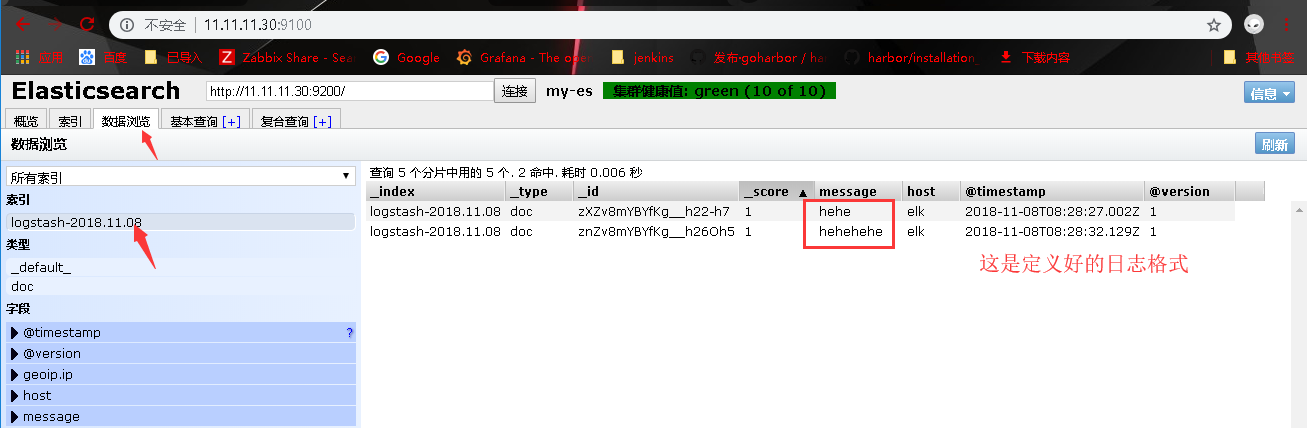

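[root@elk ~]# /home/elk/Application/logstash/bin/logstash -e 'input { stdin{} } output { elasticsearch { hosts => ["11.11.11.30:9200"] index => "logstash-%{+YYYY.MM.dd}" } }'
... a lot of startup output appears here and can be ignored ...
[2018-11-08T16:28:05,537][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
hehe
hehehehe
# The data has now been written to Elasticsearch and can be viewed in head

4. Recommended practices for a production cluster

1. Run a Kibana instance on every Elasticsearch node
2. Point each Kibana at its local Elasticsearch
3. Put nginx in front for load balancing and authentication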

5. Kafka message queue (to be continued)

https://www.unixhot.com/article/61

https://www.infoq.cn/

6. Another tool that can replace head

Download: https://github.com/lmenezes/cerebro/tags

Download it to the host, extract it, then run:
[root@elk cerebro-0.8.1]# ./bin/cerebro -Dhttp.port=1234 -Dhttp.address=11.11.11.30