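White-box monitoring: monitoring based on data exposed from inside a system, such as per-topic metrics or the size of Redis keys. Metrics exposed from the inside are called white-box monitoring. It is mainly concerned with causes.

Black-box monitoring: what is observed from the user's point of view, for example a website that cannot be opened or that opens slowly. It is mainly concerned with symptoms, that is, problems that are happening right now and the alerts they trigger.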

1. Deploy the exporter

Official black-box monitoring resources:

https://github.com/prometheus/blackbox_exporter

https://github.com/prometheus/blackbox_exporter/blob/master/blackbox.yml

https://grafana.com/grafana/dashboards/5345

# 1. Create the ConfigMap; it is mounted into the container as the exporter's config file
apiVersion: v1
data:
  blackbox.yml: |-
    modules:
      http_2xx:
        prober: http
      http_post_2xx:
        prober: http
        http:
          method: POST
      tcp_connect:
        prober: tcp
      pop3s_banner:
        prober: tcp
        tcp:
          query_response:
          - expect: "^+OK"
          tls: true
          tls_config:
            insecure_skip_verify: false
      ssh_banner:
        prober: tcp
        tcp:
          query_response:
          - expect: "^SSH-2.0-"
      irc_banner:
        prober: tcp
        tcp:
          query_response:
          - send: "NICK prober"
          - send: "USER prober prober prober :prober"
          - expect: "PING :([^ ]+)"
            send: "PONG ${1}"
          - expect: "^:[^ ]+ 001"
      icmp:
        prober: icmp
kind: ConfigMap
metadata:
  name: blackbox.conf
  namespace: monitoring

---

# 2. Create the Service and Deployment
---
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: monitoring
spec:
  ports:
  - name: container-1-web-1
    port: 9115
    protocol: TCP
    targetPort: 9115
  selector:
    app: blackbox-exporter
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}

---

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blackbox-exporter
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: blackbox-exporter
    spec:
      affinity: {}
      containers:
      - args:
        - --config.file=/mnt/blackbox.yml
        env:
        - name: TZ
          value: Asia/Shanghai
        - name: LANG
          value: C.UTF-8
        image: prom/blackbox-exporter:master
        imagePullPolicy: IfNotPresent
        lifecycle: {}
        name: blackbox-exporter
        ports:
        - containerPort: 9115
          name: web
          protocol: TCP
        resources:
          limits:
            cpu: 260m
            memory: 395Mi
          requests:
            cpu: 10m
            memory: 10Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities: {}
          privileged: false
          procMount: Default
          readOnlyRootFilesystem: false
          runAsNonRoot: false
        volumeMounts:
        - mountPath: /usr/share/zoneinfo/Asia/Shanghai
          name: tz-config
        - mountPath: /etc/localtime
          name: tz-config
        - mountPath: /etc/timezone
          name: timezone
        - mountPath: /mnt
          name: config
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      securityContext: {}
      volumes:
      - hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
        name: tz-config
      - hostPath:
          path: /etc/timezone
          type: ""
        name: timezone
      - configMap:
          name: blackbox.conf
        name: config

# Check the pod status
[root@k8s-master01 ~]# kubectl get pod -n monitoring blackbox-exporter-78bb74fd9d-z5xdq
NAME                                 READY   STATUS    RESTARTS   AGE
blackbox-exporter-78bb74fd9d-z5xdq   1/1     Running   0          67s

2. Traditional monitoring with additional scrape configs

# Test that the exporter works
# Look up the Service IP
[root@k8s-master01 ~]# kubectl get svc -n monitoring blackbox-exporter
NAME                TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)    AGE
blackbox-exporter   ClusterIP   10.100.9.18   <none>        9115/TCP   30m
# curl the exporter's Service
[root@k8s-master01 ~]# curl "http://10.100.9.18:9115/probe?target=baidu.com&module=http_2xx"
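The /probe endpoint answers in the Prometheus text format, and the line to look for is `probe_success` (1 means the probe succeeded, 0 means it failed). The following is a minimal sketch of checking that value from a script. The function name `probe_succeeded` and the sample text are hypothetical and written by hand for illustration; they are not captured exporter output.

```shell
# Exit 0 when the metrics text on stdin reports "probe_success 1", else exit 1.
probe_succeeded() {
  awk '$1 == "probe_success" { ok = ($2 == 1) } END { exit ok ? 0 : 1 }'
}

# Hand-written sample in Prometheus text format (an assumption, not real output).
sample_ok='# TYPE probe_success gauge
probe_success 1'

if printf '%s\n' "$sample_ok" | probe_succeeded; then
  echo "probe ok"
else
  echo "probe failed"
fi

# Against the live exporter, the same check could be piped from curl, e.g.:
#   curl -s "http://10.100.9.18:9115/probe?target=baidu.com&module=http_2xx" | probe_succeeded
```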

2.1 Add a monitoring job as a test

[root@k8s-master01 prometheus-down]# vim prometheus-additional.yaml
- job_name: "blackbox"
  metrics_path: /probe
  params:
    module: [http_2xx]  # Look for a HTTP 200 response.
  static_configs:
    - targets:
      - http://prometheus.io
      - https://prometheus.io
      - http://www.baidu.com
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: blackbox-exporter:9115  # Service name of the exporter
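The three relabel rules are easier to follow when traced for a single target. The sketch below only imitates them with shell variables; the function name `relabel` is hypothetical, the real work happens inside Prometheus, and Prometheus additionally URL-encodes the `target` parameter, which this simplified version does not do.

```shell
# Trace the three relabel steps for one entry of static_configs.targets.
relabel() {
  address="$1"                      # initial __address__ is the listed target
  param_target="$address"           # rule 1: copy __address__ into __param_target
  instance="$param_target"          # rule 2: copy __param_target into instance
  address="blackbox-exporter:9115"  # rule 3: point __address__ at the exporter Service
  printf 'scrape=http://%s/probe?module=http_2xx&target=%s instance=%s\n' \
    "$address" "$param_target" "$instance"
}

relabel "http://prometheus.io"
```

In other words, Prometheus scrapes the exporter rather than the website itself, and the website URL survives only as the `target` query parameter and the `instance` label.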

# Then you will need to make a secret out of this configuration.
# Note: a bare --dry-run is deprecated since kubectl 1.18; on newer versions use --dry-run=client.
[root@k8s-master01 prometheus-down]# kubectl create secret generic additional-scrape-configs --from-file=prometheus-additional.yaml --dry-run -oyaml > additional-scrape-configs.yaml

# View the Secret
[root@k8s-master01 prometheus-down]# cat additional-scrape-configs.yaml
apiVersion: v1
data:
  prometheus-additional.yaml: LSBqb2JfbmFtZTogImJsYWNrYm94IgogIG1ldHJpY3NfcGF0aDogL3Byb2JlCiAgcGFyYW1zOgogICAgbW9kdWxlOiBbaHR0cF8yeHhdICAjIExvb2sgZm9yIGEgSFRUUCAyMDAgcmVzcG9uc2UuCiAgc3RhdGljX2NvbmZpZ3M6CiAgICAtIHRhcmdldHM6CiAgICAgIC0gaHR0cDovL3Byb21ldGhldXMuaW8gICAgIyBUYXJnZXQgdG8gcHJvYmUgd2l0aCBodHRwLgogICAgICAtIGh0dHBzOi8vcHJvbWV0aGV1cy5pbyAgICMgVGFyZ2V0IHRvIHByb2JlIHdpdGggaHR0cHMuCiAgICAgIC0gaHR0cDovL3d3dy5iYWlkdS5jb20gICAgIyBUYXJnZXQgdG8gcHJvYmUgd2l0aCBodHRwIG9uIHBvcnQgODA4MC4KICByZWxhYmVsX2NvbmZpZ3M6CiAgICAtIHNvdXJjZV9sYWJlbHM6IFtfX2FkZHJlc3NfX10KICAgICAgdGFyZ2V0X2xhYmVsOiBfX3BhcmFtX3RhcmdldAogICAgLSBzb3VyY2VfbGFiZWxzOiBbX19wYXJhbV90YXJnZXRdCiAgICAgIHRhcmdldF9sYWJlbDogaW5zdGFuY2UKICAgIC0gdGFyZ2V0X2xhYmVsOiBfX2FkZHJlc3NfXwogICAgICByZXBsYWNlbWVudDogYmxhY2tib3gtZXhwb3J0ZXI6OTExNSAgIyBleHBvcnRlcueahHN2YyBuYW1lCg==
kind: Secret
metadata:
  name: additional-scrape-configs
# Create the Secret
[root@k8s-master01 prometheus-down]# kubectl apply -f additional-scrape-configs.yaml -n monitoring
secret/additional-scrape-configs created
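The value under `data` is only base64-encoded, so it can be decoded to confirm what Prometheus will actually load. As a small sketch, the snippet below decodes just the first line of the value shown above; the string is truncated after that line and re-padded with `=` so that it is valid base64 on its own, and the variable name `encoded` is only illustrative.

```shell
# First line of the Secret's data value, truncated and re-padded (see note above).
encoded='LSBqb2JfbmFtZTogImJsYWNrYm94Igo='

# Prints the decoded first line of prometheus-additional.yaml.
printf '%s' "$encoded" | base64 -d

# To decode the whole value from the cluster, something like this should work
# (the dot inside the key name has to be escaped in jsonpath):
#   kubectl get secret additional-scrape-configs -n monitoring \
#     -o jsonpath='{.data.prometheus-additional\.yaml}' | base64 -d
```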

# Go to the manifests directory and edit the Prometheus resource
[root@k8s-master01 manifests]# vim prometheus-prometheus.yaml
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  name: prometheus
  labels:
    prometheus: prometheus
spec:
  replicas: 2
  ... add the following 3 lines
  additionalScrapeConfigs:
    name: additional-scrape-configs
    key: prometheus-additional.yaml
  ...

# Replace the resource with the file just modified
[root@k8s-master01 manifests]# kubectl replace -f prometheus-prometheus.yaml -n monitoring
# Manually delete the pods so that they are recreated
[root@k8s-master01 manifests]# kubectl delete po prometheus-k8s-0 prometheus-k8s-1 -n monitoring

查看是否成功加载配置:

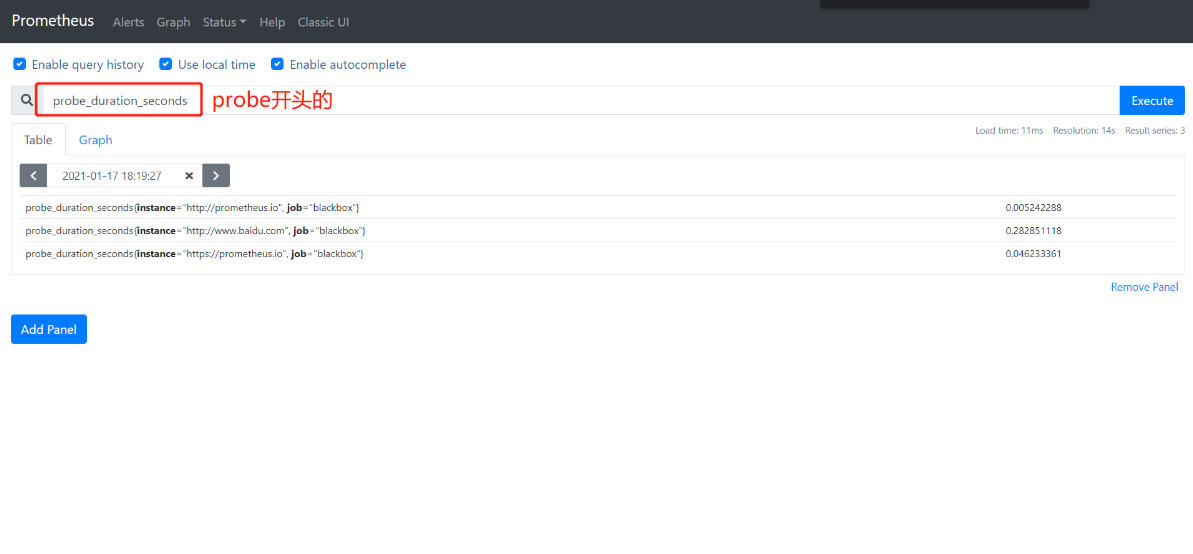

数据查看: