Hadoop 2.8.5:

https://hadoop.apache.org/docs/r2.8.5/

Alibaba Cloud docs:

In EMR, this can be done with the Ranger component:

https://help.aliyun.com/document_detail/66410.html?spm=a2c4g.11186623.3.4.1a685b78iZGjgK

4. Migrating AWS S3 to Alibaba Cloud OSS

https://help.aliyun.com/document_detail/95130.html?spm=a2c4g.11186623.2.8.73cf48fayabm5m#concept-igj-s12-qfb

5. Migrating UFile to Alibaba Cloud OSS

The online migration service does not cover UFile for now. Use the tool UFile provides to download the files to a NAS or to local disk first:

https://docs.ucloud.cn/storage_cdn/ufile/tools/tools/tools_file

Then use the online migration service to move the data from the local NAS to OSS:

https://help.aliyun.com/document_detail/98476.html?spm=a2c4g.11174283.6.617.480251ccL3tHG2

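UFile should also be compatible with the S3 API by now, so it is worth first trying the S3 migration approach to see whether it can migrate UFile data.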

1. Writing binlog to HDFS

https://help.aliyun.com/document_detail/71539.html?spm=5176.11065259.1996646101.searchclickresult.701e754d68cQgN&aly_as=So7-sfoz

2. EMR Kafka to OSS

https://yq.aliyun.com/articles/65307

3. Data migration between Kafka clusters

https://help.aliyun.com/document_detail/127685.html?spm=5176.11065259.1996646101.searchclickresult.701e754d68cQgN&aly_as=-artxIw9

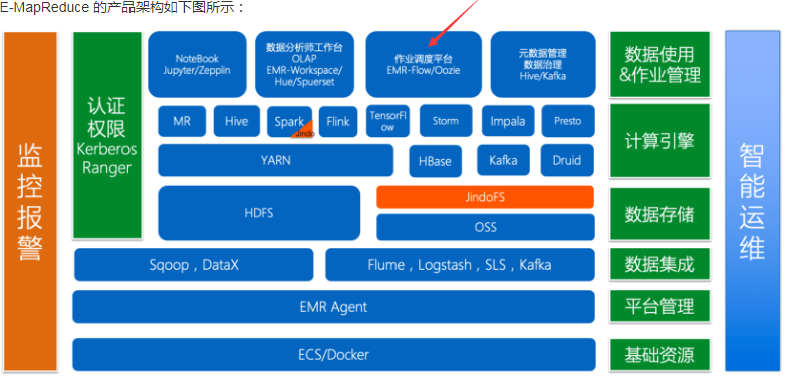

EMR architecture:

EMR directories:

ln -s /opt/apps/hive-current /usr/lib/hive-current

/usr/lib/hadoop-current/share/hadoop/common/hadoop-common-2.8.5.jar

/usr/lib/hadoop-current/share/hadoop/common/lib/hadoop-auth-2.8.5.jar

Environment variables already set up by the system:

echo $JAVA_HOME          # /usr/lib/jvm/java-1.8.0

echo $HADOOP_HOME        # /usr/lib/hadoop-current

echo $HADOOP_CONF_DIR    # /etc/ecm/hadoop-conf

echo $HADOOP_LOG_DIR

echo $YARN_LOG_DIR       # /var/log/hadoop-yarn

echo $HIVE_HOME          # /usr/lib/hive-current

echo $HIVE_CONF_DIR      # /etc/ecm/hive-conf

echo $PIG_HOME           # /usr/lib/pig-current

echo $PIG_CONF_DIR       # /etc/ecm/pig-conf
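
Rather than echoing each variable by hand, the whole list can be checked in one pass. The following is a minimal sketch, assuming the variables are exported in the login environment; the function name `show_emr_env` is made up for illustration and is not part of EMR.

```shell
#!/bin/sh
# Print each EMR-related environment variable listed in these notes,
# flagging any that are missing from the current environment.
# show_emr_env is a hypothetical helper name, not an EMR tool.
show_emr_env() {
    for name in JAVA_HOME HADOOP_HOME HADOOP_CONF_DIR YARN_LOG_DIR \
                HIVE_HOME HIVE_CONF_DIR PIG_HOME PIG_CONF_DIR; do
        # printenv exits non-zero when the variable is not in the environment
        if value=$(printenv "$name"); then
            printf '%s=%s\n' "$name" "$value"
        else
            printf '%s is not set\n' "$name"
        fi
    done
}

show_emr_env
```

A variable reported as "not set" usually means the shell was started without the cluster's profile scripts, so it is worth checking from a fresh login shell before assuming the node is misconfigured.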

# Symlinks that have been set up

The configuration directories are under /etc/ecm

For example, core-site.xml is in $HADOOP_CONF_DIR:

/etc/ecm/hadoop-conf/core-site.xml

but the actual path is: /etc/ecm/hadoop-conf-2.8.5-1.4.0/core-site.xml
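
To confirm where an unversioned path really points, the symlink can be resolved directly. This is a small sketch, assuming GNU coreutils `readlink -f` is available on the node; `real_path` is a hypothetical wrapper name, and the fallback path is the one recorded in these notes.

```shell
#!/bin/sh
# Resolve a path through every symlink to its real location.
# Assumes GNU coreutils readlink (-f follows the full symlink chain).
# real_path is a hypothetical helper name used only for this sketch.
real_path() {
    readlink -f "$1"
}

# Example from the notes: the unversioned conf path versus its versioned target.
conf_file="${HADOOP_CONF_DIR:-/etc/ecm/hadoop-conf}/core-site.xml"
if [ -e "$conf_file" ]; then
    echo "$conf_file -> $(real_path "$conf_file")"
else
    echo "$conf_file not found on this host"
fi
```

The same check works for the application directories, for example `real_path /usr/lib/hive-current`.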

Another example:

vi /etc/ecm/hadoop-conf/fair-scheduler.xml

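# The configuration files in this directory record some of the cluster's settings, the jars that get invoked, and similar information: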

/usr/local/emr/emr-bin/conf