See:

Notes

HDFS tuning (old value → new value):

dfs.socket.timeout: 3000 → 480000 (ms)

dfs.datanode.socket.write.timeout: 3000 → 480000 (ms)

dfs.replication: replica count changed to 2

dfs.blocksize: 16M → 128M, then changed back to 16M (there are many small files of a few hundred KB)

dfs.datanode.max.transfer.threads: 4096 → 8192

YARN scheduler (yarn.resourcemanager.scheduler.class): org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler, left unchanged

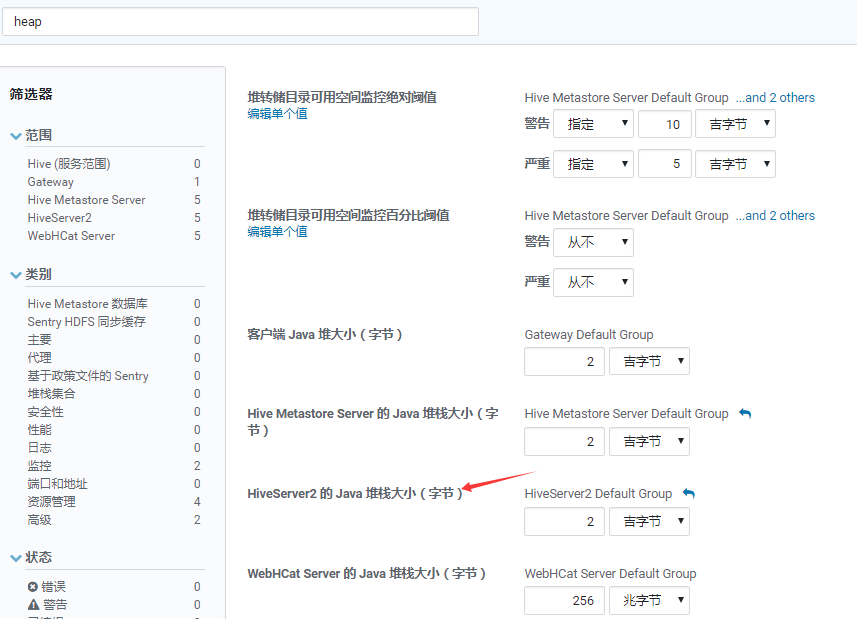
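As a minimal sketch, the new HDFS values above can be written as a plain Hadoop `hdfs-site.xml` fragment. This assumes a vanilla Hadoop layout. On CDH these settings are normally changed through Cloudera Manager rather than by editing XML. The helper name `to_hadoop_xml` is hypothetical. The block size is given in bytes (16M = 16 × 1024 × 1024).

```python
import xml.etree.ElementTree as ET

# New values taken from the HDFS notes above.
# Assumption: plain Hadoop hdfs-site.xml format (CDH usually manages this via Cloudera Manager).
HDFS_SETTINGS = {
    "dfs.socket.timeout": "480000",                 # milliseconds
    "dfs.datanode.socket.write.timeout": "480000",  # milliseconds
    "dfs.replication": "2",
    "dfs.blocksize": str(16 * 1024 * 1024),         # 16M expressed in bytes
    "dfs.datanode.max.transfer.threads": "8192",
}


def to_hadoop_xml(settings: dict[str, str]) -> str:
    """Render name/value pairs as a Hadoop <configuration> document (hypothetical helper)."""
    root = ET.Element("configuration")
    for name, value in settings.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    ET.indent(root)  # pretty-print; requires Python 3.9+
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(to_hadoop_xml(HDFS_SETTINGS))
```

Each `<property>` element carries one `<name>`/`<value>` pair, which is the layout Hadoop's `*-site.xml` files use.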

Heap sizes:

NameNode: 738 → 6G, then adjusted to 5G

SecondaryNameNode (2NN): 948 → 6G, then adjusted to 4G

YARN tuning:

yarn.nodemanager.resource.memory-mb (container memory): 4G → 10G; could be lowered to 6G; node1 is set separately to 1G

NodeManager Java heap size (bytes): node1 is set separately to 1G

NodeManager Group 1: 975M → 4G

NodeManager Default Group: 975 → 4G

ResourceManager Java heap size: 975 → 8G; could be lowered to 6G

ApplicationMaster Java maximum heap: 738M → 2G

ApplicationMaster memory: 1G → 2G

yarn.nodemanager.resource.cpu-vcores: node1 8; node2-4 16

yarn.scheduler.maximum-allocation-mb: 3G → 6G

yarn.scheduler.maximum-allocation-vcores: 32 → 16
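To sanity-check how the per-node resources relate to the maximum container size, here is a simplified sketch. It assumes node1 has 1G and 8 vcores, and that node2-4 have 10G and 16 vcores each, as read from the notes above. It also assumes both memory and vcores constrain placement. It ignores rounding to `yarn.scheduler.minimum-allocation-mb` and the choice of resource calculator, so treat it as a rough estimate. The names `Node`, `containers_per_node` and `nodes_fitting_largest_container` are hypothetical.

```python
from dataclasses import dataclass

GIB_MB = 1024  # yarn.*-mb settings are expressed in MB


@dataclass
class Node:
    name: str
    memory_mb: int  # yarn.nodemanager.resource.memory-mb
    vcores: int     # yarn.nodemanager.resource.cpu-vcores


# Assumed cluster layout, read from the notes above.
NODES = [Node("node1", 1 * GIB_MB, 8)] + [
    Node(f"node{i}", 10 * GIB_MB, 16) for i in range(2, 5)
]
MAX_ALLOC_MB = 6 * GIB_MB  # yarn.scheduler.maximum-allocation-mb
MAX_ALLOC_VCORES = 16      # yarn.scheduler.maximum-allocation-vcores


def containers_per_node(node: Node, container_mb: int, container_vcores: int) -> int:
    """How many containers of the given size fit, limited by memory and by vcores."""
    return min(node.memory_mb // container_mb, node.vcores // container_vcores)


def nodes_fitting_largest_container(nodes: list[Node]) -> list[str]:
    """Names of nodes that can host at least one maximum-size container."""
    return [
        n.name
        for n in nodes
        if containers_per_node(n, MAX_ALLOC_MB, MAX_ALLOC_VCORES) >= 1
    ]


if __name__ == "__main__":
    print(nodes_fitting_largest_container(NODES))
```

Under these assumptions, node1 cannot host a maximum-size (6G, 16-vcore) container, while each of node2-4 can host one.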

Warning reported for the ApplicationMaster settings: "ApplicationMaster Java Maximum Heap Size (2147483648) to ApplicationMaster Memory (1073741824) ratio is above 0.85, possibly requiring the use of virtual memory."
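The warning compares the heap size with the container memory: 2147483648 / 1073741824 = 2.0, well above the 0.85 threshold it quotes. The small sketch below reproduces that arithmetic. It also computes the largest heap that stays under the threshold for a given ApplicationMaster memory. The 0.85 limit is taken from the warning text, and the function names are hypothetical. With memory raised to 2G, a 2G heap still gives a ratio of 1.0. To stay under the limit, the heap would need to be at most about 1.7 GiB.

```python
GIB = 1024 ** 3
HEAP_TO_MEMORY_LIMIT = 0.85  # threshold quoted in the warning above


def heap_ratio(heap_bytes: int, memory_bytes: int) -> float:
    """Java max heap divided by container memory, as checked by the warning."""
    return heap_bytes / memory_bytes


def max_heap_bytes(memory_bytes: int, limit: float = HEAP_TO_MEMORY_LIMIT) -> int:
    """Largest heap (rounded down to whole bytes) that keeps the ratio at or below the limit."""
    return int(memory_bytes * limit)


if __name__ == "__main__":
    for heap, memory in [(2 * GIB, 1 * GIB), (2 * GIB, 2 * GIB)]:
        r = heap_ratio(heap, memory)
        print(f"heap={heap} memory={memory} ratio={r:.2f} warn={r > HEAP_TO_MEMORY_LIMIT}")
    print("max heap for 2G memory:", max_heap_bytes(2 * GIB))
```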

Hive tuning:

https://www.cloudera.com/documentation/enterprise/6/6.2/topics/cdh_ig_hive_troubleshooting.html#d13479e316

Heap: the recommended size is 4G