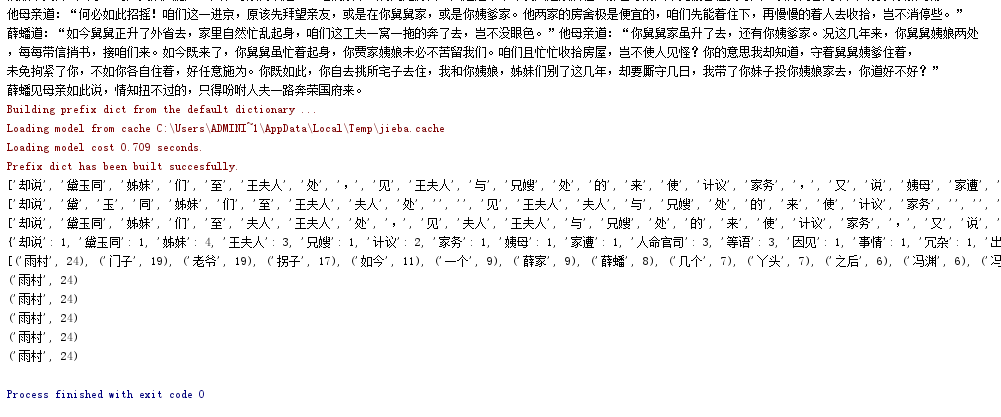

    # Read the whole file; "with" closes it automatically
    with open('novel.txt', 'r', encoding='utf-8') as fo:
        text = fo.read()  # named "text" so the built-in str is not shadowed
    print(text)

    text = text.lower()  # convert everything to lowercase
    sep = '.,:;?!'  # punctuation to replace with spaces
    for a in sep:
        text = text.replace(a, ' ')
    print(text)

    strlist = text.split()  # split into a list of words
    print(len(strlist), strlist)
    strset = set(strlist)  # distinct words (a set, not a list)
    print(len(strset), strset)

    se = {'a', 'the', 'and', 'we', 'you', 'of', 'si', 's', 'ter', 'to'}  # stop words with no real meaning
    strsete = strset - se
    print(strsete)

    strdict = {}  # word -> count, built from the words left after removing stop words
    for word in strsete:
        strdict[word] = strlist.count(word)
    print(len(strdict), strdict)

    wordlist = list(strdict.items())
    wordlist.sort(key=lambda x: x[1], reverse=True)  # sort by count with a lambda, highest first
    print(wordlist)
    for item in wordlist[:20]:  # print the TOP 20 (a slice also works when there are fewer than 20 words)
        print(item)
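
The same pipeline can be condensed with the standard library. This is a minimal sketch, assuming the text is already loaded into a string and is plain ASCII English. It uses `re.findall` to pull out words (so quotes, dashes and other punctuation outside `.,:;?!` are dropped too) and `collections.Counter` to count every word in one pass, instead of calling `list.count` once per distinct word. The names `top_words` and `STOP_WORDS` are hypothetical, and the stop-word set is a trimmed, illustrative version of the one above.

```python
import re
from collections import Counter

# Illustrative stop-word set (an assumption; adjust it for the actual text).
STOP_WORDS = {'a', 'the', 'and', 'we', 'you', 'of', 'to'}


def top_words(text, n=20, stop_words=STOP_WORDS):
    """Return the n most frequent non-stop words in text as (word, count) pairs."""
    # Lowercase, then take runs of letters, allowing inner apostrophes ("it's", "don't").
    words = re.findall(r"[a-z]+(?:'[a-z]+)*", text.lower())
    # Counter tallies every word in a single pass over the list.
    counts = Counter(w for w in words if w not in stop_words)
    # most_common sorts by count, highest first; ties keep first-seen order,
    # and it simply returns fewer pairs when there are fewer than n words.
    return counts.most_common(n)


sample = "The cat sat. The cat ran! A dog sat, and the dog barked?"
print(top_words(sample, 3))  # [('cat', 2), ('sat', 2), ('dog', 2)]
```

For the novel, `top_words(text, 20)` plays the role of the sort-and-print loop at the end of the script.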

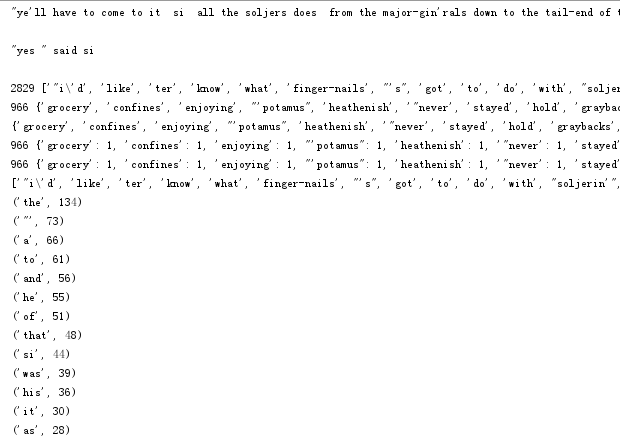

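    import jieba  # import the jieba package

    with open('小说.txt', 'r', encoding='utf-8') as fo:
        ci = fo.read()
    print(ci)
    # str.replace returns a new string, so the result must be assigned back;
    # remove both full-width (Chinese) and ASCII commas
    ci = ci.replace('，', '').replace(',', '')
    print(ci)

    # Precise mode: splits the text as accurately as possible; suited to text analysis
    print(list(jieba.cut(ci)))
    # Full mode: scans out every possible word; fast, but does not resolve ambiguity
    print(list(jieba.cut(ci, cut_all=True)))
    # Search-engine mode: on top of precise mode, splits long words again to improve recall; suited to search indexing
    print(list(jieba.cut_for_search(ci)))

    cilist = jieba.lcut(ci)  # precise mode returned as a list (public lcut instead of the private _lcut)
    cidict = {}  # dictionary counting how many times each word occurs
    for word in cilist:
        if len(word) == 1:  # skip single characters (mostly punctuation and particles)
            continue
        cidict[word] = cidict.get(word, 0) + 1
    print(cidict)

    cilist = list(cidict.items())  # list of (word, count) tuples
    cilist.sort(key=lambda x: x[1], reverse=True)  # sort by frequency, highest first
    print(cilist)
    for item in cilist[:5]:  # print the five most frequent words
        print(item)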
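
The counting half of this script does not depend on jieba itself, only on the list of tokens it returns. This is a minimal sketch, assuming the tokens come from `jieba.lcut(text)` or any other segmenter; `count_tokens` is a hypothetical helper. It keeps the script's rule of skipping single-character tokens, but checks the stripped length so that multi-character whitespace tokens such as `'\r\n'` (which jieba may return as one token) are skipped as well. The token list below is hand-segmented for illustration, so the sketch runs without jieba installed.

```python
from collections import Counter


def count_tokens(tokens, n=5, min_len=2):
    """Return the n most common tokens that have at least min_len characters."""
    # Single characters are mostly punctuation or particles (，, 。, 的); stripping
    # first also drops whitespace tokens such as '\r\n' that a segmenter may emit.
    counts = Counter(t for t in tokens if len(t.strip()) >= min_len)
    return counts.most_common(n)


# Hand-segmented sample standing in for jieba.lcut(text) output.
tokens = ['我们', '在', '北京', '，', '我们', '喜欢', '北京', '的', '天气', '。', '我们']
print(count_tokens(tokens, 3))  # [('我们', 3), ('北京', 2), ('喜欢', 1)]
```

With jieba installed, `count_tokens(jieba.lcut(ci), 5)` should list the five most frequent multi-character words of `ci`.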