Configuring and starting Hadoop involves many tedious manual steps, so I wrote a few scripts to cut down on the work.

Script for running one command on several hosts at once

Example

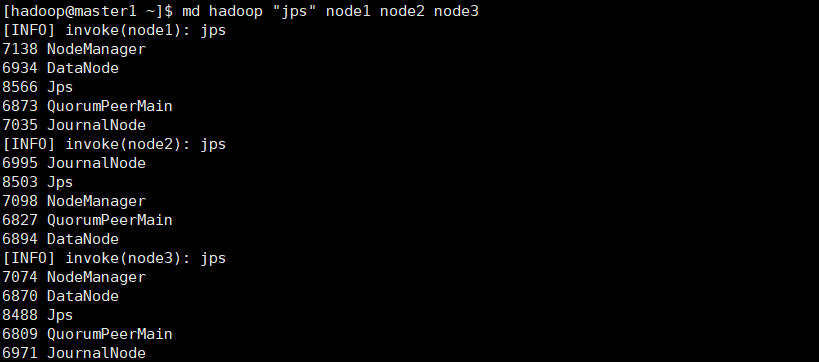

-- Check the jps processes on hosts node1, node2 and node3 as user hadoop

Result

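```bash
#!/bin/bash
# opts: run the same command as <user> on several machines over ssh.
# Escape rules for the <opt> argument:
#   _  stands for /      __ stands for _      %  stands for "
if [ "$1" = "--help" ] || [ $# -lt 3 ]; then
  echo "Usage: opts <user> <opt> <machine1> [<machine2>...]"
  echo "  please use \"_\" instead of \"/\""
  echo "  please use \"__\" instead of \"_\""
  echo "  please use \"%\" instead of double quotation marks"
  exit 1
fi

user=$1
opt=$2
shift 2               # what remains in "$@" is the list of machines

opt=${opt//_//}       # _  -> /  (a doubled __ temporarily becomes //)
opt=${opt//%/\"}      # %  -> "
opt=${opt//\/\//_}    # // -> _  (restores the underscores written as __)

for s in "$@"; do
  echo "[INFO] invoke($s): $opt"
  # source /etc/profile so that jps, hadoop, zkServer.sh etc. are on the PATH
  ssh "${user}@${s}" "source /etc/profile; $opt"
done
```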
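The escape rules are easy to get wrong, so here is a small sketch that applies the same three substitutions as opts to a single string without calling ssh. `decode_opt` is a hypothetical helper name, not part of the original scripts; it is only meant for checking what a command will look like on the remote side before sending it.

```bash
#!/bin/bash
# decode_opt: hypothetical helper applying the opts escape rules to one string.
#   _  -> /      __ -> _      %  -> "
decode_opt(){
  local s=$1
  s=${s//_//}       # every _ becomes /, so __ temporarily becomes //
  s=${s//%/\"}      # every % becomes a double quote
  s=${s//\/\//_}    # // (originally written as __) becomes a literal _
  printf '%s\n' "$s"
}
```

For example, `decode_opt 'cat _home_hadoop_my__file'` prints `cat /home/hadoop/my_file`.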

Example

-- Copy the /etc/ directory to /tmp on hosts node1, node2 and node3 as user hadoop

scps /etc/ /tmp hadoop node1 node2 node3

Copying files to several hosts

```bash
#!/bin/bash
# scps: copy a file or directory to the same path on several machines.
if [ "$1" = "--help" ] || [ $# -lt 4 ]; then
  echo "Usage: scps <sourcePath> <destinationPath> <user> <machine1> [<machine2> <machine3>...]"
  exit 1
fi

sourcePath=$1
destinationPath=$2
user=$3
shift 3               # what remains in "$@" is the list of machines

for i in "$@"; do
  echo "[INFO] invoke: scp -r $sourcePath $user@${i}:$destinationPath"
  scp -r "$sourcePath" "$user@${i}:$destinationPath"
done
```
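scps copies to the machines one after another, which can be slow for a large directory such as /etc/. Below is a minimal sketch of a parallel variant, assuming bash and password-less ssh login (otherwise several password prompts would collide). Each scp runs as a background job, and `wait` on each PID collects the per-host result. `scps_parallel` is a hypothetical name, not one of the original scripts.

```bash
#!/bin/bash
# scps_parallel <sourcePath> <destinationPath> <user> <machine1> [<machine2>...]
# Hypothetical parallel variant of scps: one background scp per machine,
# then wait for each one and report the machines whose copy failed.
scps_parallel(){
  if [ $# -lt 4 ]; then
    echo "Usage: scps_parallel <sourcePath> <destinationPath> <user> <machine1> [<machine2>...]" >&2
    return 2
  fi
  local src=$1 dst=$2 user=$3 host i failed=0
  shift 3
  local -a pids=() hosts=()
  for host in "$@"; do
    echo "[INFO] invoke: scp -r $src $user@$host:$dst"
    scp -r "$src" "$user@$host:$dst" &   # start the copy in the background
    pids+=("$!")
    hosts+=("$host")
  done
  for i in "${!pids[@]}"; do
    # wait <pid> returns the exit status of that particular scp
    if ! wait "${pids[$i]}"; then
      echo "[WARNING] copy to ${hosts[$i]} failed" >&2
      failed=$((failed + 1))
    fi
  done
  return $(( failed > 0 ))   # 0 if every copy succeeded, 1 otherwise
}
```

Usage mirrors scps, e.g. `scps_parallel /etc/ /tmp hadoop node1 node2 node3`. Because it returns non-zero when any copy fails, it can be chained with `&&`.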

One-command start/stop for the Hadoop cluster (note: hadoop is the user name; change it to your own)

```bash
#!/bin/bash
# hadoop-admin: start, stop, restart or check a Hadoop HA cluster.
# md: the multi-host ssh script above (its usage line calls it opts).
# hadoop is the login user on every host; change it to your own.

# Start the Hadoop HA cluster: ZooKeeper first, then HDFS, then YARN
start(){
  md hadoop "zkServer.sh start" node1 node2 node3
  md hadoop "start-dfs.sh" master1
  md hadoop "start-yarn.sh" master1
  md hadoop "yarn-daemon.sh start resourcemanager" master2   # second ResourceManager
}

# Stop the Hadoop HA cluster, in the reverse order
stop(){
  md hadoop "yarn-daemon.sh stop resourcemanager" master2
  md hadoop "stop-yarn.sh" master1
  md hadoop "stop-dfs.sh" master1
  md hadoop "zkServer.sh stop" node1 node2 node3
}

# check <host> <process>...: warn about every listed process that jps does
# not show on <host>; print an OK line if all of them are running
check(){
  local host=$1 p success=0 procs
  shift
  echo "[INFO] check hadoop in $host status..."
  procs=$(ssh "hadoop@$host" "source /etc/profile; jps")
  for p in "$@"; do
    # -w so that e.g. NameNode does not match SecondaryNameNode
    if ! grep -qw "$p" <<< "$procs"; then
      echo "[WARNING] $p in $host is not running"
      success=1
    fi
  done
  if [ $success -eq 0 ]; then
    echo "[INFO] hadoop in $host is running well"
  fi
}

# Check the Hadoop NameNode hosts
statusNN(){ check "$1" ResourceManager NameNode DFSZKFailoverController; }

# Check the Hadoop DataNode hosts
statusDN(){ check "$1" QuorumPeerMain JournalNode NodeManager DataNode; }

status(){
  statusNN master1
  statusNN master2
  statusDN node1
  statusDN node2
  statusDN node3
}

case "$1" in
  start)   echo "[INFO] start hadoop...";   start ;;
  stop)    echo "[INFO] stop hadoop...";    stop ;;
  restart) echo "[INFO] restart hadoop..."; stop; start ;;
  status)  echo "[INFO] checking hadoop status..."; status ;;
  *)       echo "Usage: hadoop-admin <start|stop|restart|status>"; exit 1 ;;
esac
```
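start() runs start-dfs.sh immediately after zkServer.sh start, but in an HA setup start-dfs.sh also launches the DFSZKFailoverController processes, which may fail to register if ZooKeeper is not serving yet. Here is a hedged sketch of a wait step that could sit between the two: `wait_zk` (a hypothetical helper) polls `zkServer.sh status` on each host until it reports a `Mode:` line. `ZK_WAIT_TRIES` and `ZK_WAIT_INTERVAL` are illustrative variables, not Hadoop or ZooKeeper settings.

```bash
#!/bin/bash
# wait_zk <host>...: hypothetical helper that polls `zkServer.sh status` on
# each host until it reports Mode: leader/follower/standalone, or gives up.
wait_zk(){
  local tries=${ZK_WAIT_TRIES:-10} interval=${ZK_WAIT_INTERVAL:-2} host i
  for host in "$@"; do
    for ((i = 1; i <= tries; i++)); do
      if ssh "hadoop@$host" "source /etc/profile; zkServer.sh status" 2>&1 \
           | grep -qE 'Mode: (leader|follower|standalone)'; then
        echo "[INFO] ZooKeeper on $host is serving"
        continue 2   # this host is ready, move on to the next one
      fi
      sleep "$interval"
    done
    echo "[WARNING] ZooKeeper on $host is not serving after $tries tries" >&2
    return 1
  done
  return 0
}
```

In start(), it would go right after the zkServer.sh line, for example `wait_zk node1 node2 node3 || return 1`.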

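Example

-- Start the Hadoop HA cluster: hadoop-admin start

-- Stop the Hadoop HA cluster: hadoop-admin stop

-- Restart the Hadoop HA cluster: hadoop-admin restart

-- Check the Hadoop status: hadoop-admin status (note: this only checks whether the processes are running)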
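Because the status check only looks at jps, it cannot tell which NameNode or ResourceManager is currently active. A sketch of a complementary check uses the standard admin commands `hdfs haadmin -getServiceState` and `yarn rmadmin -getServiceState`, which print `active` or `standby`. The service ids nn1/nn2 and rm1/rm2 and the host master1 are assumptions; substitute the ids from `dfs.ha.namenodes.<nameservice>` in hdfs-site.xml and `yarn.resourcemanager.ha.rm-ids` in yarn-site.xml. `ha_status` is a hypothetical name.

```bash
#!/bin/bash
# ha_status: hypothetical helper reporting which NameNode and ResourceManager
# are active. NN_IDS, RM_IDS and ADMIN_HOST default to assumed values
# (nn1 nn2, rm1 rm2, master1); override them to match your cluster.
ha_status(){
  local nn_ids="${NN_IDS:-nn1 nn2}" rm_ids="${RM_IDS:-rm1 rm2}"
  local admin="${ADMIN_HOST:-master1}" id state active_nn=0 active_rm=0
  for id in $nn_ids; do
    state=$(ssh "hadoop@$admin" "source /etc/profile; hdfs haadmin -getServiceState $id" 2>/dev/null)
    echo "[INFO] NameNode $id: ${state:-unknown}"
    if [ "$state" = "active" ]; then active_nn=$((active_nn + 1)); fi
  done
  for id in $rm_ids; do
    state=$(ssh "hadoop@$admin" "source /etc/profile; yarn rmadmin -getServiceState $id" 2>/dev/null)
    echo "[INFO] ResourceManager $id: ${state:-unknown}"
    if [ "$state" = "active" ]; then active_rm=$((active_rm + 1)); fi
  done
  # a healthy HA pair has exactly one active member of each kind
  if [ "$active_nn" -ne 1 ] || [ "$active_rm" -ne 1 ]; then
    echo "[WARNING] expected exactly one active NameNode and one active ResourceManager" >&2
    return 1
  fi
  return 0
}
```

It could be called at the end of status() so that `hadoop-admin status` reports both process presence and HA state.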