Interactive programming in spark-shell

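The data format is shown below. Each line holds a student name, a course name, and a score, separated by commas: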

Tom,DataBase,80

Tom,Algorithm,50

Tom,DataStructure,60

Jim,DataBase,90

Jim,Algorithm,60

Jim,DataStructure,80
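Since every task in this exercise splits each row on commas, it can help to parse a row once into a typed record. The following is a minimal sketch, not part of the original exercise: the names `ScoreParsing`, `Score`, and `parseLine` are hypothetical, and it assumes every valid row has exactly three comma-separated fields with an integer score.

```scala
// Hypothetical helper (names are illustrative, not from the exercise).
object ScoreParsing {
  // One record per input line: student name, course name, integer score.
  final case class Score(name: String, course: String, score: Int)

  // Returns None instead of throwing when a line does not have exactly
  // three fields or when the score field is not an integer.
  def parseLine(line: String): Option[Score] =
    line.split(",").map(_.trim) match {
      case Array(name, course, score) =>
        scala.util.Try(score.toInt).toOption.map(s => Score(name, course, s))
      case _ => None
    }
}
```

In spark-shell this could be applied as `sc.textFile(path).flatMap(line => ScoreParsing.parseLine(line))`, which silently drops malformed rows; whether dropping or failing is the better choice depends on the data.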

Using the given experimental data, write code in spark-shell to compute the following:

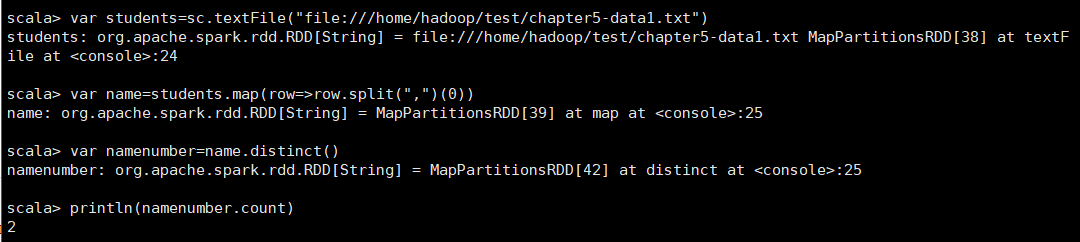

(1) How many students the department has in total;

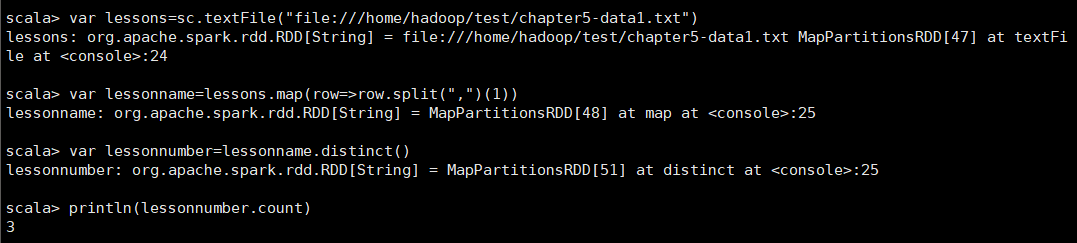

(2) How many courses the department offers in total;

(3) Tom's average score across all of his courses;

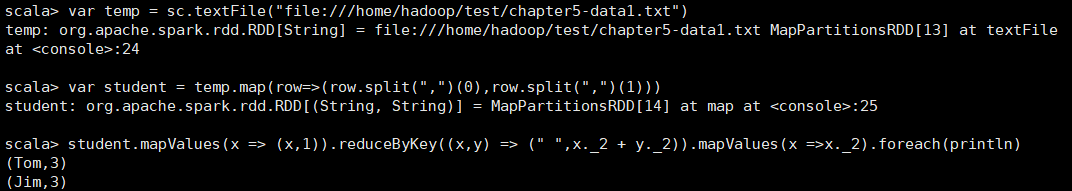

(4) The number of courses taken by each student;

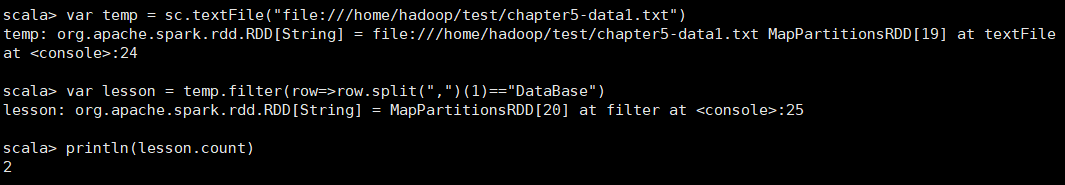

(5) How many students in the department take the DataBase course;

(6) The average score of each course;

(7) Using an accumulator, how many students chose the DataBase course.

(1) val students = sc.textFile("file:///home/hadoop/test/chapter5-data1.txt")

// Field 0 is the student name; distinct() removes repeated names.

val name = students.map(row => row.split(",")(0))

val namenumber = name.distinct()

println(namenumber.count)

(2) val lessons = sc.textFile("file:///home/hadoop/test/chapter5-data1.txt")

// Field 1 is the course name; distinct() removes repeated courses.

val lessonname = lessons.map(row => row.split(",")(1))

val lessonnumber = lessonname.distinct()

println(lessonnumber.count)

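(3) val students = sc.textFile("file:///home/hadoop/test/chapter5-data1.txt")

val tom = students.filter(row => row.split(",")(0) == "Tom")

// Pair each score with a count of 1, sum both per key, then divide as Double so the average is not truncated to an Int.

tom.map(row => (row.split(",")(0), row.split(",")(2).toInt)).mapValues(x => (x, 1)).reduceByKey((x, y) => (x._1 + y._1, x._2 + y._2)).mapValues(x => x._1.toDouble / x._2).collect()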
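When averaging `Int` scores as in (3), the type of the division matters: in Scala, dividing one `Int` by another discards the fractional part. The sketch below illustrates this with Tom's three scores from the sample rows shown earlier; the object name `AverageDemo` is hypothetical and the code uses plain Scala values rather than an RDD.

```scala
// Hypothetical demo object; uses only the three sample rows for Tom.
object AverageDemo {
  // Tom's scores from the sample data: DataBase, Algorithm, DataStructure.
  val tomScores: List[Int] = List(80, 50, 60)

  // Int / Int performs integer division and drops the fraction.
  val truncated: Int = tomScores.sum / tomScores.size

  // Converting the sum to Double first keeps the fraction.
  val exact: Double = tomScores.sum.toDouble / tomScores.size
}
```

Here `truncated` is 63, while `exact` is 190 / 3 as a `Double`, roughly 63.33. The same consideration applies to the per-course averages in (6).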

(4) val temp = sc.textFile("file:///home/hadoop/test/chapter5-data1.txt")

// Emit (student, 1) for every row, then sum the ones per student.

val student = temp.map(row => (row.split(",")(0), 1))

// collect() brings the results to the driver so they print in the shell.

student.reduceByKey(_ + _).collect().foreach(println)

(5) val temp = sc.textFile("file:///home/hadoop/test/chapter5-data1.txt")

// Keep only the rows whose course field is DataBase.

val lesson = temp.filter(row => row.split(",")(1) == "DataBase")

println(lesson.count)

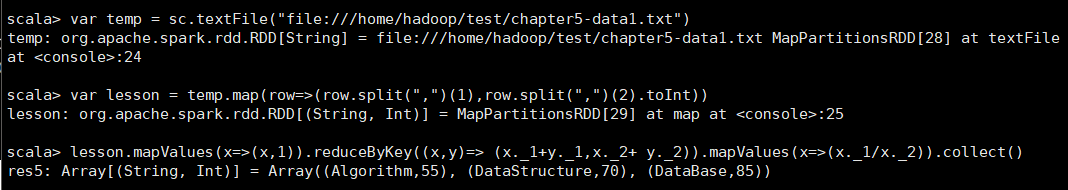

(6) val temp = sc.textFile("file:///home/hadoop/test/chapter5-data1.txt")

val lesson = temp.map(row => (row.split(",")(1), row.split(",")(2).toInt))

// Sum scores and counts per course, then divide as Double so the average is not truncated to an Int.

lesson.mapValues(x => (x, 1)).reduceByKey((x, y) => (x._1 + y._1, x._2 + y._2)).mapValues(x => x._1.toDouble / x._2).collect()

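(7) val temp = sc.textFile("file:///home/hadoop/test/chapter5-data1.txt")

// Keep only DataBase rows and map each one to (course, 1).

val dataBase = temp.filter(row => row.split(",")(1) == "DataBase").map(row => (row.split(",")(1), 1))

val accum = sc.longAccumulator("longAccumulator")

// foreach is an action, so the accumulator is updated once per DataBase row.

dataBase.values.foreach(x => accum.add(x))

println(accum.value)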
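As a sanity check on the logic of the answers above, the same quantities can be computed on the six sample rows with plain Scala collections, without Spark. This is only a sketch: the object name `SampleCheck` is hypothetical, and the real file `chapter5-data1.txt` may contain more rows than the sample, so the Spark results on the full file can differ from these values.

```scala
// Hypothetical cross-check on the six sample rows only (no Spark needed).
object SampleCheck {
  val rows: List[String] = List(
    "Tom,DataBase,80", "Tom,Algorithm,50", "Tom,DataStructure,60",
    "Jim,DataBase,90", "Jim,Algorithm,60", "Jim,DataStructure,80")

  // Split each row into a (name, course, score) triple.
  val records: List[(String, String, Int)] = rows.map { row =>
    val fields = row.split(",")
    (fields(0), fields(1), fields(2).toInt)
  }

  // (1) number of distinct students
  val studentCount: Int = records.map(_._1).distinct.size

  // (2) number of distinct courses
  val courseCount: Int = records.map(_._2).distinct.size

  // (4) number of rows (courses) per student
  val coursesPerStudent: Map[String, Int] =
    records.groupBy(_._1).map { case (name, rs) => name -> rs.size }

  // (5) and (7) number of rows for the DataBase course
  val dataBaseCount: Int = records.count(_._2 == "DataBase")

  // (6) average score per course, computed as Double
  val courseAverages: Map[String, Double] =
    records.groupBy(_._2).map { case (course, rs) =>
      course -> rs.map(_._3).sum.toDouble / rs.size
    }
}
```

Note that (5) and (7) count rows, not distinct names; the two agree only under the assumption that each student appears at most once per course.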