Sometimes you need to split a sentence into tokens where the segmentation follows a dictionary of phrases that you build yourself.

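(Readers who know NLP will point out that jieba's custom dictionary already covers this.) Here is a small hand-rolled version I wrote, shared for reference: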

Requirement:

```python
a = "i love you so much and want to do something for you, can you give me one chance or want to marry you."
require_list = ["want to", "so much", "one chance"]
```

## Expected result

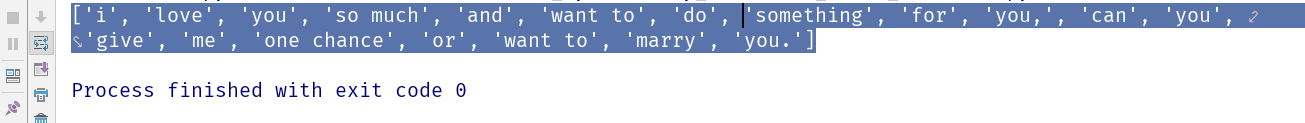

Final result:

```
['i', 'love', 'you', 'so much', 'and', 'want to', 'do', 'something', 'for', 'you,', 'can', 'you', 'give', 'me', 'one chance', 'or', 'want to', 'marry', 'you.']
```

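The solution combines reverse phrase detection (locate a dictionary phrase first, then deal with the text in front of it) with recursion: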

```python
# Split a sentence into tokens, keeping the phrases from a custom dictionary whole
# and preserving their original left-to-right order.


def find_phrase(text, phrase):
    """Return the index of the first occurrence of `phrase` in `text` that is
    bounded by spaces (or by the ends of `text`), or -1 if there is none."""
    index = text.find(phrase)
    while index != -1:
        end = index + len(phrase)
        starts_word = index == 0 or text[index - 1] == " "
        ends_word = end == len(text) or text[end] == " "
        if starts_word and ends_word:
            return index
        # Only a partial-word match (e.g. "want to" inside "swant to"): keep searching.
        index = text.find(phrase, index + 1)
    return -1


def tokenize(need_article, rule_phrase, new_list):
    """Append the tokens of `need_article` to `new_list` and return `new_list`.

    Phrases are tried in the order given by `rule_phrase`. When one is found,
    the text before it is tokenized recursively (it may contain other phrases),
    the phrase is appended as a single token, and the text after it is
    tokenized recursively as well. Text containing no phrase is split on whitespace.
    """
    for phrase in rule_phrase:
        if not phrase:
            continue  # an empty phrase would match everywhere and recurse forever
        index = find_phrase(need_article, phrase)
        if index == -1:
            continue
        end = index + len(phrase)
        tokenize(need_article[:index], rule_phrase, new_list)  # text before the phrase
        new_list.append(phrase)                                 # the phrase itself
        tokenize(need_article[end:], rule_phrase, new_list)     # text after the phrase
        return new_list
    # No dictionary phrase in this piece: fall back to whitespace splitting.
    new_list.extend(need_article.split())
    return new_list


def tokenize_foo(a, list1, new_list):
    # Wrapper kept from the original interface. tokenize() always returns a list,
    # so the old "got the unchanged string back, no phrases found" case cannot occur.
    return tokenize(a, list1, new_list)
```
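For comparison, a common non-recursive way to do dictionary-based segmentation is forward maximum matching: split the sentence into words, then at each position take the longest run of words that forms a dictionary phrase. The sketch below is that alternative technique, not the recursive method above; the function name `max_match_tokenize` is hypothetical, and it assumes every phrase is a sequence of whitespace-separated words.

```python
def max_match_tokenize(sentence, phrases):
    """Greedy forward maximum matching over whitespace-separated words.

    Hypothetical helper for illustration; assumes phrases are made of whole words.
    """
    # Store each phrase as a tuple of words so membership tests are cheap.
    phrase_set = {tuple(p.split()) for p in phrases if p.split()}
    max_len = max((len(p) for p in phrase_set), default=1)
    words = sentence.split()
    tokens = []
    i = 0
    while i < len(words):
        # Try the longest possible multi-word phrase first, then shorter ones.
        for n in range(min(max_len, len(words) - i), 1, -1):
            if tuple(words[i:i + n]) in phrase_set:
                tokens.append(" ".join(words[i:i + n]))
                i += n
                break
        else:
            # No multi-word phrase starts here: emit the single word.
            tokens.append(words[i])
            i += 1
    return tokens


if __name__ == "__main__":
    a = "i love you so much and want to do something for you, can you give me one chance or want to marry you."
    print(max_match_tokenize(a, ["want to", "one chance", "so much"]))
```

Because matching works on whole words, a phrase can never be found inside a longer word, and when two phrases overlap the longest one wins rather than the one listed first. Like the recursive version, it does not strip punctuation, so `so much,` would not be recognized as `so much`.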

Main program:

```python
if __name__ == '__main__':
    a = "i love you so much and want to do something for you, can you give me one chance or want to marry you."
    list1 = ["want to", "one chance", "so much"]
    new_list = []
    new_list_order = tokenize_foo(a, list1, new_list)
    print(new_list_order)
```

Output: