(1) Project name: Classification, analysis and explanation of hot words in the information technology field

(2) Functional design:

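1) Data collection: periodically and automatically crawl hot words in the information technology field from the web;

2) Data cleaning: clean the hot-word data and use automatic classification to generate a directory of information-technology hot words;

3) Hot-word explanation: automatically add a Chinese explanation for each hot word (based on Baidu Baike or Wikipedia);

4) Hot-word references: mark recent articles or news items that cite each hot word and generate a directory of hyperlinks that users can click to visit;

5) Data visualization:

① show the hot words as a word cloud or hot-word map;

② use a relation graph to show how closely the hot words are related.

6) Data report: export the full hot-word directory and the word explanations as a Word report.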
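The script in this post covers items 1) to 4); it does not build the relation graph from item 5) ②. One possible way to measure how "close" two hot words are (an assumption, not the author's method) is to count how often they appear in the same news title and use that count as the edge weight. The function name `cooccurrence` is hypothetical, and matching is plain substring matching.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(titles, hotwords):
    """Count how often each pair of hot words appears in the same title.

    The count serves as a simple edge weight for a relation graph:
    the more titles two hot words share, the more closely related they are.
    """
    pairs = Counter()
    for title in titles:
        # hot words present in this title, sorted so each pair has one key
        present = sorted({w for w in hotwords if w in title})
        for a, b in combinations(present, 2):
            pairs[(a, b)] += 1
    return pairs


if __name__ == "__main__":
    titles = ["Apple unveils new chip",
              "Google and Apple chip race",
              "Google releases new model"]
    for (a, b), n in cooccurrence(titles, ["Apple", "Google", "chip"]).most_common():
        print(a, b, n)
```

The resulting pair counts could be fed to any graph-drawing library as weighted edges; in the real pipeline `titles` would be the lines of Hotword.txt and `hotwords` the lines of final_hotword2.txt.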

Today I combined the methods from the earlier snippets into a single script:

```python
import re
from collections import Counter

import jieba
import pymysql
import requests
import xlwt
from bs4 import BeautifulSoup
from lxml import etree

# MySQL connection settings shared by all three INSERT steps
DB_CONFIG = dict(host="localhost", user="root", password="1229",
                 database="lianxi", charset="utf8")
UA_CHROME = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
             "(KHTML, like Gecko) Chrome/74.0.3729.131 Safari/537.36")
UA_FIREFOX = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:90.0) "
              "Gecko/20100101 Firefox/90.0")


def save_rows(sql, rows):
    """Bulk-insert rows into MySQL; roll back if the insert fails."""
    db = pymysql.connect(**DB_CONFIG)
    try:
        with db.cursor() as cursor:
            cursor.executemany(sql, rows)
        db.commit()
    except pymysql.MySQLError as e:
        print("Insert failed, rolling back:", e)
        db.rollback()
    finally:
        db.close()


def getTitle(url):
    """Return the <a> elements holding the news titles on one list page."""
    headers = {'user-agent': UA_CHROME}  # request headers
    response = requests.get(url, headers=headers, timeout=10)  # send the request
    soup = BeautifulSoup(response.content.decode('utf-8'), 'html.parser')
    links = soup.select('div:nth-child(2) > h2:nth-child(1) > a:nth-child(1)')
    for link in links:  # do not assume a fixed number of items per page
        print(link.text)
    return links


def getHotword():
    """Crawl recommended cnblogs news titles into Hotword.txt and Hotword.xls."""
    url = "https://news.cnblogs.com/n/recommend?page={}"
    f = xlwt.Workbook(encoding='utf-8')
    sheet01 = f.add_sheet('sheet1', cell_overwrite_ok=True)
    sheet01.write(0, 0, 'Hottest blog news')  # row 1, column 1
    row = 1
    with open("Hotword.txt", "w", encoding='utf-8') as ft:
        for i in range(1, 100):
            for link in getTitle(url.format(i)):
                ft.write(link.text + '\n')
                sheet01.write(row, 0, link.text)
                row += 1
            print("Page " + str(i) + " done")
    print("All pages done!")
    f.save('Hotword.xls')


def fenci():
    """Segment the titles with jieba and keep the 100 most frequent words."""
    with open("Hotword.txt", "r", encoding='utf-8') as fh:
        mystr = fh.read()
    seg_list = jieba.cut(mystr)  # precise mode (the default)
    with open('final.txt', encoding='utf-8') as fs:  # stop-word list, one per line
        stopwords = {line.rstrip() for line in fs}
    c = Counter()
    for x in seg_list:
        # skip stop words, single characters/whitespace and the entity residue "quot"
        if x not in stopwords and len(x.strip()) > 1 and x != 'quot':
            c[x] += 1
    print('Word-frequency result:')
    dbinserthot = []
    with open("final_hotword2.txt", "w", encoding='utf-8') as file, \
            open("final_hotword.txt", "w", encoding='utf-8') as filepaixu:
        for k, v in c.most_common(100):  # the 100 most frequent words
            print("%s:%d" % (k, v))
            file.write(k + '\n')                       # one hot word per line
            filepaixu.write(k + ":" + str(v) + '\n')  # word:count
            dbinserthot.append((k, str(v)))
    save_rows("INSERT INTO final_hotword VALUES (%s, %s)", dbinserthot)


def get_page(url):
    """Fetch a page and return its HTML, or None on failure."""
    try:
        response = requests.get(url, headers={"user-agent": UA_FIREFOX}, timeout=10)
        response.encoding = 'utf-8'
        if response.status_code == 200:
            print('Page fetched')
            return response.text
        print('Failed to fetch page:', response.status_code)
    except requests.RequestException as e:
        print(e)
    return None


def getHotExpeain():
    """Look each hot word up on Baidu Baike and save its summary."""
    f = xlwt.Workbook(encoding='utf-8')
    sheet01 = f.add_sheet('sheet1', cell_overwrite_ok=True)
    sheet01.write(0, 0, 'Hot word')     # row 1, column 1
    sheet01.write(0, 1, 'Explanation')  # row 1, column 2
    sheet01.write(0, 2, 'URL')          # row 1, column 3
    with open('final_hotword2.txt', 'r', encoding='utf-8') as fopen:
        words = [line.strip() for line in fopen if line.strip()]
    alllist = []
    for hot in words:
        link = 'https://baike.baidu.com/item/{}'.format(hot)
        print(link)
        page = get_page(link)
        if page is None:
            continue
        # the explanation is taken from the page's <meta name="description"> tag
        items = re.findall('<meta name="description" content="(.*?)">', page, re.S)
        if items:
            row = len(alllist) + 1  # keep word, explanation and URL on the same row
            sheet01.write(row, 0, hot)
            sheet01.write(row, 1, items[0])
            sheet01.write(row, 2, link)
            alllist.append((hot, items[0], link))
    print("Entries crawled: " + str(len(alllist)))
    f.save('hotword_explain.xls')
    save_rows("INSERT INTO website VALUES (%s, %s, %s)", alllist)


def getDetail(href, title, line, hrefs, titles, contents, dbinsert):
    """Fetch one news article, cache it, and record it if it mentions `line`."""
    line1 = line.strip()
    # Add a 'Cookie' header here if the site requires a logged-in session.
    headers = {'User-Agent': UA_CHROME}
    url2 = "https://news.cnblogs.com" + href
    r2 = requests.get(url2, headers=headers, timeout=10)
    html1 = etree.HTML(r2.content.decode("utf-8"))
    content1 = html1.xpath('//div[@id="news_body"]')
    if not content1:
        print("No article body found:", url2)
        return
    titles.append(title)
    hrefs.append(url2)
    # flatten the article text and remove all whitespace
    content = re.sub(r'\s+', '', content1[0].xpath('string(.)'))
    contents.append(content)
    # Python has no && / || operators; use `and` / `or` instead
    if line1 in content or line1 in title:
        print('Matched')
        dbinsert.append((title, url2, line1))
    else:
        print('No match')


def climing(line, hrefs, titles, contents, dbinsert):
    """Crawl the first 40 news list pages and check every article against `line`."""
    print(line)
    for page in range(1, 41):
        print("*" * 35)
        print(page)
        r = requests.get("https://news.cnblogs.com/n/page/" + str(page), timeout=10)
        html1 = etree.HTML(r.content.decode("utf-8"))
        href = html1.xpath('//h2[@class="news_entry"]/a/@href')
        title = html1.xpath('//h2[@class="news_entry"]/a/text()')
        for h, t in zip(href, title):  # do not assume 18 items per page
            getDetail(h, t, line, hrefs, titles, contents, dbinsert)


def bijiao(hrefs, titles, contents, line, dbinsert):
    """Match `line` against the articles already cached by climing()."""
    print(line)
    line1 = line.strip()
    for href, title, content in zip(hrefs, titles, contents):
        if line1 in content or line1 in title:
            print('Matched')
            dbinsert.append((title, href, line1))
        else:
            print('No match')


def getHotLink():
    """For every hot word, store the cached articles that mention it."""
    hrefs, titles, contents = [], [], []
    with open("final_hotword2.txt", encoding='utf-8') as fh:
        for p, line in enumerate(fh, start=1):
            dbinsert = []
            if p == 1:
                # first hot word: crawl and cache all articles
                climing(line, hrefs, titles, contents, dbinsert)
            else:
                # later hot words: reuse the cache instead of crawling again
                bijiao(hrefs, titles, contents, line, dbinsert)
            save_rows("INSERT INTO Link VALUES (%s, %s, %s)", dbinsert)


if __name__ == '__main__':
    getHotword()     # crawl the news titles
    fenci()          # segment the titles and extract hot words
    getHotExpeain()  # fetch hot-word explanations from Baidu Baike
    getHotLink()     # find articles related to each hot word
```
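getHotExpeain() extracts the Baike explanation with a regular expression, which only matches when `name` comes before `content` and the tag ends exactly in `">`. A minimal sketch of a more tolerant alternative using the standard library's `html.parser`, assuming the page still carries the summary in a `<meta name="description">` tag; `MetaDescriptionParser` and `extract_description` are hypothetical names.

```python
from html.parser import HTMLParser


class MetaDescriptionParser(HTMLParser):
    """Collect the content attribute of every <meta name="description"> tag."""

    def __init__(self):
        super().__init__()
        self.descriptions = []

    def handle_starttag(self, tag, attrs):
        # tag and attribute names arrive lower-cased; values are unescaped
        attrs = dict(attrs)
        if tag == "meta" and attrs.get("name") == "description":
            self.descriptions.append(attrs.get("content") or "")


def extract_description(html):
    """Return the first meta description in `html`, or None if there is none."""
    parser = MetaDescriptionParser()
    parser.feed(html)
    return parser.descriptions[0] if parser.descriptions else None
```

Inside getHotExpeain(), `items = re.findall(...)` could then become `desc = extract_description(page)`, followed by `if desc:`. Attribute order, quoting style, and self-closing `/>` no longer matter.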

Here, final.txt is a stop-word list (words excluded from the frequency count in fenci()). I have uploaded it to Baidu Netdisk.
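The stop-word filtering and counting inside fenci() can be isolated into two small functions, which makes it possible to check the logic without running jieba or the crawler. A minimal sketch; the function names are hypothetical, and `tokens` stands in for the output of `jieba.cut`.

```python
from collections import Counter


def load_stopwords(path):
    """Read a stop-word file such as final.txt (one word per line) into a set."""
    with open(path, encoding="utf-8") as f:
        return {line.strip() for line in f if line.strip()}


def top_hotwords(tokens, stopwords, n=100):
    """Count tokens the way fenci() does and return the n most common.

    A token is skipped if it is a stop word, shorter than two characters
    after stripping whitespace, or the HTML-entity residue "quot".
    """
    counts = Counter(
        t for t in tokens
        if t not in stopwords and len(t.strip()) > 1 and t != "quot"
    )
    return counts.most_common(n)
```

A set gives constant-time membership tests, the same benefit the original gets from `{}.fromkeys(...)`, but it states the intent more directly.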

Link: https://pan.baidu.com/s/1zRTS5lJlEAN_NuHljXQY7Q

Extraction code: h4b6

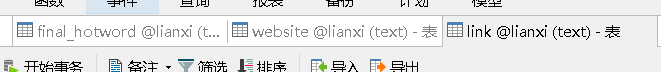

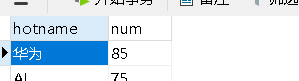

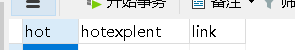

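Next, the database tables. There are three in total:

final_hotword: hot word, frequency

website: hot word, explanation, URL

Link: article title, article URL, hot word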
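The text does not give the table definitions. Below is a minimal sketch of equivalent tables. It uses the standard library's `sqlite3` so it runs without a MySQL server. The column names and types are assumptions inferred only from the INSERT statements in the script (two, three and three columns, in that order).

```python
import sqlite3

# Hypothetical column names; the script only fixes the column count and order.
SCHEMA = """
CREATE TABLE final_hotword (word TEXT, frequency INTEGER);
CREATE TABLE website (hotword TEXT, explanation TEXT, url TEXT);
CREATE TABLE Link (title TEXT, url TEXT, hotword TEXT);
"""


def create_tables(conn):
    """Create the three tables the script inserts into."""
    conn.executescript(SCHEMA)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_tables(conn)
    # sqlite3 uses ? placeholders where pymysql uses %s
    conn.executemany("INSERT INTO final_hotword VALUES (?, ?)", [("人工智能", "3")])
    print(conn.execute("SELECT * FROM final_hotword").fetchall())
```

For MySQL, the same three CREATE TABLE statements, with VARCHAR/TEXT columns, would be run once in the `lianxi` database before starting the script.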

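With the snippets combined, one run of the script crawls the latest news and stores it, segments it automatically with jieba to produce the 100 most frequent hot words and stores them in the database, crawls an explanation for each hot word into the database, and finally crawls links to articles related to each hot word into the database.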
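The last step works because articles are fetched only once, while the first hot word is processed (climing()). Every later hot word is matched against that cache (bijiao()). Below is a stripped-down, network-free sketch of the matching idea. `match_articles` is a hypothetical name, and `articles` stands in for the cached `titles`, `hrefs` and `contents` lists.

```python
def match_articles(hotwords, articles):
    """Map each hot word to the (title, url) pairs of cached articles mentioning it.

    `articles` is a list of (title, url, body) tuples fetched once, so no hot
    word triggers another crawl; a hot word matches if it occurs as a
    substring of either the title or the body.
    """
    result = {}
    for word in hotwords:
        word = word.strip()  # lines read from final_hotword2.txt end in '\n'
        result[word] = [
            (title, url) for title, url, body in articles
            if word in title or word in body
        ]
    return result
```

The cost is one crawl plus a cheap in-memory scan per hot word, instead of one full crawl per hot word.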