RDD (Resilient Distributed Dataset): the basic unit of computation in Spark

1. Obtaining an RDD

By loading a file

val rdd = sc.textFile(...) // pass the path of the file to load

By parallelizing a collection

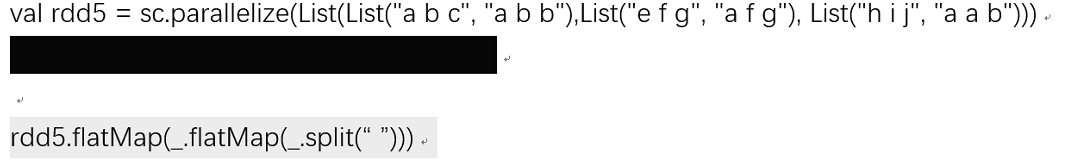

val rdd1 = sc.parallelize(...) // pass a local collection, e.g. a Seq or an Array
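The two ways of obtaining an RDD can be sketched as below. This is a minimal sketch, not a definitive setup: the object name `CreateRddSketch`, the helper names `fromFile` and `fromCollection`, the `local[2]` master, and the temporary file are all assumptions made for illustration.

```scala
import java.nio.file.Files
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

object CreateRddSketch {
  // Load a text file: each line of the file becomes one RDD element.
  def fromFile(sc: SparkContext, path: String): RDD[String] =
    sc.textFile(path)

  // Parallelize a local collection into the requested number of partitions.
  def fromCollection(sc: SparkContext, data: Seq[Int], numPartitions: Int): RDD[Int] =
    sc.parallelize(data, numPartitions)

  def main(args: Array[String]): Unit = {
    // Local master and app name are illustrative assumptions.
    val conf = new SparkConf().setMaster("local[2]").setAppName("create-rdd-sketch")
    val sc = new SparkContext(conf)
    try {
      // Write a small temporary file so the sketch is self-contained.
      val tmp = Files.createTempFile("rdd-sketch", ".txt")
      Files.write(tmp, "hello spark\nhello rdd\n".getBytes("UTF-8"))

      val lines = fromFile(sc, tmp.toString)
      println(lines.count()) // number of lines in the file

      val nums = fromCollection(sc, Seq(1, 2, 3, 4, 5, 6), 3)
      println(nums.partitions.length) // number of partitions requested above
    } finally sc.stop()
  }
}
```

In the interactive `spark-shell` the context already exists as `sc`, so only the `sc.textFile` and `sc.parallelize` calls are needed there.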

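2. Commonly used RDD methods

sc.parallelize(new ..., num) // num is the number of partitions

rdd.partitions.length // check the number of partitions

rdd.sortBy(x => x, true) // true: ascending (the default); false: descending

rdd.collect // return all elements to the driver to display them

rdd.flatMap(_.split(" ")) // flatten: split each line into words and merge the results into one RDD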

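rdd.union(rdd1) // union (duplicates are kept)

rdd union rdd1 // the same union, written in infix notation

rdd.distinct // remove duplicates

rdd.intersection(rdd1) // intersection

rdd.join(rdd1) // combine the values that share a key; both RDDs must contain key-value pairs (tuples)

rdd.leftOuterJoin(rdd1) // left outer join

rdd.rightOuterJoin(rdd1) // right outer join

rdd.groupByKey() // group the values by key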

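rdd.top(n) // the n largest elements, in descending order

rdd.take(n) // the first n elements

rdd.takeOrdered(n) // the n smallest elements, in ascending order

rdd.first // the first element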
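The methods listed above can be tried together on small in-memory data. The following is a hedged sketch only: the object name `CommonMethodsSketch`, the helper `words`, the sample values, and the `local[2]` master are assumptions, and the comments describe what each call is expected to return for this particular data.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

object CommonMethodsSketch {
  // Split each line on spaces and flatten the pieces into one RDD of words.
  def words(lines: RDD[String]): RDD[String] = lines.flatMap(_.split(" "))

  def main(args: Array[String]): Unit = {
    // Local master and app name are illustrative assumptions.
    val sc = new SparkContext(
      new SparkConf().setMaster("local[2]").setAppName("common-methods-sketch"))
    try {
      val rdd  = sc.parallelize(Seq(5, 3, 1, 3), 2)
      val rdd1 = sc.parallelize(Seq(3, 4), 2)

      println(rdd.partitions.length)                                  // 2
      println(rdd.sortBy(x => x, ascending = false).collect().toList) // List(5, 3, 3, 1)
      println(rdd.union(rdd1).count())                                // 6, duplicates are kept
      println(rdd.distinct().collect().sorted.toList)                 // List(1, 3, 5)
      println(rdd.intersection(rdd1).collect().toList)                // List(3)
      println(rdd.top(2).toList)                                      // List(5, 3)
      println(rdd.takeOrdered(2).toList)                              // List(1, 3)
      println(rdd.first())                                            // 5

      println(words(sc.parallelize(Seq("hello spark", "hello rdd"))).collect().toList)

      // Joins and groupByKey need RDDs of key-value pairs (tuples).
      val left  = sc.parallelize(Seq(("a", 1), ("b", 2)))
      val right = sc.parallelize(Seq(("a", "x"), ("c", "y")))
      println(left.join(right).collect().toList)                         // only key "a" matches
      println(left.leftOuterJoin(right).collect().sortBy(_._1).toList)   // keys a, b; missing side is None
      println(left.rightOuterJoin(right).collect().sortBy(_._1).toList)  // keys a, c; missing side is None
      println(left.groupByKey().mapValues(_.toList).collect().sortBy(_._1).toList)
    } finally sc.stop()
  }
}
```

Results of `collect` on joined or grouped RDDs come back in no guaranteed key order, which is why the sketch sorts them locally before printing.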

3. Transformations:

map(func)

filter(func)

flatMap(func)

mapPartitions(func)

mapPartitionsWithIndex(func)

sample(withReplacement, fraction, seed)

union(otherDataset)

intersection(otherDataset)

distinct([numPartitions])

groupByKey([numPartitions])

reduceByKey(func, [numPartitions])

aggregateByKey(zeroValue)(seqOp, combOp, [numPartitions])

sortByKey([ascending], [numPartitions])

join(otherDataset, [numPartitions])

cogroup(otherDataset, [numPartitions])

cartesian(otherDataset)

pipe(command, [envVars])

coalesce(numPartitions)

repartition(numPartitions)

repartitionAndSortWithinPartitions(partitioner)
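A few of the transformations above are easier to follow with concrete data. The sketch below is illustrative only: the object name `TransformationSketch`, the helper names, the sample pairs, and the `local[2]` master are assumptions. Transformations are lazy, so nothing is computed until `collect` is called.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

object TransformationSketch {
  // Sum the values of each key with reduceByKey.
  def sumByKey(pairs: RDD[(String, Int)]): RDD[(String, Int)] =
    pairs.reduceByKey(_ + _)

  // Per-key (sum, count) with aggregateByKey: the first function folds one value
  // into the accumulator, the second merges accumulators from different partitions.
  def sumAndCount(pairs: RDD[(String, Int)]): RDD[(String, (Int, Int))] =
    pairs.aggregateByKey((0, 0))(
      (acc, v) => (acc._1 + v, acc._2 + 1),
      (a, b) => (a._1 + b._1, a._2 + b._2))

  // Tag every element with the index of the partition that holds it.
  def withPartitionIndex(rdd: RDD[Int]): RDD[(Int, Int)] =
    rdd.mapPartitionsWithIndex((idx, it) => it.map(x => (idx, x)))

  def main(args: Array[String]): Unit = {
    // Local master and app name are illustrative assumptions.
    val sc = new SparkContext(
      new SparkConf().setMaster("local[2]").setAppName("transformation-sketch"))
    try {
      val pairs = sc.parallelize(Seq(("a", 1), ("b", 2), ("a", 3)), 2)
      println(sumByKey(pairs).collect().sortBy(_._1).toList)    // List((a,4), (b,2))
      println(sumAndCount(pairs).collect().sortBy(_._1).toList) // List((a,(4,2)), (b,(2,1)))
      println(pairs.sortByKey(ascending = false).keys.collect().toList) // List(b, a, a)

      val nums = sc.parallelize(1 to 4, 2)
      println(nums.map(_ * 10).filter(_ > 10).collect().toList) // List(20, 30, 40)
      println(withPartitionIndex(nums).collect().toList)        // (partition index, element) pairs

      // coalesce reduces the partition count; repartition can also increase it.
      println(nums.coalesce(1).partitions.length)    // 1
      println(nums.repartition(4).partitions.length) // 4
    } finally sc.stop()
  }
}
```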

4. Actions:

reduce(func)

collect()

count()

first()

take(n)

takeSample(withReplacement, num, [seed])

takeOrdered(n, [ordering])

saveAsTextFile(path)

saveAsSequenceFile(path)

saveAsObjectFile(path)

countByKey()

foreach(func)
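Unlike transformations, actions trigger the computation and return a result to the driver (or write it out). A minimal sketch of several of the actions named here follows; the object name `ActionSketch`, the helpers `total` and `keyCounts`, the sample data, and the `local[2]` master are assumptions for illustration.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

object ActionSketch {
  // reduce: combine all elements with an associative, commutative function.
  def total(nums: RDD[Int]): Int = nums.reduce(_ + _)

  // countByKey: number of elements per key, returned to the driver as a local Map.
  def keyCounts(pairs: RDD[(String, Int)]): Map[String, Long] =
    pairs.countByKey().toMap

  def main(args: Array[String]): Unit = {
    // Local master and app name are illustrative assumptions.
    val sc = new SparkContext(
      new SparkConf().setMaster("local[2]").setAppName("action-sketch"))
    try {
      val nums = sc.parallelize(1 to 10, 2)
      println(total(nums))         // 55
      println(nums.count())        // 10
      println(nums.first())        // 1
      println(nums.take(3).toList) // List(1, 2, 3)

      // takeOrdered accepts an Ordering; reversing it yields the largest values.
      println(nums.takeOrdered(3)(Ordering[Int].reverse).toList) // List(10, 9, 8)

      // takeSample returns a random sample of the requested size; a fixed seed makes it repeatable.
      println(nums.takeSample(withReplacement = false, num = 3, seed = 42L).length) // 3

      val pairs = sc.parallelize(Seq(("a", 1), ("b", 2), ("a", 3)))
      println(keyCounts(pairs)) // a -> 2, b -> 1

      // foreach runs on the executors, so the print order is not guaranteed.
      nums.foreach(x => println(x))
    } finally sc.stop()
  }
}
```

`collect` and `countByKey` bring data back to the driver, so they are best reserved for results known to be small.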