今天在做hue的资源调度的操作,执行了好多次一直报下面的错误:

Job init failed : org.apache.hadoop.yarn.exceptions.YarnRuntimeException: java.io.FileNotFoundException: File does not exist: hdfs://VM200-11:8020/user/admin/.staging/job_1536636981967_0013/job.splitmetainfo at org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl$InitTransition.createSplits(JobImpl.java:1580) at org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl$InitTransition.transition(JobImpl.java:1444) at org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl$InitTransition.transition(JobImpl.java:1402) at org.apache.hadoop.yarn.state.StateMachineFactory$MultipleInternalArc.doTransition(StateMachineFactory.java:385) at org.apache.hadoop.yarn.state.StateMachineFactory.doTransition(StateMachineFactory.java:302) at org.apache.hadoop.yarn.state.StateMachineFactory.access$300(StateMachineFactory.java:46) at org.apache.hadoop.yarn.state.StateMachineFactory$InternalStateMachine.doTransition(StateMachineFactory.java:448) at org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl.handle(JobImpl.java:996) at org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl.handle(JobImpl.java:138) at org.apache.hadoop.mapreduce.v2.app.MRAppMaster$JobEventDispatcher.handle(MRAppMaster.java:1366) at org.apache.hadoop.mapreduce.v2.app.MRAppMaster.serviceStart(MRAppMaster.java:1142) at org.apache.hadoop.service.AbstractService.start(AbstractService.java:193) at org.apache.hadoop.mapreduce.v2.app.MRAppMaster$5.run(MRAppMaster.java:1573) at java.security.AccessController.doPrivileged(Native Method) at javax.security.auth.Subject.doAs(Subject.java:415) at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1917) at org.apache.hadoop.mapreduce.v2.app.MRAppMaster.initAndStartAppMaster(MRAppMaster.java:1569)

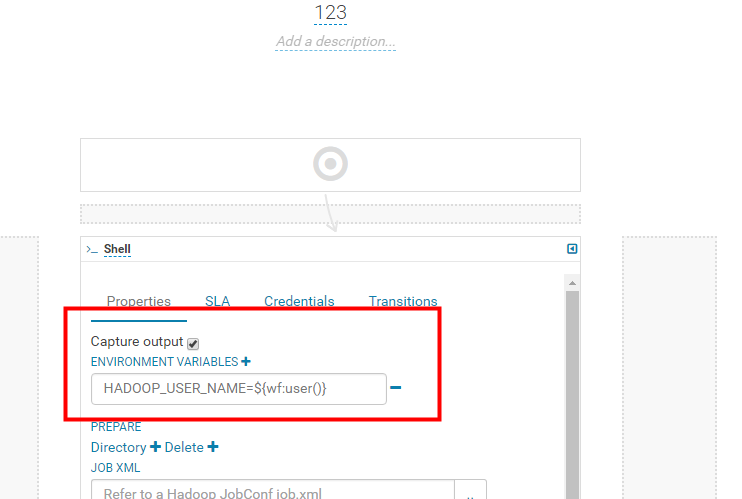

看到这个错误信息我们可以看到文件不存在,其实时间的原因并不是这样的,我们提交任务到集群上面。但是任务的运行不知道该在哪一台机器上执行找不到这个目录所以就报文件不存在了我们只需要在提交任务的时候给他加上环境变量就可以避免这个错误:

让他找到Hadoop的用户,然后去执行相应的任务只要加上这个就可以了:HADOOP_USER_NAME=${wf:user()} (方便copy)

至此问题得到了解决。任务顺利的跑完了。