1. Create a Maven project

2. HttpClient Maven coordinates

    <dependency>
        <groupId>org.apache.httpcomponents</groupId>
        <artifactId>httpclient</artifactId>
        <version>4.5.5</version>
    </dependency>

Add the HttpClient dependency to the pom.xml file:

    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <groupId>com.gxy.blogs</groupId>
        <artifactId>Demo</artifactId>
        <version>0.0.1-SNAPSHOT</version>

        <dependencies>
            <dependency>
                <groupId>org.apache.httpcomponents</groupId>
                <artifactId>httpclient</artifactId>
                <version>4.5.5</version>
            </dependency>
        </dependencies>

    </project>

3. Main code

    package cha01;

    import java.io.IOException;
    import org.apache.http.HttpEntity;
    import org.apache.http.client.methods.CloseableHttpResponse;
    import org.apache.http.client.methods.HttpGet;
    import org.apache.http.impl.client.CloseableHttpClient;
    import org.apache.http.impl.client.HttpClients;
    import org.apache.http.util.EntityUtils;

    public class Test {

        public static void main(String[] args) throws IOException {
            // Build a GET request for the target page
            HttpGet httpget = new HttpGet("http://www.baidu.com");
            // try-with-resources closes the response and the client even if an exception is thrown
            try (CloseableHttpClient httpclient = HttpClients.createDefault();
                 CloseableHttpResponse response = httpclient.execute(httpget)) {
                HttpEntity entity = response.getEntity();
                // Prints the entity object itself (not the page content)
                System.out.println(entity);
                // Read the response body as text; "utf-8" is the default charset
                String page = EntityUtils.toString(entity, "utf-8");
                System.out.println(page);
            }
        }
    }
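
A note on the `"utf-8"` argument above: `EntityUtils.toString(entity, "utf-8")` decodes the body with the charset declared by the response entity when there is one, and uses the given default only otherwise. The sketch below illustrates that fallback rule in plain Java, without HttpClient. The class `CharsetFallbackSketch` and the helper `resolveCharset` are hypothetical names made up for illustration; they are not part of the HttpClient API, and the header parsing here is a simplification of what the library actually does.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

public class CharsetFallbackSketch {

    // Hypothetical helper (not an HttpClient API): return the charset named in a
    // Content-Type header value, or defaultCharset when none is declared or usable.
    static Charset resolveCharset(String contentType, Charset defaultCharset) {
        if (contentType == null) {
            return defaultCharset;
        }
        for (String part : contentType.split(";")) {
            String p = part.trim();
            // Case-insensitive check for a "charset=" parameter
            if (p.regionMatches(true, 0, "charset=", 0, 8)) {
                String name = p.substring(8).trim().replace("\"", "");
                try {
                    if (!name.isEmpty() && Charset.isSupported(name)) {
                        return Charset.forName(name);
                    }
                } catch (IllegalArgumentException e) {
                    // Malformed charset name: fall through to the default
                }
            }
        }
        return defaultCharset;
    }

    public static void main(String[] args) {
        // Declared charset wins over the default
        System.out.println(resolveCharset("text/html; charset=ISO-8859-1", StandardCharsets.UTF_8));
        // No charset declared: the default is used
        System.out.println(resolveCharset("text/html", StandardCharsets.UTF_8));
    }
}
```

In practice this means that a page served with a declared charset is decoded with that charset, and the `"utf-8"` passed in the main code only matters for responses that declare none.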

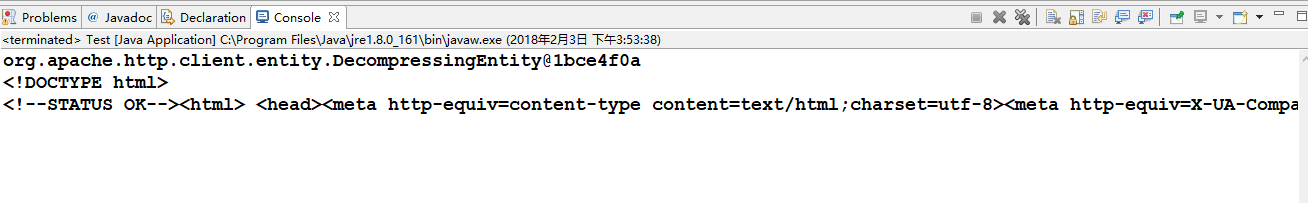

4. Run result