RHCS (Red Hat Cluster Suite)

Environment setup

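- One luci host (management) and several ricci hosts (cluster nodes)

- iptables disabled

- SELinux disabled

- This walkthrough uses two RHEL 6.5 machines, server111 and server222. Pay attention to which host each command is run on.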
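The iptables and SELinux prerequisites above could be applied on each RHEL 6 node with a small script like the following. It is a minimal sketch, assuming the stock RHEL 6 iptables init script and the usual /etc/selinux/config file; the function names are hypothetical, and nothing runs until you call them.

```shell
#!/usr/bin/env bash
# Sketch: apply the environment prerequisites on a RHEL 6 node.
# Function names are hypothetical; nothing runs until you call them.

# Set SELINUX=disabled in the given config file (default /etc/selinux/config).
# The setting takes effect after the next reboot.
disable_selinux_config() {
    local conf="${1:-/etc/selinux/config}"
    # Only the SELINUX= line changes; SELINUXTYPE= does not match this pattern.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Stop the firewall and SELinux enforcement now, and keep them off after reboot.
prepare_node() {
    service iptables stop
    chkconfig iptables off
    setenforce 0 || true   # fails harmlessly if SELinux is already disabled
    disable_selinux_config /etc/selinux/config
}
```

To use it, source the file as root on each node and call prepare_node.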

Yum repository configuration (a .repo file under /etc/yum.repos.d/)

[HA]

name=Instructor HA Repository

baseurl=http://localhost/pub/6.5/HighAvailability

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[LoadBalancer]

name=Instructor LoadBalancer Repository

baseurl=http://localhost/pub/6.5/LoadBalancer

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[ResilientStorage]

name=Instructor ResilientStorage Repository

baseurl=http://localhost/pub/6.5/ResilientStorage

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[ScalableFileSystem]

name=Instructor ScalableFileSystem Repository

baseurl=http://localhost/pub/6.5/ScalableFileSystem

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
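Because the four repository sections above differ only in their name and directory, they could be generated by a short function. This is a sketch under the assumption that all four channels sit under the same local mirror used here (http://localhost/pub/6.5); the output file name rhel-addons.repo is a hypothetical choice.

```shell
#!/usr/bin/env bash
# Sketch: write the four add-on repositories shown above into one .repo file.
# The file name rhel-addons.repo is hypothetical; the base URL defaults to
# the local mirror used in this walkthrough.

write_addon_repos() {
    local dir="${1:-/etc/yum.repos.d}"
    local base="${2:-http://localhost/pub/6.5}"
    local out="$dir/rhel-addons.repo"
    local repo path
    : > "$out"   # start from an empty file
    for repo in HA LoadBalancer ResilientStorage ScalableFileSystem; do
        # The HA channel lives under the HighAvailability directory.
        path="$repo"
        [ "$repo" = HA ] && path=HighAvailability
        printf '[%s]\nname=Instructor %s Repository\nbaseurl=%s/%s\ngpgcheck=1\ngpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release\n\n' \
            "$repo" "$repo" "$base" "$path" >> "$out"
    done
}
```

After running write_addon_repos as root, yum repolist should list the four new repositories.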

Installing ricci

yum install ricci -y

passwd ricci

/etc/init.d/ricci start

chkconfig ricci on

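Notes

- The Red Hat High Availability Add-On supports at most 16 cluster nodes.

- The cluster is configured through the luci GUI.

- The add-on does not support NetworkManager on cluster nodes. If NetworkManager is installed on a cluster node, remove it or stop the service.

- Cluster nodes communicate with each other over multicast addresses. Every network switch and associated networking device used by the High Availability Add-On must therefore be configured to enable multicast addresses and support IGMP (Internet Group Management Protocol).

- In Red Hat Enterprise Linux 6, ricci replaces ccsd, so ricci must run on every cluster node.

- Starting with Red Hat Enterprise Linux 6.1, ricci asks for a password whenever you propagate an updated cluster configuration from any node. After installing ricci, set a password for the ricci user with passwd ricci.

- Log in to the luci web interface with the root user name and password of the luci host.

- When creating a failover domain, a node with a smaller priority value ranks higher.

- Any service you add must be installed on every node; the service starts automatically once it has been created.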
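The failover-domain rule above (a smaller priority value ranks higher) can be illustrated with a tiny helper that orders nodes the way an ordered domain would prefer them. This only illustrates the rule: the input format of one "node priority" pair per line is a hypothetical choice, and luci and rgmanager do not use this code.

```shell
#!/usr/bin/env bash
# Illustration only: order failover-domain members by priority, where a
# smaller number means the node is preferred. Input lines: "<node> <priority>".
# This mimics the rule described above; it is not how rgmanager is implemented.

failover_order() {
    # Numeric sort on the priority column, node name as a tie-breaker.
    sort -k2,2n -k1,1 | awk '{ print $1 }'
}
```

For example, printf '192.168.157.222 2\n192.168.157.111 1\n' | failover_order prints 192.168.157.111 first, since it has the smaller value.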

Installing the services (note which host each command runs on)

[root@server222 Desktop]# yum install ricci -y

[root@server111 Desktop]# yum install luci -y

[root@server111 Desktop]# yum install ricci -y

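[root@server111 Desktop]# passwd ricci # set a password for ricci

[root@server111 Desktop]# /etc/init.d/ricci start # start ricci; also run chkconfig ricci on to start it at boot

[root@server222 Desktop]# passwd ricci # likewise, set a password for ricci

[root@server222 Desktop]# /etc/init.d/ricci start # start ricci; also run chkconfig ricci on to start it at boot

[root@server111 Desktop]# /etc/init.d/luci start # start luci; also run chkconfig luci on to start it at boot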
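The transcript above starts ricci and luci but never runs chkconfig, so neither daemon would start automatically at boot. A sketch that does both steps per host might look like this; the role names "luci" and "node" are hypothetical labels for the management host (server111 here) and the other nodes.

```shell
#!/usr/bin/env bash
# Sketch: start the cluster management daemons and enable them at boot.
# Role "luci" is the management host (server111 here, which also runs ricci);
# role "node" is any other cluster node, which only needs ricci.

setup_cluster_services() {
    local role="$1"          # "luci" or "node" (hypothetical labels)
    local svcs="ricci"
    [ "$role" = luci ] && svcs="ricci luci"
    local s
    for s in $svcs; do
        service "$s" start   # same effect as /etc/init.d/$s start
        chkconfig "$s" on    # start it again after every reboot
    done
}
```

Run setup_cluster_services luci on server111 and setup_cluster_services node on server222, after setting the ricci password. By default luci should then be reachable at https://<luci-host>:8084.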

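- After luci starts, open the link it prints to reach the management interface. Make sure the host names resolve, either through /etc/hosts or DNS.

- You will see the main interface, shown in the figure below.

- Go to the Cluster page and click Create to open the screen below; fill in the settings as shown in the figure.

- Create the cluster.

- Wait for creation to finish. Both hosts reboot automatically, and the result looks like the figure below.

- After the cluster has been created successfully: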

[root@server222 ~]# cd /etc/cluster/

[root@server222 cluster]# ls

cluster.conf cman-notify.d

[root@server222 cluster]# cman_tool status

Version: 6.2.0

Config Version: 1

Cluster Name: forsaken

Cluster Id: 7919

Cluster Member: Yes

Cluster Generation: 8

Membership state: Cluster-Member

Nodes: 2

Expected votes: 1

Total votes: 2

Node votes: 1

Quorum: 1

Active subsystems: 9

Flags: 2node

Ports Bound: 0 11 177

Node name: 192.168.157.222

Node ID: 2

Multicast addresses: 239.192.30.14

Node addresses: 192.168.157.222

[root@server111 ~]# cman_tool status

Version: 6.2.0

Config Version: 1

Cluster Name: forsaken

Cluster Id: 7919

Cluster Member: Yes

Cluster Generation: 8

Membership state: Cluster-Member

Nodes: 2

Expected votes: 1

Total votes: 2

Node votes: 1

Quorum: 1

Active subsystems: 7

Flags: 2node

Ports Bound: 0

Node name: 192.168.157.111

Node ID: 1

Multicast addresses: 239.192.30.14

Node addresses: 192.168.157.111
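Both cman_tool status outputs above report Total votes: 2 against Quorum: 1, which is why the cluster is quorate (the 2node flag lets a two-node cluster keep quorum with a single vote). A small check that reads this output and compares the two fields could look like this; it is a sketch that assumes the "Field: value" layout shown above.

```shell
#!/usr/bin/env bash
# Sketch: read `cman_tool status` output on stdin and report whether the
# node is quorate by comparing "Total votes" with "Quorum".
# Exit status: 0 quorate, 1 inquorate, 2 fields not found.

check_quorum() {
    awk -F': *' '
        $1 == "Total votes" { total = $2 }
        $1 == "Quorum"      { quorum = $2 }
        END {
            if (total == "" || quorum == "") { print "unknown"; exit 2 }
            # "+ 0" keeps only the leading number, e.g. "2 Activity blocked" -> 2
            if (total + 0 >= quorum + 0) { print "quorate" }
            else { print "inquorate"; exit 1 }
        }'
}
```

Usage on either node: cman_tool status | check_quorum.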

[root@server111 ~]# clustat

Cluster Status for forsaken @ Tue May 19 22:01:06 2015

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

192.168.157.111 1 Online, Local

192.168.157.222 2 Online

[root@server222 cluster]# clustat

Cluster Status for forsaken @ Tue May 19 22:01:23 2015

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

192.168.157.111 1 Online

192.168.157.222 2 Online, Local
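When checking clustat on several nodes it can help to extract just the member states. The helpers below are a sketch that assumes the member table layout shown above (name, ID, then status words); the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: pull member information out of clustat output read on stdin.
# Assumes the member table layout shown above: name, ID, then status words.

# Print the names of members whose status starts with "Online".
online_members() {
    awk '$3 ~ /^Online/ { print $1 }'
}

# Print the status words for one member, or "absent" if it is not listed.
node_status() {
    awk -v n="$1" '
        $1 == n { $1 = ""; $2 = ""; sub(/^ +/, ""); print; found = 1 }
        END { if (!found) print "absent" }'
}
```

For example, clustat | online_members should print both node addresses on a healthy cluster, and clustat | node_status 192.168.157.111 prints that node's state.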

Adding fencing to the nodes

- Note: tramisu is my physical host; this step must be performed on the physical host.

Install the required packages

[root@tramisu ~]# yum install fence-virtd.x86_64 fence-virtd-libvirt.x86_64 fence-virtd-multicast.x86_64 fence-virtd-serial.x86_64 -y

[root@tramisu ~]# fence_virtd -c

Module search path [/usr/lib64/fence-virt]:

Available backends:

libvirt 0.1

Listener modules are responsible for accepting requests

from fencing clients.

Listener module [multicast]:

No listener module named multicast found!

Use this value anyway [y/N]? y

The multicast listener module is designed for use environments

where the guests and hosts may communicate over a network using

multicast.

The multicast address is the address that a client will use to

send fencing requests to fence_virtd.

Multicast IP Address [225.0.0.12]:

Using ipv4 as family.

Multicast IP Port [1229]:

Setting a preferred interface causes fence_virtd to listen only

on that interface. Normally, it listens on all interfaces.

In environments where the virtual machines are using the host

machine as a gateway, this *must* be set (typically to virbr0).

Set to 'none' for no interface.

Interface [virbr0]: br0 # my physical host talks to the VMs over br0; set this to match your own environment

The key file is the shared key information which is used to

authenticate fencing requests. The contents of this file must

be distributed to each physical host and virtual machine within

a cluster.

Key File [/etc/cluster/fence_xvm.key]:

Backend modules are responsible for routing requests to

the appropriate hypervisor or management layer.

Backend module [libvirt]:

Configuration complete.

=== Begin Configuration ===

backends {

libvirt {

uri = "qemu:///system";

}

}

listeners {

multicast {

port = "1229";

family = "ipv4";

interface = "br0";

address = "225.0.0.12"; # multicast address

key_file = "/etc/cluster/fence_xvm.key"; # path of the generated key

}

}

fence_virtd {

module_path = "/usr/lib64/fence-virt";

backend = "libvirt";

listener = "multicast";

}

=== End Configuration ===

Replace /etc/fence_virt.conf with the above [y/N]? y

[root@tramisu Desktop]# mkdir /etc/cluster


[root@tramisu Desktop]# dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=128 count=1 # generate a random key with dd

1+0 records in

1+0 records out

128 bytes (128 B) copied, 0.000455837 s, 781 kB/s

[root@tramisu ~]# ll /etc/cluster/fence_xvm.key # the generated key

-rw-r--r-- 1 root root 128 May 19 22:13 /etc/cluster/fence_xvm.key

[root@tramisu ~]# scp /etc/cluster/fence_xvm.key 192.168.157.111:/etc/cluster/ # copy the key to both nodes; note the destination directory

The authenticity of host '192.168.157.111 (192.168.157.111)' can't be established.

RSA key fingerprint is 80:50:bb:dd:40:27:26:66:4c:6e:20:5f:82:3f:7c:ab.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.157.111' (RSA) to the list of known hosts.

root@192.168.157.111's password:

fence_xvm.key 100% 128 0.1KB/s 00:00

[root@tramisu ~]# scp /etc/cluster/fence_xvm.key 192.168.157.222:/etc/cluster/

The authenticity of host '192.168.157.222 (192.168.157.222)' can't be established.

RSA key fingerprint is 28:be:4f:5a:37:4a:a8:80:37:6e:18:c5:93:84:1d:67.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.157.222' (RSA) to the list of known hosts.

root@192.168.157.222's password:

fence_xvm.key 100% 128 0.1KB/s 00:00
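The key generation and copy steps above could be wrapped in two functions run on the physical host. This is a sketch: the function names are hypothetical, the node addresses are the ones used in this walkthrough, and the chmod 600 is a suggested tightening rather than something the original transcript does.

```shell
#!/usr/bin/env bash
# Sketch: generate the shared fence_xvm key on the physical host and copy it
# to every cluster node, mirroring the dd and scp steps above.

make_fence_key() {
    local key="${1:-/etc/cluster/fence_xvm.key}"
    mkdir -p "$(dirname "$key")"
    dd if=/dev/urandom of="$key" bs=128 count=1 2>/dev/null   # 128 random bytes
    chmod 600 "$key"   # the key authenticates fencing requests; keep it private
}

distribute_fence_key() {
    local key="$1"; shift
    local node
    for node in "$@"; do
        ssh "root@$node" mkdir -p /etc/cluster   # the target directory must exist
        scp "$key" "root@$node:/etc/cluster/"
    done
}
```

For example: make_fence_key, then distribute_fence_key /etc/cluster/fence_xvm.key 192.168.157.111 192.168.157.222, then restart fence_virtd. Once the key is in place, fence_xvm -o list run on a node should list the host's virtual machines if multicast fencing is working.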

[root@tramisu ~]# systemctl restart fence_virtd.service # restart the service

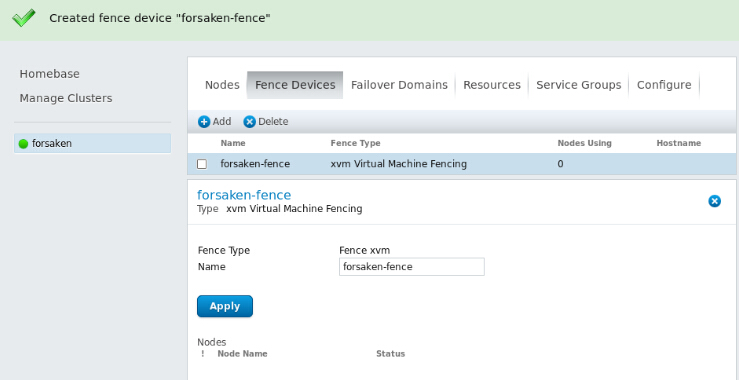

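- Back in the luci web interface, configure the fence device as shown in the figure below.

- Once it is configured, it looks like the figure below.

- Then configure fencing on each node, as shown below.

- The settings for step 2 in the previous figure are shown in detail below.

- When finished, it looks like the figure below.

- Check how the configuration file has changed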

[root@server111 ~]# cat /etc/cluster/cluster.conf # inspect the updated file

[root@server222 ~]# cat /etc/cluster/cluster.conf # the configuration should be identical on both nodes
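Rather than comparing the two files by eye, a quick check could compare the config_version attribute on the <cluster> element (the value cman_tool status reports as Config Version) and then the files byte for byte. This is a sketch with hypothetical function names, assuming the <cluster ... config_version="N" ...> start tag sits on one line, as luci writes it.

```shell
#!/usr/bin/env bash
# Sketch: confirm that two copies of cluster.conf match, first by the
# config_version attribute on the <cluster> element, then byte for byte.

conf_version() {
    sed -n 's/.*<cluster[^>]*config_version="\([0-9]*\)".*/\1/p' "$1"
}

same_cluster_conf() {
    local a="$1" b="$2"
    [ "$(conf_version "$a")" = "$(conf_version "$b")" ] || return 1
    cmp -s "$a" "$b"
}
```

For example, on server111: scp 192.168.157.222:/etc/cluster/cluster.conf /tmp/cluster.conf.222 && same_cluster_conf /etc/cluster/cluster.conf /tmp/cluster.conf.222 && echo identical.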

- Node status

[root@server222 ~]# clustat # check node status; it should also be the same on both nodes

Cluster Status for forsaken @ Tue May 19 22:31:31 2015

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

192.168.157.111 1 Online

192.168.157.222 2 Online, Local

Some simple tests

[root@server222 ~]# fence_node 192.168.157.111 # fence node 111 from the command line

fence 192.168.157.111 success

[root@server222 ~]# clustat

Cluster Status for forsaken @ Tue May 19 22:32:23 2015

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

192.168.157.111 1 Offline # node 111 has been fenced; the 111 host should now be rebooting, which shows that fencing works

192.168.157.222 2 Online, Local

[root@server222 ~]# clustat # once node 111 has rebooted, it rejoins the cluster automatically; server222 now acts as the primary and server111 as the standby

Cluster Status for forsaken @ Tue May 19 22:35:28 2015

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

192.168.157.111 1 Online

192.168.157.222 2 Online, Local

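[root@server222 ~]# echo c > /proc/sysrq-trigger # crash the kernel; you can also test by other means, such as taking the network interface down by hand. The failed node reboots automatically and rejoins as the standby. Try these yourself; they are not covered further here.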
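The manual fence test above (fence_node, then repeated clustat until the node is back) could be scripted on a surviving node as follows. This is a sketch: the function names, the default 600-second timeout and the 10-second polling interval are arbitrary assumptions, and it relies only on the clustat member-table layout shown earlier.

```shell
#!/usr/bin/env bash
# Sketch: fence a node, wait for clustat to show it leave, then wait for it
# to come back Online. Timeout and polling interval are arbitrary defaults.

node_online() {
    # True if clustat lists the node with a status starting with "Online".
    clustat | awk -v n="$1" '$1 == n && $3 ~ /^Online/ { ok = 1 } END { exit !ok }'
}

node_offline() { ! node_online "$1"; }

# wait_until <timeout-seconds> <interval-seconds> <command> [args...]
wait_until() {
    local timeout="$1" interval="$2" waited=0
    shift 2
    until "$@"; do
        [ "$waited" -ge "$timeout" ] && return 1
        sleep "$interval"
        waited=$((waited + interval))
    done
}

fence_and_wait() {
    local node="$1" timeout="${2:-600}" interval="${3:-10}"
    fence_node "$node" || return 1
    wait_until "$timeout" "$interval" node_offline "$node" \
        || { echo "$node never went offline" >&2; return 1; }
    wait_until "$timeout" "$interval" node_online "$node" \
        || { echo "$node did not rejoin within ${timeout}s" >&2; return 1; }
    echo "$node fenced and back online"
}
```

For example, on server222: fence_and_wait 192.168.157.111.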