I. Environment preparation:

10.10.0.170 k8s-master-01
10.10.0.171 k8s-master-02
10.10.0.172 k8s-master-03
10.10.0.190 k8s-node-01
10.10.0.222 vip

II. Initialization:

2.1 Run the following script on all three masters (k8s-master-01, k8s-master-02, k8s-master-03):

#!/bin/sh
#1 Set the hostname from this machine's eth0 IP and add all nodes to /etc/hosts
ip=$(ifconfig | grep eth0 -A 1 | grep -oP '(?<=inet )[\d\.]+(?=\s)')
echo ${ip}
if [ "${ip}" = '10.10.0.170' ]; then
    echo "set hostname k8s-master-01"
    hostnamectl set-hostname k8s-master-01
elif [ "${ip}" = '10.10.0.171' ]; then
    echo "set hostname k8s-master-02"
    hostnamectl set-hostname k8s-master-02
elif [ "${ip}" = '10.10.0.172' ]; then
    echo "set hostname k8s-master-03"
    hostnamectl set-hostname k8s-master-03
fi
echo "10.10.0.170 k8s-master-01" >> /etc/hosts
echo "10.10.0.171 k8s-master-02" >> /etc/hosts
echo "10.10.0.172 k8s-master-03" >> /etc/hosts
echo "10.10.0.190 k8s-node-01" >> /etc/hosts
#2 Disable the firewall
systemctl stop firewalld
systemctl disable firewalld
#3 Disable SELinux (setenforce for the running system, the config files for after reboot)
setenforce 0
sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config
# /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config; --follow-symlinks keeps it one
sed -i --follow-symlinks '/^SELINUX=/c SELINUX=disabled' /etc/sysconfig/selinux
#4 Turn swap off now and comment out the swap entries in /etc/fstab
swapoff -a
sed -i 's/\(.*swap.*swap.*\)/#\1/' /etc/fstab
#5 Kernel parameters; the net.bridge.* keys only exist once br_netfilter is loaded
modprobe br_netfilter
cat >/etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness=0
EOF
sysctl -p /etc/sysctl.d/k8s.conf > /dev/null
#6 Set the time zone to Asia/Shanghai and sync the clock every 10 minutes
rm -rf /etc/localtime
ln -s /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
yum install ntpdate -y
echo "*/10 * * * * /usr/sbin/ntpdate -u time7.aliyun.com" >> /var/spool/cron/root
#7 Install the required software and Docker CE
yum install epel-release tmux mysql lrzsz -y
yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-selinux \
    docker-engine-selinux \
    docker-engine -y
yum install -y yum-utils \
    device-mapper-persistent-data \
    lvm2
yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo
yum install -y docker-ce-18.06.1.ce
# Run Docker with the systemd cgroup driver (/etc/docker may not exist before Docker's first start)
mkdir -p /etc/docker
cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
#8 Install kubelet, kubeadm and kubectl, pinned to the v1.12.1 used in kubeadm-config.yaml below
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
yum install -y kubelet-1.12.1 kubeadm-1.12.1 kubectl-1.12.1
systemctl enable kubelet
systemctl enable docker
systemctl restart kubelet
systemctl restart docker
#9 Install keepalived
yum install keepalived -y
systemctl restart keepalived
systemctl enable keepalived
#10 Reboot the server
reboot

(Note: steps #2 to #8 of the script above must also be run on the worker node.)
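Step #1 of the script appends to /etc/hosts unconditionally, so running the script a second time duplicates every entry, and the if/elif chain has to grow with each new host. A minimal idempotent sketch of the same step, assuming the same IP plan as above; hostname_for_ip and ensure_hosts_entries are hypothetical helper names, not part of any tool:

```shell
#!/bin/sh
# Sketch: idempotent variant of step #1 (hypothetical helper names).

# Print the planned hostname for an IP; prints nothing for unknown IPs.
hostname_for_ip() {
    case "$1" in
        10.10.0.170) echo k8s-master-01 ;;
        10.10.0.171) echo k8s-master-02 ;;
        10.10.0.172) echo k8s-master-03 ;;
        10.10.0.190) echo k8s-node-01 ;;
    esac
}

# Append each "IP hostname" line to the given file only if that exact line
# is not already there, so re-running adds no duplicates.
ensure_hosts_entries() {
    hosts_file=$1
    for entry in "10.10.0.170 k8s-master-01" \
                 "10.10.0.171 k8s-master-02" \
                 "10.10.0.172 k8s-master-03" \
                 "10.10.0.190 k8s-node-01"; do
        grep -qxF "$entry" "$hosts_file" 2>/dev/null || echo "$entry" >> "$hosts_file"
    done
}

# Usage on a node (as root):
#   name=$(hostname_for_ip "$ip"); [ -n "$name" ] && hostnamectl set-hostname "$name"
#   ensure_hosts_entries /etc/hosts
```

A `case` table keeps the IP-to-name mapping in one place, and `grep -qxF` matches whole lines literally, so the dots in the IPs are not treated as regex wildcards.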

[root@k8s-master-01 ~]# cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
   notification_email {
     fzhlzfy@163.com
   }
   notification_email_from dba@dbserver.com
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id K8S-HA
}
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51
    priority 150
    advert_int 1
    nopreempt
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.10.0.222
    }
}

[root@k8s-master-02 k8s-install]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
   notification_email {
     fzhlzfy@163.com
   }
   notification_email_from dba@dbserver.com
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id K8S-HA
}
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 51
    priority 100
    advert_int 1
    nopreempt
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.10.0.222
    }
}

[root@k8s-master-03 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
   notification_email {
     fzhlzfy@163.com
   }
   notification_email_from dba@dbserver.com
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id K8S-HA
}
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 51
    priority 90
    advert_int 1
    nopreempt
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.10.0.222
    }
}

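keepalived keeps the VIP 10.10.0.222 on the highest-priority live master and moves it to another master if that node (or its keepalived) goes down, so the cluster keeps a single, highly available address. Two caveats: keepalived's documentation states that nopreempt only works when the initial state is BACKUP, so it has no effect on k8s-master-01 (state MASTER); and without a vrrp_script health check the VIP does not move if only kube-apiserver fails.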
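The three keepalived.conf files above differ only in state and priority. A sketch that prints the file from those two values, with every other setting copied from the configs above (the notification e-mail block is omitted for brevity; gen_keepalived_conf is a hypothetical name):

```shell
#!/bin/sh
# Sketch: print a keepalived.conf for one master (hypothetical helper).
# Arguments: STATE (MASTER or BACKUP) and PRIORITY (higher wins the VIP).
gen_keepalived_conf() {
    state=$1
    priority=$2
    cat <<EOF
! Configuration File for keepalived
global_defs {
   router_id K8S-HA
}
vrrp_instance VI_1 {
    state ${state}
    interface eth0
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    nopreempt
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.10.0.222
    }
}
EOF
}

# Usage, e.g. on k8s-master-02:
#   gen_keepalived_conf BACKUP 100 > /etc/keepalived/keepalived.conf
#   systemctl restart keepalived
```

If you want nopreempt to apply on every node, one option is to pass BACKUP as the state everywhere and let the priorities (150/100/90) decide which master takes the VIP first.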

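Change Docker's startup parameters on every Docker host (the three masters):

vim /lib/systemd/system/docker.service
(set the ExecStart line to:)
ExecStart=/usr/bin/dockerd -H=0.0.0.0:2375 -H unix:///var/run/docker.sock
systemctl daemon-reload && systemctl restart docker

Warning: -H=0.0.0.0:2375 exposes the Docker API over plain TCP with no TLS and no authentication; anyone who can reach port 2375 effectively has root on the host. Kubernetes does not need it (kubelet talks to /var/run/docker.sock), so only enable it on a trusted network.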

III. Installing the etcd cluster:

1. Passwordless SSH login:

Run on k8s-master-01:

ssh-keygen -t rsa    (press Enter at every prompt)
ssh-copy-id k8s-master-01
ssh-copy-id k8s-master-02
ssh-copy-id k8s-master-03

2. Set up the cfssl tools:

Run on k8s-master-01:

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
chmod +x cfssl_linux-amd64
mv cfssl_linux-amd64 /usr/local/bin/cfssl
chmod +x cfssljson_linux-amd64
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
chmod +x cfssl-certinfo_linux-amd64
mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

3. Create the CA configuration and issue the certificates:

Run on k8s-master-01:

cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "8760h"
    },
    "profiles": {
      "kubernetes-Soulmate": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "8760h"
      }
    }
  }
}
EOF

cat > ca-csr.json <<EOF
{
  "CN": "kubernetes-Soulmate",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "shanghai",
      "L": "shanghai",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

cat > etcd-csr.json <<EOF
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "10.10.0.170",
    "10.10.0.171",
    "10.10.0.172"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "shanghai",
      "L": "shanghai",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes-Soulmate etcd-csr.json | cfssljson -bare etcd

[root@k8s-master-01 k8s-install]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem etcd.csr etcd-csr.json etcd-key.pem etcd.pem
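The kubernetes-Soulmate profile used to sign etcd.pem has an expiry of 8760h, i.e. 365 days, so the etcd certificate stops being valid one year after it is issued and TLS between members and clients will fail from then on. A minimal sketch for checking this ahead of time, using only standard `openssl x509` options; cert_valid_for_days is a hypothetical helper name:

```shell
#!/bin/sh
# Sketch: succeed if the certificate stays valid for at least N more days.
cert_valid_for_days() {
    cert=$1
    days=$2
    # -checkend SECONDS exits 0 if the certificate does NOT expire within
    # that many seconds, and non-zero if it does.
    openssl x509 -in "$cert" -noout -checkend $((days * 86400)) >/dev/null
}

# Usage:
#   openssl x509 -in etcd.pem -noout -enddate      # prints notAfter=...
#   openssl x509 -in etcd.pem -noout -text | grep -A1 'Subject Alternative Name'
#   cert_valid_for_days etcd.pem 30 || echo "etcd.pem expires within 30 days"
```

The second usage line is also a quick way to confirm that the hosts listed in etcd-csr.json (127.0.0.1 and the three master IPs) ended up in the certificate.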

4. Copy the certificates:

Run on k8s-master-01:

[root@k8s-master-01 k8s-install]# mkdir -p /etc/etcd/ssl/
[root@k8s-master-01 k8s-install]# cp etcd.pem etcd-key.pem ca.pem /etc/etcd/ssl/
[root@k8s-master-01 k8s-install]# ssh -n k8s-master-02 "mkdir -p /etc/etcd/ssl && exit"
[root@k8s-master-01 k8s-install]# ssh -n k8s-master-03 "mkdir -p /etc/etcd/ssl && exit"
[root@k8s-master-01 k8s-install]# scp -r /etc/etcd/ssl/*.pem k8s-master-02:/etc/etcd/ssl/
ca.pem                 100% 1387     1.4KB/s   00:00
etcd-key.pem           100% 1675     1.6KB/s   00:00
etcd.pem               100% 1452     1.4KB/s   00:00
[root@k8s-master-01 k8s-install]# scp -r /etc/etcd/ssl/*.pem k8s-master-03:/etc/etcd/ssl/
ca.pem                 100% 1387     1.4KB/s   00:00
etcd-key.pem           100% 1675     1.6KB/s   00:00
etcd.pem               100% 1452     1.4KB/s   00:00

(mkdir -p is needed on k8s-master-01 because etcd is not installed yet, so /etc/etcd may not exist.)

5. Install etcd:

Run on all three masters:

yum install etcd -y

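etcd.service unit files (placed in /etc/systemd/system, which takes precedence over the unit shipped by the package):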

[root@k8s-master-01 ~]# cat /etc/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos
[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/bin/etcd \
  --name k8s-master-01 \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --initial-advertise-peer-urls https://10.10.0.170:2380 \
  --listen-peer-urls https://10.10.0.170:2380 \
  --listen-client-urls https://10.10.0.170:2379,http://127.0.0.1:2379 \
  --advertise-client-urls https://10.10.0.170:2379 \
  --initial-cluster-token etcd-cluster-0 \
  --initial-cluster k8s-master-01=https://10.10.0.170:2380,k8s-master-02=https://10.10.0.171:2380,k8s-master-03=https://10.10.0.172:2380 \
  --initial-cluster-state new \
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536
[Install]
WantedBy=multi-user.target

[root@k8s-master-02 ~]# cat /etc/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos
[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/bin/etcd \
  --name k8s-master-02 \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --initial-advertise-peer-urls https://10.10.0.171:2380 \
  --listen-peer-urls https://10.10.0.171:2380 \
  --listen-client-urls https://10.10.0.171:2379,http://127.0.0.1:2379 \
  --advertise-client-urls https://10.10.0.171:2379 \
  --initial-cluster-token etcd-cluster-0 \
  --initial-cluster k8s-master-01=https://10.10.0.170:2380,k8s-master-02=https://10.10.0.171:2380,k8s-master-03=https://10.10.0.172:2380 \
  --initial-cluster-state new \
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536
[Install]
WantedBy=multi-user.target

[root@k8s-master-03 ~]# cat /etc/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos
[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/bin/etcd \
  --name k8s-master-03 \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --initial-advertise-peer-urls https://10.10.0.172:2380 \
  --listen-peer-urls https://10.10.0.172:2380 \
  --listen-client-urls https://10.10.0.172:2379,http://127.0.0.1:2379 \
  --advertise-client-urls https://10.10.0.172:2379 \
  --initial-cluster-token etcd-cluster-0 \
  --initial-cluster k8s-master-01=https://10.10.0.170:2380,k8s-master-02=https://10.10.0.171:2380,k8s-master-03=https://10.10.0.172:2380 \
  --initial-cluster-state new \
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536
[Install]
WantedBy=multi-user.target

A brief explanation of the flags (see the etcd documentation for full details):

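--name: this member's name in the etcd cluster. Any value works as long as it is distinguishable and unique, and it must match this member's entry in --initial-cluster.
--listen-peer-urls: the URL(s) this member listens on for traffic from other members; the cluster uses them internally for leader election, data replication and so on. Several URLs may be given.
--initial-advertise-peer-urls: the peer URL(s) this member advertises; the other members use this value to talk to it.
--listen-client-urls: the URL(s) this member listens on for client traffic; several may be given.
--advertise-client-urls: the client URL(s) this member advertises; etcd proxies and other etcd members use them to reach this member.
--initial-cluster-token etcd-cluster-0: the bootstrap token. etcd derives a unique cluster ID, and a unique ID for each member, from it, so a second cluster started from the same configuration but with a different token will not interfere with this one.
--initial-cluster: the name=peer-URL pairs of all members, i.e. every member's --initial-advertise-peer-urls combined.
--initial-cluster-state new: marks this as the bootstrap of a new cluster.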
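Across the three unit files, only --name and the member's own IP in the four URL flags change; the token and --initial-cluster are identical everywhere. A sketch that generates the unit from those two values, which avoids copy-paste mismatches between nodes. gen_etcd_unit is a hypothetical helper name; every other value is copied from the units above:

```shell
#!/bin/sh
# Sketch: print the etcd.service unit above for one member.
# Arguments: NAME IP. The member list is the same on all three masters.
INITIAL_CLUSTER="k8s-master-01=https://10.10.0.170:2380,k8s-master-02=https://10.10.0.171:2380,k8s-master-03=https://10.10.0.172:2380"

gen_etcd_unit() {
    name=$1
    ip=$2
    # Unquoted heredoc so ${name}, ${ip} and ${INITIAL_CLUSTER} expand;
    # "\\" produces the literal backslash that continues ExecStart.
    cat <<EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos
[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/bin/etcd \\
  --name ${name} \\
  --cert-file=/etc/etcd/ssl/etcd.pem \\
  --key-file=/etc/etcd/ssl/etcd-key.pem \\
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \\
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \\
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --initial-advertise-peer-urls https://${ip}:2380 \\
  --listen-peer-urls https://${ip}:2380 \\
  --listen-client-urls https://${ip}:2379,http://127.0.0.1:2379 \\
  --advertise-client-urls https://${ip}:2379 \\
  --initial-cluster-token etcd-cluster-0 \\
  --initial-cluster ${INITIAL_CLUSTER} \\
  --initial-cluster-state new \\
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536
[Install]
WantedBy=multi-user.target
EOF
}

# Usage, e.g. on k8s-master-02:
#   gen_etcd_unit k8s-master-02 10.10.0.171 > /etc/systemd/system/etcd.service
```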

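Run on all three masters, starting etcd on all of them at roughly the same time (with Type=notify, the first member's start does not complete until enough members are up to form a quorum):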

systemctl daemon-reload

systemctl enable etcd

systemctl restart etcd

Verify the health of the etcd cluster:

Try it on all three masters:

[root@k8s-master-01 ~]# etcdctl --endpoints=https://10.10.0.170:2379,https://10.10.0.171:2379,https://10.10.0.172:2379 --ca-file=/etc/etcd/ssl/ca.pem --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem cluster-health
member 1c25bde2973f71cf is healthy: got healthy result from https://10.10.0.172:2379
member 3222a6aebdf856ac is healthy: got healthy result from https://10.10.0.170:2379
member 5796b25a0b404b92 is healthy: got healthy result from https://10.10.0.171:2379
cluster is healthy
[root@k8s-master-02 ~]# etcdctl --endpoints=https://10.10.0.170:2379,https://10.10.0.171:2379,https://10.10.0.172:2379 --ca-file=/etc/etcd/ssl/ca.pem --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem cluster-health
member 1c25bde2973f71cf is healthy: got healthy result from https://10.10.0.172:2379
member 3222a6aebdf856ac is healthy: got healthy result from https://10.10.0.170:2379
member 5796b25a0b404b92 is healthy: got healthy result from https://10.10.0.171:2379
cluster is healthy
[root@k8s-master-03 ~]# etcdctl --endpoints=https://10.10.0.170:2379,https://10.10.0.171:2379,https://10.10.0.172:2379 --ca-file=/etc/etcd/ssl/ca.pem --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem cluster-health
member 1c25bde2973f71cf is healthy: got healthy result from https://10.10.0.172:2379
member 3222a6aebdf856ac is healthy: got healthy result from https://10.10.0.170:2379
member 5796b25a0b404b92 is healthy: got healthy result from https://10.10.0.171:2379
cluster is healthy

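Output like the above means the cluster is healthy. (These flags belong to etcdctl's v2 API, which is the default for the etcd 3.x package on CentOS 7; with ETCDCTL_API=3 the equivalent check is `etcdctl --endpoints=... --cacert=... --cert=... --key=... endpoint health`.)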

IV. Initializing the cluster with kubeadm init:

Run steps 4.1 to 4.6 on k8s-master-01:

4.1 Prepare the images:

[root@k8s-master-01 ~]# docker images
REPOSITORY                           TAG       IMAGE ID       CREATED         SIZE
k8s.gcr.io/kube-proxy                v1.12.1   61afff57f010   3 weeks ago     96.6MB
k8s.gcr.io/kube-controller-manager   v1.12.1   aa2dd57c7329   3 weeks ago     164MB
k8s.gcr.io/kube-scheduler            v1.12.1   d773ad20fd80   3 weeks ago     58.3MB
k8s.gcr.io/kube-apiserver            v1.12.1   dcb029b5e3ad   3 weeks ago     194MB
k8s.gcr.io/coredns                   1.2.2     367cdc8433a4   2 months ago    39.2MB
k8s.gcr.io/pause                     3.1       da86e6ba6ca1   10 months ago   742kB
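kubeadm expects these images under their k8s.gcr.io names, and k8s.gcr.io is not reachable from every network. One common workaround, sketched here under assumptions, is to pull each image from a mirror and retag it. The mirror path registry.cn-hangzhou.aliyuncs.com/google_containers is an assumption (substitute a registry you trust), and mirror_ref/pull_via_mirror are hypothetical names. `kubeadm config images list` prints the images for the given version; without a --config file it also lists an etcd image, which this external-etcd setup does not need but which is harmless to pull.

```shell
#!/bin/sh
# Sketch: pull control-plane images via a mirror, then retag them.
# MIRROR is an assumption; override it via the environment.
MIRROR=${MIRROR:-registry.cn-hangzhou.aliyuncs.com/google_containers}

# Map k8s.gcr.io/NAME:TAG to $MIRROR/NAME:TAG.
mirror_ref() {
    printf '%s/%s\n' "$MIRROR" "${1#k8s.gcr.io/}"
}

pull_via_mirror() {
    for img in $(kubeadm config images list --kubernetes-version v1.12.1); do
        src=$(mirror_ref "$img")
        # Pull from the mirror, add the name kubeadm expects, drop the mirror tag.
        docker pull "$src" && docker tag "$src" "$img" && docker rmi "$src"
    done
}

# Usage (as root, with Docker running): pull_via_mirror
```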

4.2 The kubeadm-config.yaml file:

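[root@k8s-master-01 ~]# cat kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1alpha3
kind: ClusterConfiguration
kubernetesVersion: v1.12.1
apiServerCertSANs:
- 10.10.0.170
- 10.10.0.171
- 10.10.0.172
- k8s-master-01
- k8s-master-02
- k8s-master-03
- 10.10.0.222
# Note: in v1alpha3, controlPlaneEndpoint is a top-level field of ClusterConfiguration.
# This nested api: block is the older v1alpha2 layout and appears not to be picked up:
# the join command printed by kubeadm init in 4.3 points at 10.10.0.170:6443, not the VIP
# (and nothing in this setup listens on 8443).
api:
  controlPlaneEndpoint: 10.10.0.222:8443
etcd:
  external:
    endpoints:
    - https://10.10.0.170:2379
    - https://10.10.0.171:2379
    - https://10.10.0.172:2379
    caFile: /etc/etcd/ssl/ca.pem
    certFile: /etc/etcd/ssl/etcd.pem
    keyFile: /etc/etcd/ssl/etcd-key.pem
networking:
  # 10.244.0.0/16 is flannel's default pod network (the "Network" value in kube-flannel.yml).
  # Change it only together with the CNI configuration.
  podSubnet: "10.244.0.0/16"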
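In the v1alpha3 ClusterConfiguration format used here, controlPlaneEndpoint is a top-level field, whereas an `api:` block belongs to the older v1alpha2 layout. A sketch that writes an equivalent config with controlPlaneEndpoint at the top level, pointing at the keepalived VIP. Two assumptions: port 6443, because the VIP sits directly on the masters' kube-apiserver and no load balancer listens on 8443 in this setup; and write_kubeadm_config is a hypothetical helper name. With this file, the join command and kubeconfigs that kubeadm generates should point at 10.10.0.222:6443 instead of a single master.

```shell
#!/bin/sh
# Sketch: write a v1alpha3 kubeadm config with a top-level
# controlPlaneEndpoint on the VIP (port 6443 is an assumption).
write_kubeadm_config() {
    # Quoted 'EOF': the YAML is written verbatim, nothing is expanded.
    cat > "$1" <<'EOF'
apiVersion: kubeadm.k8s.io/v1alpha3
kind: ClusterConfiguration
kubernetesVersion: v1.12.1
controlPlaneEndpoint: 10.10.0.222:6443
apiServerCertSANs:
- 10.10.0.170
- 10.10.0.171
- 10.10.0.172
- k8s-master-01
- k8s-master-02
- k8s-master-03
- 10.10.0.222
etcd:
  external:
    endpoints:
    - https://10.10.0.170:2379
    - https://10.10.0.171:2379
    - https://10.10.0.172:2379
    caFile: /etc/etcd/ssl/ca.pem
    certFile: /etc/etcd/ssl/etcd.pem
    keyFile: /etc/etcd/ssl/etcd-key.pem
networking:
  podSubnet: "10.244.0.0/16"
EOF
}

# Usage: write_kubeadm_config kubeadm-config.yaml && kubeadm init --config kubeadm-config.yaml
```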

4.3 Initialize:

[root@k8s-master-01 ~]# kubeadm init --config kubeadm-config.yaml
[init] using Kubernetes version: v1.12.1
[preflight] running pre-flight checks
[preflight/images] Pulling images required for setting up a Kubernetes cluster
[preflight/images] This might take a minute or two, depending on the speed of your internet connection
[preflight/images] You can also perform this action in beforehand using 'kubeadm config images pull'
[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[preflight] Activating the kubelet service
[certificates] Generated ca certificate and key.
[certificates] Generated apiserver certificate and key.
[certificates] apiserver serving cert is signed for DNS names [k8s-master-01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local k8s-master-01 k8s-master-02 k8s-master-03] and IPs [10.96.0.1 10.10.0.170 10.10.0.170 10.10.0.171 10.10.0.172 10.10.0.222]
[certificates] Generated apiserver-kubelet-client certificate and key.
[certificates] Generated front-proxy-ca certificate and key.
[certificates] Generated front-proxy-client certificate and key.
[certificates] valid certificates and keys now exist in "/etc/kubernetes/pki"
[certificates] Generated sa key and public key.
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"
[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"
[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"
[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"
[init] waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"
[init] this might take a minute or longer if the control plane images have to be pulled
[apiclient] All control plane components are healthy after 23.001756 seconds
[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.12" in namespace kube-system with the configuration for the kubelets in the cluster
[markmaster] Marking the node k8s-master-01 as master by adding the label "node-role.kubernetes.io/master=''"
[markmaster] Marking the node k8s-master-01 as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-master-01" as an annotation
[bootstraptoken] using token: 7igv4r.pfh4zf7h8eao43k7
[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy
Your Kubernetes master has initialized successfully!
To start using your cluster, you need to run the following as a regular user:
  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config
You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/
You can now join any number of machines by running the following on each node
as root:
  kubeadm join 10.10.0.170:6443 --token 7igv4r.pfh4zf7h8eao43k7 --discovery-token-ca-cert-hash sha256:8488d362ce896597e9d6f23c825b60447b6e1fdb494ce72d32843d02d2d4b200
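The --discovery-token-ca-cert-hash value in the join command is the SHA-256 digest of the cluster CA's public key (DER-encoded SubjectPublicKeyInfo). If the init output is lost, the upstream kubeadm documentation derives it with an openssl pipeline like the one wrapped below; ca_cert_hash is a hypothetical wrapper name. Bootstrap tokens expire after 24 hours by default, and `kubeadm token create --print-join-command` prints a fresh token together with the full join command.

```shell
#!/bin/sh
# Sketch: recompute the discovery hash for a CA certificate.
ca_cert_hash() {
    # Extract the public key, convert it to DER, hash it with SHA-256,
    # and keep only the hex digest (the last field of dgst's output).
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Usage on a master:
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```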

4.4 Set up the kubectl environment:

[root@k8s-master-01 ~]# mkdir -p $HOME/.kube
[root@k8s-master-01 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@k8s-master-01 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

4.5 Check the cluster status:

[root@k8s-master-01 ~]# kubectl get cs
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health": "true"}
etcd-1               Healthy   {"health": "true"}
etcd-2               Healthy   {"health": "true"}
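When scripting this check, for example before moving on to the next master, a minimal sketch that fails unless every row of `kubectl get cs` reports Healthy. It assumes STATUS stays the second column, as in the output above, and all_components_healthy is a hypothetical name:

```shell
#!/bin/sh
# Sketch: read `kubectl get cs` output on stdin; exit 0 only if there is at
# least one component row and every row's STATUS (column 2) is "Healthy".
all_components_healthy() {
    awk 'NR > 1 { rows++; if ($2 != "Healthy") bad++ }
         END { if (rows > 0 && bad == 0) exit 0; exit 1 }'
}

# Usage: kubectl get cs | all_components_healthy && echo "control plane healthy"
```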

4.6 Copy the Kubernetes certificates to the other masters:

[root@k8s-master-01 ~]# scp -r /etc/kubernetes/pki/ 10.10.0.171:/etc/kubernetes/
ca.key                          100% 1679     1.6KB/s   00:00
ca.crt                          100% 1025     1.0KB/s   00:00
apiserver.key                   100% 1675     1.6KB/s   00:00
apiserver.crt                   100% 1326     1.3KB/s   00:00
apiserver-kubelet-client.key    100% 1675     1.6KB/s   00:00
apiserver-kubelet-client.crt    100% 1099     1.1KB/s   00:00
front-proxy-ca.key              100% 1675     1.6KB/s   00:00
front-proxy-ca.crt              100% 1038     1.0KB/s   00:00
front-proxy-client.key          100% 1675     1.6KB/s   00:00
front-proxy-client.crt          100% 1058     1.0KB/s   00:00
sa.key                          100% 1679     1.6KB/s   00:00
sa.pub                          100%  451     0.4KB/s   00:00
[root@k8s-master-01 ~]# scp -r /etc/kubernetes/pki/ 10.10.0.172:/etc/kubernetes/
ca.key                          100% 1679     1.6KB/s   00:00
ca.crt                          100% 1025     1.0KB/s   00:00
apiserver.key                   100% 1675     1.6KB/s   00:00
apiserver.crt                   100% 1326     1.3KB/s   00:00
apiserver-kubelet-client.key    100% 1675     1.6KB/s   00:00
apiserver-kubelet-client.crt    100% 1099     1.1KB/s   00:00
front-proxy-ca.key              100% 1675     1.6KB/s   00:00
front-proxy-ca.crt              100% 1038     1.0KB/s   00:00
front-proxy-client.key          100% 1675     1.6KB/s   00:00
front-proxy-client.crt          100% 1058     1.0KB/s   00:00
sa.key                          100% 1679     1.6KB/s   00:00
sa.pub                          100%  451     0.4KB/s   00:00
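Copying the whole pki directory works here because apiServerCertSANs already lists all three masters. The upstream kubeadm HA guide of this era instead copies only the cluster-wide key material (the cluster CA, the service-account key pair and the front-proxy CA) and lets each master generate its own apiserver certificates. A sketch of that narrower copy; copy_shared_pki and the COPY_CMD override are hypothetical, and the destination directory must already exist on the target:

```shell
#!/bin/sh
# Sketch: copy only the key material that must be identical on all masters.
SHARED_PKI_FILES="ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key"

# Arguments: SRC_DIR DEST (e.g. "10.10.0.171:/etc/kubernetes/pki").
# COPY_CMD defaults to scp; stop at the first failed copy.
copy_shared_pki() {
    src=$1
    dest=$2
    for f in $SHARED_PKI_FILES; do
        ${COPY_CMD:-scp} "$src/$f" "$dest/$f" || return 1
    done
}

# Usage on k8s-master-01:
#   for ip in 10.10.0.171 10.10.0.172; do
#       ssh "$ip" mkdir -p /etc/kubernetes/pki
#       copy_shared_pki /etc/kubernetes/pki "$ip:/etc/kubernetes/pki"
#   done
```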

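On k8s-master-02, repeat steps 4.1 to 4.5 (the "Using the existing ..." lines show that the certificates copied in 4.6 are reused):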

[root@k8s-master-02 ~]# kubeadm init --config kubeadm-config.yaml
[init] using Kubernetes version: v1.12.1
[preflight] running pre-flight checks
[preflight/images] Pulling images required for setting up a Kubernetes cluster
[preflight/images] This might take a minute or two, depending on the speed of your internet connection
[preflight/images] You can also perform this action in beforehand using 'kubeadm config images pull'
[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[preflight] Activating the kubelet service
[certificates] Using the existing apiserver certificate and key.
[certificates] Using the existing apiserver-kubelet-client certificate and key.
[certificates] Using the existing front-proxy-client certificate and key.
[certificates] valid certificates and keys now exist in "/etc/kubernetes/pki"
[certificates] Using the existing sa key.
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"
[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"
[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"
[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"
[init] waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"
[init] this might take a minute or longer if the control plane images have to be pulled
[apiclient] All control plane components are healthy after 20.002010 seconds
[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.12" in namespace kube-system with the configuration for the kubelets in the cluster
[markmaster] Marking the node k8s-master-02 as master by adding the label "node-role.kubernetes.io/master=''"
[markmaster] Marking the node k8s-master-02 as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-master-02" as an annotation
[bootstraptoken] using token: z4q8gj.pyxlik9groyp6t3e
[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy
Your Kubernetes master has initialized successfully!
To start using your cluster, you need to run the following as a regular user:
  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config
You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/
You can now join any number of machines by running the following on each node
as root:
  kubeadm join 10.10.0.171:6443 --token z4q8gj.pyxlik9groyp6t3e --discovery-token-ca-cert-hash sha256:5149f28976005454d8b0da333648e66880aa9419bc0e639781ceab65c77034be

V. Pod network configuration:

Images used:

k8s.gcr.io/coredns:1.2.2
quay.io/coreos/flannel:v0.10.0-amd64

5.1 Network interfaces before configuration:

docker0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        inet 172.17.0.1  netmask 255.255.0.0  broadcast 172.17.255.255
        ether 02:42:a1:0f:80:1e  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 10.10.0.170  netmask 255.255.255.0  broadcast 10.10.0.255
        inet6 fe80::20c:29ff:fe22:d2ff  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:22:d2:ff  txqueuelen 1000  (Ethernet)
        RX packets 1444658  bytes 365717587 (348.7 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 1339639  bytes 185797411 (177.1 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        inet6 ::1  prefixlen 128  scopeid 0x10<host>
        loop  txqueuelen 0  (Local Loopback)
        RX packets 480338  bytes 116529453 (111.1 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 480338  bytes 116529453 (111.1 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
[root@k8s-master-01 ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000
    link/ether 00:0c:29:22:d2:ff brd ff:ff:ff:ff:ff:ff
    inet 10.10.0.170/24 brd 10.10.0.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 10.10.0.222/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe22:d2ff/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
    link/ether 02:42:a1:0f:80:1e brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever

5.2 Install the flannel network:

[root@k8s-master-01 ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
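Before applying the manifest, it is worth confirming that the "Network" value in its net-conf.json section matches the podSubnet given to kubeadm (both 10.244.0.0/16 here), since flannel and kubeadm are expected to agree on the pod CIDR. A sketch of that check; it assumes the manifest contains a line like `"Network": "10.244.0.0/16",` and the kubeadm file a line like `podSubnet: "10.244.0.0/16"`, and the function names are hypothetical:

```shell
#!/bin/sh
# Sketch: compare flannel's Network with kubeadm's podSubnet.

# First "Network": "..." value in the flannel manifest.
flannel_network() {
    sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# First podSubnet value in the kubeadm config, quoted or unquoted.
kubeadm_pod_subnet() {
    sed -n 's/.*podSubnet: *"\{0,1\}\([^" ]*\)"\{0,1\}.*/\1/p' "$1" | head -n 1
}

# Arguments: FLANNEL_MANIFEST KUBEADM_CONFIG
check_pod_cidr() {
    a=$(flannel_network "$1")
    b=$(kubeadm_pod_subnet "$2")
    if [ -n "$a" ] && [ "$a" = "$b" ]; then
        echo "OK: $a"
    else
        echo "MISMATCH: flannel=$a kubeadm=$b" >&2
        return 1
    fi
}

# Usage: check_pod_cidr kube-flannel.yml kubeadm-config.yaml
```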

[root@k8s-master-01 ~]# kubectl apply -f kube-flannel.yml
clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.extensions/kube-flannel-ds-amd64 created
daemonset.extensions/kube-flannel-ds-arm64 created
daemonset.extensions/kube-flannel-ds-arm created
daemonset.extensions/kube-flannel-ds-ppc64le created
daemonset.extensions/kube-flannel-ds-s390x created

Check the flannel DaemonSets in the cluster:

[root@k8s-master-01 ~]# kubectl get ds -l app=flannel -n kube-system
NAME                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR                     AGE
kube-flannel-ds-amd64     2         2         2       2            2           beta.kubernetes.io/arch=amd64     22m
kube-flannel-ds-arm       0         0         0       0            0           beta.kubernetes.io/arch=arm       22m
kube-flannel-ds-arm64     0         0         0       0            0           beta.kubernetes.io/arch=arm64     22m
kube-flannel-ds-ppc64le   0         0         0       0            0           beta.kubernetes.io/arch=ppc64le   22m
kube-flannel-ds-s390x     0         0         0       0            0           beta.kubernetes.io/arch=s390x     22m

Check the pods:

[root@k8s-master-01 ~]# kubectl get pod --all-namespaces -o wide
NAMESPACE     NAME                                    READY   STATUS    RESTARTS   AGE    IP            NODE            NOMINATED NODE
kube-system   coredns-576cbf47c7-nmphm                1/1     Running   0          23m    10.244.0.3    k8s-master-01   <none>
kube-system   coredns-576cbf47c7-w5mhv                1/1     Running   0          23m    10.244.0.2    k8s-master-01   <none>
kube-system   kube-apiserver-k8s-master-01            1/1     Running   0          178m   10.10.0.170   k8s-master-01   <none>
kube-system   kube-apiserver-k8s-master-02            1/1     Running   0          11m    10.10.0.171   k8s-master-02   <none>
kube-system   kube-controller-manager-k8s-master-01   1/1     Running   0          177m   10.10.0.170   k8s-master-01   <none>
kube-system   kube-controller-manager-k8s-master-02   1/1     Running   0          11m    10.10.0.171   k8s-master-02   <none>
kube-system   kube-flannel-ds-amd64-cl4kb             1/1     Running   1          24m    10.10.0.170   k8s-master-01   <none>
kube-system   kube-flannel-ds-amd64-rghg4             1/1     Running   0          24m    10.10.0.171   k8s-master-02   <none>
kube-system   kube-proxy-2vsqh                        1/1     Running   0          150m   10.10.0.171   k8s-master-02   <none>
kube-system   kube-proxy-wvtrz                        1/1     Running   0          178m   10.10.0.170   k8s-master-01   <none>
kube-system   kube-scheduler-k8s-master-01            1/1     Running   0          178m   10.10.0.170   k8s-master-01   <none>
kube-system   kube-scheduler-k8s-master-02            1/1     Running   0          11m    10.10.0.171   k8s-master-02   <none>

Check the network interfaces now:

[root@k8s-master-01 ~]# ifconfig
cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet 10.244.0.1  netmask 255.255.255.0  broadcast 0.0.0.0
        inet6 fe80::74ef:2ff:fec2:6c85  prefixlen 64  scopeid 0x20<link>
        ether 0a:58:0a:f4:00:01  txqueuelen 0  (Ethernet)
        RX packets 5135  bytes 330511 (322.7 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 5136  bytes 1929848 (1.8 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
docker0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        inet 172.17.0.1  netmask 255.255.0.0  broadcast 172.17.255.255
        ether 02:42:a1:0f:80:1e  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 10.10.0.170  netmask 255.255.255.0  broadcast 10.10.0.255
        inet6 fe80::20c:29ff:fe22:d2ff  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:22:d2:ff  txqueuelen 1000  (Ethernet)
        RX packets 1727975  bytes 420636786 (401.1 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 1613768  bytes 225024592 (214.6 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet 10.244.0.0  netmask 255.255.255.255  broadcast 0.0.0.0
        inet6 fe80::98c0:baff:fed3:8de5  prefixlen 64  scopeid 0x20<link>
        ether 9a:c0:ba:d3:8d:e5  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 10 overruns 0  carrier 0  collisions 0
lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        inet6 ::1  prefixlen 128  scopeid 0x10<host>
        loop  txqueuelen 0  (Local Loopback)
        RX packets 590730  bytes 145157886 (138.4 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 590730  bytes 145157886 (138.4 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
veth5504c620: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet6 fe80::f499:6ff:fece:d24a  prefixlen 64  scopeid 0x20<link>
        ether f6:99:06:ce:d2:4a  txqueuelen 0  (Ethernet)
        RX packets 2564  bytes 200932 (196.2 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 2579  bytes 965054 (942.4 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
vetha0ab0abe: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet6 fe80::74ef:2ff:fec2:6c85  prefixlen 64  scopeid 0x20<link>
        ether 76:ef:02:c2:6c:85  txqueuelen 0  (Ethernet)
        RX packets 2571  bytes 201469 (196.7 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 2584  bytes 966816 (944.1 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
[root@k8s-master-01 ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000
    link/ether 00:0c:29:22:d2:ff brd ff:ff:ff:ff:ff:ff
    inet 10.10.0.170/24 brd 10.10.0.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 10.10.0.222/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe22:d2ff/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
    link/ether 02:42:a1:0f:80:1e brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN
    link/ether 9a:c0:ba:d3:8d:e5 brd ff:ff:ff:ff:ff:ff
    inet 10.244.0.0/32 scope global flannel.1
       valid_lft forever preferred_lft forever
    inet6 fe80::98c0:baff:fed3:8de5/64 scope link
       valid_lft forever preferred_lft forever
5: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP
    link/ether 0a:58:0a:f4:00:01 brd ff:ff:ff:ff:ff:ff
    inet 10.244.0.1/24 scope global cni0
       valid_lft forever preferred_lft forever
    inet6 fe80::74ef:2ff:fec2:6c85/64 scope link
       valid_lft forever preferred_lft forever
6: vetha0ab0abe@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP
    link/ether 76:ef:02:c2:6c:85 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::74ef:2ff:fec2:6c85/64 scope link
       valid_lft forever preferred_lft forever
7: veth5504c620@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP
    link/ether f6:99:06:ce:d2:4a brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::f499:6ff:fece:d24a/64 scope link
       valid_lft forever preferred_lft forever
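After flannel starts, each node holds one /24 out of the 10.244.0.0/16 pod network: on k8s-master-01 that is 10.244.0.0/24, with flannel.1 at 10.244.0.0/32 and the cni0 bridge at 10.244.0.1/24. The mtu 1450 on cni0, flannel.1 and the veth interfaces is eth0's 1500 minus the 50 bytes of VXLAN encapsulation (outer Ethernet, IP, UDP and VXLAN headers). flanneld records this lease as KEY=VALUE lines (FLANNEL_NETWORK, FLANNEL_SUBNET, FLANNEL_MTU, FLANNEL_IPMASQ) in /run/flannel/subnet.env by default. A sketch that prints it; the default path is an assumption for this version, and show_flannel_lease is a hypothetical name:

```shell
#!/bin/sh
# Sketch: print the flannel lease recorded for this node.
show_flannel_lease() {
    env_file=${1:-/run/flannel/subnet.env}
    # "." searches PATH for names without a slash, so force a path.
    case $env_file in */*) ;; *) env_file=./$env_file ;; esac
    # Source in a subshell so the FLANNEL_* variables do not leak out.
    (
        . "$env_file" || exit 1
        echo "cluster network: $FLANNEL_NETWORK"
        echo "node subnet:     $FLANNEL_SUBNET"
        echo "pod MTU:         $FLANNEL_MTU"
    )
}

# Usage: show_flannel_lease; ip -o link show cni0   # cni0's mtu should equal FLANNEL_MTU
```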

6. Adding k8s-master-03 to the cluster (this can just as well be done in section 5, together with k8s-master-02):

On k8s-master-03, run steps 4.1 through 4.5:

```
[root@k8s-master-03 ~]# kubeadm init --config kubeadm-config.yaml
[init] using Kubernetes version: v1.12.1
[preflight] running pre-flight checks
[preflight/images] Pulling images required for setting up a Kubernetes cluster
[preflight/images] This might take a minute or two, depending on the speed of your internet connection
[preflight/images] You can also perform this action in beforehand using 'kubeadm config images pull'
[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[preflight] Activating the kubelet service
[certificates] Using the existing apiserver certificate and key.
[certificates] Using the existing apiserver-kubelet-client certificate and key.
[certificates] Using the existing front-proxy-client certificate and key.
[certificates] valid certificates and keys now exist in "/etc/kubernetes/pki"
[certificates] Using the existing sa key.
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"
[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"
[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"
[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"
[init] waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"
[init] this might take a minute or longer if the control plane images have to be pulled
[apiclient] All control plane components are healthy after 20.503277 seconds
[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.12" in namespace kube-system with the configuration for the kubelets in the cluster
[markmaster] Marking the node k8s-master-03 as master by adding the label "node-role.kubernetes.io/master=''"
[markmaster] Marking the node k8s-master-03 as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-master-03" as an annotation
[bootstraptoken] using token: ks930p.auijb1h0or3o87f9
[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node as root:

  kubeadm join 10.10.0.172:6443 --token ks930p.auijb1h0or3o87f9 --discovery-token-ca-cert-hash sha256:8488d362ce896597e9d6f23c825b60447b6e1fdb494ce72d32843d02d2d4b200
```

```
[root@k8s-master-03 ~]# mkdir -p $HOME/.kube
[root@k8s-master-03 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@k8s-master-03 ~]# chown $(id -u):$(id -g) $HOME/.kube/config
```

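Note: where the value highlighted in red further up comes from (the `sha256:` hash passed to `--discovery-token-ca-cert-hash`):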

```
[root@k8s-master-03 ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
8488d362ce896597e9d6f23c825b60447b6e1fdb494ce72d32843d02d2d4b200
```

(So even after a token expires and a new one is generated, only the token changes; the SHA-256 hash of the CA certificate's public key stays the same.)
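The same pipeline can be wrapped in a small shell function so the hash is easy to reuse whenever a new token is issued. This is a minimal sketch: `ca_cert_hash` is a hypothetical name, it assumes kubeadm's default RSA CA key at `/etc/kubernetes/pki/ca.crt`, and it adds `-noout` so that only the public key (not the certificate itself) is passed on to `openssl rsa`.

```shell
#!/bin/sh
# Print the hex value kubeadm expects after "sha256:" in
# --discovery-token-ca-cert-hash: the SHA-256 digest of the CA's public key
# in DER (SubjectPublicKeyInfo) form. Hypothetical helper; assumes an RSA CA key.
ca_cert_hash() {
  ca_file=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -noout -in "$ca_file" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on a master:
#   echo "sha256:$(ca_cert_hash)"
```

Because only the public key is hashed, creating new tokens, or even re-issuing the CA certificate with the same key, leaves the value unchanged; it changes only if the CA key itself is replaced.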

6. Check all pods (this can be run on any of the three masters):

```
[root@k8s-master-03 ~]# kubectl get po --all-namespaces
NAMESPACE     NAME                                    READY   STATUS    RESTARTS   AGE
kube-system   coredns-576cbf47c7-nmphm                1/1     Running   0          22h
kube-system   coredns-576cbf47c7-w5mhv                1/1     Running   0          22h
kube-system   kube-apiserver-k8s-master-01            1/1     Running   0          25h
kube-system   kube-apiserver-k8s-master-02            1/1     Running   0          22h
kube-system   kube-apiserver-k8s-master-03            1/1     Running   0          11h
kube-system   kube-controller-manager-k8s-master-01   1/1     Running   0          25h
kube-system   kube-controller-manager-k8s-master-02   1/1     Running   0          22h
kube-system   kube-controller-manager-k8s-master-03   1/1     Running   0          11h
kube-system   kube-flannel-ds-amd64-cl4kb             1/1     Running   1          22h
kube-system   kube-flannel-ds-amd64-prvvj             1/1     Running   0          11h
kube-system   kube-flannel-ds-amd64-rghg4             1/1     Running   0          22h
kube-system   kube-proxy-2vsqh                        1/1     Running   0          24h
kube-system   kube-proxy-mvf9h                        1/1     Running   0          11h
kube-system   kube-proxy-wvtrz                        1/1     Running   0          25h
kube-system   kube-scheduler-k8s-master-01            1/1     Running   0          25h
kube-system   kube-scheduler-k8s-master-02            1/1     Running   0          22h
kube-system   kube-scheduler-k8s-master-03            1/1     Running   0          11h
```
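In a longer list it helps to print only the pods that are not Running or not fully ready. A minimal sketch; `unhealthy_pods` is a hypothetical helper that assumes the default column layout of `kubectl get po --all-namespaces` shown above (NAMESPACE, NAME, READY, STATUS, ...). Pods of finished Jobs (STATUS `Completed`) would also be printed.

```shell
#!/bin/sh
# Read "kubectl get po --all-namespaces" output on stdin and print
# "namespace/pod READY STATUS" for every pod that is not Running
# or whose READY count x/y has x != y.
unhealthy_pods() {
  awk 'NR > 1 {
         split($3, r, "/")
         if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2 " " $3 " " $4
       }'
}

# Usage:
#   kubectl get po --all-namespaces | unhealthy_pods
```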

7. Installing the dashboard (I chose to install it from k8s-master-03):

7.1 Prepare the image:

k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.0

7.2 Get the YAML file:

[root@k8s-master-03 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

Edit the downloaded kubernetes-dashboard.yaml as follows:

```
[root@k8s-master-03 ~]# cat kubernetes-dashboard.yaml
# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---
# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.0
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---
# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard
```

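(Note: the parts highlighted in red, `type: NodePort` and `nodePort: 30001` in the Service, were added to allow remote access.)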

7.3 Create the resources:

```
[root@k8s-master-03 ~]# kubectl apply -f kubernetes-dashboard.yaml
secret/kubernetes-dashboard-certs created
serviceaccount/kubernetes-dashboard created
role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created
deployment.apps/kubernetes-dashboard created
service/kubernetes-dashboard created
```

```
[root@k8s-master-03 ~]# kubectl get pods --all-namespaces
NAMESPACE     NAME                                    READY   STATUS    RESTARTS   AGE
kube-system   coredns-576cbf47c7-nmphm                1/1     Running   0          23h
kube-system   coredns-576cbf47c7-w5mhv                1/1     Running   0          23h
kube-system   kube-apiserver-k8s-master-01            1/1     Running   0          25h
kube-system   kube-apiserver-k8s-master-02            1/1     Running   0          22h
kube-system   kube-apiserver-k8s-master-03            1/1     Running   0          12h
kube-system   kube-controller-manager-k8s-master-01   1/1     Running   0          25h
kube-system   kube-controller-manager-k8s-master-02   1/1     Running   0          22h
kube-system   kube-controller-manager-k8s-master-03   1/1     Running   0          12h
kube-system   kube-flannel-ds-amd64-cl4kb             1/1     Running   1          23h
kube-system   kube-flannel-ds-amd64-prvvj             1/1     Running   0          12h
kube-system   kube-flannel-ds-amd64-rghg4             1/1     Running   0          23h
kube-system   kube-proxy-2vsqh                        1/1     Running   0          25h
kube-system   kube-proxy-mvf9h                        1/1     Running   0          12h
kube-system   kube-proxy-wvtrz                        1/1     Running   0          25h
kube-system   kube-scheduler-k8s-master-01            1/1     Running   0          25h
kube-system   kube-scheduler-k8s-master-02            1/1     Running   0          22h
kube-system   kube-scheduler-k8s-master-03            1/1     Running   0          12h
kube-system   kubernetes-dashboard-77fd78f978-7rczc   1/1     Running   0          8m36s
```

(The pod shows up on all three masters.)

7.4 Create a login token (run on k8s-master-03):

```
[root@k8s-master-03 ~]# cat admin-user.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kube-system
```

```
[root@k8s-master-03 ~]# kubectl create -f admin-user.yaml
serviceaccount/admin created
clusterrolebinding.rbac.authorization.k8s.io/admin created
```

```
[root@k8s-master-03 ~]# kubectl describe serviceaccount admin -n kube-system
Name:                admin
Namespace:           kube-system
Labels:              k8s-app=kubernetes-dashboard
Annotations:         <none>
Image pull secrets:  <none>
Mountable secrets:   admin-token-96xbr
Tokens:              admin-token-96xbr
Events:              <none>
```

```
[root@k8s-master-03 ~]# kubectl describe secret admin-token-96xbr -n kube-system
Name:         admin-token-96xbr
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: admin
              kubernetes.io/service-account.uid: 546a18f5-dddd-11e8-8392-000c29666ccc

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1025 bytes
namespace:  11 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi10b2tlbi05NnhiciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjU0NmExOGY1LWRkZGQtMTFlOC04MzkyLTAwMGMyOTY2NmNjYyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTphZG1pbiJ9.GInI4jFvfYMKGLoJ-5PhVm9d8MiJeXg97oJmgX3hMreAUAUdRGZz2VLSc0ig3msw_VBg8JYb2pPQjWpYCR2bwNXMrN-FDPq3Ym6wZMittLTmZCHcKwHKRWNnomKbQsJf6wE8dN6Dws-eSYA66NqI8PXiCKao3XnQVbKz9eFMcl7W4u0u4T_0T1I0xqEhlsPReGyTQ1RyHfdTphT32Wo7BELsAEN69xscHFaL7JQlgry_boHO3RnIr8S-7bSnJBCKOVJZ9NMu_2TyH_81lYQZASkQCh1H7BwJFXIETvG6zcxrTb8FSUtgtEc3OjIWPYFnlrdaPhSbvU54yHfTCWrUUw
```
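The token is a JWT, so its payload can be decoded locally to confirm which service account it belongs to before pasting it into the login page. A minimal sketch; `jwt_payload` is a hypothetical helper, it does not verify the signature, and it assumes GNU `base64 -d`. The commented `kubectl` line shows a way to read the token in one step with jsonpath, assuming the token Secret was auto-created for the ServiceAccount (as it is on 1.12; Kubernetes stopped auto-creating these Secrets in 1.24).

```shell
#!/bin/sh
# Decode (without verifying) the payload of a JWT such as a service-account
# token. JWT segments are unpadded base64url, so map "-_" back to "+/" and
# restore the "=" padding before handing the text to base64 -d.
jwt_payload() {
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  case $(( ${#seg} % 4 )) in
    2) seg="$seg==" ;;
    3) seg="$seg=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}

# Fetch the admin token in one step instead of two describe calls:
#   TOKEN=$(kubectl -n kube-system get secret \
#     "$(kubectl -n kube-system get sa admin -o jsonpath='{.secrets[0].name}')" \
#     -o jsonpath='{.data.token}' | base64 -d)
#   jwt_payload "$TOKEN"
```

For the token above, the decoded JSON should contain `"sub":"system:serviceaccount:kube-system:admin"`, i.e. the `admin` account bound to `cluster-admin`.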

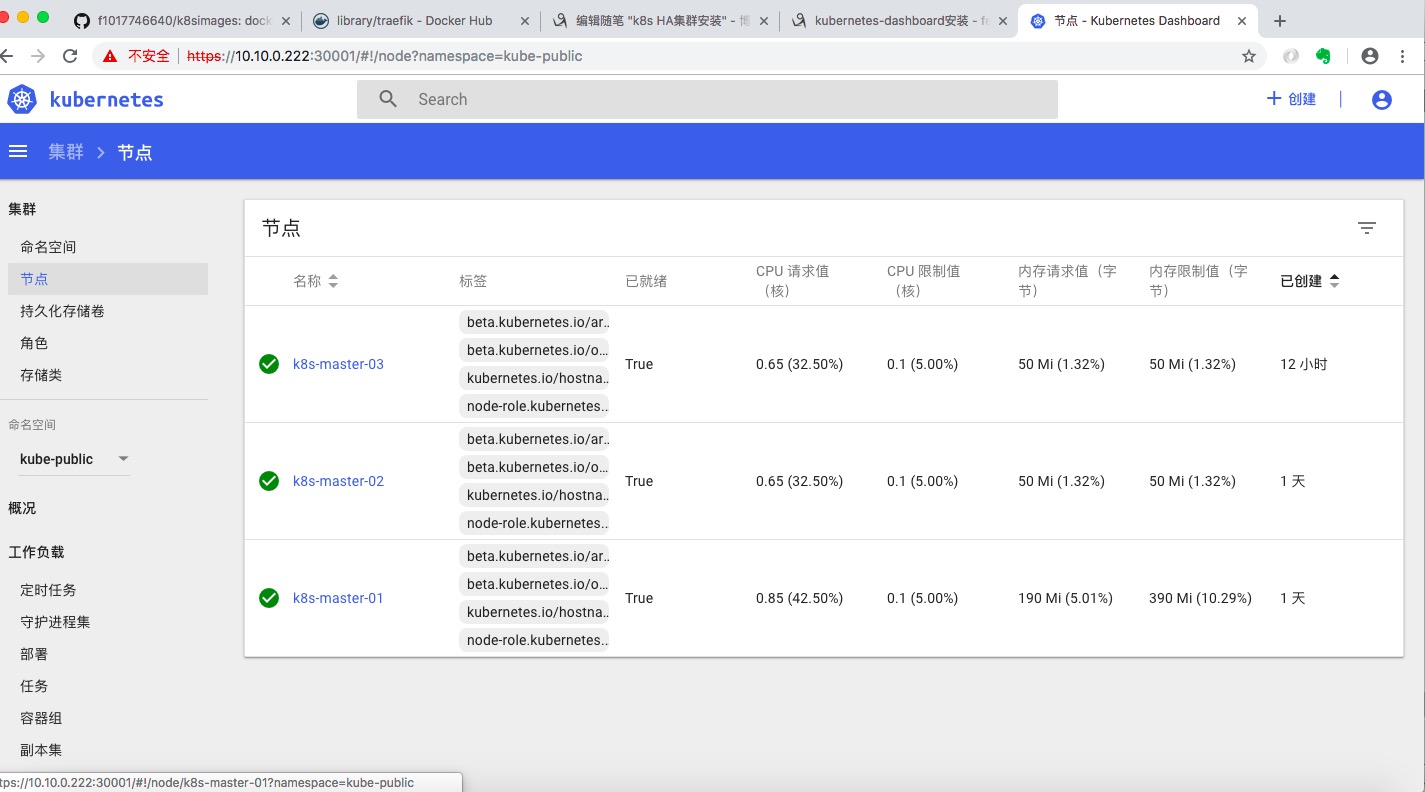

Open https://10.10.0.222:30001, enter the token obtained above, and you should see the page shown below:

8. Installing heapster, the dashboard's metrics add-on:

8.1 Images (ideally present on all three masters):

```
[root@k8s-master-01 ~]# docker images|grep heapster
k8s.gcr.io/heapster-amd64            v1.5.4   72d68eecf40c   3 months ago    75.3MB
k8s.gcr.io/heapster-influxdb-amd64   v1.3.3   577260d221db   14 months ago   12.5MB
k8s.gcr.io/heapster-grafana-amd64    v4.4.3   8cb3de219af7   14 months ago   152MB
```
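Since the pods may be scheduled on any master, it is worth checking each one for the required images before applying the YAML. A minimal sketch; `missing_images` and the `REQUIRED_IMAGES` list are hypothetical, the list simply mirrors the three images above, and the function expects full `docker images` output including the header line.

```shell
#!/bin/sh
# Images this add-on needs (REPOSITORY:TAG), taken from the list in 8.1.
REQUIRED_IMAGES="k8s.gcr.io/heapster-amd64:v1.5.4
k8s.gcr.io/heapster-influxdb-amd64:v1.3.3
k8s.gcr.io/heapster-grafana-amd64:v4.4.3"

# Read "docker images" output (with header) on stdin and print every
# required image that is not present locally.
missing_images() {
  present=$(awk 'NR > 1 { print $1 ":" $2 }')
  printf '%s\n' "$REQUIRED_IMAGES" | while IFS= read -r img; do
    [ -n "$img" ] || continue
    printf '%s\n' "$present" | grep -qxF "$img" || echo "$img"
  done
}

# Usage on each master:
#   docker images | missing_images
```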

8.2 Get the YAML file:

https://raw.githubusercontent.com/Lentil1016/kubeadm-ha/1.12.1/plugin/heapster.yaml

I modified this file as follows:

```
[root@k8s-master-03 ~]# cat heapster.yaml
apiVersion: v1
kind: Service
metadata:
  name: monitoring-grafana
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Grafana"
spec:
  # On production clusters, consider setting up auth for grafana, and
  # exposing Grafana either using a LoadBalancer or a public IP.
  # type: LoadBalancer
  type: NodePort
  ports:
    - port: 80
      protocol: TCP
      targetPort: ui
      nodePort: 30005
  selector:
    k8s-app: influxGrafana
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: heapster-config
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  NannyConfiguration: |-
    apiVersion: nannyconfig/v1alpha1
    kind: NannyConfiguration
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: eventer-config
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  NannyConfiguration: |-
    apiVersion: nannyconfig/v1alpha1
    kind: NannyConfiguration
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: heapster-v1.5.4
  namespace: kube-system
  labels:
    k8s-app: heapster
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v1.5.4
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: heapster
      version: v1.5.4
  template:
    metadata:
      labels:
        k8s-app: heapster
        version: v1.5.4
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      priorityClassName: system-cluster-critical
      containers:
        - image: k8s.gcr.io/heapster-amd64:v1.5.4
          name: heapster
          livenessProbe:
            httpGet:
              path: /healthz
              port: 8082
              scheme: HTTP
            initialDelaySeconds: 180
            timeoutSeconds: 5
          command:
            - /heapster
            - --source=kubernetes.summary_api:''
            - --sink=influxdb:http://monitoring-influxdb:8086
        - image: k8s.gcr.io/heapster-amd64:v1.5.4
          name: eventer
          command:
            - /eventer
            - --source=kubernetes:''
            - --sink=influxdb:http://monitoring-influxdb:8086
      volumes:
        - name: heapster-config-volume
          configMap:
            name: heapster-config
        - name: eventer-config-volume
          configMap:
            name: eventer-config
      serviceAccountName: kubernetes-admin
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
        - key: "CriticalAddonsOnly"
          operator: "Exists"
---
kind: Service
apiVersion: v1
metadata:
  name: heapster
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Heapster"
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 8082
      nodePort: 30006
  selector:
    k8s-app: heapster
---
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: monitoring-influxdb-grafana-v4
  namespace: kube-system
  labels:
    k8s-app: influxGrafana
    version: v4
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: influxGrafana
      version: v4
  template:
    metadata:
      labels:
        k8s-app: influxGrafana
        version: v4
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      priorityClassName: system-cluster-critical
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      containers:
        - name: influxdb
          image: k8s.gcr.io/heapster-influxdb-amd64:v1.3.3
          resources:
            limits:
              cpu: 100m
              memory: 500Mi
            requests:
              cpu: 100m
              memory: 500Mi
          ports:
            - name: http
              containerPort: 8083
            - name: api
              containerPort: 8086
          volumeMounts:
            - name: influxdb-persistent-storage
              mountPath: /data
        - name: grafana
          image: k8s.gcr.io/heapster-grafana-amd64:v4.4.3
          resources:
            # keep request = limit to keep this container in guaranteed class
            limits:
              cpu: 100m
              memory: 100Mi
            requests:
              cpu: 100m
              memory: 100Mi
          env:
            # This variable is required to setup templates in Grafana.
            - name: INFLUXDB_SERVICE_URL
              value: http://monitoring-influxdb:8086
            # The following env variables are required to make Grafana accessible via
            # the kubernetes api-server proxy. On production clusters, we recommend
            # removing these env variables, setup auth for grafana, and expose the grafana
            # service using a LoadBalancer or a public IP.
            - name: GF_AUTH_BASIC_ENABLED
              value: "false"
            - name: GF_AUTH_ANONYMOUS_ENABLED
              value: "true"
            - name: GF_AUTH_ANONYMOUS_ORG_ROLE
              value: Admin
            - name: GF_SERVER_ROOT_URL
              value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy/
          ports:
            - name: ui
              containerPort: 3000
          volumeMounts:
            - name: grafana-persistent-storage
              mountPath: /var
      volumes:
        - name: influxdb-persistent-storage
          emptyDir: {}
        - name: grafana-persistent-storage
          emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  name: monitoring-influxdb
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "InfluxDB"
spec:
  type: NodePort
  ports:
    - name: http
      port: 8083
      targetPort: 8083
    - name: api
      port: 8086
      targetPort: 8086
      nodePort: 30007
  selector:
    k8s-app: influxGrafana
```

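(NodePort settings were added to the Services: 30005 for Grafana, 30006 for heapster and 30007 for the InfluxDB API port.)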

```
[root@k8s-master-03 ~]# kubectl apply -f heapster.yaml
service/monitoring-grafana created
serviceaccount/heapster created
configmap/heapster-config created
configmap/eventer-config created
deployment.extensions/heapster-v1.5.4 created
service/heapster created
deployment.extensions/monitoring-influxdb-grafana-v4 created
service/monitoring-influxdb created
```

8.3 Check the result:

```
[root@k8s-master-03 ~]# kubectl get pods,svc --all-namespaces
NAMESPACE     NAME                                                  READY   STATUS    RESTARTS   AGE
kube-system   pod/coredns-576cbf47c7-nmphm                          1/1     Running   0          24h
kube-system   pod/coredns-576cbf47c7-w5mhv                          1/1     Running   0          24h
kube-system   pod/kube-apiserver-k8s-master-01                      1/1     Running   0          27h
kube-system   pod/kube-apiserver-k8s-master-02                      1/1     Running   0          24h
kube-system   pod/kube-apiserver-k8s-master-03                      1/1     Running   0          13h
kube-system   pod/kube-controller-manager-k8s-master-01             1/1     Running   0          27h
kube-system   pod/kube-controller-manager-k8s-master-02             1/1     Running   0          24h
kube-system   pod/kube-controller-manager-k8s-master-03             1/1     Running   0          13h
kube-system   pod/kube-flannel-ds-amd64-cl4kb                       1/1     Running   1          24h
kube-system   pod/kube-flannel-ds-amd64-prvvj                       1/1     Running   0          13h
kube-system   pod/kube-flannel-ds-amd64-rghg4                       1/1     Running   0          24h
kube-system   pod/kube-proxy-2vsqh                                  1/1     Running   0          26h
kube-system   pod/kube-proxy-mvf9h                                  1/1     Running   0          13h
kube-system   pod/kube-proxy-wvtrz                                  1/1     Running   0          27h
kube-system   pod/kube-scheduler-k8s-master-01                      1/1     Running   0          27h
kube-system   pod/kube-scheduler-k8s-master-02                      1/1     Running   0          24h
kube-system   pod/kube-scheduler-k8s-master-03                      1/1     Running   0          13h
kube-system   pod/kubernetes-dashboard-77fd78f978-7rczc             1/1     Running   0          108m
kube-system   pod/monitoring-influxdb-grafana-v4-65cc9bb8c8-qmhb4   2/2     Running   0          9m56s

NAMESPACE     NAME                           TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                         AGE
default       service/kubernetes             ClusterIP   10.96.0.1        <none>        443/TCP                         27h
kube-system   service/heapster               NodePort    10.101.21.123    <none>        80:30006/TCP                    9m56s
kube-system   service/kube-dns               ClusterIP   10.96.0.10       <none>        53/UDP,53/TCP                   27h
kube-system   service/kubernetes-dashboard   NodePort    10.108.219.183   <none>        443:30001/TCP                   108m
kube-system   service/monitoring-grafana     NodePort    10.111.38.86     <none>        80:30005/TCP                    9m56s
kube-system   service/monitoring-influxdb    NodePort    10.107.91.86     <none>        8083:30880/TCP,8086:30007/TCP   9m56s
```
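In the `PORT(S)` column each entry has the form `port:nodePort/protocol`. Note that InfluxDB's port 8083, which has no explicit `nodePort` in heapster.yaml, was given a randomly allocated one (30880). A minimal sketch that lists every NodePort mapping; `node_ports` is hypothetical and assumes the column layout of plain `kubectl get svc --all-namespaces` (which, unlike `get pods,svc`, prints names without the `service/` prefix).

```shell
#!/bin/sh
# Read "kubectl get svc --all-namespaces" output on stdin and print
# "namespace/name port -> nodePort" for every NodePort mapping.
# Columns: NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
node_ports() {
  awk 'NR > 1 && $3 == "NodePort" {
         n = split($6, ports, ",")
         for (i = 1; i <= n; i++) {
           # each entry looks like 8086:30007/TCP
           split(ports[i], pp, "[:/]")
           print $1 "/" $2 " " pp[1] " -> " pp[2]
         }
       }'
}

# Usage:
#   kubectl get svc --all-namespaces | node_ports
```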

9. Adding a worker node to the cluster:

9.1 Components needed on the node:

kubelet、kube-proxy、docker、kubeadm

9.2 Images:

k8s.gcr.io/kube-proxy:v1.12.1

k8s.gcr.io/pause:3.1

(Install kubelet, kubeadm and docker the same way as above; the steps are omitted here.)

9.3 List the tokens (on any master):

```
[root@k8s-master-03 ~]# kubeadm token list
TOKEN                     TTL         EXPIRES                     USAGES                   DESCRIPTION   EXTRA GROUPS
7igv4r.pfh4zf7h8eao43k7   <invalid>   2018-11-01T20:12:13+08:00   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token
ks930p.auijb1h0or3o87f9   <invalid>   2018-11-02T09:41:31+08:00   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token
q7tox4.5j53kpgdob45f49i   <invalid>   2018-11-01T22:58:18+08:00   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token
z4q8gj.pyxlik9groyp6t3e   <invalid>   2018-11-01T20:40:28+08:00   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token
```

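Every token shows a TTL of `<invalid>`, i.e. all of them have expired (kubeadm bootstrap tokens are valid for 24 hours by default), so a new one has to be created: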

```
[root@k8s-master-03 ~]# kubeadm token create
I1102 18:24:59.302880 28667 version.go:93] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://storage.googleapis.com/kubernetes-release/release/stable-1.txt: x509: certificate is valid for www.webhostingtest1.com, webhostingtest1.com, not storage.googleapis.com
I1102 18:24:59.302947 28667 version.go:94] falling back to the local client version: v1.12.2
txqfdo.1steqzihimchr82l
[root@k8s-master-03 ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
8488d362ce896597e9d6f23c825b60447b6e1fdb494ce72d32843d02d2d4b200
[root@k8s-master-03 ~]# kubeadm token list
TOKEN                     TTL         EXPIRES                     USAGES                   DESCRIPTION   EXTRA GROUPS
7igv4r.pfh4zf7h8eao43k7   <invalid>   2018-11-01T20:12:13+08:00   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token
ks930p.auijb1h0or3o87f9   <invalid>   2018-11-02T09:41:31+08:00   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token
q7tox4.5j53kpgdob45f49i   <invalid>   2018-11-01T22:58:18+08:00   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token
txqfdo.1steqzihimchr82l   23h         2018-11-03T18:24:59+08:00   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token
z4q8gj.pyxlik9groyp6t3e   <invalid>   2018-11-01T20:40:28+08:00   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token
```
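Expired tokens can no longer be used to join, so if they linger in the list they can be removed explicitly with `kubeadm token delete`. A minimal sketch; `expired_tokens` is a hypothetical helper that relies on the TTL column showing `<invalid>` for expired tokens, as in the output above.

```shell
#!/bin/sh
# Read "kubeadm token list" output on stdin and print the tokens whose
# TTL column is "<invalid>", i.e. tokens that have already expired.
expired_tokens() {
  awk 'NR > 1 && $2 == "<invalid>" { print $1 }'
}

# Usage on a master (xargs -r skips the delete when nothing is expired):
#   kubeadm token list | expired_tokens | xargs -r -n1 kubeadm token delete
```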

```
[root@k8s-node-01 ~]# kubeadm join 10.10.0.172:6443 --token txqfdo.1steqzihimchr82l --discovery-token-ca-cert-hash sha256:8488d362ce896597e9d6f23c825b60447b6e1fdb494ce72d32843d02d2d4b200
[preflight] running pre-flight checks
[discovery] Trying to connect to API Server "10.10.0.172:6443"
[discovery] Created cluster-info discovery client, requesting info from "https://10.10.0.172:6443"
[discovery] Requesting info from "https://10.10.0.172:6443" again to validate TLS against the pinned public key
[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "10.10.0.172:6443"
[discovery] Successfully established connection with API Server "10.10.0.172:6443"
[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.12" ConfigMap in the kube-system namespace
[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[preflight] Activating the kubelet service
[tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...
[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-node-01" as an annotation

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.
```

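Alternatively, `--print-join-command` creates a token and prints the complete join command in one step (the output below comes from a different cluster, hence the different address):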

```
[root@offline-k8s-master ~]# kubeadm token create --print-join-command
kubeadm join 10.0.0.200:6443 --token jjbxr5.ee6c4kh6vof9zu1m --discovery-token-ca-cert-hash sha256:139438d7734c9edd08e1beb99dccabcd5c613b14f3a0f7abd07b097a746101ff
```
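When the parts are gathered by hand instead (a token from `kubeadm token create` plus the `openssl` hash shown earlier), a small check catches copy-and-paste mistakes before running the command on the node. A minimal sketch; `build_join_cmd` is hypothetical, and the token pattern `[a-z0-9]{6}.[a-z0-9]{16}` is kubeadm's bootstrap-token format.

```shell
#!/bin/sh
# Assemble a kubeadm join command from API endpoint, bootstrap token and
# CA public-key hash, validating the token and hash formats first.
build_join_cmd() {
  endpoint=$1 token=$2 hash=$3
  printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' \
    || { echo "bad token: $token" >&2; return 1; }
  printf '%s\n' "$hash" | grep -Eq '^[0-9a-f]{64}$' \
    || { echo "bad hash: $hash" >&2; return 1; }
  echo "kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash sha256:$hash"
}

# Usage on a master ($HASH holds the output of the openssl pipeline):
#   build_join_cmd 10.10.0.172:6443 "$(kubeadm token create)" "$HASH"
```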

After a few minutes, check the nodes from a master:

```
[root@k8s-master-01 ~]# kubectl get nodes
NAME            STATUS   ROLES    AGE   VERSION
k8s-master-01   Ready    master   46h   v1.12.2
k8s-master-02   Ready    master   45h   v1.12.2
k8s-master-03   Ready    master   32h   v1.12.2
k8s-node-01     Ready    <none>   79s   v1.12.2
```
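Rather than re-running the command by hand while the new node comes up, a script can poll until every node reports `Ready`. A minimal sketch; `all_nodes_ready` is a hypothetical helper, it treats any STATUS other than exactly `Ready` (for example `NotReady`) as not ready, and the 10-second interval is arbitrary.

```shell
#!/bin/sh
# Read "kubectl get nodes" output on stdin; succeed only if at least one
# node is listed and every node's STATUS column is exactly "Ready".
all_nodes_ready() {
  awk 'NR > 1 { n++; if ($2 != "Ready") bad++ }
       END { exit !(n > 0 && bad == 0) }'
}

# Usage: wait for the freshly joined node.
#   until kubectl get nodes | all_nodes_ready; do sleep 10; done
```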

10. Enabling tab completion for kubectl commands:

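```shell
# Install bash-completion and load it into the current shell
yum install -y bash-completion > /dev/null
source /usr/share/bash-completion/bash_completion
# Enable kubectl completion now, and for future login shells via /etc/profile
source <(kubectl completion bash)
echo "source <(kubectl completion bash)" >> /etc/profile
```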
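The last `echo ... >> /etc/profile` adds another copy of the line every time the setup is re-run. A minimal sketch of an idempotent alternative; `append_once` is a hypothetical helper that appends a line only if the file does not already contain that exact line.

```shell
#!/bin/sh
# Append a line to a file only if that exact line is not already present,
# so re-running the setup does not stack duplicate entries.
append_once() {
  line=$1 file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage, in place of the plain echo above:
#   append_once 'source <(kubectl completion bash)' /etc/profile
```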

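11. The pod network used above is flannel. If you choose Calico instead, the master needs the following images (this example is from a separate single-master v1.14.0 cluster):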

```
[root@k8s-master-1 ~]# docker images
REPOSITORY                           TAG       IMAGE ID       CREATED         SIZE
calico/node                          v3.6.1    b4d7c4247c3a   31 hours ago    73.1MB
calico/cni                           v3.6.1    c7d27197e298   31 hours ago    84.3MB
calico/kube-controllers              v3.6.1    0bd1f99c7034   31 hours ago    50.9MB
k8s.gcr.io/kube-proxy                v1.14.0   5cd54e388aba   3 days ago      82.1MB
k8s.gcr.io/kube-controller-manager   v1.14.0   b95b1efa0436   3 days ago      158MB
k8s.gcr.io/kube-apiserver            v1.14.0   ecf910f40d6e   3 days ago      210MB
k8s.gcr.io/kube-scheduler            v1.14.0   00638a24688b   3 days ago      81.6MB
k8s.gcr.io/coredns                   1.3.1     eb516548c180   2 months ago    40.3MB
k8s.gcr.io/etcd                      3.3.10    2c4adeb21b4f   3 months ago    258MB
k8s.gcr.io/pause                     3.1       da86e6ba6ca1   15 months ago   742kB
```

kubeadm init --kubernetes-version=1.14.0 --pod-network-cidr=20.10.0.0/16 --apiserver-advertise-address=10.20.26.21 --node-name=k8s-master-1

kubectl apply -f https://docs.projectcalico.org/v3.6/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml
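The Calico manifest defines a default IP pool (`CALICO_IPV4POOL_CIDR`), which in the v3.6 manifests is 192.168.0.0/16. When `--pod-network-cidr` is set to something else, as with 20.10.0.0/16 above, Calico's install guide suggests downloading the manifest and replacing that value before applying it. A minimal sketch; `set_pool_cidr` is hypothetical, and it assumes the default CIDR appears literally in the downloaded file (it refuses to edit otherwise).

```shell
#!/bin/sh
# Replace Calico's default pool CIDR in a downloaded manifest with the
# cluster's --pod-network-cidr (GNU sed -i; "?" is used as the s delimiter
# because the CIDR contains "/").
set_pool_cidr() {
  manifest=$1 cidr=$2
  grep -q '192\.168\.0\.0/16' "$manifest" \
    || { echo "default CIDR not found in $manifest" >&2; return 1; }
  sed -i "s?192\.168\.0\.0/16?$cidr?g" "$manifest"
}

# Usage:
#   curl -fsSLO https://docs.projectcalico.org/v3.6/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml
#   set_pool_cidr calico.yaml 20.10.0.0/16
#   kubectl apply -f calico.yaml
```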

```
[root@k8s-master-1 ~]# kubectl get po -n kube-system
NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-5cbcccc885-bwcwp   1/1     Running   0          72m
calico-node-64rjg                          1/1     Running   0          32s
calico-node-blp9h                          1/1     Running   0          72m
calico-node-xd4bq                          1/1     Running   0          27m
coredns-fb8b8dccf-r8b8f                    1/1     Running   0          88m
coredns-fb8b8dccf-v8jvx                    1/1     Running   0          88m
etcd-k8s-master-1                          1/1     Running   0          87m
kube-apiserver-k8s-master-1                1/1     Running   0          87m
kube-controller-manager-k8s-master-1       1/1     Running   0          87m
kube-proxy-9q7mz                           1/1     Running   0          27m
kube-proxy-qnfvz                           1/1     Running   0          88m
kube-proxy-xbstx                           1/1     Running   0          31s
kube-scheduler-k8s-master-1                1/1     Running   0          87m
[root@k8s-master-1 ~]# kubectl get nodes
NAME           STATUS   ROLES    AGE   VERSION
k8s-master-1   Ready    master   88m   v1.14.0
k8s-node-1     Ready    <none>   27m   v1.14.0
k8s-node-2     Ready    <none>   34s   v1.14.0
```

Appendix:

For Calico networking, see:

https://docs.projectcalico.org/v3.6/getting-started/kubernetes/