Installing Ceph Nautilus on CentOS 7

This article records how to install Ceph Nautilus on CentOS 7, along with some problems encountered during installation and how to resolve them.

1. Lab preparation

The machines used in this lab (all virtual machines) are configured as follows:

| Host | IP (disks) | Roles |
| --- | --- | --- |
| node1 | 192.168.1.115 (three disks) | mon, mgr, rgw, osd |
| node2 | 192.168.1.116 (three disks) | mon, mgr, rgw, osd |
| node3 | 192.168.1.117 (three disks) | mon, mgr, rgw, osd |

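2. Hosts and firewall settings

Apply the following configuration on node1, node2, and node3.

You can use passwordless SSH: generate a key pair and copy the public key to each node. Choose one of the two methods below.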

    # Passwordless login from the deployment host to the Ceph nodes (configure hosts first)
    ssh-keygen
    ssh-copy-id node1
    ssh-copy-id node2
    ssh-copy-id node3

Alternatively, save the following as a script, or paste it into a shell and run it directly:

    #!/bin/bash
    yum -y install expect
    ssh-keygen -t rsa -P "" -f /root/.ssh/id_rsa
    for i in 192.168.1.115 192.168.1.116 192.168.1.117; do
      # Inner quotes are escaped so they reach expect instead of ending the shell string;
      # \r sends the Enter key after each answer
      expect -c "
        spawn ssh-copy-id -i /root/.ssh/id_rsa.pub root@$i
        expect {
          \"*yes/no*\" {send \"yes\r\"; exp_continue}
          \"*password*\" {send \"Flyaway.123\r\"; exp_continue}
          \"*Password*\" {send \"Flyaway.123\r\"}
        }
      "
    done

Perform the following steps on all nodes. They can also be run remotely over passwordless SSH.

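    # Add hosts entries
    cat >/etc/hosts <<EOF
    127.0.0.1 localhost localhost.localdomain
    192.168.1.115 node1
    192.168.1.116 node2
    192.168.1.117 node3
    EOF

    # Disable SELinux and the firewall
    # (setenforce 0 takes effect immediately; the config file change takes effect after a reboot)
    # /etc/sysconfig/selinux is a symlink to /etc/selinux/config, and sed -i would replace
    # the symlink with a regular file, so edit the real file
    sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
    setenforce 0
    systemctl stop firewalld.service
    systemctl disable firewalld.service

    # Synchronize the node clocks
    yum install ntpdate -y
    ntpdate pool.ntp.org

    # Set the hostname of each node
    ssh node1 "hostnamectl set-hostname node1" && ssh node2 "hostnamectl set-hostname node2" && ssh node3 "hostnamectl set-hostname node3"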

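    # Alternatively, run this on each node: it looks up the local IP in /etc/hosts
    # and sets the matching name as the hostname (ifconfig requires net-tools)
    hostname `cat /etc/hosts|grep $(ifconfig|grep broadcast|awk '{print $2}')|awk '{print $2}'`;su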
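Before moving on, it can help to confirm that every node name maps to the expected address. The following is a minimal sketch, not part of the original procedure; `check_hosts` is a hypothetical helper name, and it only inspects the hosts file you pass to it.

```shell
# check_hosts FILE NAME IP [NAME IP ...]
# Succeeds only if every NAME is listed on a line of FILE whose first field is IP.
# (Hypothetical helper for illustration; not part of Ceph or CentOS.)
check_hosts() {
  local file="$1"
  local rc=0
  local name ip
  shift
  while [ "$#" -ge 2 ]; do
    name="$1"
    ip="$2"
    shift 2
    # Look for a line starting with the IP that also lists the host name
    if ! awk -v ip="$ip" -v name="$name" '
          $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
          END { exit !found }' "$file"; then
      echo "missing or wrong entry: $ip $name" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

For the three nodes used here, the call would be `check_hosts /etc/hosts node1 192.168.1.115 node2 192.168.1.116 node3 192.168.1.117`.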

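Switching to a domestic mirror

CentOS uses overseas repositories by default, which can slow down or break the installation from within China, so replace them with a domestic mirror. Alibaba Cloud (Aliyun) is used here.

Replace the yum repositories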

    mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup
    wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
    # Drop the Aliyun-internal mirror URLs, which are not reachable from outside Aliyun
    sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo
    yum clean all && yum makecache && yum update -y

Configure the Ceph installation repository

    # Install the Ceph release package from the Aliyun mirror
    rpm -Uvh https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/ceph-release-1-1.el7.noarch.rpm
    # Install EPEL
    yum install https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

    # This step is optional: define the Ceph repositories by hand
    cat <<EOM > /etc/yum.repos.d/ceph.repo
    [noarch]
    name=ceph noarch
    baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch
    enabled=1
    gpgcheck=0
    [x86_64]
    name=ceph x86_64
    baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/x86_64
    enabled=1
    gpgcheck=0
    EOM

Install ceph-deploy on the deployment host

    yum -y install ceph-deploy python-setuptools

Update the yum repositories on the other nodes

    for host in node{2..3}; do scp -r /etc/yum.repos.d/* $host:/etc/yum.repos.d/; done

From node1, install the required packages on all nodes

    for host in node{1..3}; do ssh -l root $host yum install ceph ceph-radosgw -y; done
    ceph -v
    rpm -qa | grep ceph
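Since the packages are installed node by node, it is worth checking that every node ended up with the same Ceph version. A minimal sketch follows; `all_same` is a hypothetical helper that simply checks whether all lines on standard input are identical.

```shell
# all_same: read lines from stdin; succeed only if there is at least one line
# and every line is identical. (Hypothetical helper for illustration.)
all_same() {
  sort -u | awk 'END { exit (NR != 1) }'
}

# Usage sketch, assuming passwordless SSH to node1..node3 as configured earlier:
#   for host in node1 node2 node3; do ssh "$host" ceph -v; done | all_same \
#     && echo "all nodes report the same version"
```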

Deploying the cluster

    # Create the cluster deployment directory
    mkdir -p cluster
    cd cluster/

    # Install Ceph with ceph-deploy (optional; the packages were already installed with yum above)
    # ceph-deploy install node1 node2 node3

    # Add the monitor nodes (mon)
    ceph-deploy new node1 node2 node3
    ceph-deploy mon create-initial

    # Distribute the Ceph configuration and admin keyring
    ceph-deploy admin node1 node2 node3
    ceph -s

    # Create the OSDs on the data disks
    ceph-deploy osd create node1 --data /dev/sdb
    ceph-deploy osd create node1 --data /dev/sdc
    ceph-deploy osd create node2 --data /dev/sdb
    ceph-deploy osd create node2 --data /dev/sdc
    ceph-deploy osd create node3 --data /dev/sdb
    ceph-deploy osd create node3 --data /dev/sdc

    # Create the manager daemons (mgr) and the rgw service
    ceph-deploy mgr create node1 node2 node3
    ceph-deploy rgw create node1 node2 node3
    netstat -tnlp | grep -aiE 6789
    ceph -s

    # On every node, check that the cluster was deployed successfully
    netstat -tnlp | grep -aiE 6789
    ps -ef | grep ceph
    ceph -s
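Right after OSDs are created the cluster may take a while to settle, so instead of running `ceph -s` repeatedly by hand you can poll the health status. This is a sketch only: `wait_for_health` is a hypothetical helper, and the retry count and delay defaults are arbitrary assumptions. It relies on `ceph health` printing a status that begins with `HEALTH_OK` when the cluster is healthy.

```shell
# wait_for_health [CMD] [TRIES] [DELAY_SECONDS]
# Runs CMD (default: "ceph health") until its output starts with HEALTH_OK.
# (Hypothetical helper; the defaults are arbitrary.)
wait_for_health() {
  local cmd="${1:-ceph health}"
  local tries="${2:-30}"
  local delay="${3:-10}"
  local i=0
  local status=""
  while [ "$i" -lt "$tries" ]; do
    # $cmd is intentionally unquoted so "ceph health" splits into command and argument
    status=$($cmd 2>/dev/null)
    case "$status" in
      HEALTH_OK*) echo "$status"; return 0 ;;
    esac
    i=$((i + 1))
    sleep "$delay"
  done
  echo "cluster not healthy after $tries checks: $status" >&2
  return 1
}
```

Calling `wait_for_health` with no arguments checks every 10 seconds for up to 30 attempts.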

Scaling out monitor nodes (this walkthrough only covers adding monitors, not removing them)

Reference: https://blog.csdn.net/nangonghen/article/details/107017258

    # New Ceph nodes should preferably have three disks
    # Add the new nodes to the hosts file
    cat >>/etc/hosts <<EOF
    192.168.1.113 node4
    192.168.1.114 node5
    EOF

    # Passwordless login from the deployment host to the new nodes
    # ssh-keygen
    ssh-copy-id node4
    ssh-copy-id node5

    # Set the hostnames of the new nodes
    ssh node4 "hostnamectl set-hostname node4" && ssh node5 "hostnamectl set-hostname node5"

    # Install ceph and ceph-radosgw on the new nodes
    # (the marker is quoted; unquoted, ">>$i" would be parsed as a redirection to a file)
    for i in node4 node5; do echo "--->> $i"; ssh $i "yum install -y ceph ceph-radosgw"; done

    # Switch to the cluster deployment directory
    cd cluster

    # Edit ceph.conf: add node4 and node5 to mon_initial_members and their IPs to mon_host
    [root@node1 cluster]# vim ceph.conf
    [global]
    fsid = 76539f0b-4130-4ecc-9a82-67580ef01577
    mon_initial_members = node1, node2, node3, node4, node5
    mon_host = 192.168.1.115,192.168.1.116,192.168.1.117,192.168.1.113,192.168.1.114
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
    public_network = 192.168.1.0/24

    # Push the modified ceph.conf to every server in the cluster (still in the cluster directory)
    ceph-deploy --overwrite-conf config push node1 node2 node3 node4 node5

    # Add the monitor nodes
    ceph-deploy mon add node4
    ceph-deploy mon add node5
    ceph -s
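Monitors form a quorum by majority, so the number of monitors determines how many can fail before the cluster loses quorum. The arithmetic can be sketched as follows; the function names are hypothetical and the code only does the calculation, it does not query the cluster.

```shell
# mon_quorum N: smallest majority of N monitors, i.e. floor(N/2) + 1
mon_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# mon_tolerated_failures N: monitors that can be down while a majority remains
mon_tolerated_failures() {
  echo $(( $1 - ($1 / 2 + 1) ))
}
```

Going from three to five monitors raises the quorum size from 2 to 3 and the number of tolerated failures from 1 to 2. An even count such as four tolerates no more failures than three does, which is why odd monitor counts are the usual choice.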

Network upgrade: adding a cluster network

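    # Network upgrade: add a cluster network to ceph.conf
    # public network: Ceph cluster monitoring traffic
    # cluster network: kept separate from the public network; the OSD nodes use it
    # for data synchronization (3 replicas)
    cluster network = 10.0.1.0/24

    # Restart the daemons
    systemctl restart ceph-osd@0.service
    systemctl restart ceph.target
    systemctl restart ceph-mon@node1
    systemctl restart ceph-mds@<hostname>

    # Demonstrate data replication (recovery)

RBD mapping and expansion

    # Mapping an image fails when the kernel does not support some of its features
    [root@node1 ~]# rbd map rbd/rbd4
    rbd: sysfs write failed
    RBD image feature set mismatch. Try disabling features unsupported by the kernel with "rbd feature disable".
    In some cases useful info is found in syslog - try "dmesg | tail".
    rbd: map failed: (6) No such device or address

    # Set the default features for newly created images in ceph.conf, then push the configuration
    cd cluster
    vim ceph.conf
    [global]
    rbd_default_features = 3
    ceph-deploy --overwrite-conf admin node{1..5}

    # A physical host lost power unexpectedly and its virtual machine can no longer start:
    # a stale exclusive lock is left on the image
    [root@controller1 ~]# rbd lock list vms/f9dedf92-05c4-4cf5-a617-4ace50759b95_disk
    There is 1 exclusive lock on this image.
    Locker           ID                   Address
    client.467411015 auto 94250098466816  10.78.10.36:0/4111401624

    # Fix: modify the image features, then remove the lock
    rbd lock list ...
    rbd lock remove ...    # "rbd lock rm" is equivalent

    # Give the nova user an additional capability: allow profile osd
    # (capabilities of an existing user are changed with "ceph auth caps"; arguments elided)
    ceph auth caps xxxx

    # Expand an RBD image
    rbd resize --pool rbd --size 50G rbd5
    rbd map
    # Then grow the filesystem on the mapped device
    # XFS:
    xfs_growfs /dev/rbd0
    # ext3/ext4:
    resize2fs /dev/rbd0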
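The value of `rbd_default_features` is a bitmask, which is why a small number like 3 works around the kernel mismatch: it enables only layering (1) and striping (2). A minimal decoder sketch follows, assuming the standard RBD feature bit values; `decode_rbd_features` is a hypothetical helper name.

```shell
# decode_rbd_features MASK: print the RBD feature names enabled in the bitmask.
# Bit values: layering=1, striping=2, exclusive-lock=4, object-map=8,
# fast-diff=16, deep-flatten=32, journaling=64.
# (Hypothetical helper for illustration.)
decode_rbd_features() {
  local mask="$1"
  local out=""
  local pair bit name
  for pair in 1:layering 2:striping 4:exclusive-lock 8:object-map \
              16:fast-diff 32:deep-flatten 64:journaling; do
    bit="${pair%%:*}"
    name="${pair#*:}"
    if [ $(( mask & bit )) -ne 0 ]; then
      # Append the name, separated by a space once the list is non-empty
      out="$out${out:+ }$name"
    fi
  done
  echo "$out"
}
```

For example, `decode_rbd_features 3` prints `layering striping`, while a mask of 61 expands to `layering exclusive-lock object-map fast-diff deep-flatten`.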

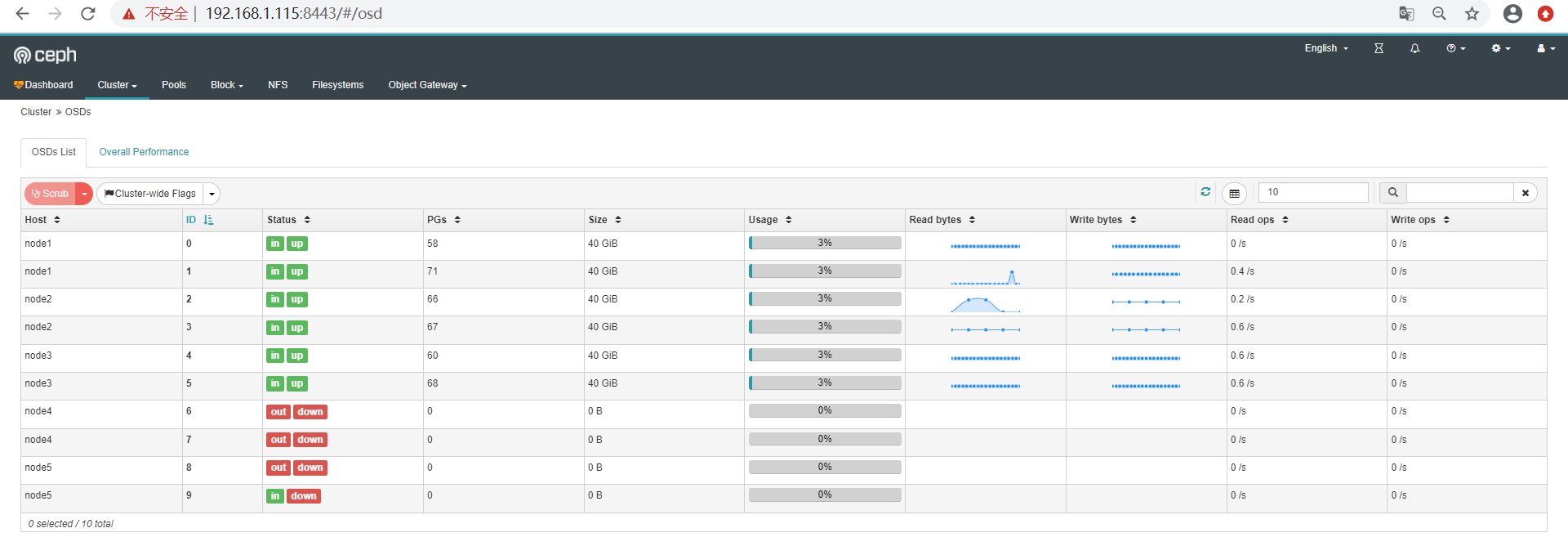

Enabling the web monitoring dashboard

    # Since Nautilus the dashboard is a separate module and must be installed on every mgr node
    yum install -y ceph-mgr-dashboard
    # Enable the dashboard
    ceph mgr module enable dashboard --force
    # SSL/TLS is enabled by default, so create a self-signed certificate
    ceph dashboard create-self-signed-cert
    # Create a user with the administrator role
    ceph dashboard ac-user-create admin admin administrator
    # List the ceph-mgr services
    ceph mgr services
    {
        "dashboard": "https://node0:8443/"
    }
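To check the dashboard from a script, the URL can be pulled out of the `ceph mgr services` output. The sketch below uses `sed` rather than a JSON parser, so it assumes the `"dashboard": "..."` pair appears on a single line as in the output shown above; `dashboard_url` is a hypothetical helper name.

```shell
# dashboard_url: read `ceph mgr services` output on stdin and print the dashboard URL.
# (Hypothetical helper; assumes the "dashboard" key and its value share one line.)
dashboard_url() {
  sed -n 's/.*"dashboard"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Usage sketch (-k because the certificate is self-signed):
#   url=$(ceph mgr services | dashboard_url)
#   curl -k -s -o /dev/null -w '%{http_code}\n' "$url"
```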

Problems you may encounter

1. Running `ceph-deploy install node0 node1 node2 node3` fails with:

    ImportError: No module named pkg_resources

Solution:

    yum install epel-release -y
    yum install python2-pip* -y

2. Cluster installation fails with: GPG key retrieval failed: [Errno 14] curl#37 - "Couldn't open file /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7"

Solution:

    # yum checks the GPG key during installation; the check can be turned off in the repo file
    # vim /etc/yum.repos.d/epel.repo
    [epel]
    name=Extra Packages for Enterprise Linux 7 - $basearch
    #baseurl=http://download.fedoraproject.org/pub/epel/7/$basearch
    metalink=https://mirrors.fedoraproject.org/metalink?repo=epel-7&arch=$basearch
    failovermethod=priority
    enabled=1
    gpgcheck=1
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7
    # Change gpgcheck=1 to gpgcheck=0 so that package signatures are not verified during installation

    sed -i 's/gpgcheck=1/gpgcheck=0/g' /etc/yum.repos.d/epel.repo
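If you would rather be able to switch signature checking back on later, the same change can be made reversibly. This is a sketch under stated assumptions: `set_gpgcheck` is a hypothetical helper, it rewrites every `gpgcheck=` line in the file you pass in, and it keeps a `.bak` copy next to the file.

```shell
# set_gpgcheck FILE VALUE: set every gpgcheck= line in FILE to VALUE (0 or 1),
# keeping the previous contents in FILE.bak. (Hypothetical helper for illustration.)
set_gpgcheck() {
  local file="$1"
  local value="$2"
  cp -p "$file" "$file.bak" || return 1
  # Write to a temporary file first so a failed sed does not truncate the repo file
  sed "s/^gpgcheck=.*/gpgcheck=$value/" "$file.bak" > "$file.tmp" &&
    mv "$file.tmp" "$file"
}
```

With this, `set_gpgcheck /etc/yum.repos.d/epel.repo 0` disables the check and `set_gpgcheck /etc/yum.repos.d/epel.repo 1` turns it back on.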