Reposted from: https://gstreamer.freedesktop.org/documentation/application-development/basics/index.html?gi-language=c

https://blog.csdn.net/shallon_luo/article/details/5393788

Initializing GStreamer

When writing a GStreamer application, you can simply include gst/gst.h to get access to the library functions. Besides that, you will also need to initialize the GStreamer library.

Simple initialization

Before the GStreamer libraries can be used, gst_init has to be called from the main application. This call will perform the necessary initialization of the library as well as parse the GStreamer-specific command line options.

A typical program would have code to initialize GStreamer that looks like this:

```c
#include <stdio.h>
#include <gst/gst.h>

int
main (int argc, char *argv[])
{
  const gchar *nano_str;
  guint major, minor, micro, nano;

  gst_init (&argc, &argv);

  gst_version (&major, &minor, &micro, &nano);

  if (nano == 1)
    nano_str = "(CVS)";
  else if (nano == 2)
    nano_str = "(Prerelease)";
  else
    nano_str = "";

  printf ("This program is linked against GStreamer %u.%u.%u %s\n",
      major, minor, micro, nano_str);

  return 0;
}
```

It is also possible to call the gst_init function with two NULL arguments, in which case no command line options will be parsed by GStreamer.

Elements

The most important object in GStreamer for the application programmer is the GstElement object. An element is the basic building block for a media pipeline. All the different high-level components you will use are derived from GstElement. Every decoder, encoder, demuxer, video or audio output is in fact a GstElement.

What are elements?

For the application programmer, elements are best visualized as black boxes. On one end, you might put something in, the element does something with it, and something else comes out at the other side. For a decoder element, for example, you'd put in encoded data, and the element would output decoded data.

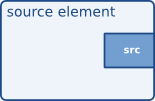

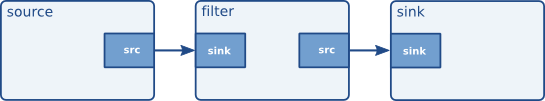

Source elements

Source elements generate data for use by a pipeline, for example reading from disk or from a sound card.

Source elements do not accept data, they only generate data. You can see this in the figure because it only has a source pad (on the right). A source pad can only generate data.

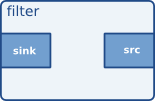

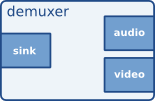

Filters, convertors, demuxers, muxers and codecs

Filters and filter-like elements have both input and output pads. They operate on data that they receive on their input (sink) pads, and will provide data on their output (source) pads. Examples of such elements are a volume element (filter), a video scaler (convertor), an Ogg demuxer or a Vorbis decoder.

Filter-like elements can have any number of source or sink pads. A video demuxer, for example, would have one sink pad and several (1-N) source pads, one for each elementary stream contained in the container format. Decoders, on the other hand, will only have one source pad and one sink pad.

Sink elements

Sink elements are end points in a media pipeline. They accept data but do not produce anything. Disk writing, soundcard playback, and video output would all be implemented by sink elements.

Creating a GstElement

The simplest way to create an element is to use gst_element_factory_make (). This function takes a factory name and an element name for the newly created element. The name of the element is something you can use later on to look up the element in a bin, for example. The name will also be used in debug output. You can pass NULL as the name argument to get a unique, default name.

When you don't need the element anymore, you need to unref it using gst_object_unref (). This decreases the reference count for the element by 1. An element has a refcount of 1 when it gets created. An element gets destroyed completely when the refcount is decreased to 0.

```c
#include <gst/gst.h>

int
main (int argc, char *argv[])
{
  GstElement *element;

  /* init GStreamer */
  gst_init (&argc, &argv);

  /* create element */
  element = gst_element_factory_make ("fakesrc", "source");
  if (!element) {
    g_print ("Failed to create element of type 'fakesrc'\n");
    return -1;
  }

  gst_object_unref (GST_OBJECT (element));

  return 0;
}
```

Using an element as a GObject

A GstElement can have several properties which are implemented using standard GObject properties. The usual GObject methods to query, set and get property values and GParamSpecs are therefore supported.

Every GstElement inherits at least one property from its parent GstObject: the "name" property. This is the name you provide to the functions gst_element_factory_make () or gst_element_factory_create (). You can get and set this property using the functions gst_object_set_name and gst_object_get_name or use the GObject property mechanism as shown below.

```c
#include <gst/gst.h>

int
main (int argc, char *argv[])
{
  GstElement *element;
  gchar *name;

  /* init GStreamer */
  gst_init (&argc, &argv);

  /* create element */
  element = gst_element_factory_make ("fakesrc", "source");
  if (!element) {
    g_print ("Failed to create element of type 'fakesrc'\n");
    return -1;
  }

  /* get name */
  g_object_get (G_OBJECT (element), "name", &name, NULL);
  g_print ("The name of the element is '%s'.\n", name);
  g_free (name);

  gst_object_unref (GST_OBJECT (element));

  return 0;
}
```

Most plugins provide additional properties to provide more information about their configuration or to configure the element. gst-inspect is a useful tool to query the properties of a particular element. It also uses property introspection to give a short explanation of what each property does and which parameter types and ranges it supports.

For more information about GObject properties we recommend you read the GObject manual and an introduction to The Glib Object system.

A GstElement also provides various GObject signals that can be used as a flexible callback mechanism. Here, too, you can use gst-inspect to see which signals a specific element supports. Together, signals and properties are the most basic way in which elements and applications interact.

More about element factories

In the previous section, we briefly introduced the GstElementFactory object as a way to create instances of an element. Element factories, however, are much more than just that. Element factories are the basic types retrieved from the GStreamer registry; they describe all plugins and elements that GStreamer can create. This means that element factories are useful for automated element instancing, such as what autopluggers do, and for creating lists of available elements.

Getting information about an element using a factory

Tools like gst-inspect will provide some generic information about an element, such as the person that wrote the plugin, a descriptive name (and a shortname), a rank and a category. The category can be used to get the type of the element that can be created using this element factory. Examples of categories include Codec/Decoder/Video (video decoder), Codec/Encoder/Video (video encoder), Source/Video (a video generator), Sink/Video (a video output), and all these exist for audio as well, of course. Then, there's also Codec/Demuxer and Codec/Muxer and a whole lot more. gst-inspect will give a list of all factories, and gst-inspect <factory-name> will list all of the above information, and a lot more.

Finding out what pads an element can contain

Perhaps the most powerful feature of element factories is that they contain a full description of the pads that the element can generate, and the capabilities of those pads (in layman's terms: what types of media can stream over those pads), without actually having to load those plugins into memory. This can be used to provide a codec selection list for encoders, or it can be used for autoplugging purposes for media players. All current GStreamer-based media players and autopluggers work this way.

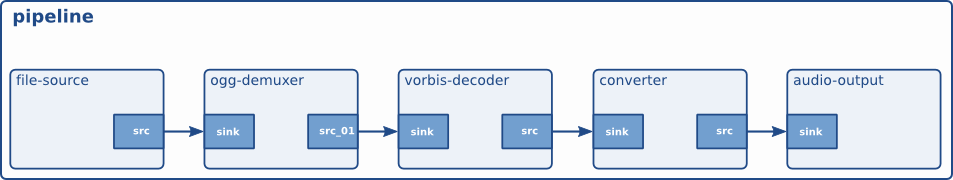

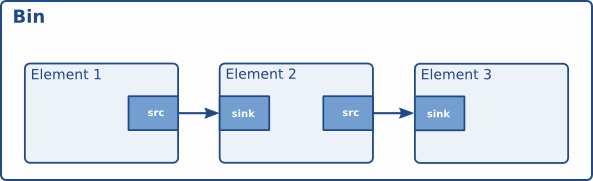

Linking elements

By linking a source element with zero or more filter-like elements and finally a sink element, you set up a media pipeline. Data will flow through the elements. This is the basic concept of media handling in GStreamer.

By linking these three elements, we have created a very simple chain of elements. The effect of this will be that the output of the source element (“element1”) will be used as input for the filter-like element (“element2”). The filter-like element will do something with the data and send the result to the final sink element (“element3”).

Imagine the above graph as a simple Ogg/Vorbis audio decoder. The source is a disk source which reads the file from disk. The second element is an Ogg/Vorbis audio decoder. The sink element is your soundcard, playing back the decoded audio data. We will use this simple graph to construct an Ogg/Vorbis player later in this manual.

In code, the above graph is written like this:

```c
#include <gst/gst.h>

int
main (int argc, char *argv[])
{
  GstElement *pipeline;
  GstElement *source, *filter, *sink;

  /* init */
  gst_init (&argc, &argv);

  /* create pipeline */
  pipeline = gst_pipeline_new ("my-pipeline");

  /* create elements */
  source = gst_element_factory_make ("fakesrc", "source");
  filter = gst_element_factory_make ("identity", "filter");
  sink = gst_element_factory_make ("fakesink", "sink");

  /* must add elements to pipeline before linking them */
  gst_bin_add_many (GST_BIN (pipeline), source, filter, sink, NULL);

  /* link */
  if (!gst_element_link_many (source, filter, sink, NULL)) {
    g_warning ("Failed to link elements!");
  }

  /* [..] */

  gst_object_unref (pipeline);
  return 0;
}
```

For more specific behaviour, there are also the functions gst_element_link () and gst_element_link_pads (). You can also obtain references to individual pads and link those using various gst_pad_link_* () functions. See the API references for more details.

Important: you must add elements to a bin or pipeline before linking them, since adding an element to a bin will disconnect any already existing links. Also, you cannot directly link elements that are not in the same bin or pipeline; if you want to link elements or pads at different hierarchy levels, you will need to use ghost pads (more about ghost pads later).

Element States

After being created, an element will not actually perform any actions yet. You need to change an element's state to make it do something. GStreamer knows four element states, each with a very specific meaning. Those four states are:

- GST_STATE_NULL: this is the default state. No resources are allocated in this state, so transitioning to it will free all resources. The element must be in this state when its refcount reaches 0 and it is freed.

- GST_STATE_READY: in the ready state, an element has allocated all of its global resources, that is, resources that can be kept within streams. You can think about opening devices, allocating buffers and so on. However, the stream is not opened in this state, so the stream position is automatically zero. If a stream was previously opened, it should be closed in this state, and position, properties and such should be reset.

- GST_STATE_PAUSED: in this state, an element has opened the stream, but is not actively processing it. An element is allowed to modify a stream's position, read and process data and such to prepare for playback as soon as the state is changed to PLAYING, but it is not allowed to play the data, which would make the clock run. In summary, PAUSED is the same as PLAYING but without a running clock.

  Elements going into the PAUSED state should prepare themselves for moving over to the PLAYING state as soon as possible. Video or audio outputs would, for example, wait for data to arrive and queue it so they can play it right after the state change. Also, video sinks can already play the first frame (since this does not affect the clock yet). Autopluggers could use this same state transition to already plug together a pipeline. Most other elements, such as codecs or filters, do not need to explicitly do anything in this state, however.

- GST_STATE_PLAYING: in the PLAYING state, an element does exactly the same as in the PAUSED state, except that the clock now runs.

You can change the state of an element using the function gst_element_set_state (). If you set an element to another state, GStreamer will internally traverse all intermediate states. So if you set an element from NULL to PLAYING, GStreamer will internally set the element to READY and PAUSED in between.

When moved to GST_STATE_PLAYING, pipelines will process data automatically. They do not need to be iterated in any form. Internally, GStreamer will start threads that take on this task for them. GStreamer will also take care of switching messages from the pipeline's thread into the application's own thread, by using a GstBus. See Bus for details.

When you set a bin or pipeline to a certain target state, it will usually propagate the state change to all elements within the bin or pipeline automatically, so it's usually only necessary to set the state of the top-level pipeline to start up the pipeline or shut it down. However, when adding elements dynamically to an already-running pipeline, e.g. from within a "pad-added" signal callback, you need to set it to the desired target state yourself using gst_element_set_state () or gst_element_sync_state_with_parent ().

Bins

A bin is a container element. You can add elements to a bin. Since a bin is an element itself, a bin can be handled in the same way as any other element. Therefore, the whole previous chapter (Elements) applies to bins as well.

What are bins

Bins allow you to combine a group of linked elements into one logical element. You do not deal with the individual elements anymore but with just one element, the bin.

The bin will also manage the elements contained in it. It will perform state changes on the elements as well as collect and forward bus messages.

There is one specialized type of bin available to the GStreamer programmer:

- A pipeline: a generic container that manages the synchronization and bus messages of the contained elements. The top-level bin has to be a pipeline; every application thus needs at least one of these.

A pipeline is a top-level bin. It provides a bus for the application and manages the synchronization for its children. As you set it to PAUSED or PLAYING state, data flow will start and media processing will take place. Once started, pipelines will run in a separate thread until you stop them or the end of the data stream is reached.

In GStreamer you usually build the pipeline by manually assembling the individual elements, but when the pipeline is simple enough and you do not need any advanced features, you can take the shortcut: gst_parse_launch ().

```c
/* Build the pipeline */
pipeline = gst_parse_launch ("playbin uri=https://www.freedesktop.org/software/gstreamer-sdk/data/media/sintel_trailer-480p.webm", NULL);
```

Creating a bin

Bins are created in the same way that other elements are created, i.e. using an element factory. There are also convenience functions available (gst_bin_new () and gst_pipeline_new ()). To add elements to a bin or remove elements from a bin, you can use gst_bin_add () and gst_bin_remove (). Note that the bin that you add an element to will take ownership of that element. If you destroy the bin, the element will be dereferenced with it. If you remove an element from a bin, it will be dereferenced automatically.

```c
#include <gst/gst.h>

int
main (int argc, char *argv[])
{
  GstElement *bin, *pipeline, *source, *sink;

  /* init */
  gst_init (&argc, &argv);

  /* create */
  pipeline = gst_pipeline_new ("my_pipeline");
  bin = gst_bin_new ("my_bin");
  source = gst_element_factory_make ("fakesrc", "source");
  sink = gst_element_factory_make ("fakesink", "sink");

  /* First add the elements to the bin */
  gst_bin_add_many (GST_BIN (bin), source, sink, NULL);
  /* add the bin to the pipeline */
  gst_bin_add (GST_BIN (pipeline), bin);

  /* link the elements */
  gst_element_link (source, sink);

  /* [..] */

  gst_object_unref (pipeline);
  return 0;
}
```

There are various functions to lookup elements in a bin. The most commonly used are gst_bin_get_by_name () and gst_bin_get_by_interface (). You can also iterate over all elements that a bin contains using the function gst_bin_iterate_elements (). See the API references of GstBin for details.

Bins manage states of their children

Bins manage the state of all elements contained in them. If you set a bin (or a pipeline, which is a special top-level type of bin) to a certain target state using gst_element_set_state (), it will make sure all elements contained within it will also be set to this state. This means it's usually only necessary to set the state of the top-level pipeline to start up the pipeline or shut it down.

The bin will perform the state changes on all its children from the sink element to the source element. This ensures that the downstream element is ready to receive data when the upstream element is brought to PAUSED or PLAYING. Similarly when shutting down, the sink elements will be set to READY or NULL first, which will cause the upstream elements to receive a FLUSHING error and stop the streaming threads before the elements are set to the READY or NULL state.

Note, however, that if elements are added to a bin or pipeline that's already running, e.g. from within a "pad-added" signal callback, their state will not automatically be brought in line with the current state or target state of the bin or pipeline they were added to. Instead, you need to set them to the desired target state yourself using gst_element_set_state () or gst_element_sync_state_with_parent () when adding elements to an already-running pipeline.

Bus

A bus is a simple system that takes care of forwarding messages from the streaming threads to an application in its own thread context. The advantage of a bus is that an application does not need to be thread-aware in order to use GStreamer, even though GStreamer itself is heavily threaded.

Every pipeline contains a bus by default, so applications do not need to create a bus or anything. The only thing applications should do is set a message handler on a bus, which is similar to a signal handler to an object. When the mainloop is running, the bus will periodically be checked for new messages, and the callback will be called when any message is available.

How to use a bus

There are two different ways to use a bus:

- Run a GLib/Gtk+ main loop (or iterate the default GLib main context yourself regularly) and attach some kind of watch to the bus. This way the GLib main loop will check the bus for new messages and notify you whenever there are messages.

  Typically you would use gst_bus_add_watch () or gst_bus_add_signal_watch () in this case.

  To use a bus, attach a message handler to the bus of a pipeline using gst_bus_add_watch (). This handler will be called whenever the pipeline emits a message to the bus. In this handler, check the message type (see next section) and do something accordingly. The return value of the handler should be TRUE to keep the handler attached to the bus; return FALSE to remove it.

- Check for messages on the bus yourself. This can be done using gst_bus_peek () and/or gst_bus_poll ().

```c
#include <gst/gst.h>

static GMainLoop *loop;

static gboolean
my_bus_callback (GstBus * bus, GstMessage * message, gpointer data)
{
  g_print ("Got %s message\n", GST_MESSAGE_TYPE_NAME (message));

  switch (GST_MESSAGE_TYPE (message)) {
    case GST_MESSAGE_ERROR:{
      GError *err;
      gchar *debug;

      gst_message_parse_error (message, &err, &debug);
      g_print ("Error: %s\n", err->message);
      g_error_free (err);
      g_free (debug);

      g_main_loop_quit (loop);
      break;
    }
    case GST_MESSAGE_EOS:
      /* end-of-stream */
      g_main_loop_quit (loop);
      break;
    default:
      /* unhandled message */
      break;
  }

  /* we want to be notified again the next time there is a message
   * on the bus, so returning TRUE (FALSE means we want to stop watching
   * for messages on the bus and our callback should not be called again)
   */
  return TRUE;
}

gint
main (gint argc, gchar * argv[])
{
  GstElement *pipeline;
  GstBus *bus;
  guint bus_watch_id;

  /* init */
  gst_init (&argc, &argv);

  /* create pipeline, add handler */
  pipeline = gst_pipeline_new ("my_pipeline");

  /* adds a watch for new message on our pipeline's message bus to
   * the default GLib main context, which is the main context that our
   * GLib main loop is attached to below
   */
  bus = gst_pipeline_get_bus (GST_PIPELINE (pipeline));
  bus_watch_id = gst_bus_add_watch (bus, my_bus_callback, NULL);
  gst_object_unref (bus);

  /* [...] */

  /* create a mainloop that runs/iterates the default GLib main context
   * (context NULL), in other words: makes the context check if anything
   * it watches for has happened. When a message has been posted on the
   * bus, the default main context will automatically call our
   * my_bus_callback() function to notify us of that message.
   * The main loop will be run until someone calls g_main_loop_quit()
   */
  loop = g_main_loop_new (NULL, FALSE);
  g_main_loop_run (loop);

  /* clean up */
  gst_element_set_state (pipeline, GST_STATE_NULL);
  gst_object_unref (pipeline);
  g_source_remove (bus_watch_id);
  g_main_loop_unref (loop);

  return 0;
}
```

It is important to know that the handler will be called in the thread context of the mainloop. This means that the interaction between the pipeline and application over the bus is asynchronous, and thus not suited for some real-time purposes, such as cross-fading between audio tracks, doing (theoretically) gapless playback or video effects. All such things should be done in the pipeline context, which is easiest by writing a GStreamer plug-in. It is very useful for its primary purpose, though: passing messages from pipeline to application. The advantage of this approach is that all the threading that GStreamer does internally is hidden from the application and the application developer does not have to worry about thread issues at all.

Note that if you're using the default GLib mainloop integration, you can, instead of attaching a watch, connect to the “message” signal on the bus. This way you don't have to switch() on all possible message types; just connect to the interesting signals in form of message::<type>, where <type> is a specific message type (see the next section for an explanation of message types).

The above snippet could then also be written as:

```c
GstBus *bus;

/* [..] */

bus = gst_pipeline_get_bus (GST_PIPELINE (pipeline));
gst_bus_add_signal_watch (bus);
g_signal_connect (bus, "message::error", G_CALLBACK (cb_message_error), NULL);
g_signal_connect (bus, "message::eos", G_CALLBACK (cb_message_eos), NULL);

/* [..] */
```

If you aren't using a GLib main loop, the asynchronous message signals won't be available by default. You can, however, install a custom sync handler that wakes up the custom main loop and that uses gst_bus_async_signal_func () to emit the signals (see the GstBus documentation for details).

Message types

GStreamer has a few pre-defined message types that can be passed over the bus. The messages are extensible, however. Plug-ins can define additional messages, and applications can decide to either have specific code for those or ignore them. All applications are strongly recommended to at least handle error messages by providing visual feedback to the user.

All messages have a message source, type and timestamp. The message source can be used to see which element emitted the message. For some messages, for example, only the ones emitted by the top-level pipeline will be interesting to most applications (e.g. for state-change notifications). Below is a list of all messages and a short explanation of what they do and how to parse message-specific content.

- Error, warning and information notifications: those are used by elements if a message should be shown to the user about the state of the pipeline. Error messages are fatal and terminate the data-passing. The error should be repaired to resume pipeline activity. Warnings are not fatal, but imply a problem nevertheless. Information messages are for non-problem notifications. All those messages contain a GError with the main error type and message, and optionally a debug string. Both can be extracted using gst_message_parse_error (), _parse_warning () and _parse_info (). Both error and debug strings should be freed after use.

- End-of-stream notification: this is emitted when the stream has ended. The state of the pipeline will not change, but further media handling will stall. Applications can use this to skip to the next song in their playlist. After end-of-stream, it is also possible to seek back in the stream. Playback will then continue automatically. This message has no specific arguments.

- Tags: emitted when metadata was found in the stream. This can be emitted multiple times for a pipeline (e.g. once for descriptive metadata such as artist name or song title, and another one for stream-information, such as samplerate and bitrate). Applications should cache metadata internally. gst_message_parse_tag () should be used to parse the taglist, which should be gst_tag_list_unref ()'ed when no longer needed.

- State-changes: emitted after a successful state change. gst_message_parse_state_changed () can be used to parse the old and new state of this transition.

- Buffering: emitted during caching of network-streams. One can manually extract the progress (in percent) from the message by extracting the "buffer-percent" property from the structure returned by gst_message_get_structure (). See also Buffering.

- Element messages: these are special messages that are unique to certain elements and usually represent additional features. The element's documentation should mention in detail which element messages a particular element may send. As an example, the 'qtdemux' QuickTime demuxer element may send a 'redirect' element message on certain occasions if the stream contains a redirect instruction.

- Application-specific messages: any information on those can be extracted by getting the message structure (see above) and reading its fields. Usually these messages can safely be ignored.

Application messages are primarily meant for internal use in applications in case the application needs to marshal information from some thread into the main thread. This is particularly useful when the application is making use of element signals (as those signals will be emitted in the context of the streaming thread).

Signals

```c
/* Connect to the pad-added signal */
g_signal_connect (source, "pad-added", G_CALLBACK (pad_added_handler), &data);
```

GSignals are a crucial point in GStreamer. They allow you to be notified (by means of a callback) when something interesting has happened. Signals are identified by a name, and each GObject has its own signals.

In this line, we are attaching to the "pad-added" signal of our source (a uridecodebin element). To do so, we use g_signal_connect () and provide the callback function to be used (pad_added_handler) and a data pointer. GStreamer does nothing with this data pointer; it just forwards it to the callback so we can share information with it. In this case, we pass a pointer to the CustomData structure we built specially for this purpose.

The signals that a GstElement generates can be found in its documentation or by using the gst-inspect-1.0 tool.

Signal example: https://gstreamer.freedesktop.org/documentation/tutorials/basic/dynamic-pipelines.html?gi-language=c

Pads and capabilities

As we have seen in Elements, the pads are the element's interface to the outside world. Data streams from one element's source pad to another element's sink pad. The specific type of media that the element can handle will be exposed by the pad's capabilities.

Pad templates

Pads are created from Pad Templates, which indicate all possible Capabilities a Pad could ever have. Templates are useful to create several similar Pads, and also allow early refusal of connections between elements: If the Capabilities of their Pad Templates do not have a common subset (their intersection is empty), there is no need to negotiate further.

Pad Templates can be viewed as the first step in the negotiation process. As the process evolves, actual Pads are instantiated and their Capabilities refined until they are fixed (or negotiation fails).

Pads

A pad type is defined by two properties: its direction and its availability. As we've mentioned before, GStreamer defines two pad directions: source pads and sink pads. This terminology is defined from the view of within the element: elements receive data on their sink pads and generate data on their source pads. Schematically, sink pads are drawn on the left side of an element, whereas source pads are drawn on the right side of an element. In such graphs, data flows from left to right.

Pad directions are very simple compared to pad availability. A pad can have any of three availabilities: always, sometimes and on request. The meaning of those three types is exactly as it says: always pads always exist, sometimes pads exist only in certain cases (and can disappear randomly), and on-request pads appear only if explicitly requested by applications.

Dynamic (or sometimes) pads

Some elements might not have all of their pads when the element is created. This can happen, for example, with an Ogg demuxer element. The element will read the Ogg stream and create dynamic pads for each contained elementary stream (vorbis, theora) when it detects such a stream in the Ogg stream. Likewise, it will delete the pad when the stream ends. This principle is very useful for demuxer elements, for example.

Running gst-inspect oggdemux will show that the element has only one pad: a sink pad called 'sink'. The other pads are “dormant”. You can see this in the pad template because there is an “Exists: Sometimes” property. Depending on the type of Ogg file you play, the pads will be created. We will see that this is very important when you are going to create dynamic pipelines. You can attach a signal handler to an element to inform you when the element has created a new pad from one of its “sometimes” pad templates. The following piece of code is an example of how to do this:

https://gstreamer.freedesktop.org/documentation/tutorials/basic/dynamic-pipelines.html?gi-language=c

```c
#include <gst/gst.h>

static void
cb_new_pad (GstElement *element, GstPad *pad, gpointer data)
{
  gchar *name;

  name = gst_pad_get_name (pad);
  g_print ("A new pad %s was created\n", name);
  g_free (name);

  /* here, you would setup a new pad link for the newly created pad */
  /* [..] */
}

int
main (int argc, char *argv[])
{
  GstElement *pipeline, *source, *demux;
  GMainLoop *loop;

  /* init */
  gst_init (&argc, &argv);

  if (argc < 2) {
    g_printerr ("Usage: %s <ogg file>\n", argv[0]);
    return -1;
  }

  /* create elements */
  pipeline = gst_pipeline_new ("my_pipeline");
  source = gst_element_factory_make ("filesrc", "source");
  g_object_set (source, "location", argv[1], NULL);
  demux = gst_element_factory_make ("oggdemux", "demuxer");

  /* you would normally check that the elements were created properly */

  /* put together a pipeline */
  gst_bin_add_many (GST_BIN (pipeline), source, demux, NULL);
  gst_element_link_pads (source, "src", demux, "sink");

  /* listen for newly created pads */
  g_signal_connect (demux, "pad-added", G_CALLBACK (cb_new_pad), NULL);

  /* start the pipeline */
  gst_element_set_state (GST_ELEMENT (pipeline), GST_STATE_PLAYING);
  loop = g_main_loop_new (NULL, FALSE);
  g_main_loop_run (loop);

  /* [..] */

  /* clean up */
  gst_element_set_state (pipeline, GST_STATE_NULL);
  gst_object_unref (pipeline);
  g_main_loop_unref (loop);
  return 0;
}
```

It is not uncommon to add elements to the pipeline only from within the "pad-added" callback. If you do this, don't forget to set the state of the newly-added elements to the target state of the pipeline using gst_element_set_state () or gst_element_sync_state_with_parent ().

Request pads

An element can also have request pads. These pads are not created automatically but are only created on demand. This is very useful for multiplexers, aggregators and tee elements. Aggregators are elements that merge the content of several input streams together into one output stream. Tee elements are the reverse: they are elements that have one input stream and copy this stream to each of their output pads, which are created on request. Whenever an application needs another copy of the stream, it can simply request a new output pad from the tee element.

The following piece of code shows how you can request a new output pad from a “tee” element:

https://gstreamer.freedesktop.org/documentation/tutorials/basic/multithreading-and-pad-availability.html?gi-language=c

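    static void
    some_function (GstElement *tee)
    {
      GstPad *pad;
      gchar *name;

      /* tee's request template is called "src_%u" in GStreamer 1.x.
       * gst_element_get_request_pad () is deprecated since 1.20 in favour of
       * gst_element_request_pad_simple (), which takes the same arguments. */
      pad = gst_element_get_request_pad (tee, "src_%u");
      name = gst_pad_get_name (pad);
      g_print ("A new pad %s was created\n", name);
      g_free (name);

      /* here, you would link the pad */

      /* [..] */

      /* and, after doing that, free our reference */
      gst_object_unref (GST_OBJECT (pad));
    }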

The gst_element_get_request_pad () method can be used to get a pad from the element based on the name of the pad template. It is also possible to request a pad that is compatible with another pad template. This is very useful if you want to link an element to a multiplexer element and you need to request a pad that is compatible. The method gst_element_get_compatible_pad () can be used to request a compatible pad, as shown in the next example. It requests a pad from an Ogg multiplexer that is compatible with the given input pad.

    static void
    link_to_multiplexer (GstPad     *tolink_pad,
                         GstElement *mux)
    {
      GstPad *pad;
      gchar *srcname, *sinkname;

      srcname = gst_pad_get_name (tolink_pad);
      pad = gst_element_get_compatible_pad (mux, tolink_pad, NULL);
      if (pad == NULL) {
        g_printerr ("No pad on the multiplexer is compatible with %s\n", srcname);
        g_free (srcname);
        return;
      }
      gst_pad_link (tolink_pad, pad);
      sinkname = gst_pad_get_name (pad);
      gst_object_unref (GST_OBJECT (pad));

      g_print ("A new pad %s was created and linked to %s\n", sinkname, srcname);
      g_free (sinkname);
      g_free (srcname);
    }

Capabilities of a pad

Since the pads play a very important role in how the element is viewed by the outside world, a mechanism is implemented to describe the data that can flow or currently flows through the pad by using capabilities. Here, we will briefly describe what capabilities are and how to use them, enough to get an understanding of the concept. For an in-depth look into capabilities and a list of all capabilities defined in GStreamer, see the Plugin Writer's Guide.

Capabilities are attached to pad templates and to pads. For pad templates, it will describe the types of media that may stream over a pad created from this template. For pads, it can either be a list of possible caps (usually a copy of the pad template's capabilities), in which case the pad is not yet negotiated, or it is the type of media that currently streams over this pad, in which case the pad has been negotiated already.

Dissecting capabilities

A pad's capabilities are described in a GstCaps object. Internally, a GstCaps contains one or more GstStructure objects, each of which describes one media type. A negotiated pad will have capabilities set that contain exactly one structure, and that structure will contain only fixed values. These constraints do not hold for unnegotiated pads or pad templates.

As an example, below is a dump of the capabilities of the "vorbisdec" element, which you will get by running gst-inspect-1.0 vorbisdec. You will see two pads: a source and a sink pad. Both of these pads are always available, and both have capabilities attached to them. The sink pad will accept vorbis-encoded audio data, with the media type "audio/x-vorbis". The source pad will be used to send raw (decoded) audio samples to the next element, with a raw audio media type (in this case, "audio/x-raw"). The source pad will also contain properties for the audio samplerate and the number of channels, plus some more that you don't need to worry about for now.

    Pad Templates:
      SRC template: 'src'
        Availability: Always
        Capabilities:
          audio/x-raw
                     format: F32LE
                       rate: [ 1, 2147483647 ]
                   channels: [ 1, 256 ]

      SINK template: 'sink'
        Availability: Always
        Capabilities:
          audio/x-vorbis

Properties and values

Properties are used to describe extra information for capabilities. A property consists of a key (a string) and a value. There are different possible value types that can be used:

- Basic types: this can be pretty much any GType registered with GLib. These properties indicate a specific, non-dynamic value for this property. Examples include:

  - An integer value (G_TYPE_INT): the property has this exact value.

  - A boolean value (G_TYPE_BOOLEAN): the property is either TRUE or FALSE.

  - A float value (G_TYPE_FLOAT): the property has this exact floating point value.

  - A string value (G_TYPE_STRING): the property contains a UTF-8 string.

  - A fraction value (GST_TYPE_FRACTION): contains a fraction expressed by an integer numerator and denominator.

- Range types are GTypes registered by GStreamer to indicate a range of possible values. They are used for indicating allowed audio samplerate values or supported video sizes. The range types defined in GStreamer include:

  - An integer range value (GST_TYPE_INT_RANGE): the property denotes a range of possible integers, with a lower and an upper boundary. In the "vorbisdec" dump above, for example, the rate property of the source pad is an integer range from 1 to 2147483647.

  - A floating point range value (GST_TYPE_DOUBLE_RANGE): the property denotes a range of possible floating point values, with a lower and an upper boundary.

  - A fraction range value (GST_TYPE_FRACTION_RANGE): the property denotes a range of possible fraction values, with a lower and an upper boundary.

- A list value (GST_TYPE_LIST): the property can take any value from a list of basic values given in this list. Example: caps that express that either a sample rate of 44100 Hz or a sample rate of 48000 Hz is supported would use a list of integer values, with one value being 44100 and one value being 48000.

- An array value (GST_TYPE_ARRAY): the property is an array of values. Each value in the array is a full value on its own, too. All values in the array should be of the same elementary type. This means that an array can contain any combination of integers, lists of integers and integer ranges, and the same for floats or strings, but it cannot contain both floats and ints at the same time. Example: for audio where more than two channels are involved, the channel layout needs to be specified (for one- and two-channel audio the channel layout is implicit unless stated otherwise in the caps). So the channel layout would be an array of integer enum values where each enum value represents a loudspeaker position. Unlike a GST_TYPE_LIST, the values in an array are interpreted as a whole.

What capabilities are used for

Capabilities (short: caps) describe the type of data that is streamed between two pads, or that one pad (template) supports. This makes them very useful for various purposes:

- Autoplugging: automatically finding elements to link to a pad based on its capabilities. All autopluggers use this method.

- Compatibility detection: when two pads are linked, GStreamer can verify if the two pads are talking about the same media type. The process of linking two pads and checking if they are compatible is called "caps negotiation".

- Metadata: by reading the capabilities from a pad, applications can provide information about the type of media that is being streamed over the pad, which is information about the stream that is currently being played back.

- Filtering: an application can use capabilities to limit the possible media types that can stream between two pads to a specific subset of their supported stream types. An application can, for example, use "filtered caps" to set a specific (fixed or non-fixed) video size that should stream between two pads. You will see an example of filtered caps later in this manual, in Manually adding or removing data from/to a pipeline. You can do caps filtering by inserting a capsfilter element into your pipeline and setting its "caps" property. Caps filters are often placed after converter elements like audioconvert, audioresample, videoconvert or videoscale to force those converters to convert data to a specific output format at a certain point in a stream.

Using capabilities for metadata

A pad can have a set (i.e. one or more) of capabilities attached to it. Capabilities (GstCaps) are represented as an array of one or more GstStructures, and each GstStructure is an array of fields where each field consists of a field name string (e.g. "width") and a typed value (e.g. G_TYPE_INT or GST_TYPE_INT_RANGE).

Note that there is a distinct difference between the possible capabilities of a pad (i.e. usually what you find as caps of pad templates as they are shown in gst-inspect), the allowed caps of a pad (can be the same as the pad's template caps or a subset of them, depending on the possible caps of the peer pad) and lastly negotiated caps (these describe the exact format of a stream or buffer, contain exactly one structure and have no variable bits like ranges or lists, i.e. they are fixed caps).

You can get values of properties in a set of capabilities by querying individual properties of one structure. You can get a structure from a caps using gst_caps_get_structure () and the number of structures in a GstCaps using gst_caps_get_size ().

Caps are called simple caps when they contain only one structure, and fixed caps when they contain only one structure and have no variable field types (like ranges or lists of possible values). Two other special types of caps are ANY caps and empty caps.

Here is an example of how to extract the width and height from a set of fixed video caps:

    static void
    read_video_props (GstCaps *caps)
    {
      gint width, height;
      const GstStructure *str;

      g_return_if_fail (gst_caps_is_fixed (caps));

      str = gst_caps_get_structure (caps, 0);
      if (!gst_structure_get_int (str, "width", &width) ||
          !gst_structure_get_int (str, "height", &height)) {
        g_print ("No width/height available\n");
        return;
      }

      g_print ("The video size of this set of capabilities is %dx%d\n",
               width, height);
    }

Creating capabilities for filtering

While capabilities are mainly used inside a plugin to describe the media type of the pads, the application programmer often also needs a basic understanding of capabilities in order to interface with the plugins, especially when using filtered caps. When you're using filtered caps or fixation, you're limiting the allowed types of media that can stream between two pads to a subset of their supported media types. You do this using a capsfilter element in your pipeline. In order to do this, you also need to create your own GstCaps. The easiest way to do this is by using the convenience function gst_caps_new_simple ():

    static gboolean
    link_elements_with_filter (GstElement *element1, GstElement *element2)
    {
      gboolean link_ok;
      GstCaps *caps;

      caps = gst_caps_new_simple ("video/x-raw",
                                  "format", G_TYPE_STRING, "I420",
                                  "width", G_TYPE_INT, 384,
                                  "height", G_TYPE_INT, 288,
                                  "framerate", GST_TYPE_FRACTION, 25, 1,
                                  NULL);

      link_ok = gst_element_link_filtered (element1, element2, caps);
      gst_caps_unref (caps);

      if (!link_ok) {
        g_warning ("Failed to link element1 and element2!");
      }

      return link_ok;
    }

This will force the data flow between those two elements to a certain video format, width, height and framerate (or the linking will fail if that cannot be achieved in the context of the elements involved). Keep in mind that gst_element_link_filtered () automatically creates a capsfilter element for you and inserts it into your bin or pipeline between the two elements you want to connect. This matters if you ever want to disconnect those elements, because you will then have to disconnect both of them from the capsfilter instead.

In some cases, you will want to create a more elaborate set of capabilities to filter a link between two pads. Then, this function is too simplistic and you'll want to use the method gst_caps_new_full ():

    static gboolean
    link_elements_with_filter (GstElement *element1, GstElement *element2)
    {
      gboolean link_ok;
      GstCaps *caps;

      caps = gst_caps_new_full (
          gst_structure_new ("video/x-raw",
                             "width", G_TYPE_INT, 384,
                             "height", G_TYPE_INT, 288,
                             "framerate", GST_TYPE_FRACTION, 25, 1,
                             NULL),
          gst_structure_new ("video/x-bayer",
                             "width", G_TYPE_INT, 384,
                             "height", G_TYPE_INT, 288,
                             "framerate", GST_TYPE_FRACTION, 25, 1,
                             NULL),
          NULL);

      link_ok = gst_element_link_filtered (element1, element2, caps);
      gst_caps_unref (caps);

      if (!link_ok) {
        g_warning ("Failed to link element1 and element2!");
      }

      return link_ok;
    }

See the API references for the full API of GstStructure and GstCaps.

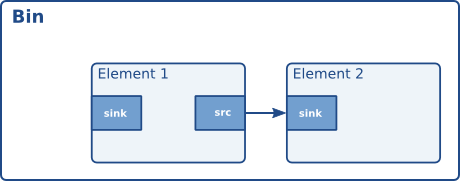

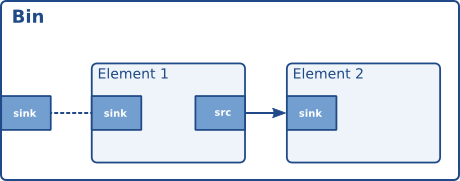

Ghost pads

A ghost pad is a pad from some element in the bin that can be accessed directly from the bin as well. Compare it to a symbolic link in UNIX filesystems. Using ghost pads on bins, the bin also has a pad and can transparently be used as an element in other parts of your code.

The sink pad of element one is now also a pad of the bin. Because ghost pads look and work like any other pads, they can be added to any type of element, not just to a GstBin.

A ghostpad is created using the function gst_ghost_pad_new ():

https://gstreamer.freedesktop.org/documentation/tutorials/playback/custom-playbin-sinks.html?gi-language=c

    #include <gst/gst.h>

    int
    main (int   argc,
          char *argv[])
    {
      GstElement *bin, *sink;
      GstPad *pad;

      /* init */
      gst_init (&argc, &argv);

      /* create element, add to bin */
      sink = gst_element_factory_make ("fakesink", "sink");
      bin = gst_bin_new ("mybin");
      gst_bin_add (GST_BIN (bin), sink);

      /* add ghostpad */
      pad = gst_element_get_static_pad (sink, "sink");
      gst_element_add_pad (bin, gst_ghost_pad_new ("sink", pad));
      gst_object_unref (GST_OBJECT (pad));

      /* [..] */
    }

In the above example, the bin now also has a pad: the pad called “sink” of the given element. The bin can, from here on, be used as a substitute for the sink element. You could, for example, link another element to the bin.

Buffers and Events

The data flowing through a pipeline consists of a combination of buffers and events. Buffers contain the actual media data. Events contain control information, such as seeking information and end-of-stream notifiers. All this will flow through the pipeline automatically when it's running.

Buffers

Buffers contain the data that will flow through the pipeline you have created. A source element will typically create a new buffer and pass it through a pad to the next element in the chain. When using the GStreamer infrastructure to create a media pipeline you will not have to deal with buffers yourself; the elements will do that for you.

A buffer consists, amongst others, of:

- Pointers to memory objects. Memory objects encapsulate a region in the memory.

- A timestamp for the buffer.

- A refcount that indicates how many elements are using this buffer. This refcount will be used to destroy the buffer when no element has a reference to it.

- Buffer flags.

The simple case is that a buffer is created, memory allocated, data put in it, and passed to the next element. That element reads the data, does something (like creating a new buffer and decoding into it), and unreferences the buffer. This causes the data to be freed and the buffer to be destroyed. A typical video or audio decoder works like this.

There are more complex scenarios, though. Elements can modify buffers in-place, i.e. without allocating a new one. Elements can also write to hardware memory (such as from video-capture sources) or memory allocated from the X-server (using XShm). Buffers can be read-only, and so on.

Events

Events are control particles that are sent both up- and downstream in a pipeline along with buffers. Downstream events notify fellow elements of stream states. Possible events include seeking, flushes, end-of-stream notifications and so on. Upstream events are used both in application-element and in element-element interaction to request changes in stream state, such as seeks. For applications, only upstream events are important; downstream events are explained here only to give a more complete picture of the data concept.

Since most applications seek in time units, our example below does so too:

    static void
    seek_to_time (GstElement *element,
                  guint64     time_ns)
    {
      GstEvent *event;

      event = gst_event_new_seek (1.0, GST_FORMAT_TIME,
                                  GST_SEEK_FLAG_NONE,
                                  GST_SEEK_TYPE_SET, time_ns,
                                  GST_SEEK_TYPE_NONE, G_GUINT64_CONSTANT (0));
      gst_element_send_event (element, event);
    }

The function gst_element_seek () is a shortcut for this; the example above mostly serves to show how it works underneath.

Communication

GStreamer provides several mechanisms for communication and data exchange between the application and the pipeline.

- buffers are objects for passing streaming data between elements in the pipeline. Buffers always travel from sources to sinks (downstream).

- events are objects sent between elements or from the application to elements. Events can travel upstream and downstream. Downstream events can be synchronised to the data flow.

- messages are objects posted by elements on the pipeline's message bus, where they will be held for collection by the application. Messages can be intercepted synchronously from the streaming thread context of the element posting the message, but are usually handled asynchronously by the application from the application's main thread. Messages are used to transmit information such as errors, tags, state changes, buffering state, redirects etc. from elements to the application in a thread-safe way.

- queries allow applications to request information such as duration or current playback position from the pipeline. Queries are always answered synchronously. Elements can also use queries to request information from their peer elements (such as the file size or duration). They can be used both ways within a pipeline, but upstream queries are more common.

query example: https://gstreamer.freedesktop.org/documentation/tutorials/basic/time-management.html?gi-language=c

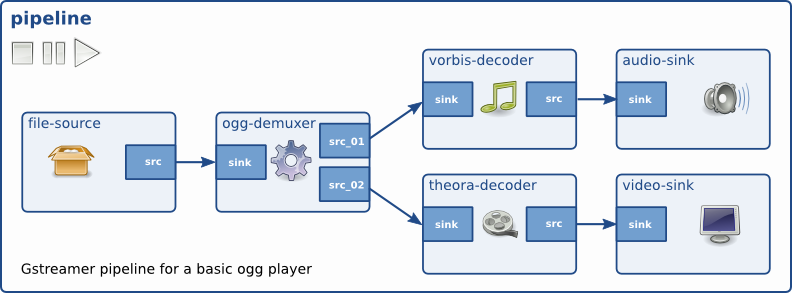

Your first application

This chapter will summarize everything you've learned in the previous chapters. It describes all aspects of a simple GStreamer application, including initializing libraries, creating elements, packing elements together in a pipeline and playing this pipeline. By doing all this, you will be able to build a simple Ogg/Vorbis audio player.

    #include <gst/gst.h>
    #include <glib.h>

    static gboolean
    bus_call (GstBus     *bus,
              GstMessage *msg,
              gpointer    data)
    {
      GMainLoop *loop = (GMainLoop *) data;

      switch (GST_MESSAGE_TYPE (msg)) {
        case GST_MESSAGE_EOS:
          g_print ("End of stream\n");
          g_main_loop_quit (loop);
          break;

        case GST_MESSAGE_ERROR: {
          gchar  *debug;
          GError *error;

          gst_message_parse_error (msg, &error, &debug);
          g_free (debug);

          g_printerr ("Error: %s\n", error->message);
          g_error_free (error);

          g_main_loop_quit (loop);
          break;
        }
        default:
          break;
      }

      return TRUE;
    }

    static void
    on_pad_added (GstElement *element,
                  GstPad     *pad,
                  gpointer    data)
    {
      GstPad *sinkpad;
      GstElement *decoder = (GstElement *) data;

      /* We can now link this pad with the vorbis-decoder sink pad */
      g_print ("Dynamic pad created, linking demuxer/decoder\n");

      sinkpad = gst_element_get_static_pad (decoder, "sink");
      gst_pad_link (pad, sinkpad);
      gst_object_unref (sinkpad);
    }

    int
    main (int   argc,
          char *argv[])
    {
      GMainLoop *loop;
      GstElement *pipeline, *source, *demuxer, *decoder, *conv, *sink;
      GstBus *bus;
      guint bus_watch_id;

      /* Initialisation */
      gst_init (&argc, &argv);

      loop = g_main_loop_new (NULL, FALSE);

      /* Check input arguments */
      if (argc != 2) {
        g_printerr ("Usage: %s <Ogg/Vorbis filename>\n", argv[0]);
        return -1;
      }

      /* Create gstreamer elements */
      pipeline = gst_pipeline_new ("audio-player");
      source   = gst_element_factory_make ("filesrc",       "file-source");
      demuxer  = gst_element_factory_make ("oggdemux",      "ogg-demuxer");
      decoder  = gst_element_factory_make ("vorbisdec",     "vorbis-decoder");
      conv     = gst_element_factory_make ("audioconvert",  "converter");
      sink     = gst_element_factory_make ("autoaudiosink", "audio-output");

      if (!pipeline || !source || !demuxer || !decoder || !conv || !sink) {
        g_printerr ("One element could not be created. Exiting.\n");
        return -1;
      }

      /* Set up the pipeline */

      /* we set the input filename to the source element */
      g_object_set (G_OBJECT (source), "location", argv[1], NULL);

      /* we add a message handler */
      bus = gst_pipeline_get_bus (GST_PIPELINE (pipeline));
      bus_watch_id = gst_bus_add_watch (bus, bus_call, loop);
      gst_object_unref (bus);

      /* we add all elements into the pipeline */
      /* file-source | ogg-demuxer | vorbis-decoder | converter | audio-output */
      gst_bin_add_many (GST_BIN (pipeline),
                        source, demuxer, decoder, conv, sink, NULL);

      /* we link the elements together */
      /* file-source -> ogg-demuxer ~> vorbis-decoder -> converter -> audio-output */
      gst_element_link (source, demuxer);
      gst_element_link_many (decoder, conv, sink, NULL);
      g_signal_connect (demuxer, "pad-added", G_CALLBACK (on_pad_added), decoder);

      /* note that the demuxer will be linked to the decoder dynamically.
         The reason is that Ogg may contain various streams (for example
         audio and video). The source pad(s) will be created at run time,
         by the demuxer when it detects the amount and nature of streams.
         Therefore we connect a callback function which will be executed
         when the "pad-added" signal is emitted. */

      /* Set the pipeline to "playing" state */
      g_print ("Now playing: %s\n", argv[1]);
      gst_element_set_state (pipeline, GST_STATE_PLAYING);

      /* Iterate */
      g_print ("Running...\n");
      g_main_loop_run (loop);

      /* Out of the main loop, clean up nicely */
      g_print ("Returned, stopping playback\n");
      gst_element_set_state (pipeline, GST_STATE_NULL);

      g_print ("Deleting pipeline\n");
      gst_object_unref (GST_OBJECT (pipeline));
      g_source_remove (bus_watch_id);
      g_main_loop_unref (loop);

      return 0;
    }

We now have created a complete pipeline. We can visualise the pipeline as follows: