本文主要测试mysql插入数据效率,测试机器硬件为:inter i3 (2.13GHz,4核) + 4G内存(2.99G可用) +32位操作系统

一:表结构

CREATE TABLE `record` ( `id` int(11) NOT NULL AUTO_INCREMENT, `openid` varchar(63) NOT NULL, `tag_id` int(11) DEFAULT NULL, PRIMARY KEY (`id`) ) ENGINE=InnoDB DEFAULT CHARSET=utf8;

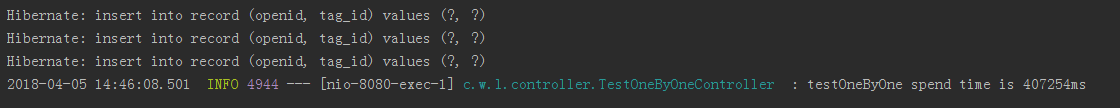

二:单线程单条插入

public class TestOneByOneController { private static Logger logger = LoggerFactory.getLogger(TestOneByOneController.class); @Autowired private RecordRepository recordRepository; private static final int total = 10000; @RequestMapping(value = "/testOneByOne") @ResponseBody public String testOneByOne(){ long startTime = System.currentTimeMillis(); try { int i; for (i = 0; i < total; i++) { String openid = UUID.randomUUID().toString(); RecordEntity record = new RecordEntity(); record.setOpenid(openid); record.setTagId(i); recordRepository.save(record); } }catch (Exception ex){ ex.printStackTrace(); } Long endTime = System.currentTimeMillis(); String result = "testOneByOne spend time is " + (endTime - startTime) + "ms"; logger.info(result); return result; } }

1万条数据插入结果:大约需要407s

三:单线程批量插入

(1)测试代码

package com.ws.learn.controller; @RestController public class TestController { private static Logger logger = LoggerFactory.getLogger(TestController.class); private EntityManagerFactory emf; @PersistenceUnit//使用这个标记来注入EntityManagerFactory public void setEntityManagerFactory(EntityManagerFactory emf) { this.emf = emf; } private static final int total = 1000000; private static final int perThreadDealNum = 10000; @RequestMapping(value = "/test") @ResponseBody public String test(){ long startTime = System.currentTimeMillis(); try { StringBuilder sb = new StringBuilder(204800); sb.append("insert into record(openid,tag_id) values"); int num = 0; for (num = 0; num < total; num++) { String openid = UUID.randomUUID().toString(); sb.append("('" + openid + "'," + num + "),"); if((num + 1)%perThreadDealNum == 0){ sb.deleteCharAt(sb.length() -1); myBatchInsert(sb); sb = new StringBuilder(204800); sb.append("insert into record(openid,tag_id) values"); } } if ( num % perThreadDealNum != 0) { sb.deleteCharAt(sb.length() -1); myBatchInsert(sb); } }catch (Exception ex){ ex.printStackTrace(); }finally { } Long endTime = System.currentTimeMillis(); String result = "test spend time is " + (endTime - startTime) + "ms"; logger.info(result); return result; } public void myBatchInsert(StringBuilder sb){ EntityManager em = emf.createEntityManager(); em.getTransaction().begin(); Query query = em.createNativeQuery(sb.toString()); query.executeUpdate(); em.getTransaction().commit(); em.close(); } }

(2)测试结果

(2.1)100万的数据,每次批量插入1万,插入时间需要44s左右

2018-04-05 14:21:40.917 INFO 3012 --- [nio-8080-exec-1] com.ws.learn.controller.TestController : test spend time is 44025ms

(2.2) 100万的数据,每次批量插入2万,插入时间需要42s左右,基本没多少改进

2018-04-05 14:25:30.911 INFO 3012 --- [nio-8080-exec-1] com.ws.learn.controller.TestController : test spend time is 41980ms

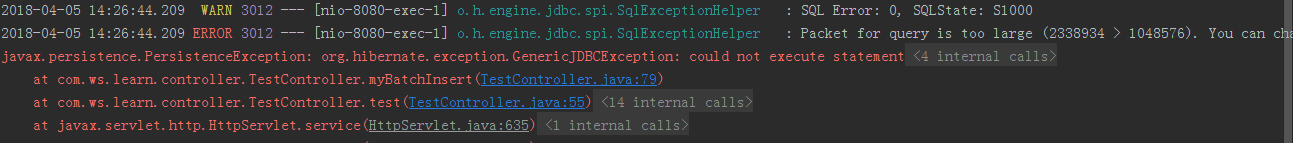

(2.3)当每次批量插入5万时报错:

四:多线程批量插入

(1)测试代码

@RestController public class TestThreadController { private static Logger logger = LoggerFactory.getLogger(TestThreadController.class); private EntityManagerFactory emf; @PersistenceUnit//使用这个标记来注入EntityManagerFactory public void setEntityManagerFactory(EntityManagerFactory emf) { this.emf = emf; } private static final int total = 1000000; private static final int perThreadDealNum = 10000; @RequestMapping(value = "/testWithThread") @ResponseBody public String testWithThread(){ long startTime = System.currentTimeMillis(); try { logger.info("" + Runtime.getRuntime().availableProcessors()); final ExecutorService fixExecutorPool = Executors.newFixedThreadPool(10); StringBuilder sb = new StringBuilder(102400); sb.append("insert into record(openid,tag_id) values"); int i; for (i = 0; i < total; i++) { String openid = UUID.randomUUID().toString(); sb.append("('" + openid + "'," + i + "),"); if((i+1)%perThreadDealNum ==0){ sb.deleteCharAt(sb.length() -1); fixExecutorPool.execute(new RecordThread(sb, emf)); sb = new StringBuilder(204800); sb.append("insert into record(openid,tag_id) values"); } } if (i% perThreadDealNum != 0) { sb.deleteCharAt(sb.length() -1); fixExecutorPool.execute(new RecordThread(sb, emf)); } fixExecutorPool.shutdown(); while (!fixExecutorPool.awaitTermination(500, TimeUnit.MILLISECONDS)){ } }catch (Exception ex){ ex.printStackTrace(); }finally { } Long endTime = System.currentTimeMillis(); String result = "testWithThread spend time is " + (endTime - startTime) + "ms"; logger.info(result); return result; } }

public class RecordThread implements Runnable { private StringBuilder sb; private EntityManagerFactory emf; public RecordThread(StringBuilder sb, EntityManagerFactory emf){ this.sb = sb; this.emf = emf; } @Override public void run() { EntityManager em = emf.createEntityManager(); em.getTransaction().begin(); Query query = em.createNativeQuery(sb.toString()); query.executeUpdate(); em.getTransaction().commit(); em.close(); } }

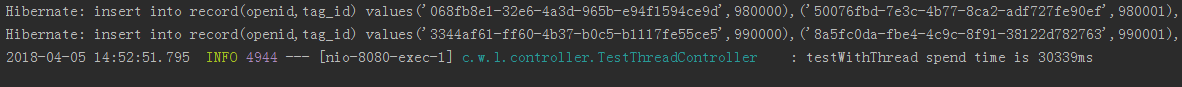

(2)测试结果

(2.1) 100万的数据,10个固定线程,每次批量插入1万,插入时间需要30339ms左右,比单线程的40+s有一定提升。

第二次运行有25866ms,第三次27187ms

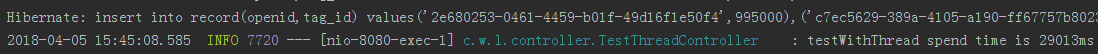

(2.2)100万的数据,10个固定线程,每次批量插入5千,插入时间需要29013ms,提升效果不大

(2.3)100万的数据,10个固定线程,每次批量插入2万,插入时间需要32511ms左右,时间反而增加了

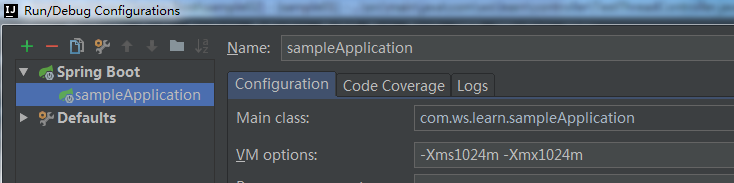

注意,如果jvm参数设置过小,此时有可能会出现 Exception in thread "pool-13-thread-1" java.lang.OutOfMemoryError: Java heap space 的异常

我的VM配置:

运行结果:

![]()

(2.4)200万的数据,10个固定线程,每次批量插入1万,插入时间需要63084ms

注意,需要调整好jvm参数,不然会堆内存溢出。

(2.5)200万的数据,15个固定线程,每次批量插入1万,插入时间需要62322ms,这种情况下增加线程基本无影响

(3)其它测试结果(由于有误差和垃圾回收等影响,数据量越大误差越大,下面为大致结果)

| 数据量 | 线程数 | 单次批量插入 | 耗时 |

| 10万 | 单线程 | 2000 | 5145ms |

| 10万 | 单线程 | 5000 | 4112ms |

| 10万 | 单线程 | 10000 | 4746ms |

| 10万 | 5 | 2000 | 2371ms |

| 10万 | 5 | 5000 | 2074ms |

| 10万 | 5 | 10000 | 2006ms |

| 100万 | 10 | 10000 | 25866ms |

| 100万 | 5 | 10000 | 25003ms |

| 100万 | 5 | 5000 | 29883ms |

| 100万 | 5 | 2000 | 35976ms |

| 100万 | 单线程 | 10000 | 40690ms |

| 100万 | 单线程 | 5000 | 45985ms |

| 100万 | 单线程 | 2000 | 57116ms |

在实际情况中,需要根据插入数据量大小和任务执行大致所需时间,合理选择线程数和单次批量插入条数。比如上面10万级别下,5000是一个比较合理的选择。当达到一定线程数后,增加线程数对耗时基本无太大影响。单次批量插入数有一定影响。

(4)下面是使用jconsole工具监控的200万数据测试时内存变化,突增的时候是在测试的时候。