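The previous post walked through the source code for recognizing the MNIST dataset, where predictions were made on images that already exist in the dataset.

But what if we want to feed in our own picture of a digit and get the predicted number back? How would we do that?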

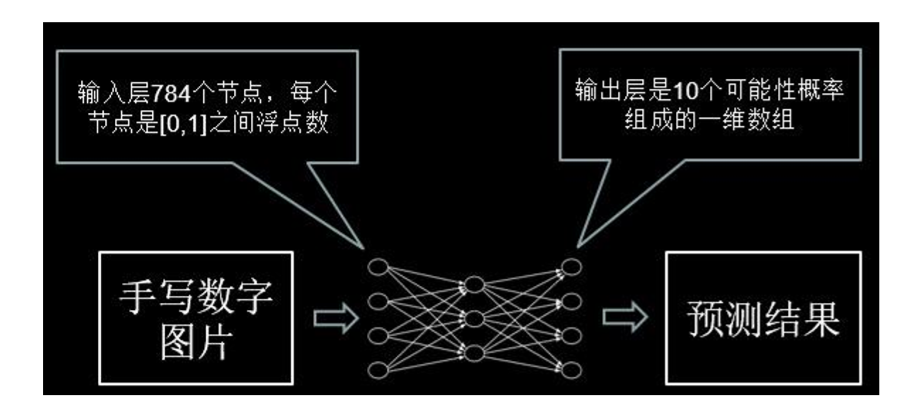

First, let's look at how it works:

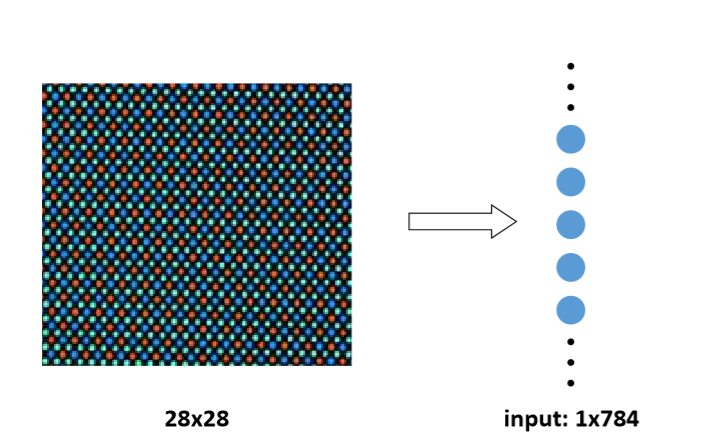

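Network input: 1×784 pixels. Each pixel is a floating-point number between 0 and 1 (the closer to 0, the darker; the closer to 1, the whiter).

Network output: a one-dimensional array of ten values, one per digit, giving how likely each digit is. The index of the largest element in the array is the predicted result.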
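
To make the "index of the largest element" rule concrete, here is a minimal plain-Python sketch. The score values and the helper names `softmax` and `predict_digit` are made up for illustration and are not part of the course code, which uses `tf.argmax` for this step.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    # Subtracting the maximum keeps exp() from overflowing.
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_digit(values):
    """Return the index of the largest entry, i.e. the predicted digit."""
    return max(range(len(values)), key=lambda i: values[i])


# Hypothetical network output for one image, one entry per digit 0-9.
example_scores = [0.1, -1.2, 0.3, 4.5, 0.0, 1.1, -0.7, 0.2, 0.9, -0.3]
probabilities = softmax(example_scores)

print(predict_digit(probabilities))  # 3
```

Because softmax preserves the ordering of the scores, taking the index of the largest raw score gives the same digit as taking the index of the largest probability.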

Now let's look at the code:

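forward.py

    # coding: utf-8
    # 1. Forward propagation
    # Note: the other scripts import this module as mnist_forward,
    # so it needs to be saved as mnist_forward.py.
    import tensorflow as tf

    # 784 input nodes (the number of pixels in each input image)
    INPUT_NODE = 784
    # 10 output nodes (ten-way classification for the digits 0-9)
    OUTPUT_NODE = 10
    # 500 hidden-layer nodes
    LAYER1_NODE = 500


    def get_weight(shape, regularizer):
        # Weights are drawn from a truncated normal distribution and regularized
        w = tf.Variable(tf.truncated_normal(shape, stddev=0.1))
        # w = tf.Variable(tf.random_normal(shape, stddev=0.1))
        # Add each weight's regularization loss to the total loss
        if regularizer is not None:
            tf.add_to_collection('losses', tf.contrib.layers.l2_regularizer(regularizer)(w))
        return w


    def get_bias(shape):
        # A one-dimensional array initialized to all zeros
        b = tf.Variable(tf.zeros(shape))
        return b


    def forward(x, regularizer):
        # Weights w1 from the input layer to the hidden layer have shape [784, 500]
        w1 = get_weight([INPUT_NODE, LAYER1_NODE], regularizer)
        # Bias b1 from the input layer to the hidden layer is a 1-D array of length 500
        b1 = get_bias([LAYER1_NODE])
        # First layer: multiply input x by w1, add bias b1, then apply relu
        # to get the hidden-layer output y1
        y1 = tf.nn.relu(tf.matmul(x, w1) + b1)

        # Weights w2 from the hidden layer to the output layer have shape [500, 10]
        w2 = get_weight([LAYER1_NODE, OUTPUT_NODE], regularizer)
        # Bias b2 from the hidden layer to the output layer is a 1-D array of length 10
        b2 = get_bias([OUTPUT_NODE])
        # Second layer: multiply the hidden output y1 by w2 and add bias b2 to get y.
        # y is later passed through softmax to make it a probability distribution,
        # so it does not go through relu here.
        y = tf.matmul(y1, w2) + b2
        return y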
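
The two-layer structure above can be traced by hand on a tiny example. The following is a plain-Python sketch, not the TensorFlow code itself: it assumes toy sizes (3 inputs, 2 hidden nodes, 2 outputs) instead of 784/500/10, and the helper names `matmul`, `add_bias`, `relu` and `forward_sketch` are hypothetical.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as nested lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def add_bias(m, bias):
    """Add a 1-D bias to every row of matrix m."""
    return [[v + bias[j] for j, v in enumerate(row)] for row in m]


def relu(m):
    """Replace negative entries with 0."""
    return [[max(0.0, v) for v in row] for row in m]


def forward_sketch(x, w1, b1, w2, b2):
    # Hidden layer: relu(x * w1 + b1)
    y1 = relu(add_bias(matmul(x, w1), b1))
    # Output layer: y1 * w2 + b2, with no relu on the output
    return add_bias(matmul(y1, w2), b2)


# Toy values chosen only so the arithmetic is easy to follow.
x = [[1.0, 2.0, 3.0]]                         # one "image" with 3 pixels
w1 = [[1.0, -1.0], [0.0, 1.0], [1.0, -1.0]]   # shape [3, 2]
b1 = [0.0, 0.5]
w2 = [[1.0, 2.0], [3.0, 4.0]]                 # shape [2, 2]
b2 = [0.1, 0.2]

y = forward_sketch(x, w1, b1, w2, b2)
print(y)
```

Here `x * w1 + b1` is `[4.0, -1.5]`, relu turns it into `[4.0, 0.0]`, and the output layer gives roughly `[4.1, 8.2]`, so the second hidden node contributes nothing to the result.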

backward.py

    # coding: utf-8
    # 2. Backward propagation
    # Note: the application script imports this module as mnist_backward,
    # so it needs to be saved as mnist_backward.py.
    # Import tensorflow, input_data, the forward-propagation module mnist_forward, and os
    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data
    import mnist_forward
    import os

    # Number of images fed to the network in each step
    BATCH_SIZE = 200
    # Initial learning rate
    LEARNING_RATE_BASE = 0.1
    # Learning-rate decay rate
    LEARNING_RATE_DECAY = 0.99
    # Regularization coefficient
    REGULARIZER = 0.0001
    # Number of training steps
    STEPS = 50000
    # Moving-average decay rate
    MOVING_AVERAGE_DECAY = 0.99
    # Directory where the model is saved
    MODEL_SAVE_PATH = "./model/"
    # Name of the saved model
    MODEL_NAME = "mnist_model"


    def backward(mnist):
        # Placeholders for the training data x and the labels y_
        x = tf.placeholder(tf.float32, [None, mnist_forward.INPUT_NODE])
        y_ = tf.placeholder(tf.float32, [None, mnist_forward.OUTPUT_NODE])
        # Call forward() from mnist_forward with regularization enabled
        # to compute the prediction y on the training data
        y = mnist_forward.forward(x, REGULARIZER)
        # Counter for the current training step, marked as not trainable
        global_step = tf.Variable(0, trainable=False)

        # Loss: mean cross-entropy plus the regularization losses of all weights
        ce = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y, labels=tf.argmax(y_, 1))
        cem = tf.reduce_mean(ce)
        loss = cem + tf.add_n(tf.get_collection('losses'))

        # Exponentially decaying learning rate
        learning_rate = tf.train.exponential_decay(
            LEARNING_RATE_BASE,
            global_step,
            mnist.train.num_examples / BATCH_SIZE,
            LEARNING_RATE_DECAY,
            staircase=True)

        # Optimize the model with a gradient-descent-based optimizer to reduce the loss
        # train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss, global_step=global_step)
        train_step = tf.train.MomentumOptimizer(learning_rate, 0.9).minimize(loss, global_step=global_step)
        # train_step = tf.train.AdamOptimizer(learning_rate).minimize(loss, global_step=global_step)

        # Moving average of the trainable parameters
        ema = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)
        ema_op = ema.apply(tf.trainable_variables())
        # Using a moving average during training makes the model more robust on test data
        with tf.control_dependencies([train_step, ema_op]):
            train_op = tf.no_op(name='train')

        # Saver that stores the variables together with their moving averages
        saver = tf.train.Saver()

        with tf.Session() as sess:
            # Initialize all variables
            init_op = tf.global_variables_initializer()
            sess.run(init_op)

            # Resume from a checkpoint if one exists
            ckpt = tf.train.get_checkpoint_state(MODEL_SAVE_PATH)
            if ckpt and ckpt.model_checkpoint_path:
                saver.restore(sess, ckpt.model_checkpoint_path)

            # Feed BATCH_SIZE (200) images and labels per step, for STEPS iterations
            for i in range(STEPS):
                xs, ys = mnist.train.next_batch(BATCH_SIZE)
                _, loss_value, step = sess.run([train_op, loss, global_step], feed_dict={x: xs, y_: ys})
                if i % 1000 == 0:
                    print("After %d training step(s), loss on training batch is %g." % (step, loss_value))
                    # Save the current session to the given path
                    saver.save(sess, os.path.join(MODEL_SAVE_PATH, MODEL_NAME), global_step=global_step)


    def main():
        # Load MNIST
        mnist = input_data.read_data_sets("./data/", one_hot=True)
        # Run backward propagation
        backward(mnist)


    if __name__ == '__main__':
        main()
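
To get a feel for what the learning-rate decay and the moving average do numerically, here is a small plain-Python sketch of the formulas documented for `tf.train.exponential_decay` and `tf.train.ExponentialMovingAverage`. The function names are hypothetical, and `decay_steps=275` assumes the default split of 55,000 training images divided by the batch size of 200.

```python
def decayed_learning_rate(base, decay_rate, global_step, decay_steps, staircase=True):
    """learning_rate = base * decay_rate ^ (global_step / decay_steps)."""
    if staircase:
        # Integer division: the rate only drops once per decay_steps steps.
        exponent = global_step // decay_steps
    else:
        exponent = global_step / decay_steps
    return base * decay_rate ** exponent


def ema_update(shadow, value, decay, num_updates):
    """One moving-average update of a shadow value towards the current value."""
    # When a step counter is supplied, the effective decay starts small and
    # grows towards `decay`, so the average follows the variable quickly at first.
    effective = min(decay, (1 + num_updates) / (10 + num_updates))
    return effective * shadow + (1 - effective) * value


# Assumed: 55000 training images / BATCH_SIZE of 200 = 275 steps per decay.
for step in (0, 274, 275, 550):
    print(step, decayed_learning_rate(0.1, 0.99, step, 275))

first_shadow = ema_update(shadow=0.0, value=1.0, decay=0.99, num_updates=0)
print(first_shadow)
```

With `staircase=True` the learning rate stays at 0.1 for the first 274 steps and is multiplied by 0.99 each time another 275 steps have passed.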


mnist_app.py

    # coding: utf-8
    import tensorflow as tf
    import numpy as np
    from PIL import Image
    import mnist_backward
    import mnist_forward


    def restore_model(testPicArr):
        # Rebuild the previously defined computation graph inside a fresh tf.Graph()
        with tf.Graph().as_default() as tg:
            x = tf.placeholder(tf.float32, [None, mnist_forward.INPUT_NODE])
            # Call forward() from mnist_forward (no regularization needed for prediction)
            y = mnist_forward.forward(x, None)
            # Index of the largest output, i.e. the predicted digit
            preValue = tf.argmax(y, 1)

            # Saver that restores the moving-average values of the variables
            variable_averages = tf.train.ExponentialMovingAverage(mnist_backward.MOVING_AVERAGE_DECAY)
            variables_to_restore = variable_averages.variables_to_restore()
            saver = tf.train.Saver(variables_to_restore)

            with tf.Session() as sess:
                # Load the most recently saved model from the checkpoint
                ckpt = tf.train.get_checkpoint_state(mnist_backward.MODEL_SAVE_PATH)
                if ckpt and ckpt.model_checkpoint_path:
                    saver.restore(sess, ckpt.model_checkpoint_path)
                    preValue = sess.run(preValue, feed_dict={x: testPicArr})
                    return preValue
                else:
                    print("No checkpoint file found")
                    return -1


    # Preprocessing: resize, convert to grayscale, binarize
    def pre_pic(picName):
        img = Image.open(picName)
        # Downsample to 28x28 with a high-quality filter
        reIm = img.resize((28, 28), Image.LANCZOS)
        im_arr = np.array(reIm.convert('L'))
        # Binarize the image (this filters out noise; the threshold can be tuned while debugging)
        threshold = 50
        # The model expects white digits on a black background, but the input image is
        # black on white, so each pixel is replaced by 255 minus its value to invert it.
        for i in range(28):
            for j in range(28):
                im_arr[i][j] = 255 - im_arr[i][j]
                if im_arr[i][j] < threshold:
                    im_arr[i][j] = 0
                else:
                    im_arr[i][j] = 255
        # Reshape to 1 row by 784 columns and convert to float
        # (the network expects pixels as floats between 0 and 1)
        nm_arr = im_arr.reshape([1, 784])
        nm_arr = nm_arr.astype(np.float32)
        # Scale the grayscale values from the range 0-255 to the range 0-1
        img_ready = np.multiply(nm_arr, 1.0 / 255.0)
        return img_ready


    def application():
        # Ask how many pictures to recognize
        testNum = int(input("input the number of test pictures:"))
        for i in range(testNum):
            # Path and file name of the picture to recognize
            testPic = input("the path of test picture:")
            # Preprocess the picture
            testPicArr = pre_pic(testPic)
            # Get the prediction
            preValue = restore_model(testPicArr)
            print("The prediction number is:", preValue)


    def main():
        application()


    if __name__ == '__main__':
        main()
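
The pixel-level part of the preprocessing (invert, binarize, flatten, scale) can be checked in isolation without Pillow or NumPy. This is a plain-Python sketch on a made-up 2×2 "image"; the function name `preprocess_pixels` is hypothetical, and the threshold of 50 is the one used in the listing.

```python
def preprocess_pixels(gray_rows, threshold=50):
    """Invert, binarize, flatten and scale rows of 0-255 grayscale pixels."""
    flat = []
    for row in gray_rows:
        for value in row:
            # White-on-black is expected, so invert the black-on-white input.
            inverted = 255 - value
            # Anything darker than the threshold after inversion becomes pure black.
            binary = 0 if inverted < threshold else 255
            # Scale from 0-255 to 0-1.
            flat.append(binary / 255.0)
    # One row holding all pixels, matching the [1, 784] shape used for a real image.
    return [flat]


# Made-up 2x2 image: white paper (255), black ink (0), and two light-gray pixels.
tiny_image = [[255, 0],
              [210, 200]]

print(preprocess_pixels(tiny_image))  # [[0.0, 1.0, 0.0, 1.0]]
```

The two gray pixels show why the threshold matters: 210 inverts to 45 and is dropped as noise, while 200 inverts to 55 and is kept as part of the stroke.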

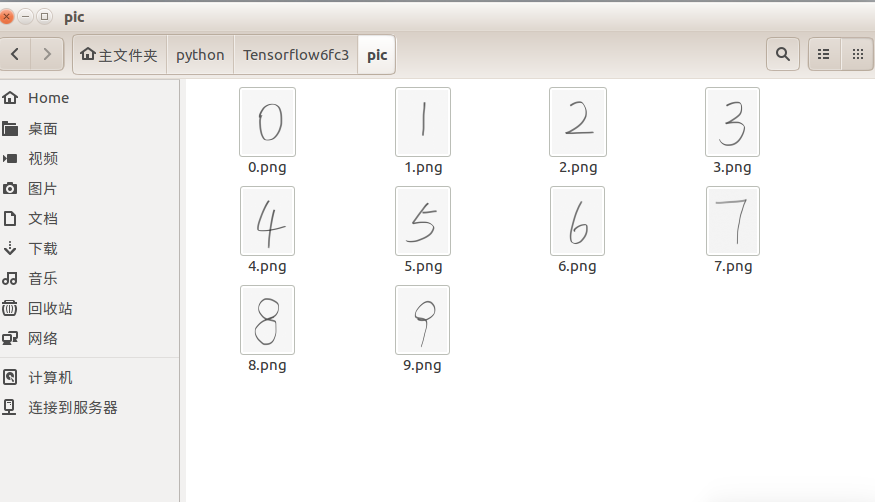

First, a look at the handwritten image dataset:

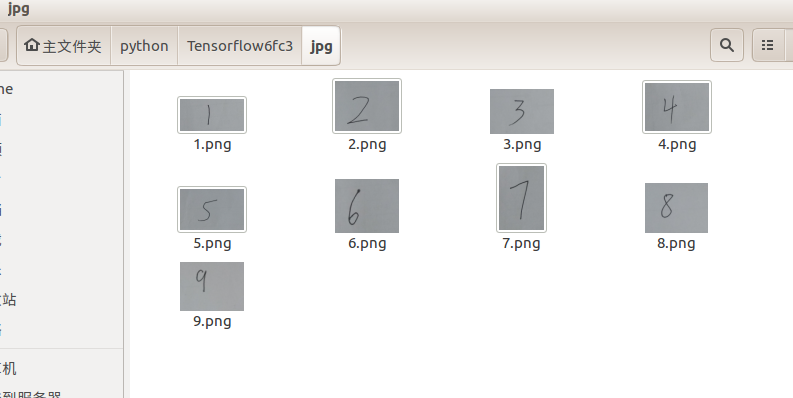

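Below are the digit images I wrote by hand.

Result

With the images created by the instructor in the video, the accuracy is 100%, but with my own handwritten images the error rate is high.

The next post will mainly cover my understanding of the code.

Note: this article is a summary of my notes from watching the TensorFlow video course by Cao Jian (曹健) of Peking University.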