I. Boston Housing Price Prediction

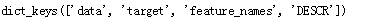

# Note: load_boston was removed in scikit-learn 1.2, so this requires an older release.
from sklearn.datasets import load_boston
boston = load_boston()
boston.keys()

# Split into training and test sets (70% / 30%)
from sklearn.model_selection import train_test_split
x_train, x_test, y_train, y_test = train_test_split(boston.data, boston.target, test_size=0.3)
print(x_train.shape, y_train.shape)
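The split above is reshuffled on every run, so the scores reported later change from run to run. A minimal sketch of a reproducible split, assuming random stand-in data with the Boston shape (506 x 13) and an arbitrary seed of 42; neither is from the original post:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in data with the same shape as the Boston set (506 x 13).
rng = np.random.default_rng(0)
X = rng.normal(size=(506, 13))
y = rng.normal(size=506)

# random_state pins the shuffle, so repeated calls return the same split.
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=42)
X_tr2, X_te2, _, _ = train_test_split(X, y, test_size=0.3, random_state=42)

print(X_tr.shape, X_te.shape)
print(np.array_equal(X_tr, X_tr2))
```

With `test_size=0.3`, the test share is rounded up, which is why 506 rows split into 354 training and 152 test rows.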

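# Linear regression model (fitted on all 13 features, so this is multiple rather than simple linear regression)
from sklearn.linear_model import LinearRegression
LineR = LinearRegression()
LineR.fit(x_train, y_train)
print("Coefficients:", LineR.coef_)  # one coefficient per feature
print("Intercept:", LineR.intercept_)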

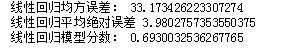

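# Evaluate the model
from sklearn import metrics as regression  # the sklearn.metrics.regression submodule no longer exists in recent releases
y_pred = LineR.predict(x_test)  # predictions on the test set
n = regression.mean_squared_error(y_test, y_pred)  # mean squared error
print("Linear regression MSE:", n)
a = regression.mean_absolute_error(y_test, y_pred)  # mean absolute error
print("Linear regression MAE:", a)
s = LineR.score(x_test, y_test)  # R^2 score on the test set
print("Linear regression R^2 score:", s)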
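To make the three numbers above concrete, here is a small pure-Python sketch of what they measure. The helper names `mse`, `mae` and `r2` and the toy values are illustrative assumptions, not part of the original code:

```python
def mse(y_true, y_pred):
    """Mean of the squared residuals."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def mae(y_true, y_pred):
    """Mean of the absolute residuals."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def r2(y_true, y_pred):
    """1 - (residual sum of squares / total sum of squares)."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

# Toy values chosen only for illustration.
y_true = [3.0, 5.0, 7.0]
y_pred = [2.0, 5.0, 9.0]
print(mse(y_true, y_pred), mae(y_true, y_pred), r2(y_true, y_pred))
```

Lower MSE and MAE are better, while a higher R^2 is better, with 1.0 meaning a perfect fit.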

# Multivariate polynomial regression model
from sklearn.preprocessing import PolynomialFeatures
poly = PolynomialFeatures(degree=2)
x_poly_train = poly.fit_transform(x_train)  # expand to degree-2 polynomial features
x_poly_test = poly.transform(x_test)
# Fit the model
LineR2 = LinearRegression()
LineR2.fit(x_poly_train, y_train)
y_pred2 = LineR2.predict(x_poly_test)  # predictions on the test set
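It may help to see what the degree-2 expansion actually produces. The sketch below applies `PolynomialFeatures` to a single made-up row with two features, then counts the columns that 13 input features would yield:

```python
import math

import numpy as np
from sklearn.preprocessing import PolynomialFeatures

poly_demo = PolynomialFeatures(degree=2)

# One illustrative row with features x0 = 2 and x1 = 3.
expanded = poly_demo.fit_transform(np.array([[2.0, 3.0]]))
# Column order: 1, x0, x1, x0^2, x0*x1, x1^2
print(expanded[0].tolist())

# With n features and degree d, the expansion has C(n + d, d) columns.
n_columns_for_13_features = math.comb(13 + 2, 2)
print(n_columns_for_13_features)
```

So the polynomial model is still a linear regression, only fitted on 105 derived columns instead of the 13 original ones.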

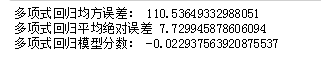

# Evaluate the model
n2 = regression.mean_squared_error(y_test, y_pred2)
print("Polynomial regression MSE:", n2)
a2 = regression.mean_absolute_error(y_test, y_pred2)
print("Polynomial regression MAE:", a2)
s2 = LineR2.score(x_poly_test, y_test)
print("Polynomial regression R^2 score:", s2)

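Comparing the linear and nonlinear models, and why they differ: the nonlinear (polynomial) model performs better here. Its degree-2 terms let the fitted surface curve, which matches the distribution of the sample points more closely than a purely linear fit, and its errors on the test set are smaller.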
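The effect described above can be reproduced on synthetic data where the true relationship is known to be curved. This is a sketch under stated assumptions (a made-up quadratic target with small noise, fixed seeds), not a rerun of the Boston experiment; printing the training score next to the test score is also a quick way to check whether the more flexible model is overfitting:

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import PolynomialFeatures

# Synthetic data: y depends on x quadratically, plus a little noise.
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = X[:, 0] ** 2 + rng.normal(scale=0.1, size=200)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=0)

# Plain linear fit versus a linear fit on degree-2 features.
linear = LinearRegression().fit(X_tr, y_tr)
poly = PolynomialFeatures(degree=2)
quad = LinearRegression().fit(poly.fit_transform(X_tr), y_tr)

linear_scores = (linear.score(X_tr, y_tr), linear.score(X_te, y_te))
quad_scores = (quad.score(poly.transform(X_tr), y_tr),
               quad.score(poly.transform(X_te), y_te))
print("linear    R^2 (train, test):", linear_scores)
print("quadratic R^2 (train, test):", quad_scores)
```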

II. Chinese Text Classification

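import os
import numpy as np
import sys
from datetime import datetime
import gc
path = r'E:\258'  # raw string, so that '\25' is not read as an octal escape
entries = os.listdir(path)  # renamed from `list` to avoid shadowing the built-in
entries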

def list_all_files(rootdir):
    import os
    _files = []
    entries = os.listdir(rootdir)  # list every directory and file directly under rootdir
    for name in entries:
        path = os.path.join(rootdir, name)
        if os.path.isdir(path):
            _files.extend(list_all_files(path))  # recurse into subdirectories
        elif os.path.isfile(path):
            _files.append(path)
    return _files
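The same traversal can be written without explicit recursion using `os.walk`. The sketch below is an alternative, not the author's version; the function name `list_all_files_walk` and the temporary folder layout are made up for the demo:

```python
import os
import tempfile

def list_all_files_walk(rootdir):
    """Return the path of every file under rootdir, including subfolders."""
    found = []
    for root, _dirs, files in os.walk(rootdir):
        for name in files:
            found.append(os.path.join(root, name))
    return found

# Hypothetical folder layout, created in a temporary directory for the demo.
with tempfile.TemporaryDirectory() as tmp:
    os.makedirs(os.path.join(tmp, "sports"))
    for rel in ("a.txt", os.path.join("sports", "b.txt")):
        with open(os.path.join(tmp, rel), "w", encoding="utf-8") as fh:
            fh.write("demo")
    relative = sorted(os.path.relpath(p, tmp) for p in list_all_files_walk(tmp))

print(relative)
```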

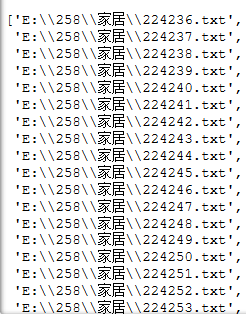

_fs = list_all_files(r'E:\258')
# List every file found by the traversal
_fs

!pip install jieba

import jieba

with open(r'E:\stopsCN.txt', encoding='utf-8') as f:
    stopwords = f.read().split('\n')

import jieba
def processing(tokens):
    tokens = "".join([char for char in tokens if char.isalpha()])  # keep only letters and Chinese characters
    tokens = [token for token in jieba.cut(tokens, cut_all=True) if len(token) >= 2]  # segment with jieba (full mode), keep tokens of 2+ characters
    tokens = " ".join([token for token in tokens if token not in stopwords])  # drop stop words
    return tokens
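The two filtering steps around the jieba call can be tried in isolation. In this sketch the helper names, the sample sentence, the token list and the stop-word set are all illustrative assumptions; the token list stands in for segmenter output, so jieba itself is not needed to run it:

```python
def keep_letters(text):
    """Drop every character that is not a letter (Chinese characters count as letters)."""
    return "".join(ch for ch in text if ch.isalpha())

def filter_tokens(tokens, stopwords):
    """Keep tokens of at least two characters that are not stop words."""
    return [t for t in tokens if len(t) >= 2 and t not in stopwords]

# Punctuation, digits and whitespace are all removed by the isalpha() filter.
cleaned = keep_letters("Hello, 世界 2024!")
print(cleaned)

# Hypothetical segmenter output and stop-word set, for illustration only.
tokens = ["我们", "的", "足球", "比赛", "我们"]
kept = filter_tokens(tokens, stopwords={"我们"})
print(" ".join(kept))
```

Storing the stop words in a `set` rather than a list keeps each membership test fast, which matters once the stop-word file has thousands of entries.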

tokenList = []
targetList = []
for root, dirs, files in os.walk(path):  # build each file path, then open the file
    for name in files:
        filePath = os.path.join(root, name)
        with open(filePath, encoding='utf-8') as fh:
            content = fh.read()
        target = os.path.basename(root)  # the parent folder name is the class label
        targetList.append(target)
        tokenList.append(processing(content))
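The label for each document comes from the name of the folder that contains it. A short sketch of that lookup, using `pathlib.PureWindowsPath` so it behaves the same on any operating system; the path `E:\258\sports\0001.txt` is a hypothetical example, since the original post does not list its folder names:

```python
from pathlib import PureWindowsPath

# Hypothetical file path inside the corpus folder.
file_path = PureWindowsPath(r"E:\258\sports\0001.txt")

# The parent folder name serves as the class label.
label = file_path.parent.name

# Equivalent string-based lookup: second-to-last backslash-separated component.
label_from_split = str(file_path).split("\\")[-2]

print(label, label_from_split)
```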

# Split into training and test sets, then vectorize with TF-IDF
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB, MultinomialNB
from sklearn.model_selection import cross_val_score
from sklearn.metrics import classification_report
x_train, x_test, y_train, y_test = train_test_split(tokenList, targetList, test_size=0.3, stratify=targetList)
vectorizer = TfidfVectorizer()
X_train = vectorizer.fit_transform(x_train)  # learn the vocabulary from the training text only
X_test = vectorizer.transform(x_test)  # reuse that vocabulary for the test text
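Calling `fit_transform` on the training text and only `transform` on the test text means the vocabulary is fixed by the training set. The sketch below shows the consequence on a tiny made-up corpus of space-separated tokens: a word that appears only in the test document is ignored.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Made-up, already-segmented documents (tokens joined by spaces).
train_docs = ["足球 比赛 冠军", "股票 市场 上涨"]
test_docs = ["足球 市场 火箭"]

vectorizer = TfidfVectorizer()
X_train = vectorizer.fit_transform(train_docs)  # learns 6 vocabulary terms
X_test = vectorizer.transform(test_docs)        # same 6 columns, no refit

print(sorted(vectorizer.vocabulary_))
print(X_train.shape, X_test.shape)
print("火箭" in vectorizer.vocabulary_)  # the unseen word has no column
```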

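# Multinomial naive Bayes
from sklearn.naive_bayes import MultinomialNB
mnb = MultinomialNB()
module = mnb.fit(X_train, y_train)
y_predict = module.predict(X_test)  # predictions on the test set
# Note: this cross-validates fresh copies of mnb on the test split only
scores = cross_val_score(mnb, X_test, y_test, cv=5)
print("Accuracy:%.3f" % scores.mean())
print("classification_report:\n", classification_report(y_test, y_predict))  # argument order is (y_true, y_pred)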

# Inspect the first ten labels and the first ten tokenized documents
print(targetList[0:10])
tokenList[0:10]
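The cross-validation step earlier scores the classifier on folds of the test split. A common alternative, sketched here as one option rather than as the author's method, wraps the vectorizer and classifier in a pipeline so that each fold refits TF-IDF on its own training part. The twelve documents and two labels below are made up for the demo:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Made-up, already-segmented documents: six per class.
docs = [
    "足球 比赛 冠军", "篮球 比赛 球员", "足球 球员 进球",
    "篮球 冠军 决赛", "比赛 进球 决赛", "球员 冠军 足球",
    "股票 市场 上涨", "基金 市场 下跌", "股票 投资 收益",
    "基金 投资 上涨", "市场 收益 下跌", "投资 股票 基金",
]
labels = ["sports"] * 6 + ["finance"] * 6

# The pipeline refits TfidfVectorizer inside every fold, then fits the classifier.
pipeline = make_pipeline(TfidfVectorizer(), MultinomialNB())
scores = cross_val_score(pipeline, docs, labels, cv=3)
print("Accuracy per fold:", scores)
print("Mean accuracy: %.3f" % scores.mean())
```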

# Count the news items per category in the test labels and in the predictions
import collections
testCount = collections.Counter(y_test)
predCount = collections.Counter(y_predict)
print('Actual:', testCount, '\n', 'Predicted:', predCount)

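# Build the label list, the actual counts, and the predicted counts
nameList = list(testCount.keys())
testList = list(testCount.values())
predictList = [predCount[name] for name in nameList]  # look up by label so the counts line up with nameList
x = list(range(len(nameList)))
print("News categories:", nameList, '\n', "Actual:", testList, '\n', "Predicted:", predictList)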
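When the actual and predicted counts are compared label by label, both lists need the same label order. Two `Counter` objects do not guarantee that on their own, because each one orders its keys by first appearance and omits labels it never saw. A small sketch with made-up labels:

```python
from collections import Counter

# Made-up true and predicted labels; "tech" is never predicted.
y_true = ["sports", "sports", "finance", "tech"]
y_hat = ["sports", "finance", "finance", "finance"]

true_count = Counter(y_true)
pred_count = Counter(y_hat)

names = list(true_count)                       # label order taken from the true labels
actual = [true_count[n] for n in names]
predicted = [pred_count[n] for n in names]     # a missing label counts as 0

print(names, actual, predicted)
print(list(pred_count.values()))               # shorter, and not in the same label order
```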