Why not just use kube-dns?

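Thanks to the careful work of those who studied this before me, I was able to sort out how OpenShift DNS works in the limited time of a single afternoon. For more detailed material, see: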

https://blog.cloudtechgroup.cn/Blog/2018/07/23/ocp-2018-07-23/

https://www.redhat.com/en/blog/red-hat-openshift-container-platform-dns-deep-dive-dns-changes-red-hat-openshift-container-platform-36

https://www.cnblogs.com/sammyliu/p/10056035.html

This post is mainly based on OpenShift 3.11.

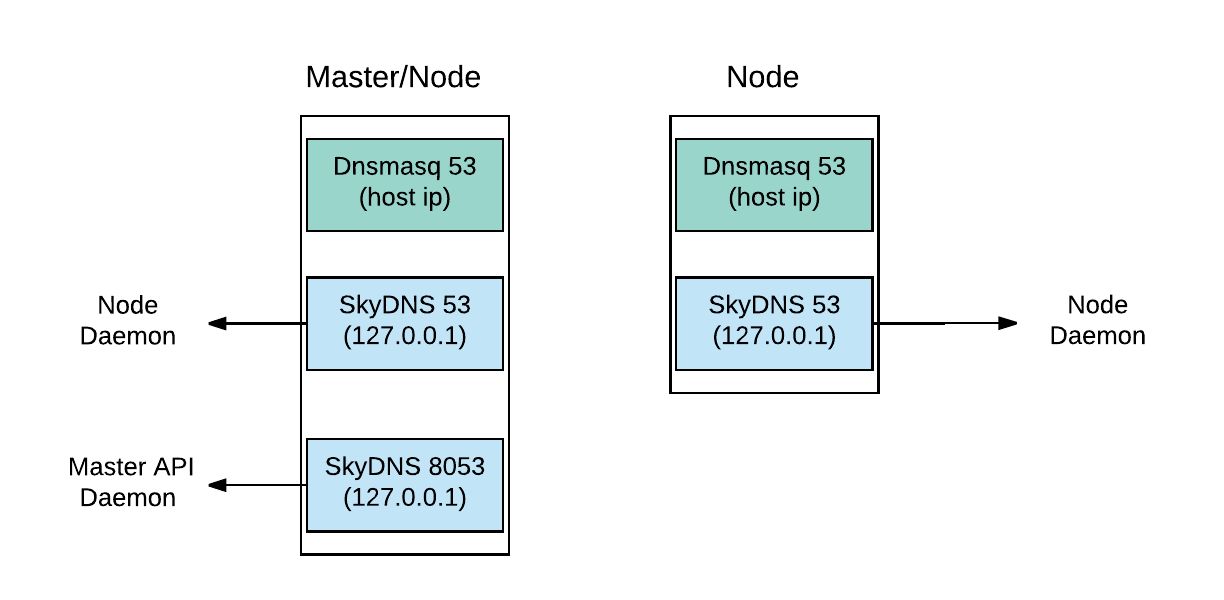

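1. DNS architecture

In other words, all DNS lookups from containers are handled by dnsmasq and SkyDNS running on the host, outside the containers. They do not travel over the container network to kube-dns or a similar Pod.

You can verify this with a few commands.

- On the master node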

      [root@master dnsmasq.d]# netstat -tunlp|grep 53
      tcp        0      0 0.0.0.0:8053            0.0.0.0:*               LISTEN      16864/openshift
      tcp        0      0 127.0.0.1:53            0.0.0.0:*               LISTEN      14937/openshift
      tcp        0      0 10.128.0.1:53           0.0.0.0:*               LISTEN      3191/dnsmasq
      tcp        0      0 172.17.0.1:53           0.0.0.0:*               LISTEN      3191/dnsmasq
      tcp        0      0 192.168.56.113:53       0.0.0.0:*               LISTEN      3191/dnsmasq

Look at the processes behind these sockets, and note the PIDs:

    # ps -ef|grep openshift
    root     14937 14925  0 15:20 ?        00:00:28 openshift start network --config=/etc/origin/node/node-config.yaml --kubeconfig=/tmp/kubeconfig --loglevel=2
    root     16864 16851  6 15:22 ?        00:16:28 openshift start master api --config=/etc/origin/master/master-config.yaml --loglevel=2
    root     17582 17570  3 15:23 ?        00:09:22 openshift start master controllers --config=/etc/origin/master/master-config.yaml --listen=https://0.0.0.0:8444 --loglevel=2

Check the routing table:

    [root@master dnsmasq.d]# route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         192.168.56.1    0.0.0.0         UG    100    0        0 enp0s3
    10.128.0.0      0.0.0.0         255.252.0.0     U     0      0        0 tun0
    172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
    172.30.0.0      0.0.0.0         255.255.0.0     U     0      0        0 tun0
    192.168.56.0    0.0.0.0         255.255.255.0   U     100    0        0 enp0s3

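Here 10.128.0.0/14 is the pod network, 172.30.0.0/16 is the service (SVC) network, 172.17.0.0/16 is the docker0 bridge network, and 192.168.56.0/24 is the host network. A single dnsmasq process (PID 3191) listens on port 53 on the host's address in each of the pod, docker0, and host networks.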
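To make the link between the dnsmasq listen addresses and the routes concrete, here is a minimal Python sketch. It copies the destination, netmask, and interface columns from the `route -n` output above and does a longest-prefix match for each listen address from `netstat`. The name `route_for` is made up for this illustration.

```python
import ipaddress

# (destination, netmask, interface) copied from the `route -n` output above.
# The default route is left out on purpose so that unknown addresses return None.
ROUTES = [
    ("10.128.0.0", "255.252.0.0", "tun0"),
    ("172.17.0.0", "255.255.0.0", "docker0"),
    ("172.30.0.0", "255.255.0.0", "tun0"),
    ("192.168.56.0", "255.255.255.0", "enp0s3"),
]


def route_for(address):
    """Return (network, interface) of the most specific route containing address."""
    ip = ipaddress.ip_address(address)
    best = None
    for dest, mask, iface in ROUTES:
        net = ipaddress.ip_network(f"{dest}/{mask}")
        if ip in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, iface)
    if best is None:
        return None
    return str(best[0]), best[1]


# The three addresses dnsmasq listens on in the master's netstat output.
for listen in ("10.128.0.1", "172.17.0.1", "192.168.56.113"):
    print(listen, "->", route_for(listen))
```

Each listen address falls inside exactly one directly connected network, which is consistent with one dnsmasq process serving every local interface except loopback.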

- On a node

      [root@node1 node]# netstat -tunlp|grep 53
      tcp        0      0 127.0.0.1:53            0.0.0.0:*               LISTEN      17134/openshift
      tcp        0      0 10.131.0.1:53           0.0.0.0:*               LISTEN      3243/dnsmasq
      tcp        0      0 172.17.0.1:53           0.0.0.0:*               LISTEN      3243/dnsmasq
      tcp        0      0 192.168.56.104:53       0.0.0.0:*               LISTEN      3243/dnsmasq

      ps -ef|grep openshift
      root     17134 17120  0 15:21 ?        00:00:31 openshift start network --config=/etc/origin/node/node-config.yaml --kubeconfig=/tmp/kubeconfig --loglevel=2

2. DNS configuration

The DNS configuration inside a Pod points at the IP of the host the Pod runs on:

    $ cat /etc/resolv.conf
    nameserver 192.168.56.105
    search myproject.svc.cluster.local svc.cluster.local cluster.local redhat.com example.com
    options ndots:5

192.168.56.105 is the host the pod runs on.
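The `search` list and `ndots:5` in the Pod's resolv.conf decide which names are actually sent to the nameserver. The sketch below follows the usual stub-resolver rule as I understand it: a name with fewer dots than `ndots` is tried with each search domain first and as-is last, a name with at least `ndots` dots is tried as-is first, and a name with a trailing dot is never expanded. The function name `candidate_names` is hypothetical.

```python
# Search list and ndots value copied from the Pod's /etc/resolv.conf above.
SEARCH = [
    "myproject.svc.cluster.local",
    "svc.cluster.local",
    "cluster.local",
    "redhat.com",
    "example.com",
]
NDOTS = 5


def candidate_names(name, search=SEARCH, ndots=NDOTS):
    """Return the names a stub resolver would try, in order."""
    if name.endswith("."):
        # A trailing dot marks the name as fully qualified: no expansion.
        return [name[:-1]]
    expanded = [f"{name}.{domain}" for domain in search]
    if name.count(".") >= ndots:
        return [name] + expanded
    return expanded + [name]


for query in ("tomcat", "tomcat.myproject.svc", "www.baidu.com"):
    print(query, "->", candidate_names(query))
```

With `ndots:5`, even an external name such as www.baidu.com is first tried against all five search domains, so one lookup from a Pod can produce several queries before the bare name is sent.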

The host's resolv.conf file:

    [root@node1 node]# cat /etc/resolv.conf
    # nameserver updated by /etc/NetworkManager/dispatcher.d/99-origin-dns.sh
    # Generated by NetworkManager
    search cluster.local cluster.local example.com
    nameserver 192.168.56.104

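When the environment is deployed, the script /etc/NetworkManager/dispatcher.d/99-origin-dns.sh is installed on every node. It runs whenever the NetworkManager service on the node starts. Its tasks include:

- Creating the dnsmasq configuration files:

  - node-dnsmasq.conf (not present)

  - origin-dns.conf

  - origin-upstream-dns.conf (not present)

- Starting the dnsmasq service when the NetworkManager service starts

- Setting the IP of the host's default route as the dnsmasq listen address

- Rewriting /etc/resolv.conf: setting the search domains and using the host's default-route IP as the nameserver

- Creating /etc/origin/node/resolv.conf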

origin-dns.conf lives in /etc/dnsmasq.d/. Its contents are:

    [root@node1 dnsmasq.d]# cat origin-dns.conf
    no-resolv
    domain-needed
    no-negcache
    max-cache-ttl=1
    enable-dbus
    dns-forward-max=10000
    cache-size=10000
    bind-dynamic
    min-port=1024
    except-interface=lo
    # End of config

If the file origin-upstream-dns.conf exists, it defines the upstream DNS name servers. If it does not exist, you can create it by hand.

    [root@node2 dnsmasq.d]# cat origin-upstream-dns.conf
    server=10.72.17.5
    server=10.68.5.26
    server=202.96.134.33
    server=202.96.128.86

If Pods need to resolve external domain names, this file has to be created on the host node where the Pod runs.

The contents of node-dnsmasq.conf are:

    server=/in-addr.arpa/127.0.0.1
    server=/cluster.local/127.0.0.1

According to SammyTalksAboutCloud's research, these two entries are now built into the program itself rather than written to a file.
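The two kinds of `server=` lines shown above (per-domain and plain upstream) can be read as a small routing table. The following Python sketch is not dnsmasq's code; it only illustrates the selection rule under the assumption that the most specific matching domain wins and everything else goes to the plain upstream servers. The names `parse_servers` and `servers_for` are hypothetical.

```python
# server= lines copied from node-dnsmasq.conf and origin-upstream-dns.conf above.
CONFIG = [
    "server=/in-addr.arpa/127.0.0.1",
    "server=/cluster.local/127.0.0.1",
    "server=10.72.17.5",
    "server=10.68.5.26",
    "server=202.96.134.33",
    "server=202.96.128.86",
]


def parse_servers(lines):
    """Split `server=` lines into per-domain servers and default upstreams."""
    per_domain, default = {}, []
    for line in lines:
        line = line.strip()
        if not line.startswith("server="):
            continue
        value = line[len("server="):]
        if value.startswith("/"):
            # Form: /domain/address (a single domain per line in this sketch).
            _, domain, address = value.split("/", 2)
            per_domain.setdefault(domain.lower(), []).append(address)
        else:
            default.append(value)
    return per_domain, default


def servers_for(name, per_domain, default):
    """Pick the servers for a query name, preferring the longest matching suffix."""
    labels = name.rstrip(".").lower().split(".")
    for i in range(len(labels)):
        suffix = ".".join(labels[i:])
        if suffix in per_domain:
            return per_domain[suffix]
    return default


PER_DOMAIN, DEFAULT = parse_servers(CONFIG)
for query in ("tomcat.myproject.svc.cluster.local", "www.baidu.com"):
    print(query, "->", servers_for(query, PER_DOMAIN, DEFAULT))
```

Under these assumptions, cluster names and reverse lookups go to 127.0.0.1 while every other name goes to the upstream servers.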

You can inspect the log with journalctl -u dnsmasq:

    [root@node2 dnsmasq.d]# journalctl -u dnsmasq
    -- Logs begin at Fri 2018-12-28 22:08:53 CST, end at Thu 2019-01-03 20:04:52 CST. --
    Dec 28 22:10:48 node2.example.com systemd[1]: Started DNS caching server..
    Dec 28 22:10:48 node2.example.com dnsmasq[3561]: started, version 2.76 cachesize 150
    Dec 28 22:10:48 node2.example.com dnsmasq[3561]: compile time options: IPv6 GNU-getopt DBus no-i18n IDN DHCP DHCPv6 no-Lua TFTP no-conntrack ipset auth no-D
    Dec 28 22:10:48 node2.example.com dnsmasq[3561]: DBus support enabled: connected to system bus
    Dec 28 22:10:48 node2.example.com dnsmasq[3561]: warning: no upstream servers configured
    Dec 28 22:10:48 node2.example.com dnsmasq[3561]: read /etc/hosts - 7 addresses
    Dec 28 22:11:59 node2.example.com dnsmasq[3561]: setting upstream servers from DBus
    Dec 28 22:11:59 node2.example.com dnsmasq[3561]: using nameserver 127.0.0.1#53 for domain in-addr.arpa
    Dec 28 22:11:59 node2.example.com dnsmasq[3561]: using nameserver 127.0.0.1#53 for domain cluster.local

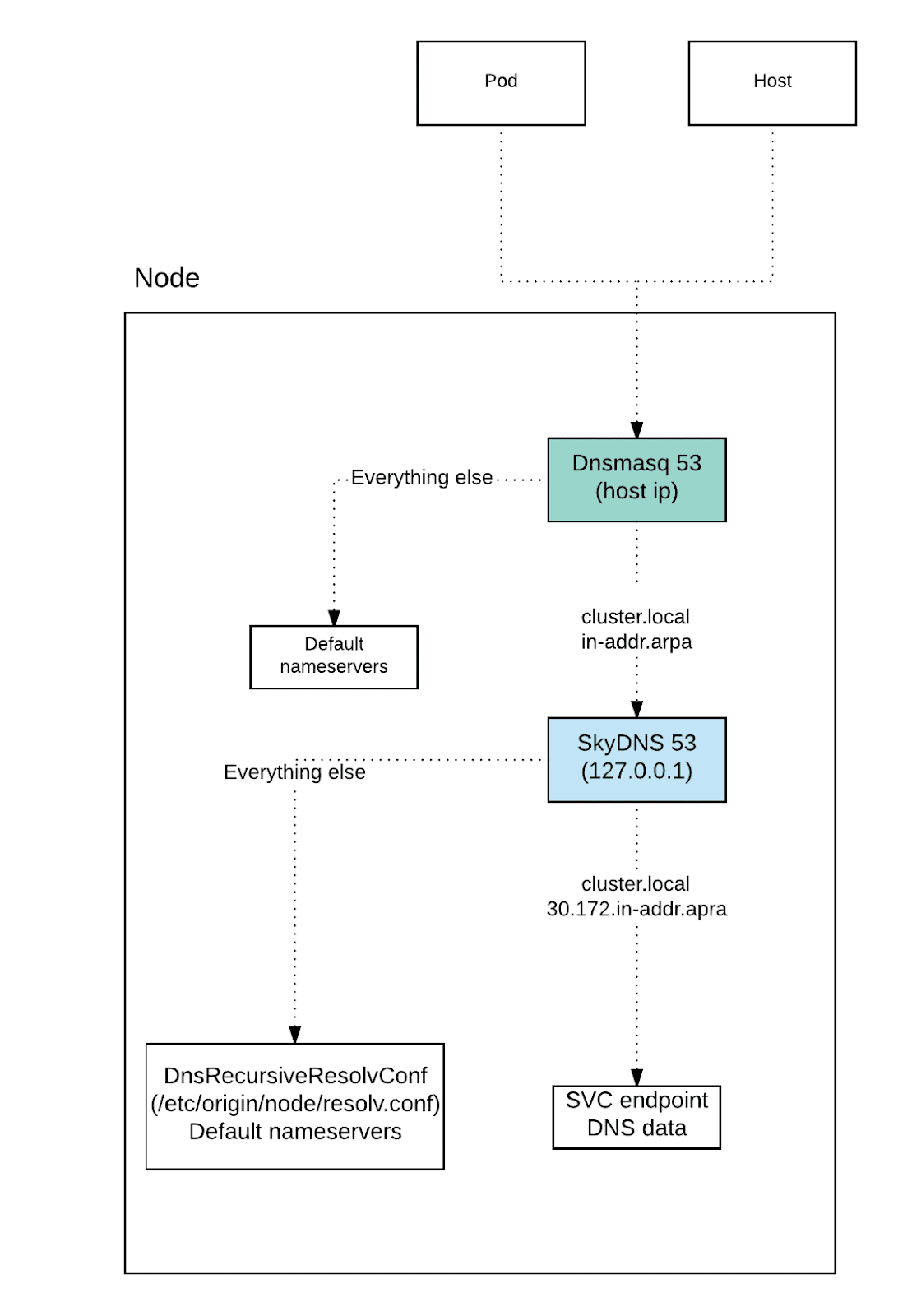

The log shows that dnsmasq forwards requests for the cluster.local and in-addr.arpa domains to SkyDNS, which listens on 127.0.0.1:53.
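If you want to pull the forwarding rules out of the journal instead of reading them by eye, a small parser is enough. The sketch below matches the `using nameserver ...` lines exactly as they appear in the log above. The regular expression and the name `forwarding_table` are my own, and the line format is assumed to stay as shown.

```python
import re

# Matches e.g. "using nameserver 127.0.0.1#53 for domain cluster.local"
# and also the domain-less form "using nameserver 10.72.17.5#53".
USING = re.compile(
    r"using nameserver (?P<addr>[0-9a-fA-F.:]+)#(?P<port>\d+)"
    r"(?: for domain (?P<domain>\S+))?"
)

# Lines copied from the journalctl output above.
SAMPLE = [
    "Dec 28 22:10:48 node2.example.com dnsmasq[3561]: warning: no upstream servers configured",
    "Dec 28 22:11:59 node2.example.com dnsmasq[3561]: setting upstream servers from DBus",
    "Dec 28 22:11:59 node2.example.com dnsmasq[3561]: using nameserver 127.0.0.1#53 for domain in-addr.arpa",
    "Dec 28 22:11:59 node2.example.com dnsmasq[3561]: using nameserver 127.0.0.1#53 for domain cluster.local",
]


def forwarding_table(log_lines):
    """Map each domain (or "(default)") to the (address, port) pairs logged for it."""
    table = {}
    for line in log_lines:
        match = USING.search(line)
        if match is None:
            continue
        domain = match.group("domain") or "(default)"
        server = (match.group("addr"), int(match.group("port")))
        table.setdefault(domain, []).append(server)
    return table


print(forwarding_table(SAMPLE))
```

Note that dnsmasq logs the full server list again every time the servers are reset, so a longer log fed to this sketch will contain repeated entries.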

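SkyDNS is not started as a separate process. It is started as part of the network process:

    openshift start network --config=/etc/origin/node/node-config.yaml --kubeconfig=/tmp/kubeconfig --loglevel=2

SkyDNS calls the OpenShift API service to obtain host names, IP addresses, and similar information, wraps them into standard DNS records, and returns them to the querying client.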
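As a rough illustration of that last step (this is not SkyDNS code), the sketch below turns a service lookup into an A record. A plain dictionary stands in for the OpenShift API call, and the service name, namespace, IP, and TTL of 30 are taken from the `dig` example later in this post. The names `SERVICES` and `resolve` are hypothetical.

```python
# Stand-in for data that would come from the OpenShift API:
# (namespace, service) -> cluster IP. Values taken from the dig example in this post.
SERVICES = {("myproject", "tomcat"): "172.30.16.194"}


def resolve(qname, cluster_domain="cluster.local", ttl=30):
    """Return an A record line for <service>.<namespace>.svc.<cluster_domain>, or None."""
    suffix = ".svc." + cluster_domain
    name = qname.rstrip(".")
    if not name.endswith(suffix):
        return None  # not a service name in the cluster domain
    parts = name[: -len(suffix)].split(".")
    if len(parts) != 2:
        return None  # this sketch only handles <service>.<namespace>
    service, namespace = parts
    ip = SERVICES.get((namespace, service))
    if ip is None:
        return None
    return f"{name}. {ttl} IN A {ip}"


print(resolve("tomcat.myproject.svc.cluster.local"))
```

Real SkyDNS answers more record types than this; the sketch only shows the name-to-record mapping for a service's A record.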

3. DNS configuration in OpenShift

- master

      cat /etc/origin/master/master-config.yaml
      dnsConfig:
        bindAddress: 0.0.0.0:8053
        bindNetwork: tcp4

The master's DNS binds to port 8053 on every IP address.

- node

      cat /etc/origin/node/node-config.yaml
      dnsBindAddress: 127.0.0.1:53
      dnsDomain: cluster.local
      dnsIP: 0.0.0.0
      dnsNameservers: null
      dnsRecursiveResolvConf: /etc/origin/node/resolv.conf

With this mechanism, service names can be resolved anywhere on the host, not only inside containers:

    [root@node2 dnsmasq.d]# dig tomcat.myproject.svc.cluster.local
    ; <<>> DiG 9.9.4-RedHat-9.9.4-72.el7 <<>> tomcat.myproject.svc.cluster.local
    ;; global options: +cmd
    ;; Got answer:
    ;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 719
    ;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0
    ;; QUESTION SECTION:
    ;tomcat.myproject.svc.cluster.local. IN A
    ;; ANSWER SECTION:
    tomcat.myproject.svc.cluster.local. 30 IN A 172.30.16.194
    ;; Query time: 0 msec
    ;; SERVER: 10.0.3.15#53(10.0.3.15)
    ;; WHEN: Thu Jan 03 20:26:06 CST 2019
    ;; MSG SIZE rcvd: 68
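For readers curious about what `dig` puts on the wire, here is a minimal Python sketch that builds the same kind of question: a standard query with the recursion-desired flag, one question, type A, class IN. The function names are my own. `send_query` is included only to show how the packet could be sent over UDP and is not called here, since the server address depends on your environment.

```python
import socket
import struct


def encode_qname(name):
    """Encode a domain name as length-prefixed labels ending with a zero byte."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) < 64:
            raise ValueError(f"invalid label length in {name!r}")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"


def build_query(name, query_id=719, qtype=1):
    """Build a DNS query packet: 12-byte header followed by one question."""
    # Header fields: ID, flags (0x0100 = recursion desired), QDCOUNT=1,
    # ANCOUNT=0, NSCOUNT=0, ARCOUNT=0.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    question = encode_qname(name) + struct.pack("!HH", qtype, 1)  # class 1 = IN
    return header + question


def send_query(packet, server, port=53, timeout=2.0):
    """Send the packet over UDP and return the raw response (not called below)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(packet, (server, port))
        return sock.recv(512)


packet = build_query("tomcat.myproject.svc.cluster.local")
print(len(packet), packet.hex())
```

The query for this name is 52 bytes long. The 68-byte response reported by `dig` is consistent with that question plus one 16-byte compressed A record.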

The service can also be reached from the host:

    [root@node2 dnsmasq.d]# curl tomcat.myproject.svc:8080
    <!DOCTYPE html>
    <html lang="en">
    <head>
    <meta charset="UTF-8" />
    <title>Apache Tomcat/8.5.37</title>
    <link href="favicon.ico" rel="icon" type="image/x-icon" />
    <link href="favicon.ico" rel="shortcut icon" type="image/x-icon" />
    <link href="tomcat.css" rel="stylesheet" type="text/css" />
    </head>
    <body>

4. Query flow

To see more detailed dnsmasq logs, enable log-queries in /etc/dnsmasq.conf and restart the service:

    vi /etc/dnsmasq.conf
    # For debugging purposes, log each DNS query as it passes through
    # dnsmasq.
    log-queries

    systemctl restart dnsmasq

    [root@node2 dnsmasq.d]# journalctl -f -u dnsmasq
    -- Logs begin at Fri 2018-12-28 22:08:53 CST. --
    Jan 03 20:48:12 node2.example.com dnsmasq[33966]: using nameserver 10.72.17.5#53
    Jan 03 20:48:12 node2.example.com dnsmasq[33966]: using nameserver 127.0.0.1#53 for domain in-addr.arpa
    Jan 03 20:48:12 node2.example.com dnsmasq[33966]: using nameserver 127.0.0.1#53 for domain cluster.local
    Jan 03 20:48:22 node2.example.com dnsmasq[33966]: setting upstream servers from DBus
    Jan 03 20:48:22 node2.example.com dnsmasq[33966]: using nameserver 202.96.128.86#53
    Jan 03 20:48:22 node2.example.com dnsmasq[33966]: using nameserver 202.96.134.33#53
    Jan 03 20:48:22 node2.example.com dnsmasq[33966]: using nameserver 10.68.5.26#53
    Jan 03 20:48:22 node2.example.com dnsmasq[33966]: using nameserver 10.72.17.5#53
    Jan 03 20:48:22 node2.example.com dnsmasq[33966]: using nameserver 127.0.0.1#53 for domain in-addr.arpa
    Jan 03 20:48:22 node2.example.com dnsmasq[33966]: using nameserver 127.0.0.1#53 for domain cluster.local
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: query[A] www.baidu.com from 10.0.3.15
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: forwarded www.baidu.com to 202.96.128.86
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: forwarded www.baidu.com to 202.96.134.33
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: forwarded www.baidu.com to 10.68.5.26
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: forwarded www.baidu.com to 10.72.17.5
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: query[AAAA] www.baidu.com from 10.0.3.15
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: forwarded www.baidu.com to 202.96.128.86
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: forwarded www.baidu.com to 202.96.134.33
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: forwarded www.baidu.com to 10.68.5.26
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: forwarded www.baidu.com to 10.72.17.5
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: reply www.baidu.com is <CNAME>
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: reply www.a.shifen.com is 14.215.177.38
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: reply www.a.shifen.com is 14.215.177.39
    Jan 03 20:48:39 node2.example.com dnsmasq[33966]: reply www.baidu.com is <CNAME>
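With `log-queries` enabled, the journal gets long quickly. The following Python sketch groups the `query`, `forwarded`, and `reply` lines by name so the path of a single lookup is easier to follow. It assumes the line format shown in the log above. The regular expression and the name `summarize` are hypothetical.

```python
import re

# Matches the three event kinds seen in the log above:
#   query[A] <name> from <client>, forwarded <name> to <server>, reply <name> is <answer>
EVENT = re.compile(
    r"dnsmasq\[\d+\]: (?P<kind>query\[\w+\]|forwarded|reply) "
    r"(?P<name>\S+) (?:from|to|is) (?P<peer>\S+)"
)

# A subset of the log lines above.
SAMPLE = [
    "Jan 03 20:48:39 node2.example.com dnsmasq[33966]: query[A] www.baidu.com from 10.0.3.15",
    "Jan 03 20:48:39 node2.example.com dnsmasq[33966]: forwarded www.baidu.com to 202.96.128.86",
    "Jan 03 20:48:39 node2.example.com dnsmasq[33966]: forwarded www.baidu.com to 202.96.134.33",
    "Jan 03 20:48:39 node2.example.com dnsmasq[33966]: forwarded www.baidu.com to 10.68.5.26",
    "Jan 03 20:48:39 node2.example.com dnsmasq[33966]: forwarded www.baidu.com to 10.72.17.5",
    "Jan 03 20:48:39 node2.example.com dnsmasq[33966]: reply www.baidu.com is <CNAME>",
    "Jan 03 20:48:39 node2.example.com dnsmasq[33966]: reply www.a.shifen.com is 14.215.177.38",
    "Jan 03 20:48:39 node2.example.com dnsmasq[33966]: reply www.a.shifen.com is 14.215.177.39",
]


def summarize(log_lines):
    """Group clients, upstream servers, and answers by queried name."""
    summary = {}
    for line in log_lines:
        match = EVENT.search(line)
        if match is None:
            continue
        entry = summary.setdefault(
            match.group("name"),
            {"clients": set(), "upstreams": set(), "answers": []},
        )
        kind, peer = match.group("kind"), match.group("peer")
        if kind.startswith("query"):
            entry["clients"].add(peer)
        elif kind == "forwarded":
            entry["upstreams"].add(peer)
        else:
            entry["answers"].append(peer)
    return summary


for name, info in summarize(SAMPLE).items():
    print(name, info)
```

In the sample, the query for www.baidu.com from 10.0.3.15 is forwarded to all four upstream servers, and the answer comes back as a CNAME to www.a.shifen.com with two addresses.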