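If you like movies and want to watch a new release that you missed in the cinema, and you have not found a good way to get hold of it, you can download it from Dianying Tiantang (电影天堂, www.dy2018.com). This post shows one approach: crawling the site with Scrapy.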

1.dytt.py

    # -*- coding: utf-8 -*-
    import random

    import scrapy
    from scrapy.linkextractors import LinkExtractor

    from Dytt.items import DyttItem
    from Dytt.settings import USER_AGENT


    class DyttSpider(scrapy.Spider):
        name = 'dytt'
        allowed_domains = ['www.dy2018.com']
        # Browser-like headers sent with every detail-page request
        headers = {
            'Accept': 'application/json, text/javascript, */*; q=0.01',
            'Accept-Encoding': 'gzip, deflate',
            'Accept-Language': 'zh-CN,zh;q=0.8',
            'Connection': 'keep-alive',
            'Content-Length': '11',
            'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8',
            'Host': 'www.dy2018.com',
            'Origin': 'http://www.dy2018.com',
            'Referer': 'http://www.dy2018.com/html/tv/oumeitv/index.html',
            # USER_AGENT in settings.py is a collection of strings, so pick one
            'User-Agent': random.choice(list(USER_AGENT)),
            'X-Requested-With': 'XMLHttpRequest',
        }
        start_urls = ['http://www.dy2018.com/html/tv/oumeitv/index.html']

        def parse(self, response):
            # Follow every link found in the listing area of the index page
            le = LinkExtractor(restrict_css='div.co_area1 div.co_content2')
            for link in le.extract_links(response):
                yield scrapy.Request(link.url, callback=self.parse_url,
                                     headers=self.headers)

        def parse_url(self, response):
            sel = response.css('div#Zoom')
            # Extract the text nodes once; each field sits at a fixed position
            texts = sel.xpath('./p/text()').extract()
            dytt = DyttItem()
            dytt['china_name'] = texts[1]
            dytt['english_name'] = texts[2]
            dytt['year'] = texts[3]
            dytt['home'] = texts[4]
            dytt['type'] = texts[5]
            dytt['time'] = texts[8]
            dytt['director'] = texts[15]
            dytt['role'] = texts[16]
            dytt['ftp'] = sel.xpath('(.//tbody)[1]//a/@href').extract()[0]
            dytt['thunder'] = sel.xpath('(.//tbody)[2]//a/@href').extract()[0]
            yield dytt
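The fixed positions used in `parse_url` (1, 2, 3, ... 16) only work when every detail page has the same number of text nodes; a shorter page would raise `IndexError`. A minimal sketch of a defensive lookup follows. The helper name `pick` and the sample list are hypothetical and not part of the original spider or of Scrapy.

```python
def pick(values, index, default=''):
    """Return values[index] with surrounding whitespace removed,
    or `default` when the list is too short."""
    try:
        return values[index].strip()
    except IndexError:
        return default


# Made-up sample standing in for sel.xpath('./p/text()').extract()
texts = ['', ' first field ', 'second field']

print(pick(texts, 1))                      # present: stripped value
print(pick(texts, 15, default='unknown'))  # missing: falls back to default
```

In the spider this could replace a direct lookup, for example `dytt['director'] = pick(texts, 15)`, so that one malformed page does not stop the crawl.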

2.items.py

    import scrapy


    class DyttItem(scrapy.Item):
        china_name = scrapy.Field()
        english_name = scrapy.Field()
        year = scrapy.Field()
        home = scrapy.Field()
        type = scrapy.Field()
        time = scrapy.Field()
        director = scrapy.Field()
        role = scrapy.Field()
        ftp = scrapy.Field()
        thunder = scrapy.Field()

3.pipelines.py

    # -*- coding: utf-8 -*-
    import codecs
    import json


    class DyttPipeline(object):
        def open_spider(self, spider):
            # Important: open the output file with UTF-8 encoding
            self.file = codecs.open('dytt1.json', 'w', encoding='utf-8')

        def close_spider(self, spider):
            self.file.close()

        def process_item(self, item, spider):
            # Important: ensure_ascii=False keeps Chinese text readable
            # instead of writing \uXXXX escapes; one item per line
            line = json.dumps(dict(item), ensure_ascii=False) + "\n"
            self.file.write(line)
            return item

4.settings.py

    # Pool of browser User-Agent strings
    USER_AGENT = [
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
        "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
    ]

    CONCURRENT_REQUESTS = 16  # at most 16 requests in flight at once
    DOWNLOAD_DELAY = 0.2      # wait 0.2 s between consecutive requests to the same site

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    FEED_EXPORT_FIELDS = ['china_name', 'english_name', 'year', 'home', 'type',
                          'time', 'director', 'role', 'ftp', 'thunder']

    COOKIES_ENABLED = False

    ITEM_PIPELINES = {
        'Dytt.pipelines.DyttPipeline': 300,
    }

The pipeline in pipelines.py writes the scraped items to a .json file.
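To see why the pipeline passes `ensure_ascii=False` to `json.dumps`, here is a minimal standalone sketch. The helper name `to_json_line`, the newline separator, and the sample record are assumptions made for illustration; they are not taken from the project.

```python
import json


def to_json_line(record):
    """Serialize one record as a single JSON line, keeping non-ASCII text as-is."""
    return json.dumps(record, ensure_ascii=False) + "\n"


# Made-up sample record with a Chinese value
record = {"china_name": "示例剧名", "year": "2017"}

readable = to_json_line(record)   # Chinese characters stay readable
escaped = json.dumps(record)      # default: non-ASCII becomes \uXXXX escapes

print(readable, end="")
print(escaped)
```

Both forms load back to the same dictionary with `json.loads`; the difference is only in how readable the file is when opened in a text editor.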

2. When the crawl starts, the server returns ERROR 400

Solution: add request headers in dytt.py (the `headers` dictionary) and run the spider again.

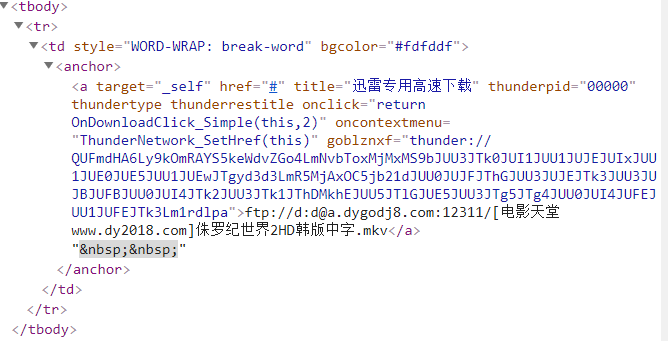

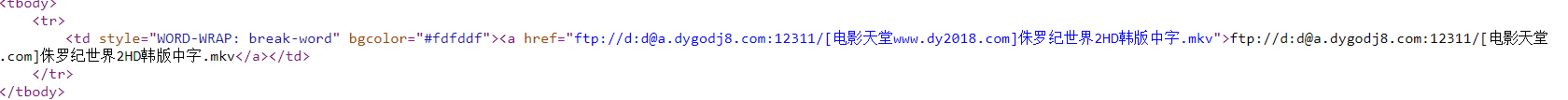
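The header fix can be sketched outside Scrapy as a small function that builds a browser-like header dictionary and picks one User-Agent from a pool. The function name `build_headers` and the two-entry pool are hypothetical, and which headers the site actually requires to avoid the 400 response is an assumption; the values are copied from the spider.

```python
import random


def build_headers(user_agents, referer):
    """Build browser-like request headers with a randomly chosen User-Agent."""
    return {
        'Accept-Language': 'zh-CN,zh;q=0.8',
        'Connection': 'keep-alive',
        'Referer': referer,
        # A header value must be a single string, so choose one from the pool
        'User-Agent': random.choice(list(user_agents)),
    }


# Shortened pool for illustration; settings.py holds the full list
pool = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
]
headers = build_headers(pool, 'http://www.dy2018.com/html/tv/oumeitv/index.html')
print(headers['User-Agent'])
```

The resulting dictionary can be passed as the `headers` argument of `scrapy.Request`, which is how the spider attaches its headers to each detail-page request.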

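3. The thunder links cannot be scraped. Open the page source in the browser (this is the content the crawler actually receives) and you will see that the thunder link details are not present in it.

View the page source:

If you run into any other problems, feel free to leave a comment!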
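The same check can be done in code by searching the raw HTML for `thunder://` URLs. The sketch below is only an illustration: the function name `find_thunder_links` and both HTML snippets are made up, and the pattern assumes the link body consists of Base64 characters.

```python
import re

# thunder:// followed by Base64 characters (assumed link shape)
THUNDER_RE = re.compile(r'thunder://[A-Za-z0-9+/=]+')


def find_thunder_links(html):
    """Return every thunder:// URL that appears literally in the HTML text."""
    return THUNDER_RE.findall(html)


# Made-up snippets: one with a literal link, one where the link is absent
with_link = '<a href="thunder://QUFleGFtcGxlWlo=">download</a>'
without_link = '<a href="#">download</a>'

print(find_thunder_links(with_link))
print(find_thunder_links(without_link))
```

Running it on `response.text` inside `parse_url` and getting an empty list would confirm that the links are not in the downloaded source, so an XPath over that source cannot find them either.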