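We have already covered how to use PVs and PVCs, but every PV so far was static. In other words, to use a PVC we first had to create a matching PV by hand. As we noted before, this approach often falls short of real needs. One application may need storage that handles highly concurrent access, while another cares most about read/write speed. For StatefulSet workloads in particular, simply using static PVs is a poor fit. In these cases we need dynamically provisioned PVs, which is what today's topic, StorageClass, is for.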

PersistentVolume dynamic provisioning

Create

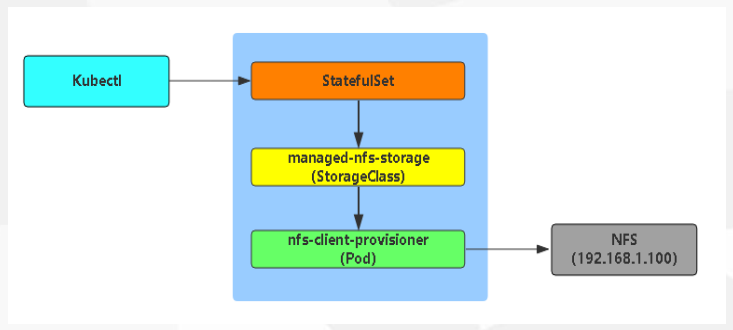

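To use a StorageClass, we have to install a matching automatic provisioning program. Our storage backend here is NFS, so we need the nfs-client auto-provisioner, also called a Provisioner. It uses an NFS server we have already configured to create persistent volumes automatically. In other words, it creates the PVs for us.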

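- Automatically created PVs are stored as directories named `${namespace}-${pvcName}-${pvName}` in the shared data directory on the NFS server.
- When such a PV is deleted, its directory stays on the NFS server and is renamed to `archived-${namespace}-${pvcName}-${pvName}`. This archiving is controlled by the StorageClass parameter `archiveOnDelete`, which class.yaml below sets to `"true"`.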
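To make the naming rule concrete, here is a hypothetical sketch of roughly what the PV created for a PVC named `test-pvc` in the `default` namespace could look like. It uses the NFS server this article relies on (192.168.1.123, shared directory /data/k8s) and the `managed-nfs-storage` class defined below.

Several values are assumptions and are labeled in the comments:
- The UID is a made-up placeholder. A dynamically provisioned PV is named `pvc-` followed by the claim's UID.
- The size and access mode simply mirror whatever the claim requested.

```yaml
# Hypothetical sketch: roughly the PV the nfs-client provisioner would create
# for a PVC named "test-pvc" in namespace "default".
# The UID below is a made-up placeholder, not real output.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pvc-00000000-0000-0000-0000-000000000000   # ${pvName} = "pvc-" + the PVC's UID
  annotations:
    pv.kubernetes.io/provisioned-by: fuseim.pri/ifs  # the provisioner's PROVISIONER_NAME
spec:
  storageClassName: managed-nfs-storage
  capacity:
    storage: 1Mi                           # assumed: copied from the PVC's request
  accessModes:
    - ReadWriteMany                        # assumed: copied from the PVC
  persistentVolumeReclaimPolicy: Delete    # StorageClass default when reclaimPolicy is unset
  nfs:
    server: 192.168.1.123
    # ${namespace}-${pvcName}-${pvName} inside the shared directory
    path: /data/k8s/default-test-pvc-pvc-00000000-0000-0000-0000-000000000000
# After the PV is deleted (archiveOnDelete: "true"), the directory is renamed to
# /data/k8s/archived-default-test-pvc-pvc-00000000-0000-0000-0000-000000000000
```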

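Before deploying nfs-client we of course need a working NFS server. We installed one earlier: the server address is 192.168.1.123 and the shared data directory is /data/k8s/. All that remains is to deploy nfs-client, for which we can follow its documentation directly: https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client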

View the files

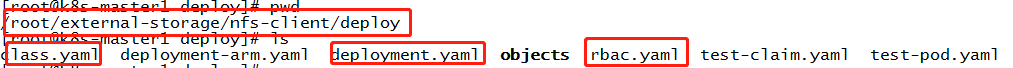

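Clone the repository with `git clone https://github.com/kubernetes-incubator/external-storage.git`. A `/tree/master/...` browser URL cannot be cloned directly. The files we need are the YAML files highlighted in red under nfs-client. They only need small changes before use, for example the NFS server address and path in deployment.yaml.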

class.yaml

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name; must match the deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "true"
```

rbac.yaml

```yaml
kind: ServiceAccount
apiVersion: v1
metadata:
  name: nfs-client-provisioner
---
# Cluster-wide permissions: create/delete PVs, watch PVCs and StorageClasses, emit events
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
# Namespaced permissions on endpoints, used for leader election
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```

deployment.yaml

```yaml
# The nfs-client-provisioner ServiceAccount is already defined in rbac.yaml;
# defining it here as well would make `kubectl create` fail with AlreadyExists.
kind: Deployment
apiVersion: apps/v1          # extensions/v1beta1 Deployments were removed in Kubernetes 1.16
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  selector:                  # required by apps/v1; must match the template labels
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs      # must match "provisioner" in class.yaml
            - name: NFS_SERVER
              value: 192.168.1.123
            - name: NFS_PATH
              value: /data/k8s
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.1.123
            path: /data/k8s
```

Deploy

```bash
kubectl create -f class.yaml
kubectl create -f rbac.yaml
kubectl create -f deployment.yaml
```

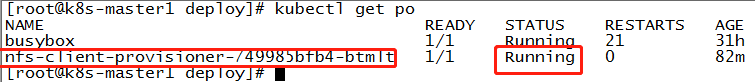

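Check the running status

Test

Next, let's again use a simple example to test a PVC provisioned through the StorageClass. The pod below (test-pod.yaml) mounts a claim named test-pvc at /mnt and creates a SUCCESS file in it: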

```yaml
kind: Pod
apiVersion: v1
metadata:
  name: test-pod
spec:
  containers:
    - name: test-pod
      image: busybox
      imagePullPolicy: IfNotPresent
      command:
        - "/bin/sh"
      args:
        - "-c"
        - "touch /mnt/SUCCESS && exit 0 || exit 1"
      volumeMounts:
        - name: nfs-pvc
          mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: test-pvc
```
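The pod mounts a claim named `test-pvc`, but that claim's manifest is not shown here. Below is a minimal sketch of such a PVC. It asks the `managed-nfs-storage` class for a volume, so the provisioner creates the PV automatically. The file name, the 1Mi size and the ReadWriteMany access mode are assumptions, not values from the original setup. Create it with `kubectl create -f` before creating the pod.

```yaml
# test-pvc.yaml (hypothetical file name); metadata.name must match claimName in the pod
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-pvc
spec:
  storageClassName: managed-nfs-storage   # the StorageClass defined in class.yaml
  accessModes:
    - ReadWriteMany        # assumed; NFS volumes can be mounted read-write by many nodes
  resources:
    requests:
      storage: 1Mi         # assumed size; the nfs-client provisioner does not enforce it
```

Once the pod completes, the SUCCESS file should appear in a directory named after the `${namespace}-${pvcName}-${pvName}` pattern under /data/k8s on the NFS server.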

Next, test with nginx-test.yaml, which uses a StatefulSet:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
    - port: 80
      name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx
  serviceName: "nginx"
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      terminationGracePeriodSeconds: 10
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: www
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: "managed-nfs-storage"
        resources:
          requests:
            storage: 3Gi
```

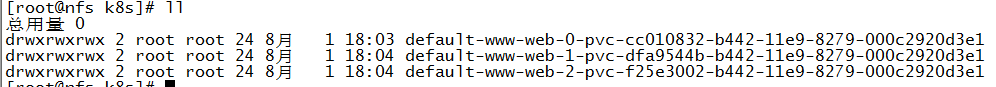

Check the three replicas

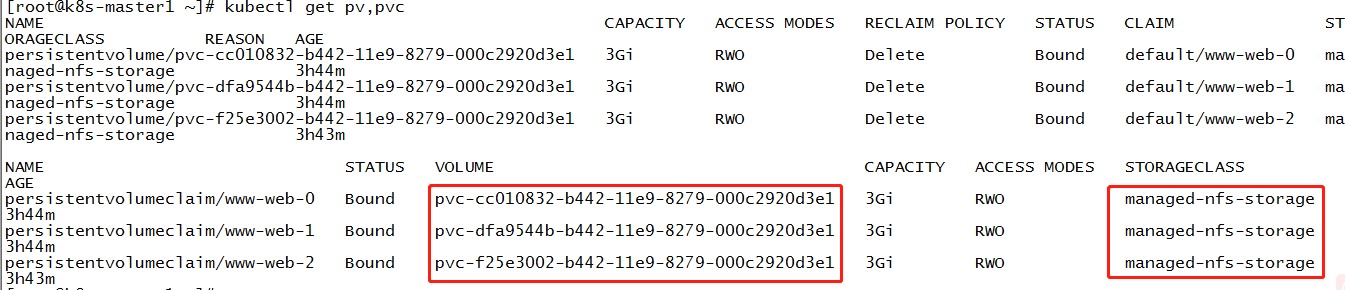

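As you can see, three PVC objects were created as well. Each is named by combining the volumeClaimTemplate name (www) with the Pod name: www-web-0, www-web-1 and www-web-2. All three PVCs are in the Bound state. As expected, listing the PVs also shows the three corresponding PV objects.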
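For reference, the claim the StatefulSet controller derives from the `www` template for Pod web-0 is roughly equivalent to the hand-written PVC below. This is a simplified sketch, and the namespace `default` is an assumption. The controller adds the StatefulSet selector's labels (here `app: nginx`) and manages other fields itself; those fields are omitted. web-1 and web-2 get identical claims named www-web-1 and www-web-2.

```yaml
# Simplified sketch of the PVC generated for Pod web-0 from volumeClaimTemplates
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: www-web-0          # <volumeClaimTemplate name>-<Pod name>
  namespace: default       # assumed: the StatefulSet was created in "default"
  labels:
    app: nginx             # taken from the StatefulSet's selector
spec:
  accessModes: [ "ReadWriteOnce" ]
  storageClassName: "managed-nfs-storage"
  resources:
    requests:
      storage: 3Gi
```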

Check the PVs and PVCs

Check the NFS shared directory