Correction: I made a mistake earlier. I should have been following the Fall 2017 course, but I watched the Spring one instead.

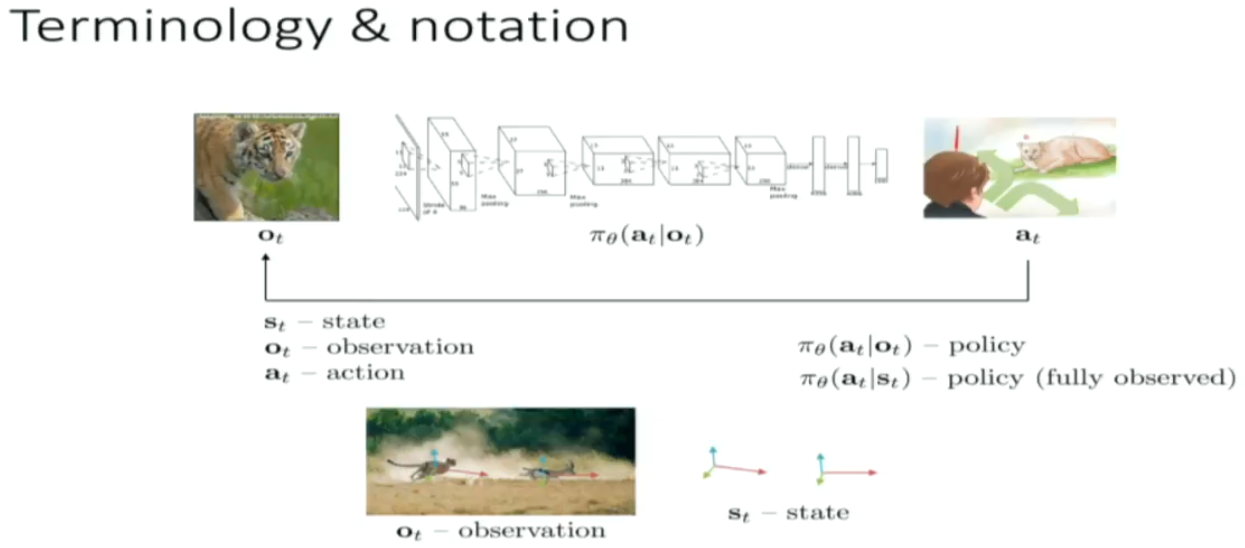

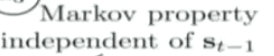

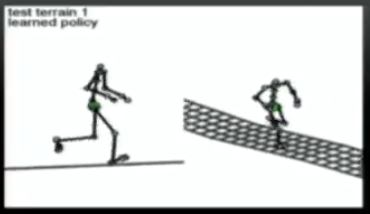

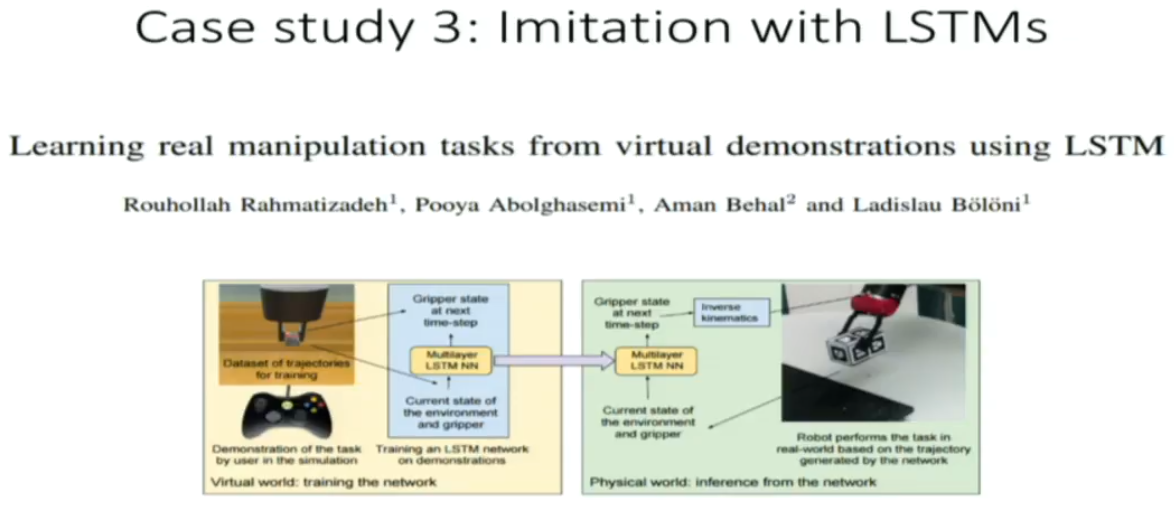

A neural network controls a virtual robot by imitating human motion.

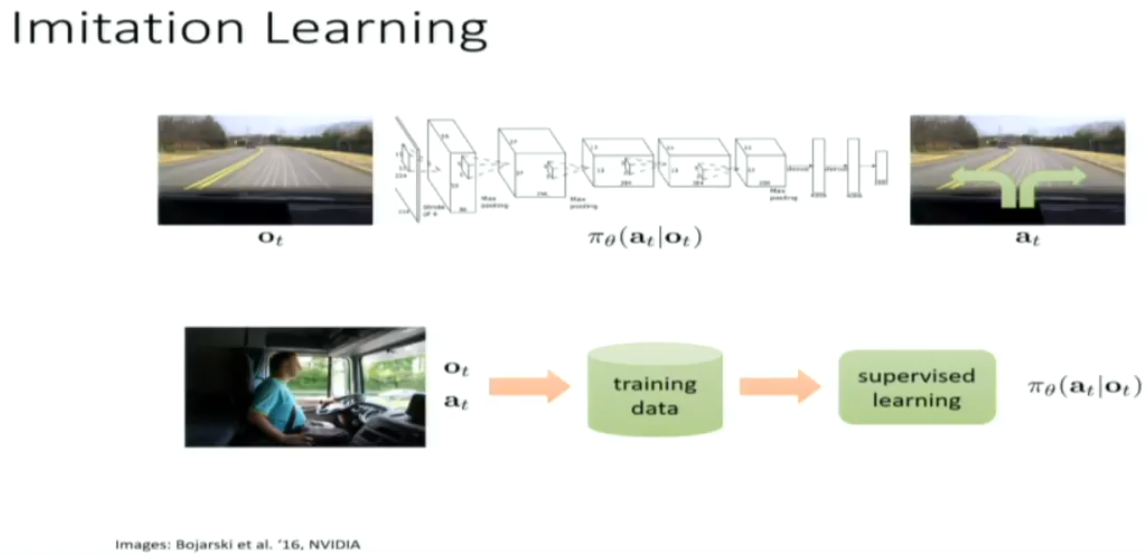

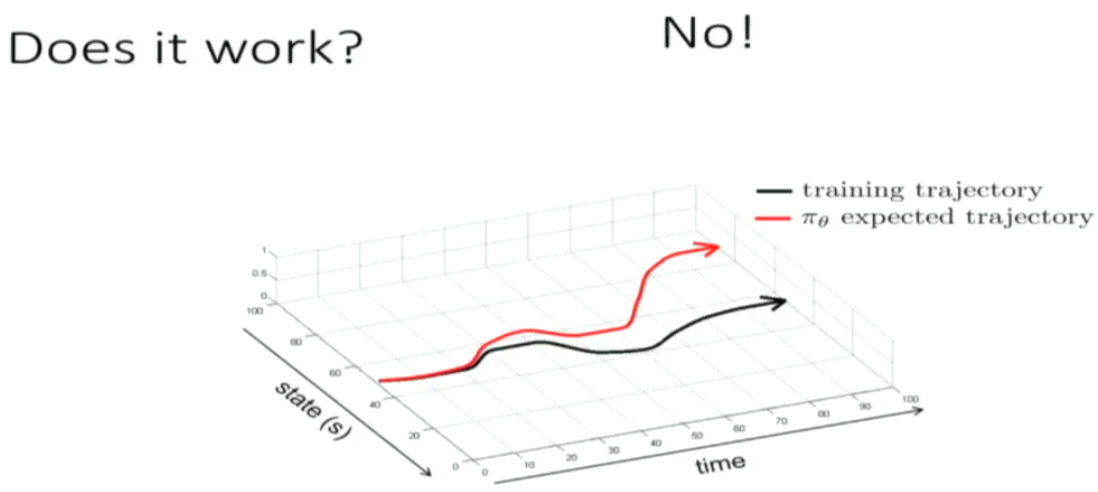

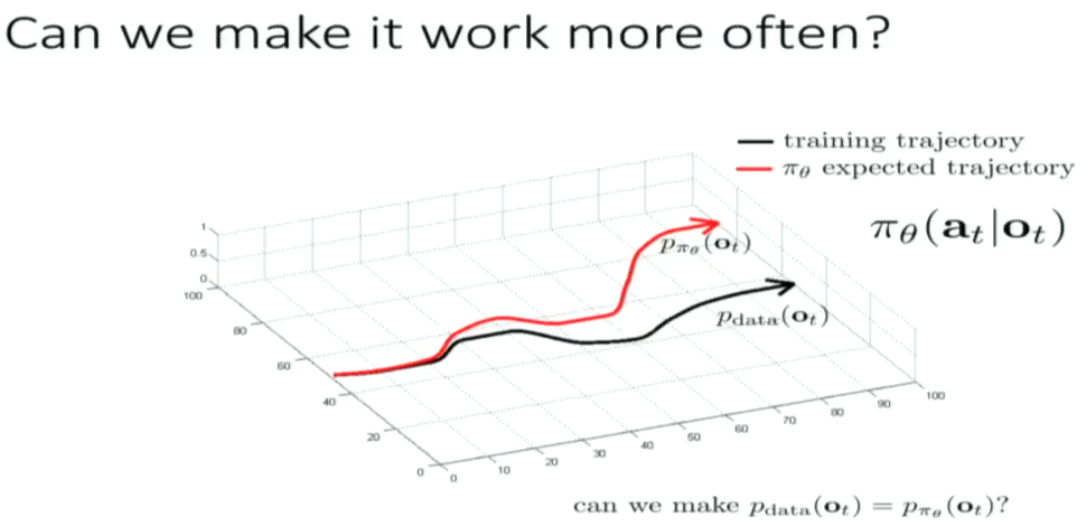

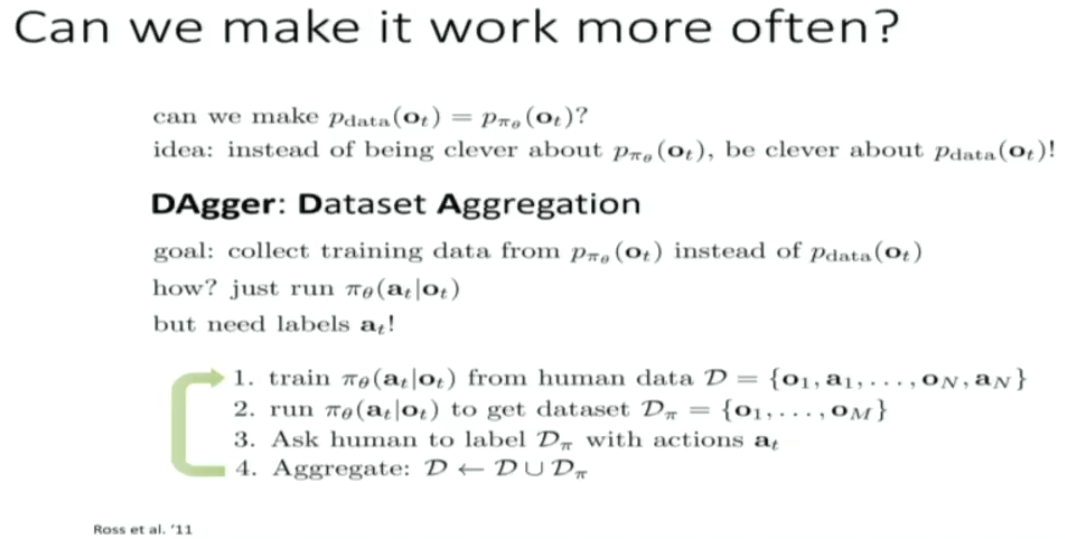

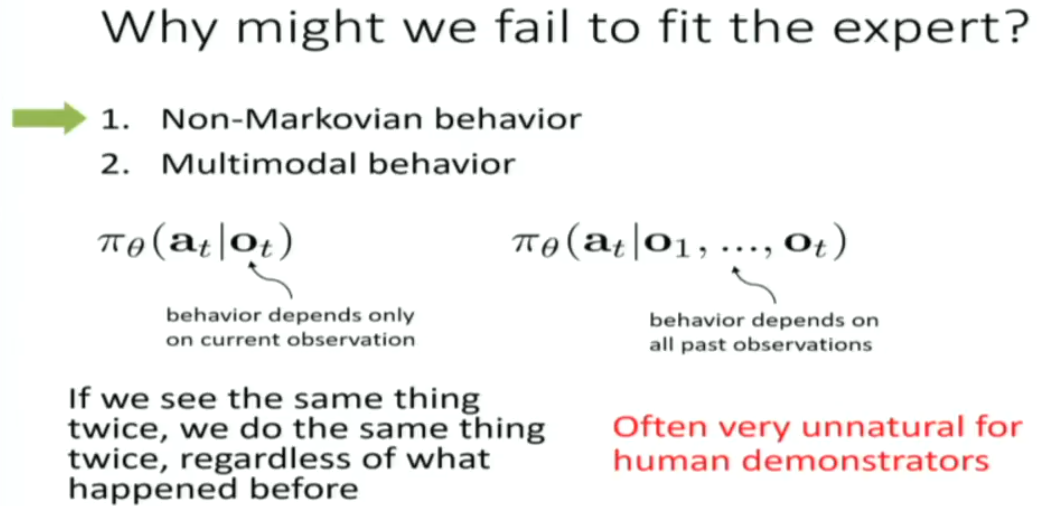

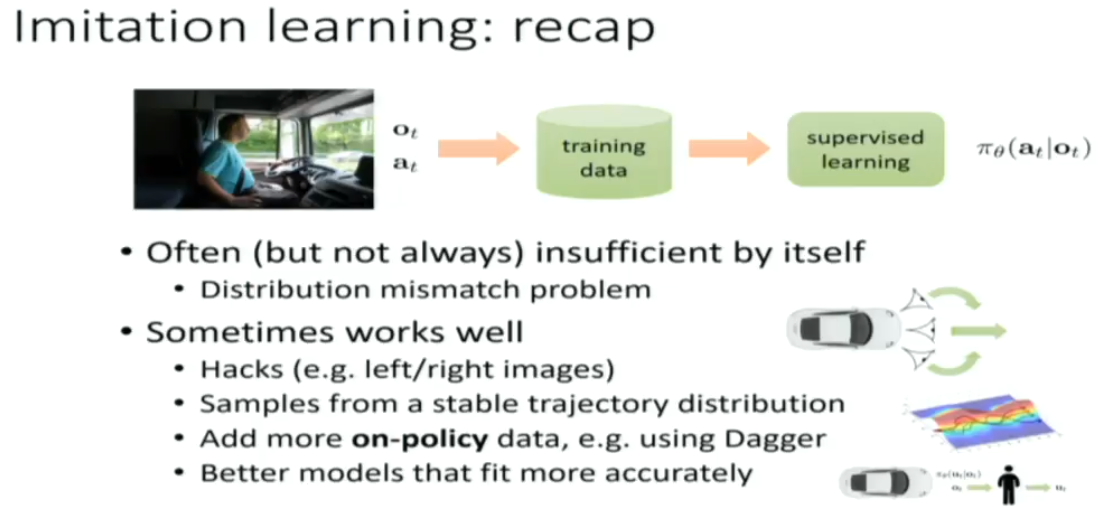

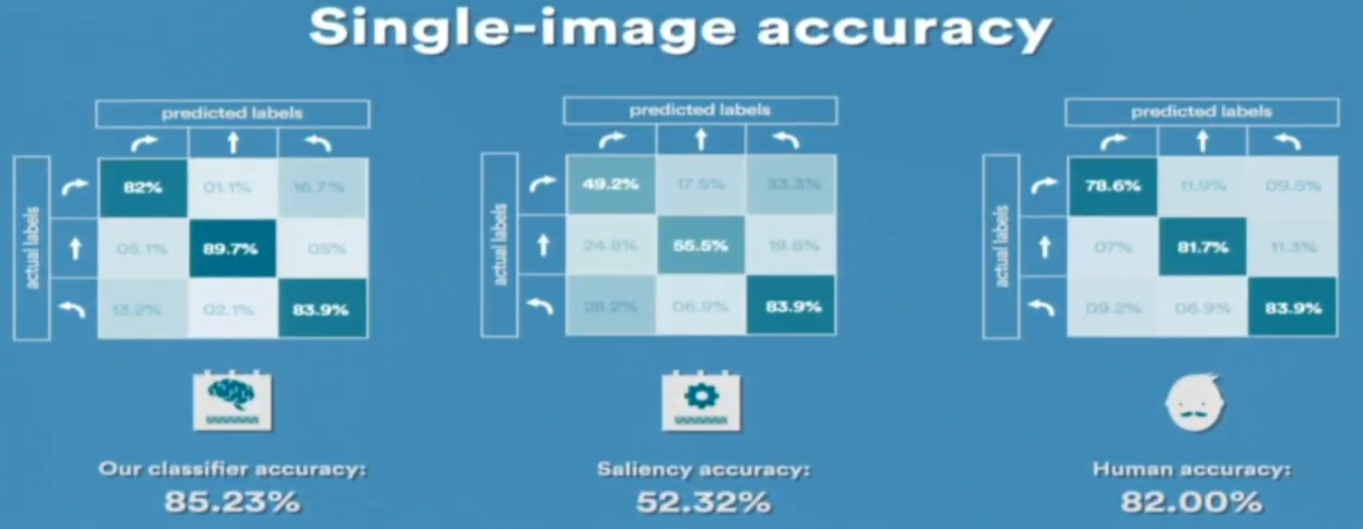

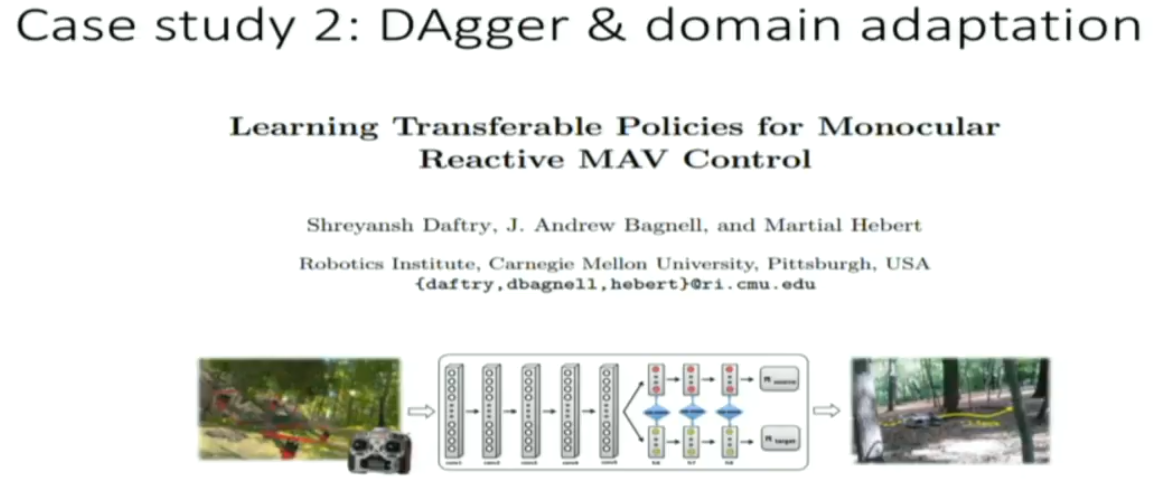

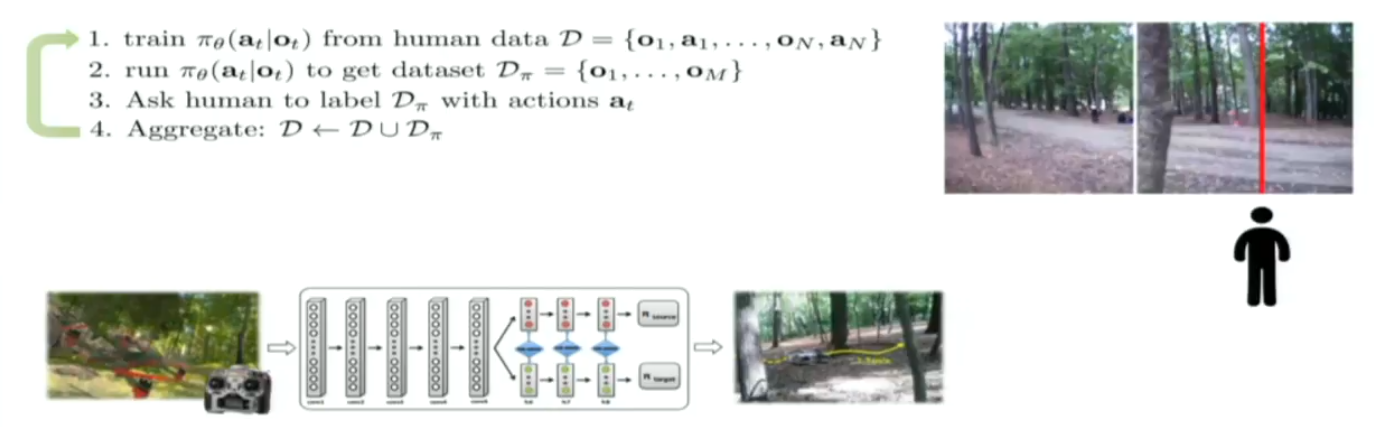

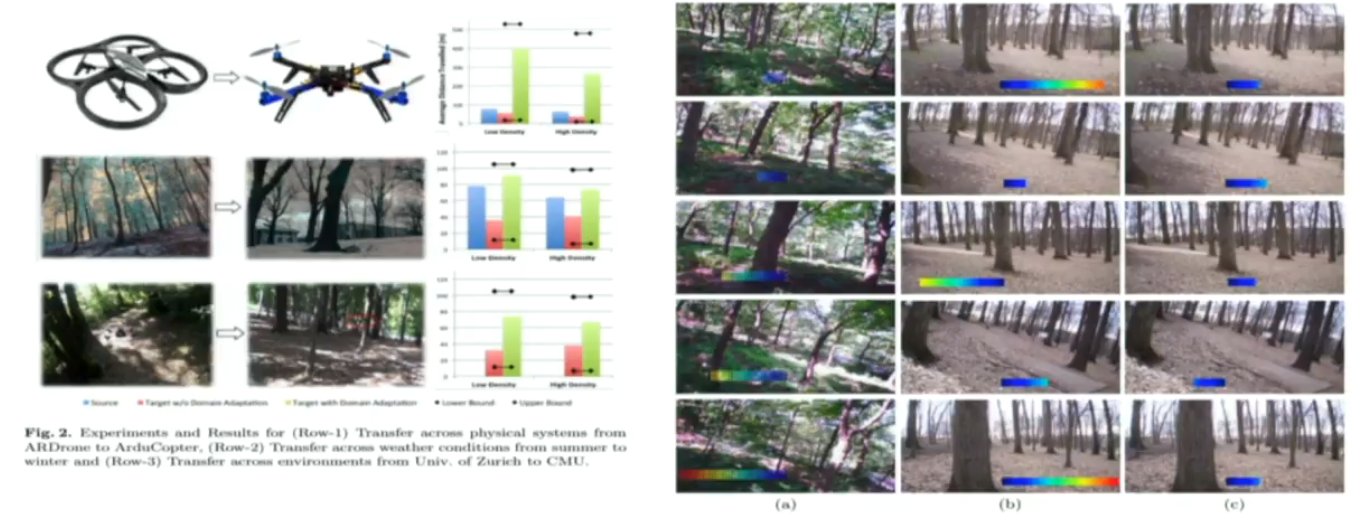

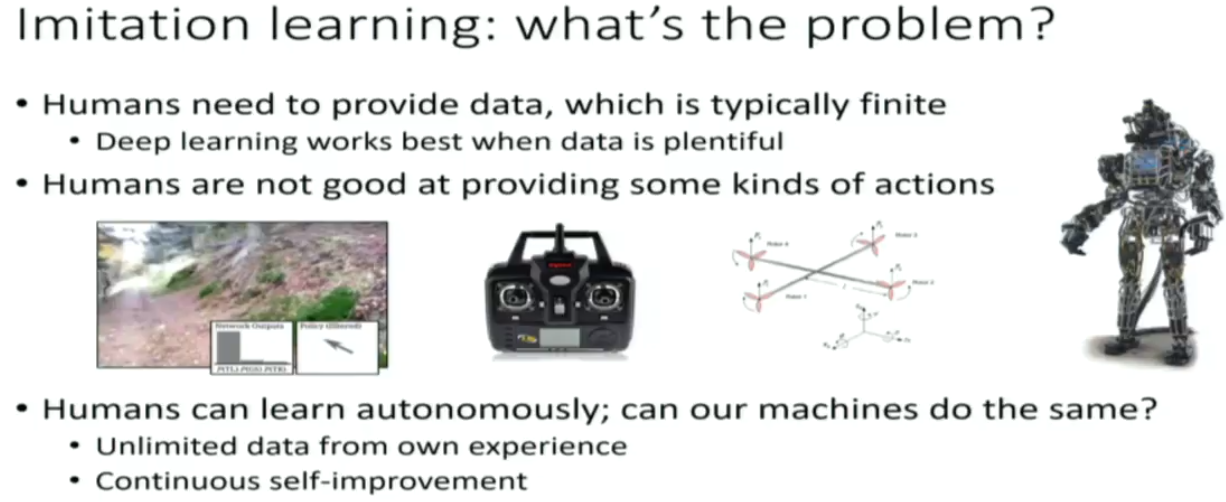

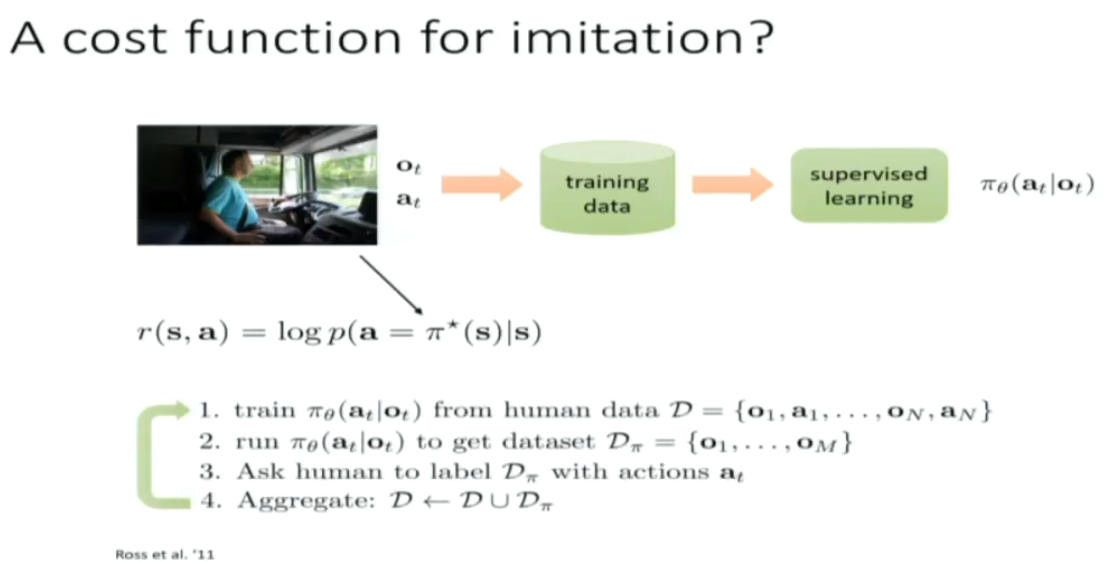

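Domain shift (distributional shift) causes plain supervised learning to fail in imitation learning: once the learned policy makes a small mistake, it reaches observations that are rare in the expert's data, makes bigger mistakes there, and the errors compound.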

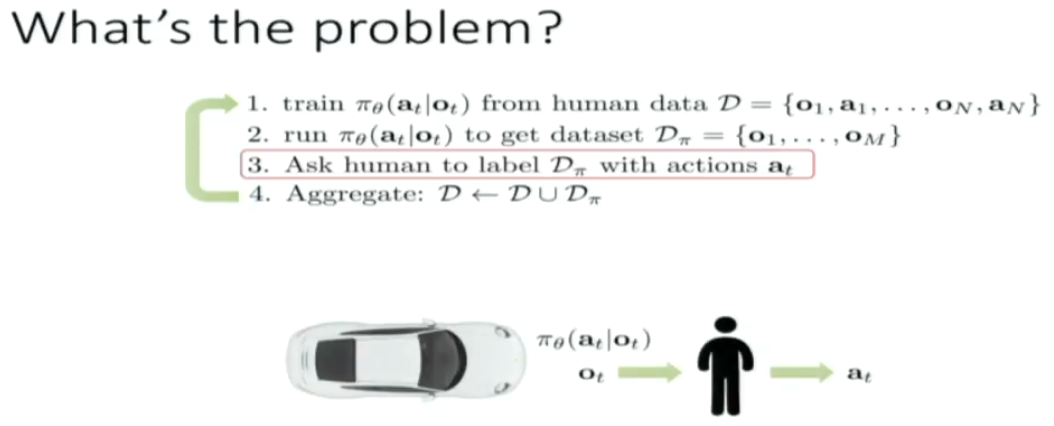

In step 3, the human expert labels the observations the policy visited with the right action, e.g. "turn left!!!"
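Step 3 is the labeling step of DAgger (Dataset Aggregation): train on expert data, run the current policy, have the expert label the observations it actually visited, aggregate, and retrain. Below is a minimal sketch under made-up assumptions: a 1-D toy "lane" where the state is a lateral offset, a hypothetical scripted `expert` that steers back toward the center, and a lookup-table policy trained by majority vote. None of these come from the lecture; they only make the loop runnable.

```python
import random
from collections import Counter, defaultdict

def expert(state):
    """Hypothetical expert: steer back toward the lane center at offset 0."""
    if state > 0:
        return -1  # turn left
    if state < 0:
        return 1   # turn right
    return 0       # go straight

def step(state, action, rng):
    """Toy dynamics: the action shifts the offset; random drift sometimes pushes the car sideways."""
    drift = rng.choice((-1, 0, 0, 0, 1))
    return max(-10, min(10, state + action + drift))

def rollout(policy, rng, horizon=30):
    """Run a policy from the center and return the observations it visits."""
    state, visited = 0, []
    for _ in range(horizon):
        visited.append(state)
        state = step(state, policy(state), rng)
    return visited

def train(dataset):
    """Supervised learning: majority-vote action per observed state; unseen states default to straight."""
    votes = defaultdict(Counter)
    for obs, act in dataset:
        votes[obs][act] += 1
    table = {obs: c.most_common(1)[0][0] for obs, c in votes.items()}
    return lambda s: table.get(s, 0)

def dagger(iterations=5, seed=0):
    rng = random.Random(seed)
    # 1. Train the policy on human (expert) demonstrations.
    data = [(s, expert(s)) for s in rollout(expert, rng)]
    policy = train(data)
    for _ in range(iterations):
        # 2. Run the current policy to collect the observations it actually reaches.
        visited = rollout(policy, rng)
        # 3. Ask the expert to label those observations with actions.
        data += [(s, expert(s)) for s in visited]
        # 4. Aggregate the datasets, then go back to step 1 and retrain.
        policy = train(data)
    return policy, data

policy, data = dagger()
print(len(data), "labeled observations")
```

The key design point is that step 3 labels states drawn from the *learner's* distribution, which is exactly what closes the gap described by the domain-shift argument above.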

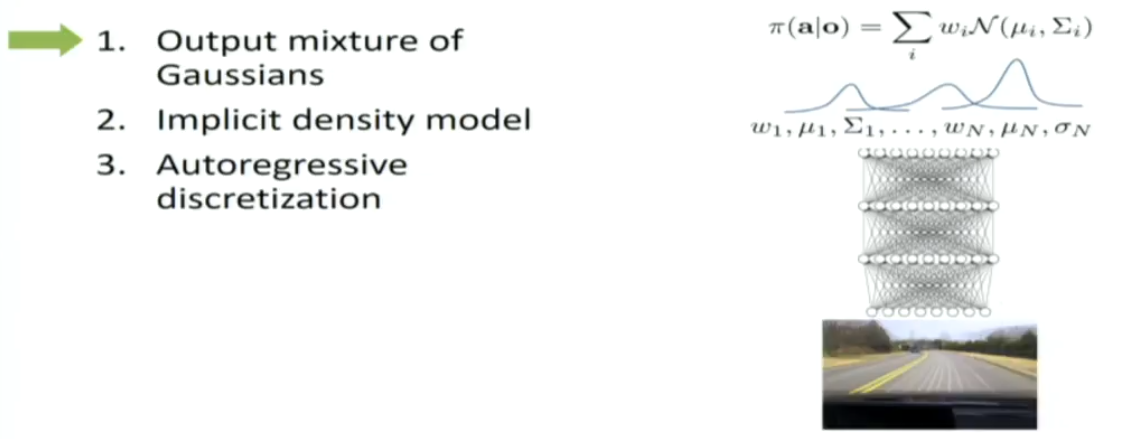

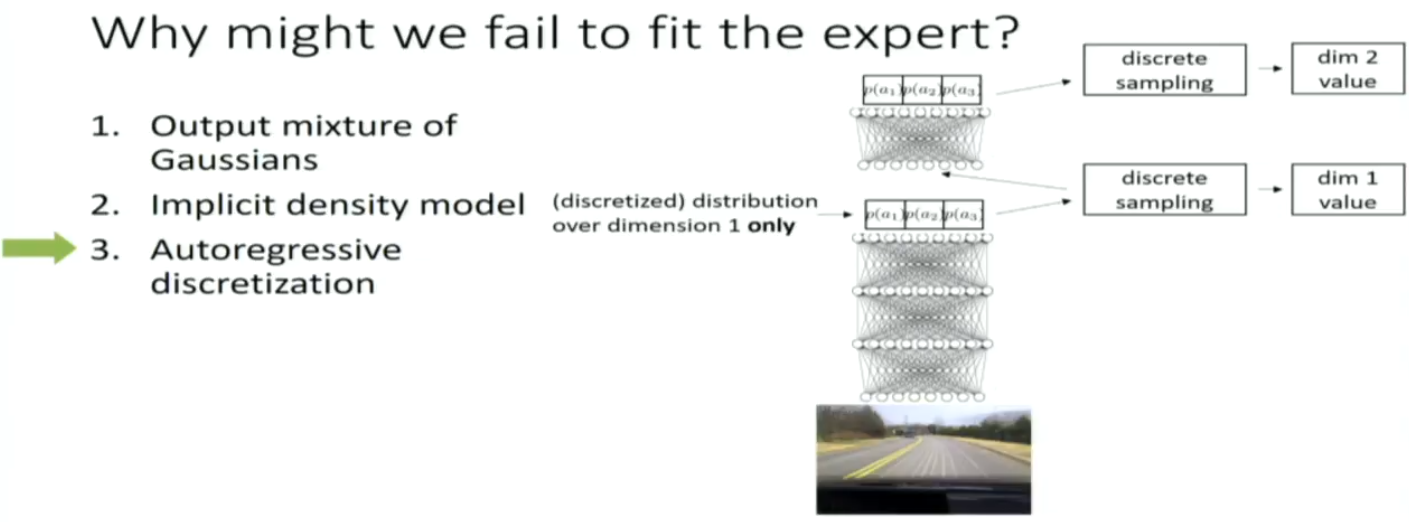

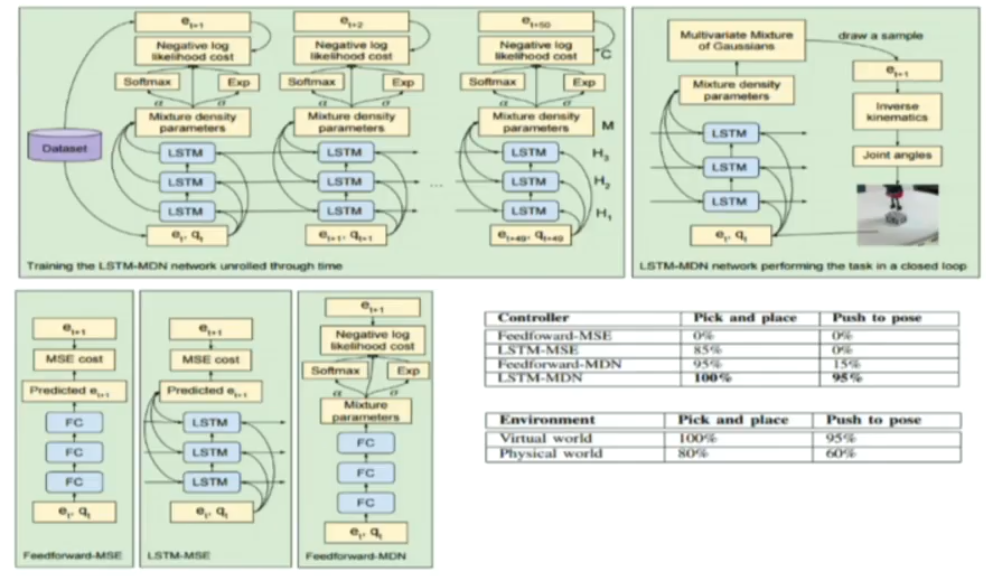

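We don't want the average of the two expert behaviors (e.g. if some demonstrations pass an obstacle on the left and others on the right, the average drives straight into it). When the actions are discrete, the model works well: a softmax (categorical) output can put high probability on both choices.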

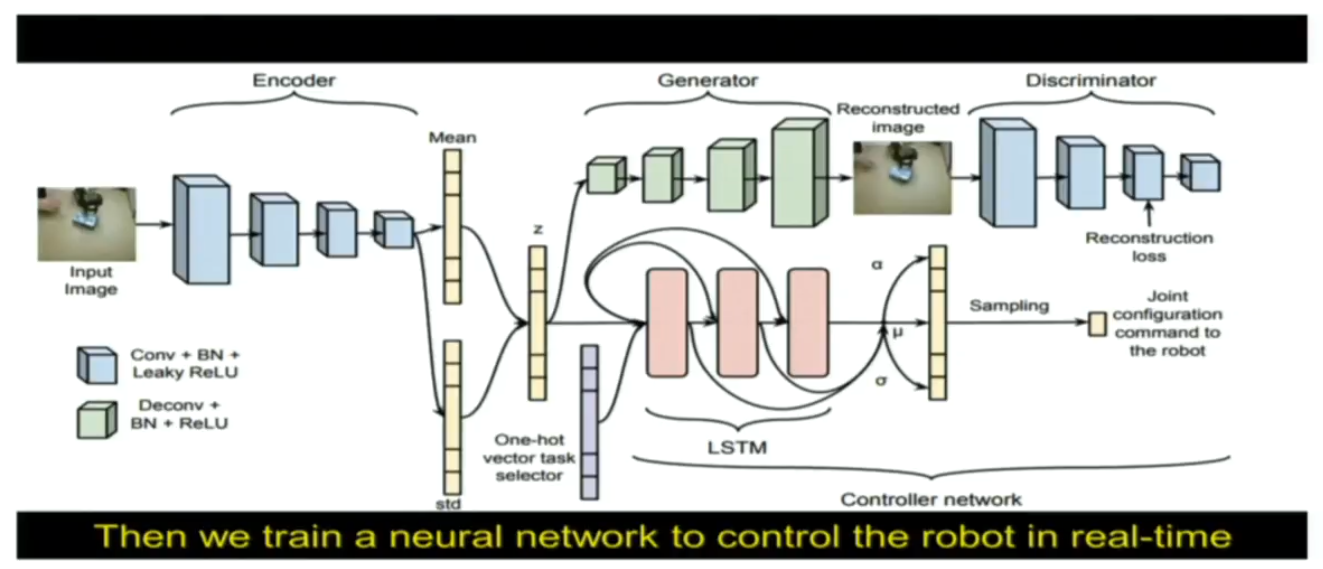

However, for continuous actions the output is usually a single Gaussian, which has only one mode, so it ends up averaging the two behaviors.
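A toy illustration with made-up data: half of the demonstrations at one observation steer at -1 (go left) and half at +1 (go right). The maximum-likelihood Gaussian puts its mean, and therefore its most likely action, at 0, the one action nobody took, while a categorical distribution over the discrete actions keeps both modes.

```python
from collections import Counter
from statistics import fmean, pstdev

# Hypothetical demonstrations at the same observation: half go left (-1), half go right (+1).
actions = [-1.0] * 50 + [1.0] * 50

# Maximum-likelihood single Gaussian: mean = sample mean, std = population std.
mu, sigma = fmean(actions), pstdev(actions)
print(f"Gaussian: mean {mu:+.2f}, std {sigma:.2f}")  # Gaussian: mean +0.00, std 1.00

# Maximum-likelihood categorical over the discrete actions: empirical frequencies.
counts = Counter(actions)
probs = {a: n / len(actions) for a, n in sorted(counts.items())}
print("Categorical:", probs)  # Categorical: {-1.0: 0.5, 1.0: 0.5}
```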

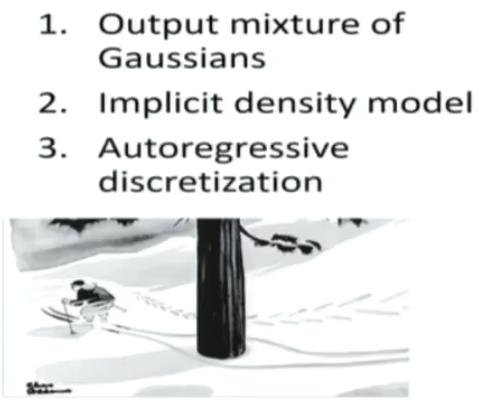

solution:

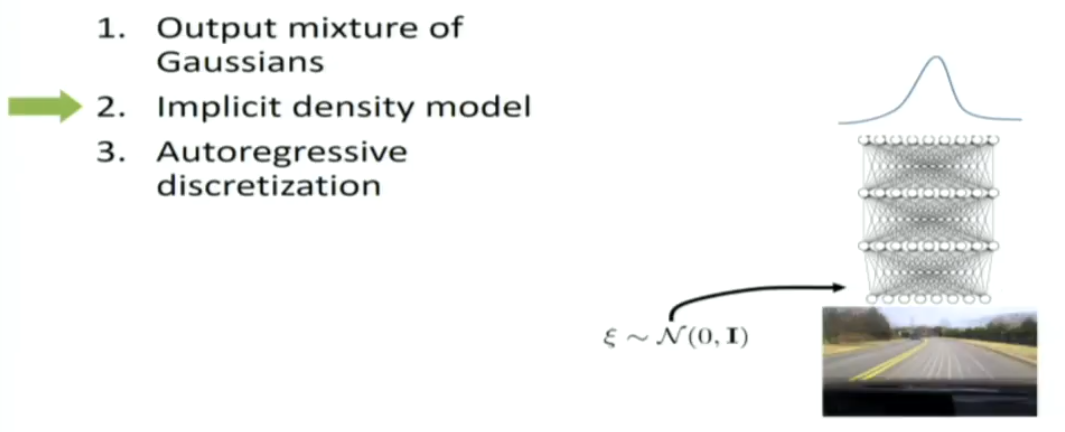

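Add a noise input to the network (a latent variable model): the network maps the observation plus random noise to an action, so different noise samples can land in different modes.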
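A minimal sketch of why a noise input helps, assuming a hypothetical fixed "generator" `f(o, z) = tanh(10 z)` in place of a trained network (it ignores the observation to keep things tiny). Feeding it Gaussian noise produces a bimodal action distribution, piled up near -1 and +1 with little mass near 0, something a single Gaussian output cannot represent.

```python
import math
import random

def generator(obs, z, sharpness=10.0):
    """Hypothetical stand-in for a trained network f(o, z); squashes noise toward -1 or +1."""
    return math.tanh(sharpness * z)

rng = random.Random(0)
samples = [generator(obs=None, z=rng.gauss(0.0, 1.0)) for _ in range(10_000)]

left = sum(a < -0.5 for a in samples) / len(samples)
right = sum(a > 0.5 for a in samples) / len(samples)
middle = 1.0 - left - right
print(f"left {left:.2f}, right {right:.2f}, middle {middle:.2f}")
```

In practice the generator is a neural network and training it is the hard part, which is where the methods mentioned next come in.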

The drawback is that implicit density models are harder to train.

Recommended reading: VAEs, GANs, and Stein variational gradient descent, three methods for training implicit density models.

Upside: can represent any form of distribution.

Downside: much more complex to implement.

With autoregressive discretization, the second network samples the next action dimension conditioned on the value sampled by the first network.
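A minimal sketch of autoregressive discretization for a 2-D action, assuming each dimension is discretized into three bins. The two networks are replaced by hypothetical hand-written tables: `first_net` gives p(a1 | o) and `second_net` gives p(a2 | o, a1), with an illustrative coupling where a2 takes the same sign as a1. Because a2 is sampled after a1, the joint distribution keeps two coupled modes instead of mixing them.

```python
import random

BINS = (-1.0, 0.0, 1.0)  # assumed discretization of each action dimension

def first_net(obs):
    """Hypothetical stand-in for network 1: p(a1 | obs) over BINS."""
    return [0.5, 0.0, 0.5]  # go left or right, never straight

def second_net(obs, a1):
    """Hypothetical stand-in for network 2: p(a2 | obs, a1) over BINS."""
    return [1.0, 0.0, 0.0] if a1 < 0 else [0.0, 0.0, 1.0]

def sample_action(obs, rng):
    a1 = rng.choices(BINS, weights=first_net(obs))[0]
    a2 = rng.choices(BINS, weights=second_net(obs, a1))[0]  # conditioned on the sampled a1
    return a1, a2

rng = random.Random(0)
samples = {sample_action(obs=None, rng=rng) for _ in range(100)}
print(sorted(samples))  # only the coupled modes (-1, -1) and (+1, +1) show up
```

Sampling each dimension independently from its marginal would also produce the mixed actions (-1, +1) and (+1, -1); conditioning is what rules them out.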

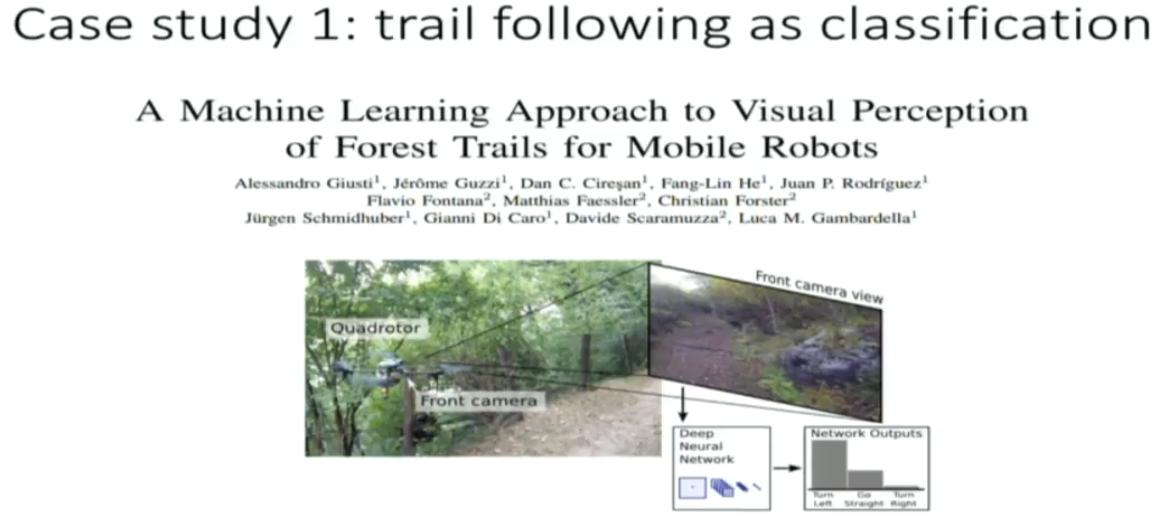

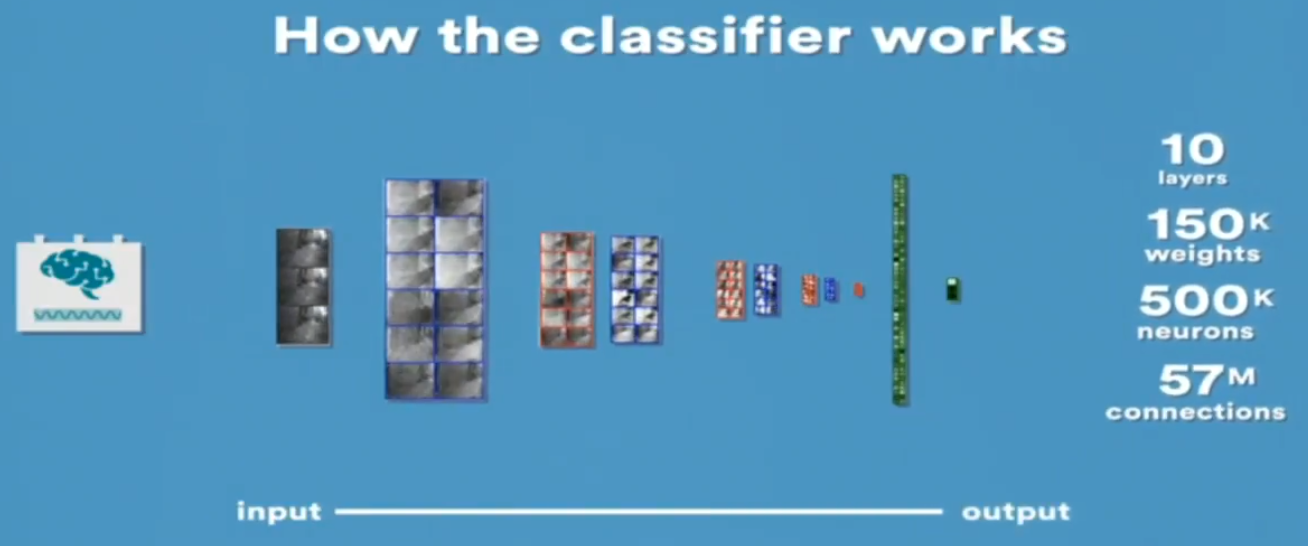

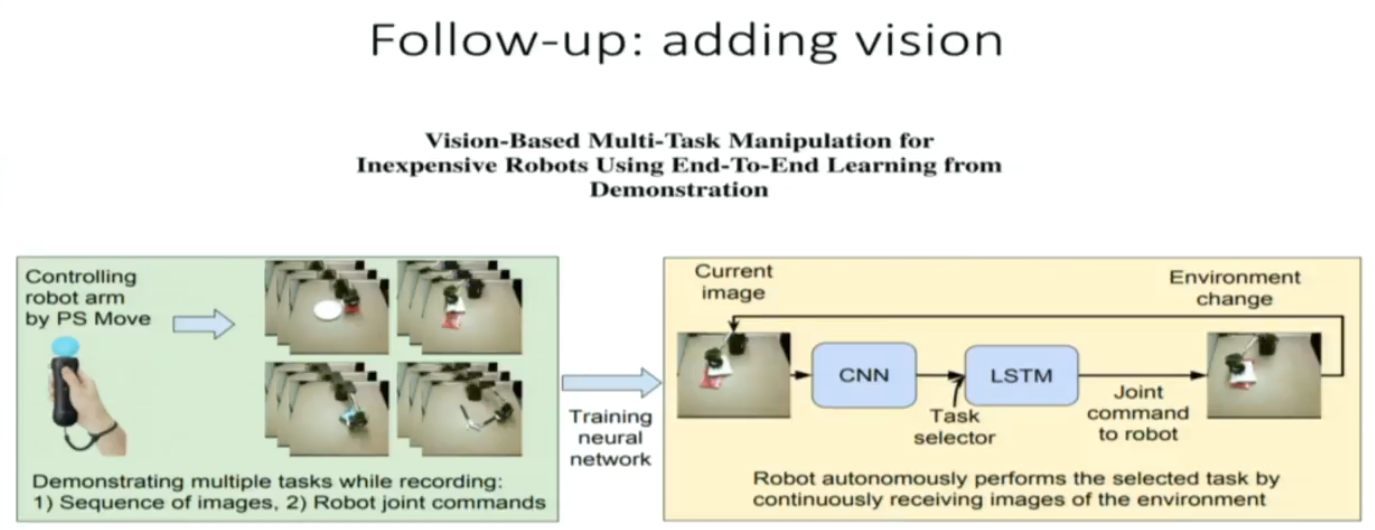

It's time for some case studies.

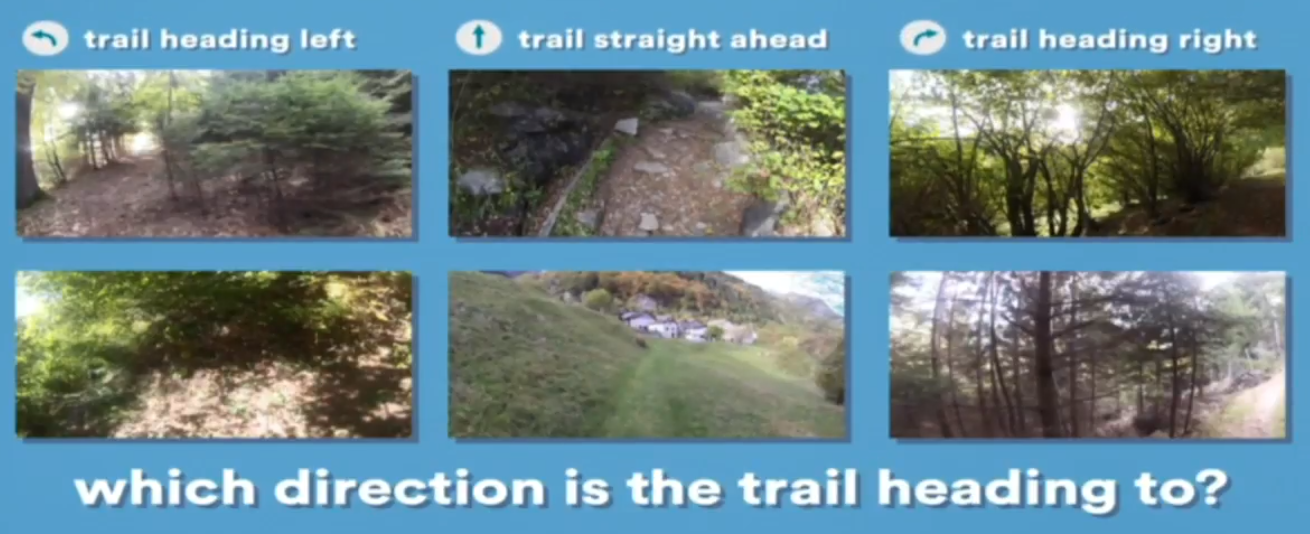

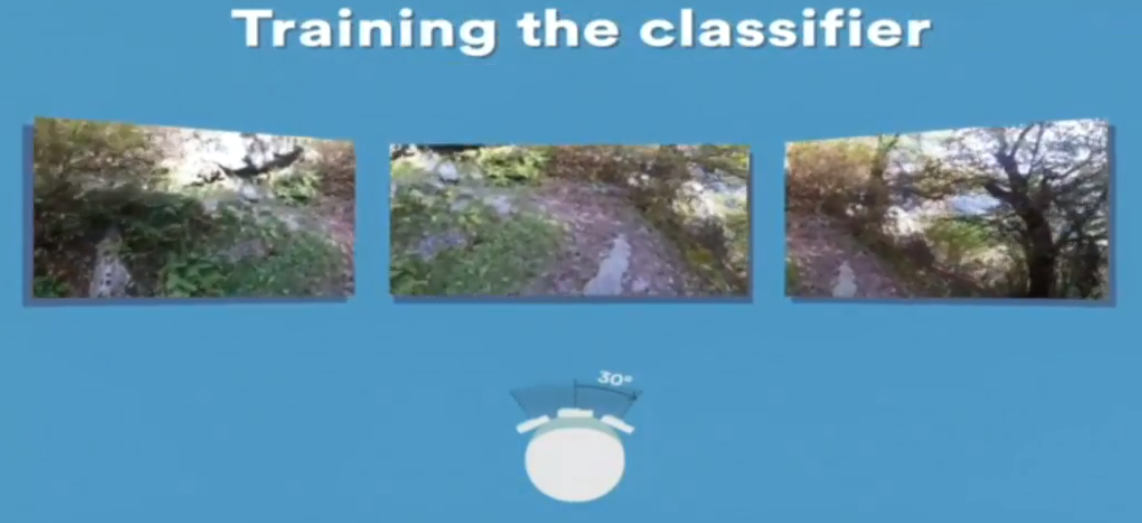

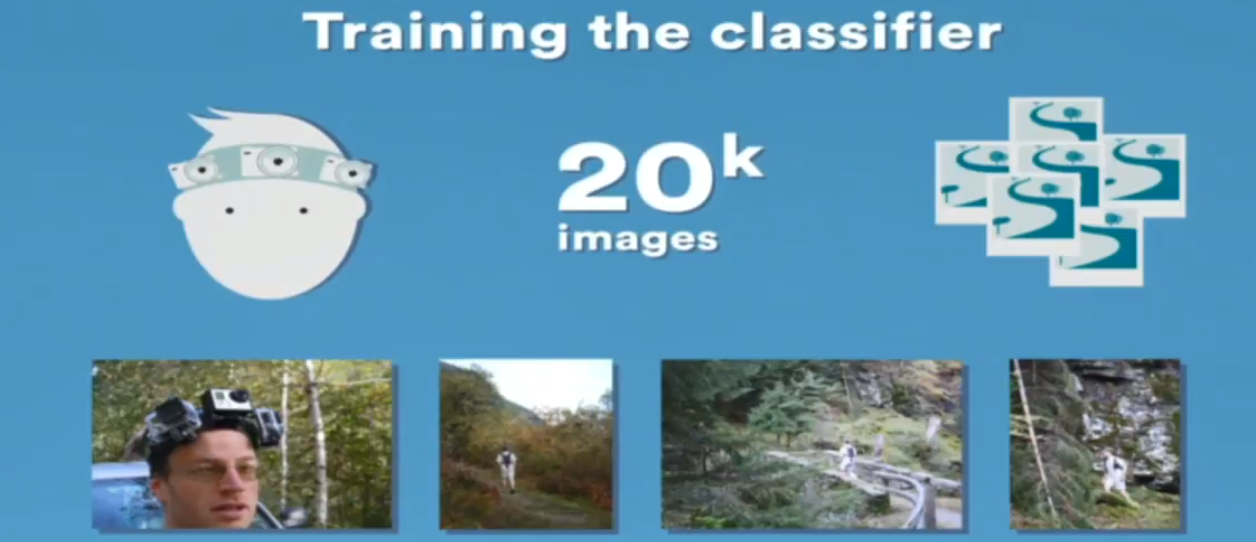

This is a human with three GoPro cameras on his head...

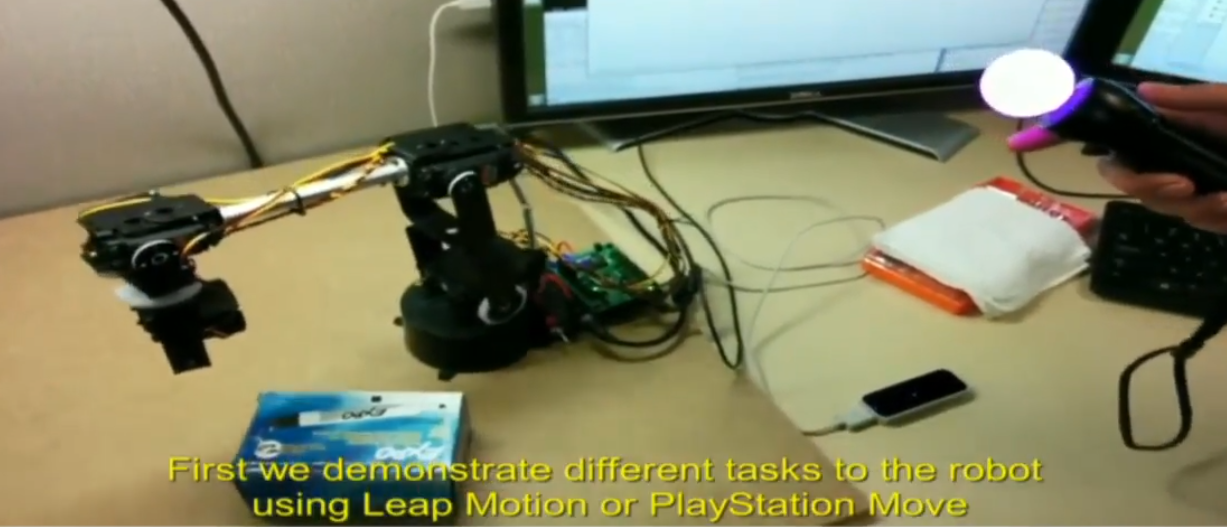

Robot: 300 bucks

Game controller: 100 bucks

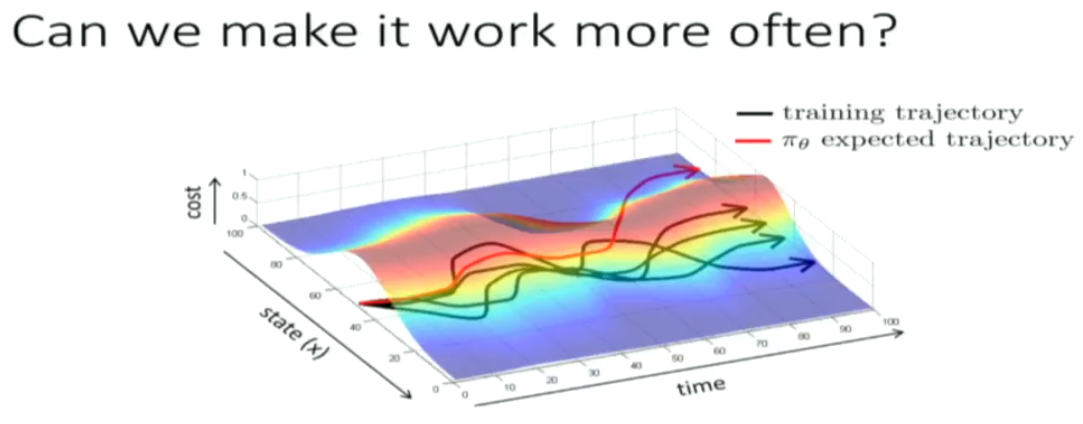

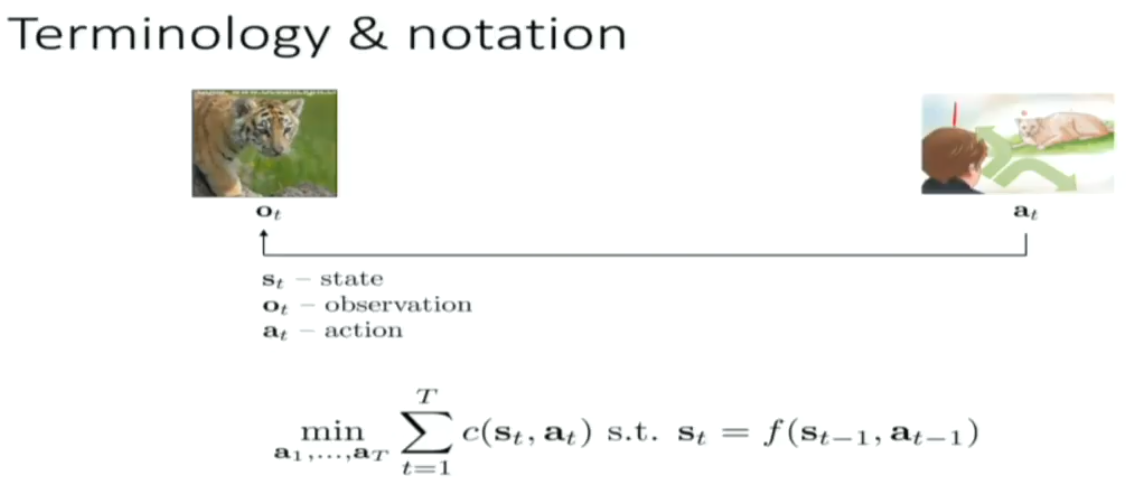

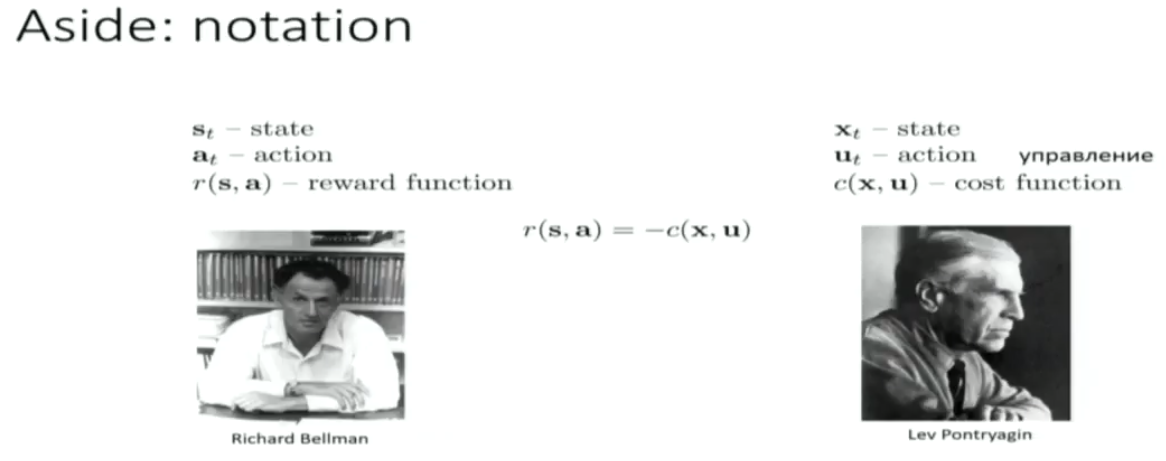

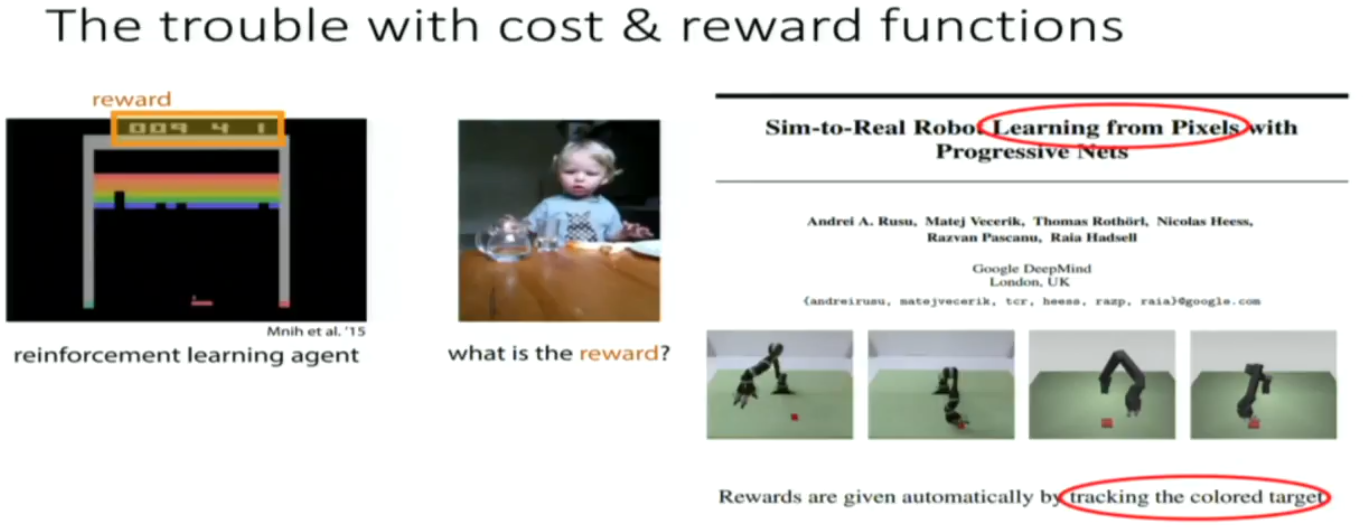

c for cost

r for reward (the negative of cost)
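In symbols, since reward is just negative cost, minimizing expected total cost and maximizing expected total reward select the same policy:

$$
r(\mathbf{s}_t, \mathbf{a}_t) = -c(\mathbf{s}_t, \mathbf{a}_t)
\quad\Longrightarrow\quad
\arg\min_{\theta}\, \mathbb{E}_{\pi_\theta}\Big[\sum_t c(\mathbf{s}_t, \mathbf{a}_t)\Big]
= \arg\max_{\theta}\, \mathbb{E}_{\pi_\theta}\Big[\sum_t r(\mathbf{s}_t, \mathbf{a}_t)\Big]
$$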

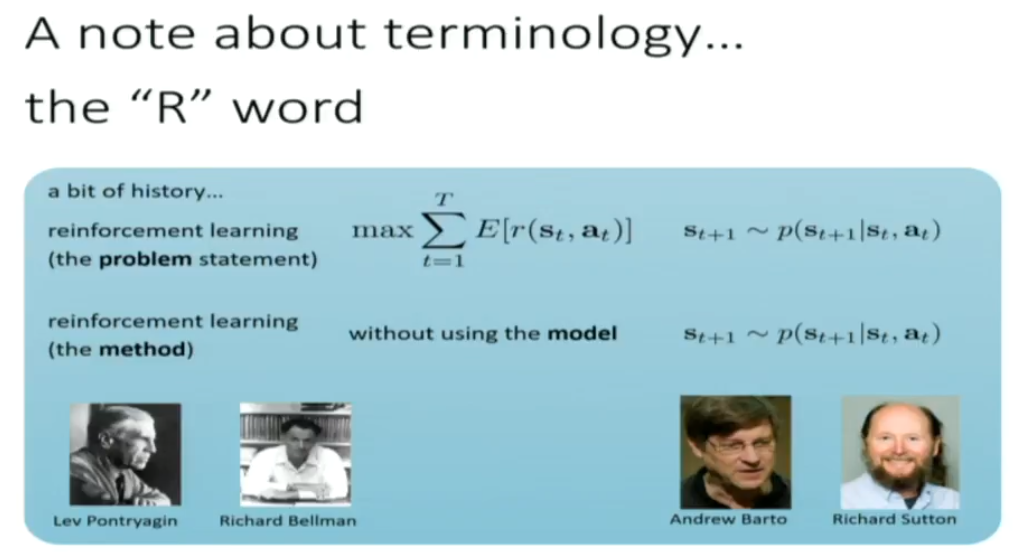

You know, there may be a bit of a cultural difference here: Americans like to believe life is about rewards, while Russians maybe think more pessimistically (in terms of costs).

HAhahahahahahaha....

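Reinforcement learning in CS tackles essentially the same problem as optimal control (solved with dynamic programming); mostly the notation differs: state $\mathbf{s}_t$, action $\mathbf{a}_t$, reward $r$ in RL versus state $\mathbf{x}_t$, control $\mathbf{u}_t$, cost $c$ in optimal control.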
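A small dynamic-programming sketch of that equivalence, on a made-up deterministic 4-state chain (state 3 is terminal; action 0 steps forward by 1 at cost 1, action 1 jumps forward by 2 at a hypothetical cost of 1.5 + 0.1·s). Value iteration that minimizes cost (optimal-control convention) and value iteration that maximizes reward r = -c (RL convention) return the same policy, with values that differ only in sign.

```python
STATES = (0, 1, 2, 3)
TERMINAL = 3
ACTIONS = (0, 1)  # 0 = step forward by 1, 1 = jump forward by 2

def transition(s, a):
    """Deterministic dynamics: move 1 or 2 states right, stopping at the terminal state."""
    return min(s + 1 + a, TERMINAL)

def cost(s, a):
    """Made-up cost: stepping costs 1, jumping costs 1.5 + 0.1 * s."""
    return 1.0 if a == 0 else 1.5 + 0.1 * s

def reward(s, a):
    """RL convention: reward is the negative of cost."""
    return -cost(s, a)

def value_iteration(stage_value, better, sweeps=10):
    """In-place Bellman backups; `better` is min for costs and max for rewards."""
    V = {s: 0.0 for s in STATES}  # terminal value stays 0
    for _ in range(sweeps):
        for s in STATES:
            if s != TERMINAL:
                V[s] = better(stage_value(s, a) + V[transition(s, a)] for a in ACTIONS)
    policy = {
        s: better(ACTIONS, key=lambda a: stage_value(s, a) + V[transition(s, a)])
        for s in STATES
        if s != TERMINAL
    }
    return V, policy

V_cost, pi_cost = value_iteration(cost, min)   # optimal control: minimize cost
V_rew, pi_rew = value_iteration(reward, max)   # RL: maximize reward
print(pi_cost, pi_rew)      # {0: 1, 1: 1, 2: 0} {0: 1, 1: 1, 2: 0}
print(V_cost[0], V_rew[0])  # 2.5 -2.5
```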