6.1 hadoop logs

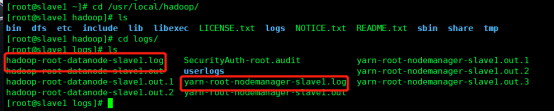

Master node

Slave node

6.2 hadoop troubleshooting

(to be added)

6.3 spark

6.4 zookeeper

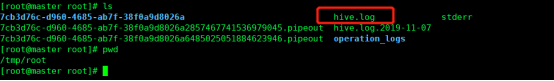

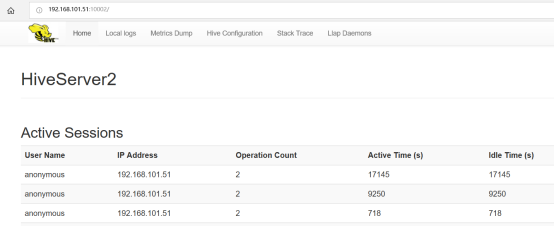

6.5 hive

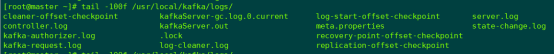

6.6 kafka

7 Restart commands

7.1 hadoop

a. dfs start/stop

# start-dfs.sh

# stop-dfs.sh

or

# /usr/local/hadoop/sbin/start-dfs.sh

# /usr/local/hadoop/sbin/stop-dfs.sh

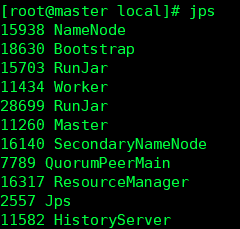

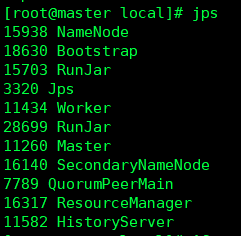

After it finishes, jps shows the DataNode, NameNode and SecondaryNameNode processes.

Port 9870 (NameNode web UI) is open.
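You can confirm the daemons with a script instead of reading the jps output by eye. The helper below compares `jps` output against a list of expected names. This is a minimal sketch. `check_jps` is a hypothetical name, and the helper assumes bash and the default `<pid> <MainClass>` line format that `jps` prints.

```shell
#!/usr/bin/env bash
# check_jps: verify that every expected JVM main class appears in `jps` output.
# Reads the jps output from stdin so it can be fed live or captured output.
# Usage (hypothetical helper): jps | check_jps NameNode DataNode SecondaryNameNode
check_jps() {
  local output name missing=0
  output=$(cat)
  for name in "$@"; do
    # jps prints "<pid> <MainClass>"; compare the class name in field 2 exactly
    if ! awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' <<< "$output"; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

On a multi-node cluster, run the helper on each host and pass only the daemons that host should carry, for example `jps | check_jps DataNode` on a slave node.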

b. yarn start/stop

# start-yarn.sh

# stop-yarn.sh

or

# /usr/local/hadoop/sbin/start-yarn.sh

# /usr/local/hadoop/sbin/stop-yarn.sh

After it finishes, jps shows the ResourceManager and NodeManager processes. NodeManager runs on every node listed in the workers file.

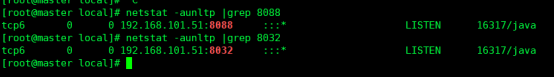

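Ports 8088 (ResourceManager web UI), 8032 (ResourceManager client RPC) and others are open.

Note: ZooKeeper must be started before YARN.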

c. hdfs safe mode

Check the current mode:

hdfs dfsadmin -safemode get

Enter safe mode:

hdfs dfsadmin -safemode enter

Leave safe mode:

hdfs dfsadmin -safemode leave
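Scripts that write to HDFS right after startup can fail while the NameNode is still in safe mode. `hdfs dfsadmin -safemode wait` blocks until safe mode is off. If you only want to test the current state, you can parse the `get` output instead. This is a minimal sketch. It assumes the output contains the text `Safe mode is ON` or `Safe mode is OFF`, and `safemode_is_on` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# safemode_is_on: read `hdfs dfsadmin -safemode get` output from stdin.
# Returns 0 if any line reports safe mode ON, otherwise 1. With NameNode HA,
# one line is printed per NameNode, so every line is checked.
safemode_is_on() {
  grep -q 'Safe mode is ON'
}

# Example (requires a running cluster):
#   if hdfs dfsadmin -safemode get | safemode_is_on; then
#     echo "HDFS is still in safe mode (read-only)"
#   fi
```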

7.2 zookeeper

# /usr/local/zookeeper/bin/zkServer.sh start

# /usr/local/zookeeper/bin/zkServer.sh stop

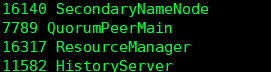

After it finishes, jps shows the QuorumPeerMain process.

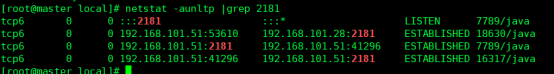

Port 2181 (ZooKeeper client port) is open.
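`zkServer.sh status` reports the server's role. The role tells you whether the ensemble actually formed, not just whether the JVM is running. Below is a sketch that extracts the role. It assumes the status output contains a `Mode: <role>` line, as recent ZooKeeper releases print, and `zk_mode` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# zk_mode: extract the server role from `zkServer.sh status` output on stdin.
# Prints the role (e.g. standalone, leader, follower). Returns 1 when no
# "Mode:" line is present, e.g. when the server is not running.
zk_mode() {
  local mode
  mode=$(sed -n 's/^Mode: *//p' | head -n 1)
  [ -n "$mode" ] || return 1
  echo "$mode"
}

# Example (requires ZooKeeper installed at the path used above):
#   /usr/local/zookeeper/bin/zkServer.sh status 2>&1 | zk_mode
```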

7.3 spark

/usr/local/spark/sbin/start-all.sh

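After it finishes, jps shows the Worker and Master processes.

/usr/local/spark/sbin/start-history-server.sh

After it finishes, jps shows the HistoryServer process. Its web UI listens on port 18080:

netstat -anltup | grep 18080

Note: run Hadoop's start-dfs.sh first, then Spark's start-all.sh, and only then start-history-server.sh.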
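A wrapper that stops at the first failed step can enforce the order in the note above. This is a sketch and not part of Spark or Hadoop. `run_in_order` and the `DRY_RUN` variable are hypothetical, and each step runs through `bash -c` so it can carry its own arguments.

```shell
#!/usr/bin/env bash
# run_in_order: run each command string in sequence and stop at the first
# failure, so later services never start on top of a failed dependency.
# Set DRY_RUN=1 to print the commands without running them.
run_in_order() {
  local step
  for step in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "would run: $step"
      continue
    fi
    echo "running: $step"
    if ! bash -c "$step"; then
      echo "failed: $step" >&2
      return 1
    fi
  done
}

# Paths follow the install locations used in this document:
#   run_in_order \
#     /usr/local/hadoop/sbin/start-dfs.sh \
#     /usr/local/spark/sbin/start-all.sh \
#     /usr/local/spark/sbin/start-history-server.sh
```

Stopping early matters here because a history server whose event log directory sits on HDFS cannot read its logs until HDFS is up. That dependency is an assumption about this cluster's `spark.history.fs.logDirectory` setting.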

7.4 hive

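Start in the background:

# nohup hive --service hiveserver2 &

jps now shows a RunJar process.

Port 10002 serves the HiveServer2 web UI. beeline and other JDBC clients connect on port 10000 by default.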
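Plain `nohup ... &` writes output to `nohup.out` in whatever directory you launched from and keeps no record of the PID. Below is a sketch of a launcher that sets both explicitly. `start_bg` and the log/PID paths in the example are hypothetical.

```shell
#!/usr/bin/env bash
# start_bg: start a long-running command detached from the terminal with nohup,
# send stdout and stderr to a log file, and save the background job's PID.
# Usage: start_bg <logfile> <pidfile> <command> [args...]
start_bg() {
  local log=$1 pid=$2
  shift 2
  nohup "$@" >"$log" 2>&1 &
  echo $! >"$pid"
}

# Example with the HiveServer2 command above (paths are hypothetical):
#   start_bg /tmp/hiveserver2.log /tmp/hiveserver2.pid hive --service hiveserver2
#   tail -f /tmp/hiveserver2.log
```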

8 Start on boot

Append the following lines to /etc/rc.local (vim /etc/rc.local):

source /etc/profile

/usr/local/zookeeper/bin/zkServer.sh start

/usr/local/hadoop/sbin/start-all.sh

/usr/local/kafka/bin/kafka-server-start.sh -daemon /usr/local/kafka/config/server.properties

/usr/local/spark/sbin/start-all.sh

/usr/local/spark/sbin/start-history-server.sh

nohup hive --service hiveserver2 &

Then make the file executable:

chmod +x /etc/rc.local
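rc.local fires each command immediately, so ZooKeeper may not yet accept connections when the Hadoop start script brings up YARN. Below is a minimal sketch of a helper that polls a port before continuing. It assumes bash (rc.local's shebang must then be bash) and coreutils `timeout`. `wait_for_port` is a hypothetical name.

```shell
#!/usr/bin/env bash
# wait_for_port: poll host:port until a TCP connection succeeds or the retries
# run out. Uses bash's /dev/tcp redirection, so netstat/nc are not required.
# Usage: wait_for_port <host> <port> [retries] [interval_seconds]
wait_for_port() {
  local host=$1 port=$2 retries=${3:-30} interval=${4:-2} i
  for ((i = 1; i <= retries; i++)); do
    # timeout guards against hosts that silently drop packets
    if timeout 2 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    sleep "$interval"
  done
  echo "timed out waiting for $host:$port" >&2
  return 1
}

# Example for rc.local: wait for ZooKeeper before starting HDFS and YARN.
#   /usr/local/zookeeper/bin/zkServer.sh start
#   wait_for_port localhost 2181 || exit 1
#   /usr/local/hadoop/sbin/start-all.sh
```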