1. Download the images

Pull the images on every node:

    docker pull kibana:5.6.4
    docker tag kibana:5.6.4 docker.elastic.co/kibana/kibana:5.6.4
    docker pull dotbalo/fluentd-elasticsearch:v2.0.4
    docker pull dotbalo/elasticsearch:v5.6.4
    docker tag dotbalo/fluentd-elasticsearch:v2.0.4 k8s.gcr.io/fluentd-elasticsearch:v2.0.4
    docker tag dotbalo/elasticsearch:v5.6.4 k8s.gcr.io/elasticsearch:v5.6.4
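Since the same pull-and-retag pattern repeats for each image, it can be wrapped in a small loop. The following is only a sketch for bash: the `DOCKER` variable and the `pull_and_tag` function are hypothetical names introduced here, and by default the script only prints the commands (a dry run). Set `DOCKER=docker` to execute them.

```shell
#!/usr/bin/env bash
# Sketch: pull each source image and retag it under the name the manifests expect.
# DOCKER is a hypothetical override hook; the default "echo docker" is a dry run.
DOCKER="${DOCKER:-echo docker}"

# "source target" pairs, taken from the commands in this step.
IMAGES="
kibana:5.6.4 docker.elastic.co/kibana/kibana:5.6.4
dotbalo/fluentd-elasticsearch:v2.0.4 k8s.gcr.io/fluentd-elasticsearch:v2.0.4
dotbalo/elasticsearch:v5.6.4 k8s.gcr.io/elasticsearch:v5.6.4
"

pull_and_tag() {
  # Read one pair per line and skip blank lines.
  while read -r src dst; do
    [ -z "$src" ] && continue
    # $DOCKER is intentionally unquoted so "echo docker" splits into two words.
    $DOCKER pull "$src" </dev/null || return 1
    $DOCKER tag "$src" "$dst" </dev/null || return 1
  done <<EOF
$IMAGES
EOF
}

pull_and_tag
```

Running it as `DOCKER=docker bash retag.sh` on each node (the file name is arbitrary) performs the actual pulls.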

On master01, download the matching Kubernetes release package:

wget https://github.com/kubernetes/kubernetes/releases/download/v1.11.1/kubernetes.tar.gz

Label the nodes:

    [root@k8s-master01 fluentd-elasticsearch]# kubectl label node beta.kubernetes.io/fluentd-ds-ready=true --all
    node/k8s-master01 labeled
    node/k8s-master02 labeled
    node/k8s-master03 labeled
    node/k8s-node01 labeled
    node/k8s-node02 labeled
    [root@k8s-master01 fluentd-elasticsearch]# kubectl get nodes --show-labels | grep beta.kubernetes.io/fluentd-ds-ready
    k8s-master01   Ready   master   1d   v1.11.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/fluentd-ds-ready=true,beta.kubernetes.io/os=linux,kubernetes.io/hostname=k8s-master01,node-role.kubernetes.io/master=
    k8s-master02   Ready   master   1d   v1.11.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/fluentd-ds-ready=true,beta.kubernetes.io/os=linux,kubernetes.io/hostname=k8s-master02,node-role.kubernetes.io/master=
    k8s-master03   Ready   master   1d   v1.11.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/fluentd-ds-ready=true,beta.kubernetes.io/os=linux,kubernetes.io/hostname=k8s-master03,node-role.kubernetes.io/master=
    k8s-node01     Ready   <none>   7h   v1.11.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/fluentd-ds-ready=true,beta.kubernetes.io/os=linux,kubernetes.io/hostname=k8s-node01
    k8s-node02     Ready   <none>   7h   v1.11.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/fluentd-ds-ready=true,beta.kubernetes.io/os=linux,kubernetes.io/hostname=k8s-node02
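Grepping for the label shows which nodes have it, but not which ones are missing it. A small filter can report the opposite. This is a sketch: `missing_label` is a hypothetical helper name, and it assumes the labels are the last column of `kubectl get nodes --show-labels`, as in the output above.

```shell
# Sketch: print the names of nodes that do NOT carry the fluentd label.
# Reads `kubectl get nodes --show-labels` output on stdin.
missing_label() {
  awk -v want="beta.kubernetes.io/fluentd-ds-ready=true" '
    NR == 1 && $1 == "NAME" { next }     # skip the header row
    NF == 0 { next }                     # skip blank lines
    index($NF, want) == 0 { print $1 }   # labels are the last column
  '
}

# Usage on a live cluster:
#   kubectl get nodes --show-labels | missing_label
```

If the command prints nothing, every node is labeled and the fluentd DaemonSet can be scheduled on all of them.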

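2. Persistent volumes

NFS is used as the backend storage here; NAS, GFS, and Ceph work the same way.

Create the Elasticsearch storage directories on the NFS server (with dynamic provisioning the PVs are created automatically, so this step is not needed):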

    [root@nfs es]# pwd
    /nfs/es
    [root@nfs es]# mkdir es{0..2}

Three ES instances need three directories; add one directory per additional instance.

Mount test:

    # On all k8s nodes
    yum install nfs-utils -y
    # Mount
    [root@k8s-master01 ~]# mount -t nfs 192.168.2.2:/nfs/es/es0 /mnt/
    # If the mount works, unmount it
    [root@k8s-master01 ~]# umount /mnt/

3. Create the cluster

Create the ES cluster with static PVs. The manifests are available at https://github.com/dotbalo/k8s/

    [root@k8s-master01 efk-static]# ls
    efk-namespaces.yaml  es2-pv.yaml      fluentd-es-configmap.yaml  kibana-svc.yaml
    es0-pv.yaml          es-service.yaml  fluentd-es-ds.yaml         README.md
    es1-pv.yaml          es-ss.yaml       kibana-deployment.yaml
    [root@k8s-master01 efk-static]# kubectl apply -f .
    [root@k8s-master01 efk-static]# kubectl apply -f .
    namespace/logging configured
    service/elasticsearch-logging created
    serviceaccount/elasticsearch-logging created
    clusterrole.rbac.authorization.k8s.io/elasticsearch-logging configured
    clusterrolebinding.rbac.authorization.k8s.io/elasticsearch-logging configured
    statefulset.apps/elasticsearch-logging created
    persistentvolume/pv-es-0 configured
    persistentvolume/pv-es-1 configured
    persistentvolume/pv-es-2 configured
    configmap/fluentd-es-config-v0.1.1 created
    serviceaccount/fluentd-es created
    clusterrole.rbac.authorization.k8s.io/fluentd-es configured
    clusterrolebinding.rbac.authorization.k8s.io/fluentd-es configured
    daemonset.apps/fluentd-es-v2.0.2 created
    deployment.apps/kibana-logging created
    service/kibana-logging created
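The contents of the `esN-pv.yaml` files are not reproduced in this post, so the following is only a sketch of what a static NFS-backed PV for this setup might look like, not the repository's actual manifest. The NFS address and paths come from the mount test in step 2; the capacity, access mode, reclaim policy, and storage class mirror what `kubectl get pv` reports for these volumes. The `render_pv` and `render_all_pvs` helper names and the `NFS_SERVER`/`NFS_ROOT` variables are hypothetical.

```shell
# Sketch: generate one static PersistentVolume per NFS directory (es0..es2).
# Values are assumptions reconstructed from this post, not the repo's files.
NFS_SERVER="${NFS_SERVER:-192.168.2.2}"
NFS_ROOT="${NFS_ROOT:-/nfs/es}"

render_pv() {
  # $1 is the instance index (0, 1, 2, ...).
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-es-$1
spec:
  capacity:
    storage: 4Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: es-storage-class
  nfs:
    server: ${NFS_SERVER}
    path: ${NFS_ROOT}/es$1
EOF
}

render_all_pvs() {
  for i in 0 1 2; do
    # Separate the YAML documents with '---'.
    if [ "$i" -gt 0 ]; then echo '---'; fi
    render_pv "$i"
  done
}

render_all_pvs
```

The output can be reviewed first and then piped to `kubectl apply -f -`. A PVC created by the StatefulSet binds to one of these PVs only if its storage class, access mode, and requested size are compatible.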

Check the PVs, PVCs, and pods:

    [root@k8s-master01 efk-static]# kubectl get pv
    NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                                                   STORAGECLASS       REASON   AGE
    pv-es-0   4Gi        RWX            Recycle          Bound       logging/elasticsearch-logging-elasticsearch-logging-1   es-storage-class            59m
    pv-es-1   4Gi        RWX            Recycle          Available                                                           es-storage-class            59m
    pv-es-2   4Gi        RWX            Recycle          Bound       logging/elasticsearch-logging-elasticsearch-logging-0   es-storage-class            59m
    [root@k8s-master01 efk-static]# kubectl get pvc -n logging
    NAME                                            STATUS   VOLUME    CAPACITY   ACCESS MODES   STORAGECLASS       AGE
    elasticsearch-logging-elasticsearch-logging-0   Bound    pv-es-2   4Gi        RWX            es-storage-class   1h
    elasticsearch-logging-elasticsearch-logging-1   Bound    pv-es-0   4Gi        RWX            es-storage-class   1h

    [root@k8s-master01 ~]# kubectl get pods -n logging
    NAME                             READY   STATUS    RESTARTS   AGE
    elasticsearch-logging-0          1/1     Running   2          3h
    elasticsearch-logging-1          1/1     Running   0          3h
    fluentd-es-v2.0.4-5jqhw          1/1     Running   0          10m
    fluentd-es-v2.0.4-fw5gk          1/1     Running   0          10m
    fluentd-es-v2.0.4-hm2tc          1/1     Running   0          10m
    fluentd-es-v2.0.4-nqqtm          1/1     Running   0          10m
    fluentd-es-v2.0.4-r5fgh          1/1     Running   0          10m
    kibana-logging-677854568-l6pvc   1/1     Running   0          3h
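With more pods than this, scanning the list by eye gets tedious. As a sketch, a filter like the one below prints only the pods that are not fully ready. `not_ready_pods` is a hypothetical helper name, and it assumes the default `kubectl get pods` column order shown above (NAME, READY, STATUS).

```shell
# Sketch: print pods whose READY count is incomplete or whose STATUS is not Running.
# Reads `kubectl get pods` output on stdin.
not_ready_pods() {
  awk '
    NR == 1 && $1 == "NAME" { next }   # skip the header row
    NF == 0 { next }                   # skip blank lines
    {
      split($2, r, "/")                # READY looks like "1/1"
      if (r[1] != r[2] || $3 != "Running") print $1
    }
  '
}

# Usage on a live cluster:
#   kubectl get pods -n logging | not_ready_pods
```

An empty result means every pod in the namespace is running with all of its containers ready.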

4. Create the Ingress for Kibana

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: kibana
      namespace: logging
      annotations:
        kubernetes.io/ingress.class: traefik
    spec:
      rules:
      - host: kibana.net
        http:
          paths:
          - backend:
              serviceName: kibana-logging
              servicePort: 5601
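Before adding `kibana.net` to DNS or a hosts file, the routing can be checked by sending the Host header directly to the ingress controller. This is a sketch under assumptions: it presumes Traefik is reachable over plain HTTP on port 80 at the address you pass in, and `ingress_status`, `KIBANA_HOST`, and the `CURL` override are hypothetical names introduced for illustration.

```shell
# Sketch: print the HTTP status code returned for the Kibana host rule.
# $1 is the address where the Traefik ingress controller listens (assumed port 80).
KIBANA_HOST="${KIBANA_HOST:-kibana.net}"

ingress_status() {
  # CURL can be overridden for testing; it defaults to the real curl.
  "${CURL:-curl}" -s -o /dev/null -w '%{http_code}\n' --max-time 5 \
    -H "Host: ${KIBANA_HOST}" "http://$1/"
}

# Usage, with the address of a node running Traefik:
#   ingress_status <traefik-node-ip>
```

A 2xx or 3xx code suggests the request reached Kibana through the ingress; a 404 usually means the controller did not match the host rule.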

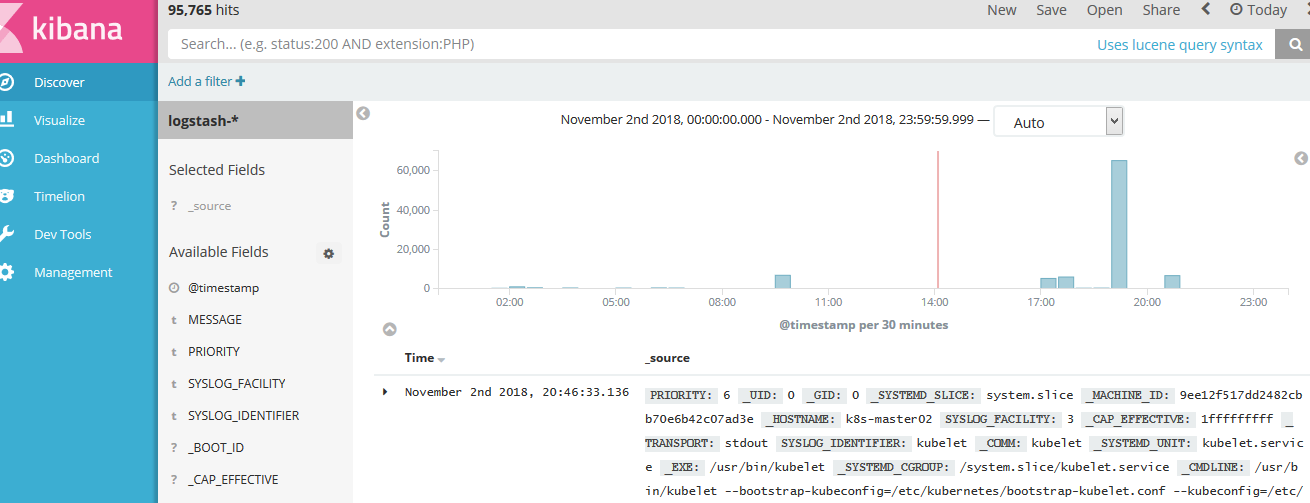

Open Kibana and create an index pattern.
