https://github.com/kata-containers/runtime/issues/1876

Plugin extensions

https://m.php.cn/manual/view/36157.html

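Network driver plugins and swarm mode

Docker 1.12 added support for cluster management and orchestration, called swarm mode. Docker Engine running in swarm mode currently supports only the built-in overlay network driver. Existing network plugins therefore do not work in swarm mode.

When you run Docker Engine outside of swarm mode, all network plugins that worked in Docker 1.11 continue to work. They do not require any modification.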

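Using network driver plugins

How you install and run a network driver plugin depends on the particular plugin, so be sure to install it according to the instructions from the plugin developer.

Once it is running, however, a network driver plugin is used just like the built-in network drivers: by naming it as the driver in network-oriented Docker commands. For example,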

$ docker network create --driver weave mynet

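Some network driver plugins are listed in the Docker documentation's list of plugins.

The mynet network is now owned by weave, so subsequent commands referring to that network are sent to the plugin: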

$ docker run --network=mynet busybox top

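Writing a network plugin

Network plugins implement the Docker plugin API and the network plugin protocol.

Network plugin protocol

The network driver protocol, apart from the plugin activation call, is documented as part of libnetwork: https://github.com/docker/libnetwork/blob/master/docs/remote.md.

To interact with the Docker maintainers and other interested users, see the IRC channel #docker-network.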

- Docker networks feature overview

- The LibNetwork project

How to use this plugin

- Build this plugin:

$ go build

- Ensure that your plugin is discoverable (https://docs.docker.com/engine/extend/plugin_api/#/plugin-discovery):

$ sudo cp sriov.json /etc/docker/plugins

- Start the plugin:

$ sudo ./sriov &

{ "Name": "sriov", "Addr": "http://127.0.0.1:9599" }
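The discovery file above carries only a plugin name and an address. As a rough sketch of how such a file could be sanity-checked before copying it into /etc/docker/plugins, the snippet below decodes it and verifies that Addr is an absolute URL. The `PluginSpec` type and `parsePluginSpec` helper are hypothetical names for this illustration, not part of Docker or the SR-IOV plugin, and the URL check is an assumption about what a reasonable spec looks like rather than Docker's own validation.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/url"
)

// PluginSpec mirrors the two fields that appear in sriov.json.
// (Hypothetical type for this sketch.)
type PluginSpec struct {
	Name string `json:"Name"`
	Addr string `json:"Addr"`
}

// parsePluginSpec decodes a JSON plugin spec and applies two checks that
// are assumptions of this sketch: Name is non-empty and Addr is an
// absolute URL with a host.
func parsePluginSpec(data []byte) (PluginSpec, error) {
	var spec PluginSpec
	if err := json.Unmarshal(data, &spec); err != nil {
		return PluginSpec{}, fmt.Errorf("decode spec: %w", err)
	}
	if spec.Name == "" {
		return PluginSpec{}, fmt.Errorf("spec has no Name")
	}
	u, err := url.Parse(spec.Addr)
	if err != nil {
		return PluginSpec{}, fmt.Errorf("parse Addr: %w", err)
	}
	if u.Scheme == "" || u.Host == "" {
		return PluginSpec{}, fmt.Errorf("Addr %q is not an absolute URL", spec.Addr)
	}
	return spec, nil
}

func main() {
	raw := []byte(`{ "Name": "sriov", "Addr": "http://127.0.0.1:9599" }`)
	spec, err := parsePluginSpec(raw)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%s -> %s\n", spec.Name, spec.Addr)
}
```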

    func handlerPluginActivate(w http.ResponseWriter, r *http.Request) {
        _, _ = getBody(r)
        //TODO: Where is this encoding?
        resp := `{ "Implements": ["NetworkDriver"] }`
        fmt.Fprintf(w, "%s", resp)
    }
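The activation handshake can be exercised without a Docker daemon. The sketch below registers a handler on /Plugin.Activate and then plays the daemon's side in-process with net/http/httptest. `newPluginMux` and `activateLocally` are hypothetical helpers written for this note; the actual plugin uses its own `getBody` helper and router, which are not reproduced here.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// activateResponse is the JSON body returned from /Plugin.Activate.
type activateResponse struct {
	Implements []string `json:"Implements"`
}

// newPluginMux (hypothetical) wires up only the activation endpoint.
func newPluginMux() *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc("/Plugin.Activate", func(w http.ResponseWriter, r *http.Request) {
		// Drain the request body; this sketch does not use its contents.
		_, _ = io.Copy(io.Discard, r.Body)
		w.Header().Set("Content-Type", "application/vnd.docker.plugins.v1+json")
		_ = json.NewEncoder(w).Encode(activateResponse{Implements: []string{"NetworkDriver"}})
	})
	return mux
}

// activateLocally (hypothetical) sends the activation request straight to
// the handler, with no socket involved, and decodes the reply.
func activateLocally(h http.Handler) ([]string, error) {
	req := httptest.NewRequest(http.MethodPost, "/Plugin.Activate", nil)
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	if rec.Code != http.StatusOK {
		return nil, fmt.Errorf("unexpected status %d", rec.Code)
	}
	var out activateResponse
	if err := json.NewDecoder(rec.Body).Decode(&out); err != nil {
		return nil, err
	}
	return out.Implements, nil
}

func main() {
	impl, err := activateLocally(newPluginMux())
	if err != nil {
		fmt.Println("activation failed:", err)
		return
	}
	fmt.Println("plugin implements:", impl)
}
```

If the plugin is not running, or its discovery file is missing, the daemon cannot complete this handshake, which would be consistent with the `plugin "sriov" not found` error recorded further down.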

The following example launches a Kata Containers container using SR-IOV:

- Build and start SR-IOV plugin:

To install the SR-IOV plugin, follow the SR-IOV plugin installation instructions

- Create the docker network:

$ sudo docker network create -d sriov --internal --opt pf_iface=enp1s0f0 --opt vlanid=100 --subnet=192.168.0.0/24 vfnet
E0505 09:35:40.550129 2541 plugin.go:297] Numvfs and Totalvfs are not same on the PF - Initialize numvfs to totalvfs
ee2e5a594f9e4d3796eda972f3b46e52342aea04cbae8e5eac9b2dd6ff37b067

The previous commands create the required SR-IOV docker network, subnet, vlanid, and physical interface.

- Start containers and test their connectivity:

$ sudo docker run --runtime=kata-runtime --net=vfnet --cap-add SYS_ADMIN --ip=192.168.0.10 -it alpine

The previous example starts a container making use of SR-IOV. If two machines with SR-IOV enabled NICs are connected back-to-back and each has a network with matching vlanid created, use the following two commands to test the connectivity:

Machine 1:

sriov-1:~$ sudo docker run --runtime=kata-runtime --net=vfnet --cap-add SYS_ADMIN --ip=192.168.0.10 -it mcastelino/iperf bash -c "mount -t ramfs -o size=20M ramfs /tmp; iperf3 -s"

Machine 2:

sriov-2:~$ sudo docker run --runtime=kata-runtime --net=vfnet --cap-add SYS_ADMIN --ip=192.168.0.11 -it mcastelino/iperf iperf3 -c 192.168.0.10 bash -c "mount -t r

NetworkDriver

docker run -it --runtime=kata-runtime --rm --device /dev/binder59:/dev/binder59 --net=vfnet debian /bin/bash
docker: Error response from daemon: network vfnet not found.

docker network create vfnet

docker network rm vfnet

docker network ls

docker network create -d sriov --internal --opt pf_iface=enp1s0f0 --opt vlanid=100 --subnet=192.168.0.0/24 vfnet
Error response from daemon: plugin "sriov" not found

root@ubuntu:/opt/gopath/src/github.com/kata-containers/tests/.ci# docker run -it --runtime=kata-runtime --rm --network none debian /bin/bash
root@577ea2c62520:/# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
root@577ea2c62520:/#

    // startVM starts the VM.
    func (s *Sandbox) startVM() (err error) {
        span, ctx := s.trace("startVM")
        defer span.Finish()

        s.Logger().Info("Starting VM")

        if err := s.network.Run(s.networkNS.NetNsPath, func() error {
            if s.factory != nil {
                vm, err := s.factory.GetVM(ctx, VMConfig{
                    HypervisorType:   s.config.HypervisorType,
                    HypervisorConfig: s.config.HypervisorConfig,
                    AgentType:        s.config.AgentType,
                    AgentConfig:      s.config.AgentConfig,
                    ProxyType:        s.config.ProxyType,
                    ProxyConfig:      s.config.ProxyConfig,
                })
                if err != nil {
                    return err
                }

                return vm.assignSandbox(s)
            }

            return s.hypervisor.startSandbox(vmStartTimeout)
        }); err != nil {
            return err
        }

        defer func() {
            if err != nil {
                s.hypervisor.stopSandbox()
            }
        }()

        // In case of vm factory, network interfaces are hotplugged
        // after vm is started.
        if s.factory != nil {
            endpoints, err := s.network.Add(s.ctx, &s.config.NetworkConfig, s, true)
            if err != nil {
                return err
            }

            s.networkNS.Endpoints = endpoints

            if s.config.NetworkConfig.NetmonConfig.Enable {
                if err := s.startNetworkMonitor(); err != nil {
                    return err
                }
            }
        }

        s.Logger().Info("VM started")

        // Once the hypervisor is done starting the sandbox,
        // we want to guarantee that it is manageable.
        // For that we need to ask the agent to start the
        // sandbox inside the VM.
        if err := s.agent.startSandbox(s); err != nil {
            return err
        }

        s.Logger().Info("Agent started in the sandbox")

        return nil
    }

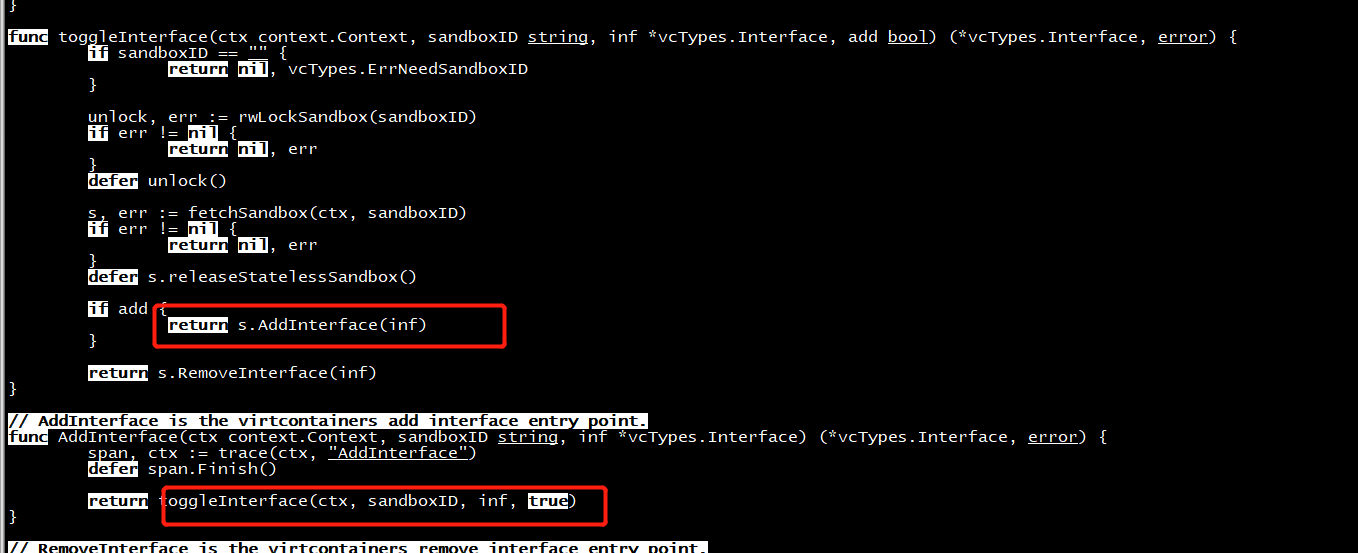

AddInterface

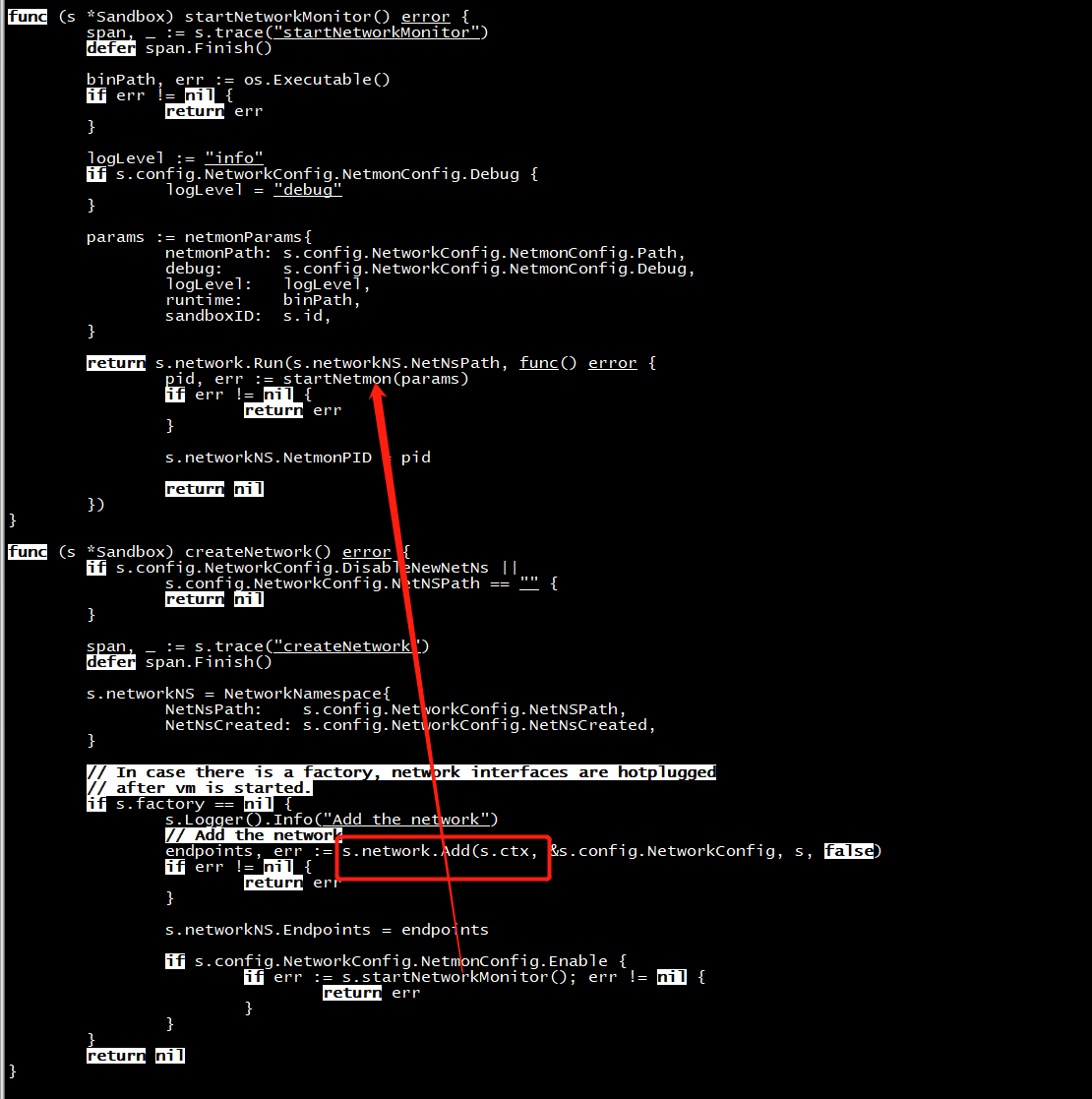

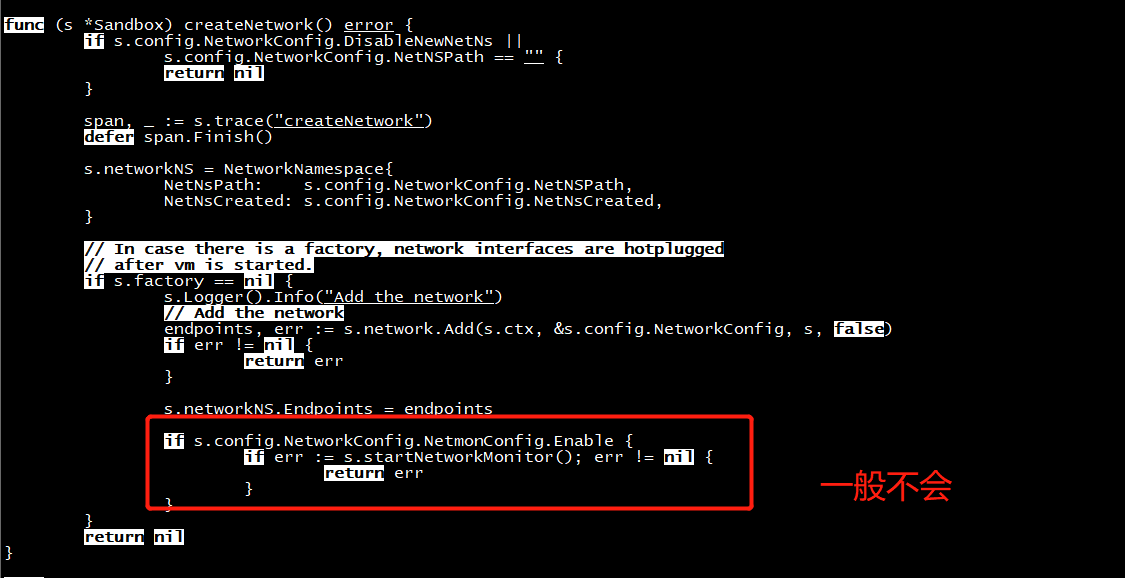

func (s *Sandbox) startNetworkMonitor() error

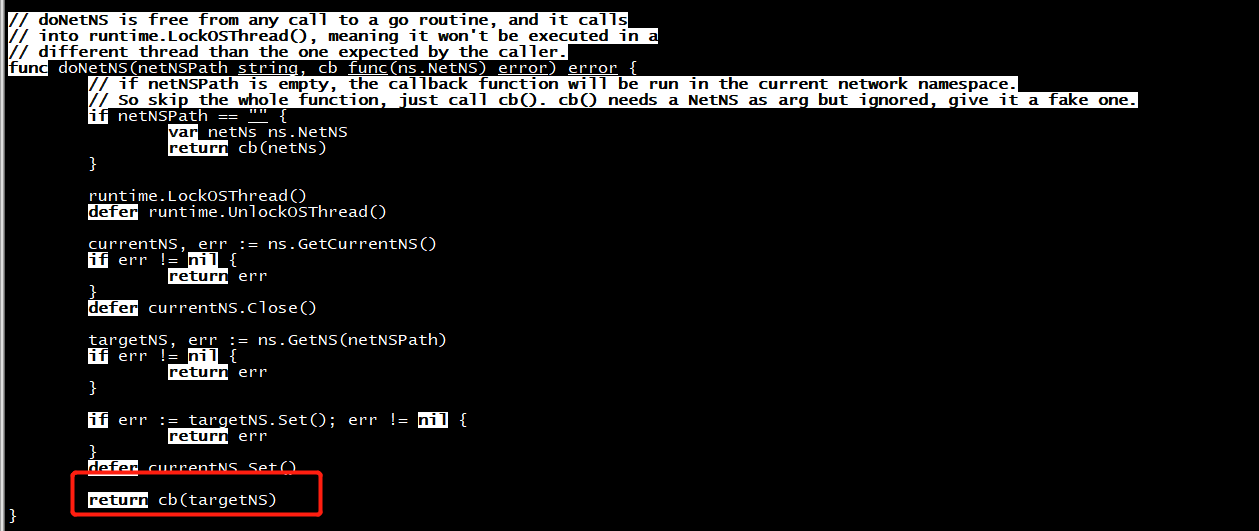

    // Run runs a callback in the specified network namespace.
    func (n *Network) Run(networkNSPath string, cb func() error) error {
        span, _ := n.trace(context.Background(), "run")
        defer span.Finish()

        return doNetNS(networkNSPath, func(_ ns.NetNS) error {
            return cb()
        })
    }

virtcontainers/netmon.go

    func startNetmon(params netmonParams) (int, error) {
        args, err := prepareNetMonParams(params)
        if err != nil {
            return -1, err
        }

        cmd := exec.Command(args[0], args[1:]...)
        if err := cmd.Start(); err != nil {
            return -1, err
        }

        return cmd.Process.Pid, nil
    }
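startNetmon launches the monitor with cmd.Start, which returns as soon as the child process exists, and hands back its PID. A self-contained sketch of the same shape is below. `startDetached` is a hypothetical helper, the `sleep` command in main is only a placeholder for a long-running binary, and the empty-argument guard and background Wait are additions of this sketch, not something the original function does.

```go
package main

import (
	"errors"
	"fmt"
	"os/exec"
)

// startDetached (hypothetical) starts args[0] with the remaining arguments
// and returns the child's PID without waiting for it to finish.
func startDetached(args []string) (int, error) {
	if len(args) == 0 {
		// Guard added in this sketch: indexing args[0] would panic otherwise.
		return -1, errors.New("empty command line")
	}

	cmd := exec.Command(args[0], args[1:]...)
	if err := cmd.Start(); err != nil {
		return -1, err
	}

	// Reap the child in the background so it does not linger as a zombie
	// for the lifetime of this process.
	go func() { _ = cmd.Wait() }()

	return cmd.Process.Pid, nil
}

func main() {
	// "sleep" is a placeholder; it is assumed to exist on the host.
	pid, err := startDetached([]string{"sleep", "1"})
	if err != nil {
		fmt.Println("start failed:", err)
		return
	}
	fmt.Println("started child with pid", pid)
}
```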