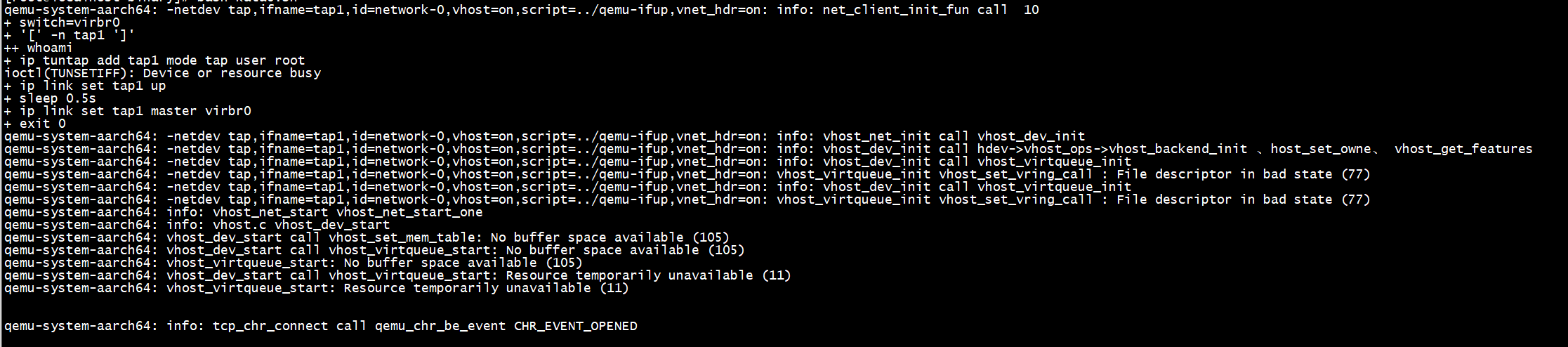

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: info: net_client_init_fun call 10

+ switch=virbr0

+ '[' -n tap1 ']'

++ whoami

+ ip tuntap add tap1 mode tap user root

ioctl(TUNSETIFF): Device or resource busy

+ ip link set tap1 up

+ sleep 0.5s

+ ip link set tap1 master virbr0

+ exit 0

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: info: vhost_net_init call vhost_dev_init

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: info: vhost_dev_init call hdev->vhost_ops->vhost_backend_init 、host_set_owne、 vhost_get_features

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: info: vhost_dev_init call vhost_virtqueue_init

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: vhost_virtqueue_init vhost_set_vring_call : File descriptor in bad state (77)

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: info: vhost_dev_init call vhost_virtqueue_init

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: vhost_virtqueue_init vhost_set_vring_call : File descriptor in bad state (77)

qemu-system-aarch64: info: vhost_net_start vhost_net_start_one

qemu-system-aarch64: info: vhost.c vhost_dev_start

qemu-system-aarch64: vhost_dev_start call vhost_set_mem_table: No buffer space available (105)

qemu-system-aarch64: vhost_dev_start call vhost_virtqueue_start: No buffer space available (105)

qemu-system-aarch64: vhost_virtqueue_start: No buffer space available (105)

qemu-system-aarch64: vhost_dev_start call vhost_virtqueue_start: Resource temporarily unavailable (11)

qemu-system-aarch64: vhost_virtqueue_start: Resource temporarily unavailable (11)

qemu-system-aarch64: info: tcp_chr_connect call qemu_chr_be_event CHR_EVENT_OPENED (printed when nc connects)

    #define _VHOST_DEBUG 1

    #ifdef _VHOST_DEBUG
    #define VHOST_OPS_DEBUG(fmt, ...) \
        do { error_report(fmt ": %s (%d)", ## __VA_ARGS__, \
                          strerror(errno), errno); } while (0)
    #else
    #define VHOST_OPS_DEBUG(fmt, ...) \
        do { } while (0)
    #endif
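The suffixes in the log, such as `File descriptor in bad state (77)`, come from this macro: it appends `strerror(errno)` and the raw `errno` value to every message. On Linux, 77 is `EBADFD`, 105 is `ENOBUFS`, and 11 is `EAGAIN`. The macro reads whatever `errno` holds at that moment, so if it is used as a plain trace message rather than right after a failed call, the suffix may be left over from an earlier, unrelated call and does not by itself prove that the named function failed. The following is a minimal sketch of that behaviour outside QEMU; `format_vhost_debug` and `demo_stale_errno` are hypothetical helpers, and `snprintf` stands in for `error_report`.

```c
#include <errno.h>
#include <stdio.h>
#include <string.h>

/*
 * Hypothetical helper (not a QEMU function): formats a message the same way
 * VHOST_OPS_DEBUG does, appending strerror(errno) and the raw errno value.
 */
static int format_vhost_debug(char *buf, size_t len, const char *msg)
{
    return snprintf(buf, len, "%s: %s (%d)", msg, strerror(errno), errno);
}

/*
 * Simulates a trace message printed while errno still holds a value
 * left behind by an earlier call. No vhost call fails here, yet the
 * formatted line still carries an error suffix.
 */
static void demo_stale_errno(char *buf, size_t len)
{
    errno = EAGAIN; /* assumed leftover from some earlier, unrelated call */
    format_vhost_debug(buf, len, "vhost_virtqueue_start");
}
```

Calling `demo_stale_errno` on a buffer produces a line with the same shape as the `vhost_virtqueue_start` entries in the log above, even though nothing failed in the sketch.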

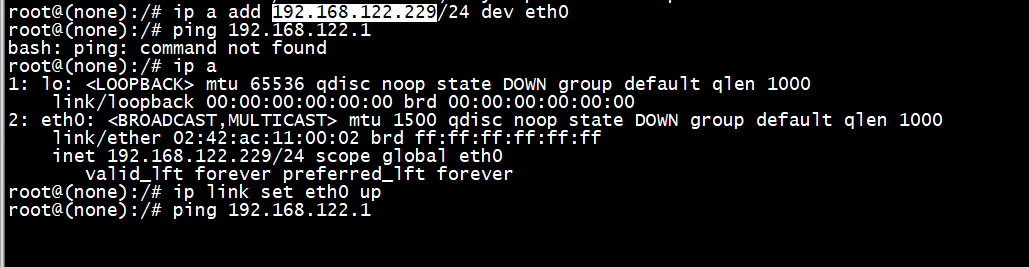

Guest (VM) side

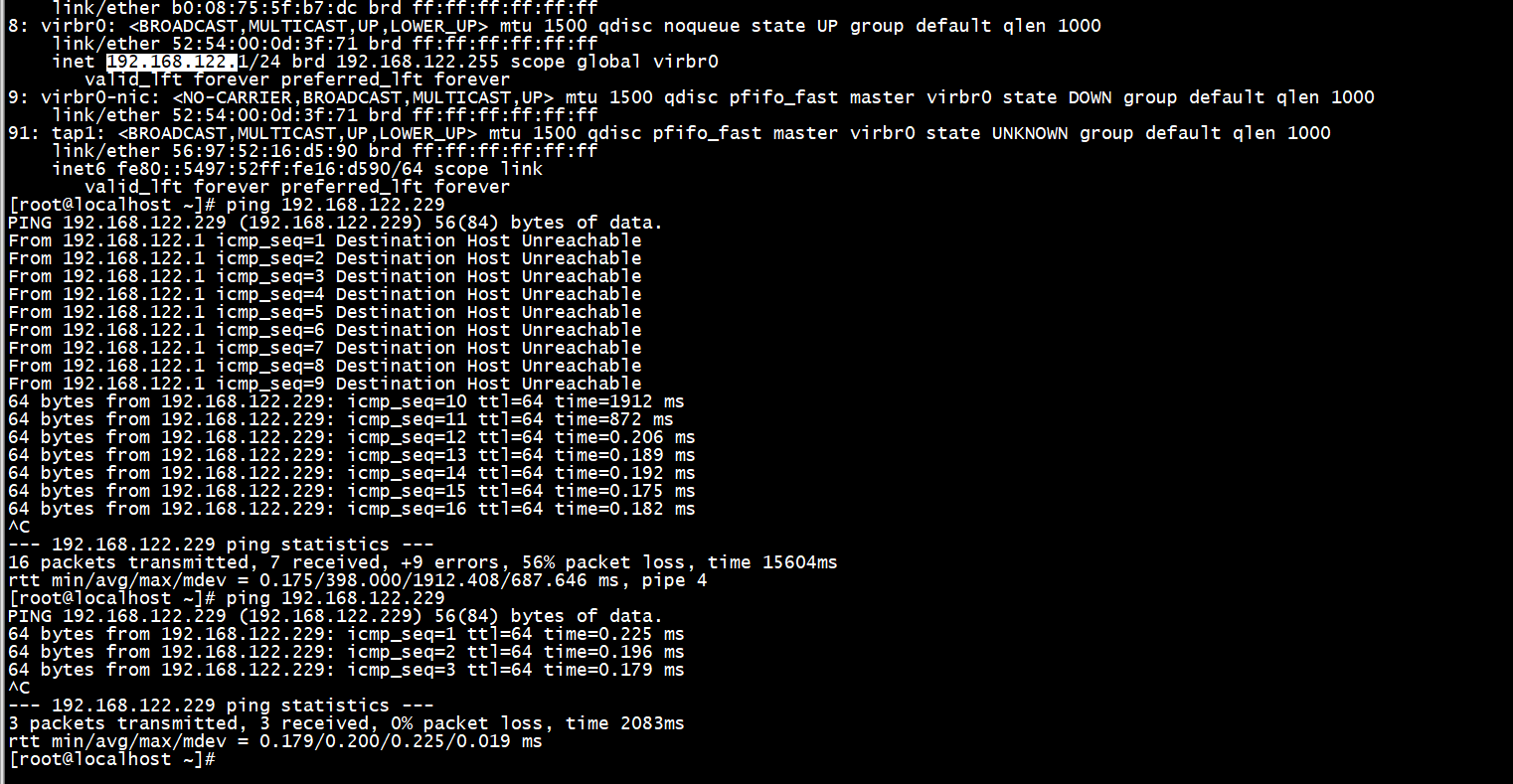

Host side

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: info: net_client_init_fun call 10

+ switch=virbr0

+ '[' -n tap1 ']'

++ whoami

+ ip tuntap add tap1 mode tap user root

ioctl(TUNSETIFF): Device or resource busy

+ ip link set tap1 up

+ sleep 0.5s

+ ip link set tap1 master virbr0

+ exit 0

[root@localhost ~]# brctl show

| bridge name | bridge id | STP enabled | interfaces |
| --- | --- | --- | --- |
| virbr0 | 8000.5254000d3f71 | yes | enp125s0f1, tap1, virbr0-nic |

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: info: vhost_net_init call vhost_dev_init

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: info: vhost_dev_init call hdev->vhost_ops->vhost_backend_init 、host_set_owne、 vhost_get_features

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: info: vhost_dev_init call vhost_virtqueue_init

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: info: vhost_virtqueue_init vhost_set_vring_call

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: info: vhost_dev_init call vhost_virtqueue_init

qemu-system-aarch64: -netdev tap,ifname=tap1,id=network-0,vhost=on,script=../qemu-ifup,vnet_hdr=on: info: vhost_virtqueue_init vhost_set_vring_call

qemu-system-aarch64: info: vhost_net_start vhost_net_start_one

qemu-system-aarch64: info: vhost.c vhost_dev_start

qemu-system-aarch64: info: vhost_dev_start call vhost_set_mem_table

qemu-system-aarch64: info: vhost_dev_start call vhost_virtqueue_start

qemu-system-aarch64: info: vhost_virtqueue_start

qemu-system-aarch64: info: vhost_virtqueue_start vhost_set_vring_kick

qemu-system-aarch64: info: vhost_dev_start call vhost_virtqueue_start

qemu-system-aarch64: info: vhost_virtqueue_start

qemu-system-aarch64: info: vhost_virtqueue_start vhost_set_vring_kick
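For the kernel vhost-net backend, the first steps in this trace (backend init, set owner, get features) come down to opening the vhost device and issuing ioctls on it. The sketch below is only an illustration under assumptions, not QEMU's code: it assumes the device node is `/dev/vhost-net`, uses the `VHOST_SET_OWNER` and `VHOST_GET_FEATURES` ioctls from `<linux/vhost.h>`, and the function name `vhost_net_probe` is hypothetical. The later steps (`vhost_set_vring_call`, `vhost_set_mem_table`, `vhost_set_vring_kick`) need eventfds and a memory table and are left out.

```c
#include <errno.h>
#include <fcntl.h>
#include <stdint.h>
#include <sys/ioctl.h>
#include <unistd.h>
#include <linux/vhost.h>

/*
 * Hypothetical helper: opens the vhost-net device, claims ownership and
 * reads the feature bits the backend offers.
 * Returns 0 on success or a negative errno value on failure.
 */
static int vhost_net_probe(uint64_t *features)
{
    int ret = 0;
    int fd = open("/dev/vhost-net", O_RDWR); /* assumed device path */

    if (fd < 0) {
        return -errno;
    }
    if (ioctl(fd, VHOST_SET_OWNER, NULL) < 0) {
        ret = -errno;   /* the device could not be bound to this process */
    } else if (ioctl(fd, VHOST_GET_FEATURES, features) < 0) {
        ret = -errno;   /* feature query failed */
    }
    close(fd);
    return ret;
}
```

On a host without the vhost-net module loaded, or without permission to open the device, the function simply returns a negative errno.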

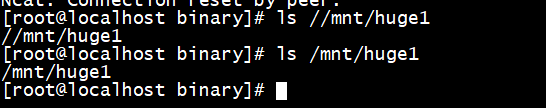

-object memory-backend-file,id=mem,size=4096M,mem-path=/mnt/huge1

Host side

Guest (VM) side

With the NUMA parameter set

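Finally, let's look at the case where the NUMA parameter is set. As noted earlier, neither of the two previous cases works with vhost_user, because guest memory cannot be shared. Here is an example of the QEMU parameters used with vhost_user: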

-object memory-backend-file,id=ram-node0,prealloc=yes,mem-path=/dev/hugepages/,share=yes,size=17179869184 -numa node,nodeid=0,cpus=0-29,memdev=ram-node0

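That's right: we need to specify the numa parameter, and we also need to specify mem-path. The numa parameter is parsed in the parse_numa function, which increments nb_numa_nodes. Note also that a memory-backend-file parameter is given together with numa, which causes QEMU to create a struct HostMemoryBackend memory object.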
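To make the memory-sharing requirement concrete, here is a minimal sketch in C. It is not QEMU code. It assumes an ordinary temporary file under `/tmp` as a stand-in for the hugepage file under mem-path, and the helper name `backend_sees_guest_write` is hypothetical. The first mapping plays the role of guest RAM and the second plays the role of a vhost_user backend mapping the same file. As far as I understand, `share=yes` corresponds to a `MAP_SHARED` mapping, while a non-shared backend corresponds to `MAP_PRIVATE`.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Hypothetical helper: maps one file twice and checks whether a write made
 * through the "guest" mapping is visible through the "backend" mapping.
 * Returns 1 if visible, 0 if not, -1 on error.
 */
static int backend_sees_guest_write(int guest_map_flags)
{
    char path[] = "/tmp/guest-ram-XXXXXX"; /* assumed stand-in for mem-path */
    const size_t size = 4096;
    int visible = -1;
    int fd = mkstemp(path);

    if (fd < 0) {
        return -1;
    }
    unlink(path); /* the file lives on only through the open descriptor */
    if (ftruncate(fd, (off_t)size) != 0) {
        close(fd);
        return -1;
    }

    /* "Guest RAM": shared or private depending on the caller's flags. */
    char *guest = mmap(NULL, size, PROT_READ | PROT_WRITE,
                       guest_map_flags, fd, 0);
    /* "Backend": a second, read-only shared mapping of the same file. */
    char *backend = mmap(NULL, size, PROT_READ, MAP_SHARED, fd, 0);

    if (guest != MAP_FAILED && backend != MAP_FAILED) {
        strcpy(guest, "virtqueue data");
        visible = (strcmp(backend, "virtqueue data") == 0);
    }
    if (guest != MAP_FAILED) {
        munmap(guest, size);
    }
    if (backend != MAP_FAILED) {
        munmap(backend, size);
    }
    close(fd);
    return visible;
}
```

With `MAP_SHARED` the write reaches the file and the second mapping sees it. With `MAP_PRIVATE` the write goes to a private copy-on-write page, so the second mapping still reads zeros, which is why a backend process could not see the guest's virtqueues in that case.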

Next, let's return to memory_region_allocate_system_memory for a detailed analysis.