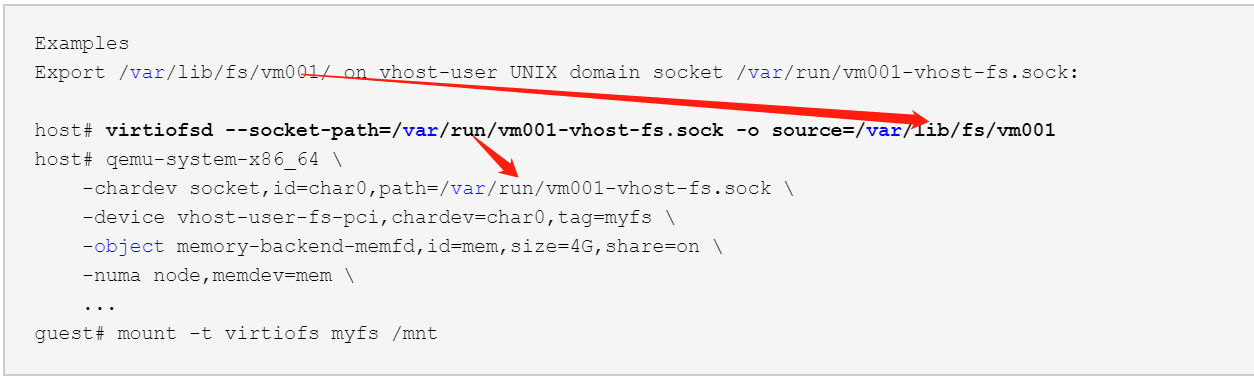

Examples

Export /var/lib/fs/vm001/ on vhost-user UNIX domain socket /var/run/vm001-vhost-fs.sock:

host# virtiofsd --socket-path=/var/run/vm001-vhost-fs.sock -o source=/var/lib/fs/vm001
host# qemu-system-x86_64 \
    -chardev socket,id=char0,path=/var/run/vm001-vhost-fs.sock \
    -device vhost-user-fs-pci,chardev=char0,tag=myfs \
    -object memory-backend-memfd,id=mem,size=4G,share=on \
    -numa node,memdev=mem \
    ...
guest# mount -t virtiofs myfs /mnt

./configure --target-list=aarch64-softmmu

../configure --target-list=aarch64-softmmu --disable-seccomp

[root@bogon qemu]# ./configure --target-list=aarch64-softmmu
ERROR: glib-2.40 gthread-2.0 is required to compile QEMU

Build and install glib-2.40.0, then add it to the pkg-config search path:

[root@bogon glib-2.40.0]# vi ~/.profile

export PATH="$HOME/.cargo/bin:$PATH"
export PKG_CONFIG_PATH=/usr/local/lib/pkgconfig

[root@bogon qemu]# mkdir build
[root@bogon qemu]# cd build/
[root@bogon build]# ./configure --target-list=aarch64-softmmu
-bash: ./configure: No such file or directory
[root@bogon build]# ../configure --target-list=aarch64-softmmu

../configure --target-list=aarch64-softmmu --disable-seccomp

CC aarch64-softmmu/target/arm/arm-powerctl.o
GEN aarch64-softmmu/target/arm/decode-sve.inc.c
CC aarch64-softmmu/target/arm/sve_helper.o
GEN trace/generated-helpers.c
CC aarch64-softmmu/trace/control-target.o
CC aarch64-softmmu/gdbstub-xml.o
CC aarch64-softmmu/trace/generated-helpers.o
CC aarch64-softmmu/target/arm/translate-sve.o
LINK aarch64-softmmu/qemu-system-aarch64
[root@bogon build]# pwd
/data2/hyper/qemu/build
[root@bogon build]# make -j 8 virtiofsd
make[1]: Entering directory `/data2/hyper/qemu/slirp'
make[1]: Nothing to be done for `all'.
make[1]: Leaving directory `/data2/hyper/qemu/slirp'
CC contrib/virtiofsd/buffer.o
CC contrib/virtiofsd/fuse_opt.o
CC contrib/virtiofsd/fuse_loop_mt.o
CC contrib/virtiofsd/fuse_lowlevel.o
CC contrib/virtiofsd/fuse_signals.o
CC contrib/virtiofsd/fuse_virtio.o
CC contrib/virtiofsd/helper.o
CC contrib/virtiofsd/passthrough_ll.o
CC contrib/virtiofsd/seccomp.o
CC contrib/libvhost-user/libvhost-user.o
/data2/hyper/qemu/contrib/virtiofsd/seccomp.c:12:21: fatal error: seccomp.h: No such file or directory
 #include <seccomp.h>
                     ^
compilation terminated.
CC contrib/libvhost-user/libvhost-user-glib.o
make: *** [contrib/virtiofsd/seccomp.o] Error 1
make: *** Waiting for unfinished jobs....
[root@bogon build]#

[root@bogon build]# yum search seccomp
Loaded plugins: fastestmirror, langpacks
Loading mirror speeds from cached hostfile
 * centos-qemu-ev: mirrors.bfsu.edu.cn
=================== N/S matched: seccomp ===================
libseccomp.aarch64 : Enhanced seccomp library
libseccomp-devel.aarch64 : Development files used to build applications with libseccomp support

  Name and summary matches only, use "search all" for everything.
[root@bogon build]# yum -y install libseccomp-devel
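Both failures above (the glib check in configure and the missing seccomp.h in virtiofsd) come down to pkg-config not finding a library. A minimal sketch of a pre-check, assuming glib was installed under /usr/local (as the PKG_CONFIG_PATH line in ~/.profile implies) and that libseccomp-devel ships a `libseccomp.pc` file; `check_build_deps` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: check the two build prerequisites that failed above.
# Assumption: the locally built glib installed its .pc files into
# /usr/local/lib/pkgconfig (same value as in ~/.profile).
PKG_CONFIG_PATH=/usr/local/lib/pkgconfig${PKG_CONFIG_PATH:+:$PKG_CONFIG_PATH}
export PKG_CONFIG_PATH

# Hypothetical helper: prints one line per prerequisite and returns
# 0 if all are found, 1 if any is missing, 2 if pkg-config itself is absent.
check_build_deps() {
    if ! command -v pkg-config >/dev/null 2>&1; then
        echo "pkg-config not installed" >&2
        return 2
    fi
    status=0
    # QEMU's configure wants glib-2.0 and gthread-2.0 >= 2.40
    if pkg-config --atleast-version=2.40 glib-2.0 gthread-2.0; then
        echo "ok      glib $(pkg-config --modversion glib-2.0)"
    else
        echo "MISSING glib-2.0/gthread-2.0 >= 2.40"
        status=1
    fi
    # virtiofsd's seccomp.c includes <seccomp.h> from libseccomp-devel
    if pkg-config --exists libseccomp; then
        echo "ok      libseccomp $(pkg-config --modversion libseccomp)"
    else
        echo "MISSING libseccomp (yum -y install libseccomp-devel)"
        status=1
    fi
    return "$status"
}
```

For example, `check_build_deps && ../configure --target-list=aarch64-softmmu` only runs configure once both libraries are visible.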

[root@bogon hyper]# ctr run --runtime io.containerd.run.kata.v2 -t --rm docker.io/library/nginx:alpine sh
ctr: listen unix /run/vc/vm/sh/virtiofsd.sock: bind: address already in use: unknown
[root@bogon hyper]# rm -rf /run/vc/vm/sh/virtiofsd.sock
[root@bogon hyper]# ctr run --runtime io.containerd.run.kata.v2 -t --rm docker.io/library/nginx:alpine sh
ctr: fork/exec /usr/libexec/kata-qemu/virtiofsd: no such file or directory: not found
[root@bogon hyper]# rm -rf /run/vc/vm/sh/virtiofsd.sock
[root@bogon hyper]#
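The `sh` in `/run/vc/vm/sh/` is the container ID: `ctr run` takes the image reference followed by a container ID, so `sh` was used as the ID. The second error means Kata could not find virtiofsd at the path it is configured with; either copy the binary built by `make -j 8 virtiofsd` there, or point Kata's configuration at it (in Kata's `configuration-qemu.toml` this is typically the `virtio_fs_daemon` setting; treat that name as an assumption for your version). A sketch of a pre-flight check, where `kata_virtiofs_preflight` is a hypothetical helper and the default paths are copied from the errors above:

```shell
#!/bin/sh
# Sketch: pre-flight check before `ctr run --runtime io.containerd.run.kata.v2`.
# Default paths are taken from the error messages above; adjust as needed.
kata_virtiofs_preflight() {
    bin=${1:-/usr/libexec/kata-qemu/virtiofsd}
    sock=${2:-/run/vc/vm/sh/virtiofsd.sock}
    # Fixes "fork/exec ...: no such file or directory"
    if [ ! -f "$bin" ] || [ ! -x "$bin" ]; then
        echo "virtiofsd not found or not executable: $bin" >&2
        return 1
    fi
    # A socket left behind by an earlier failed run makes bind() fail with
    # "address already in use". Only safe to remove when no sandbox with
    # this container ID is still running.
    if [ -S "$sock" ]; then
        echo "removing stale socket $sock"
        rm -f "$sock"
    fi
    return 0
}
```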

Standalone virtio-fs usage

This document describes how to set up the virtio-fs components for standalone testing with QEMU, without Kata Containers. In general, it is easier to debug basic issues in this environment than inside Kata Containers.

Components

The following components need building:

- A guest kernel with virtio-fs support

- A QEMU with virtio-fs support

- The example virtio-fs daemon (virtiofsd)

- (optionally) The 'ireg' cache daemon

The instructions assume that you already have available a Linux guest image to run under QEMU and a Linux host on which you can build and run the components.

The guest kernel

An appropriately configured Linux 5.4 or later kernel can be used for virtio-fs; however, if you want access to development features, download the virtio-fs kernel tree:

git clone https://gitlab.com/virtio-fs/linux.git

cd linux

git checkout virtio-fs-dev

Configure, build and install this kernel inside your guest VM, ensuring that the following config options are selected:

CONFIG_VIRTIO

CONFIG_VIRTIO_FS

CONFIG_DAX

CONFIG_FS_DAX

CONFIG_DAX_DRIVER

CONFIG_ZONE_DEVICE
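A quick way to confirm these options before building is to check the kernel's `.config`. A minimal sketch, assuming it is run from the top of the kernel tree after configuring; `check_virtiofs_config` is a hypothetical helper that accepts each option either built in (`=y`) or as a module (`=m`).

```shell
#!/bin/sh
# Sketch: report which virtio-fs/DAX options are missing from a kernel .config.
# Assumption: the config file is ./.config unless a path is given.
check_virtiofs_config() {
    cfg=${1:-.config}
    missing=0
    for opt in CONFIG_VIRTIO CONFIG_VIRTIO_FS CONFIG_DAX CONFIG_FS_DAX \
               CONFIG_DAX_DRIVER CONFIG_ZONE_DEVICE; do
        # Exact match on "OPTION=y" or "OPTION=m"; "# OPTION is not set" fails.
        if grep -Eq "^${opt}=(y|m)$" "$cfg"; then
            echo "ok      $opt"
        else
            echo "MISSING $opt"
            missing=$((missing + 1))
        fi
    done
    [ "$missing" -eq 0 ]
}
```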

Build and install the kernel in the guest; on most distros this is most easily done with the command line:

make -j 8 && make -j 8 modules && make -j 8 modules_install && make -j 8 install

Boot the guest and ensure it boots normally.
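After booting, it is worth confirming that the guest is actually running the new kernel rather than the distro one. The sketch below only checks the 5.4 minimum mentioned above against `uname -r`; `kernel_at_least` is a hypothetical helper, and passing the check does not prove the virtio-fs-dev branch was used.

```shell
#!/bin/sh
# Sketch: true if a kernel release string is at least MAJOR.MINOR.
# Usage: kernel_at_least MAJOR MINOR [RELEASE]   (RELEASE defaults to uname -r)
kernel_at_least() {
    want_major=$1
    want_minor=$2
    rel=${3:-$(uname -r)}
    major=${rel%%.*}           # "5.10.0-rc1" -> "5"
    rest=${rel#*.}             # "5.10.0-rc1" -> "10.0-rc1"
    minor=${rest%%[!0-9]*}     # "10.0-rc1"   -> "10"
    minor=${minor:-0}
    [ "$major" -gt "$want_major" ] ||
        { [ "$major" -eq "$want_major" ] && [ "$minor" -ge "$want_minor" ]; }
}
```

In the guest, `kernel_at_least 5 4 && echo "kernel $(uname -r) is new enough"`.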

Note: An alternative is to build the kernel on the host and pass it on the QEMU command line, although getting the initrd to work correctly can take some effort.

Building QEMU

On the host, download the virtio-fs QEMU tree:

git clone https://gitlab.com/virtio-fs/qemu.git

Inside the checkout, create a build directory and, from inside that build directory, run:

../configure --prefix=$PWD --target-list=x86_64-softmmu

make -j 8

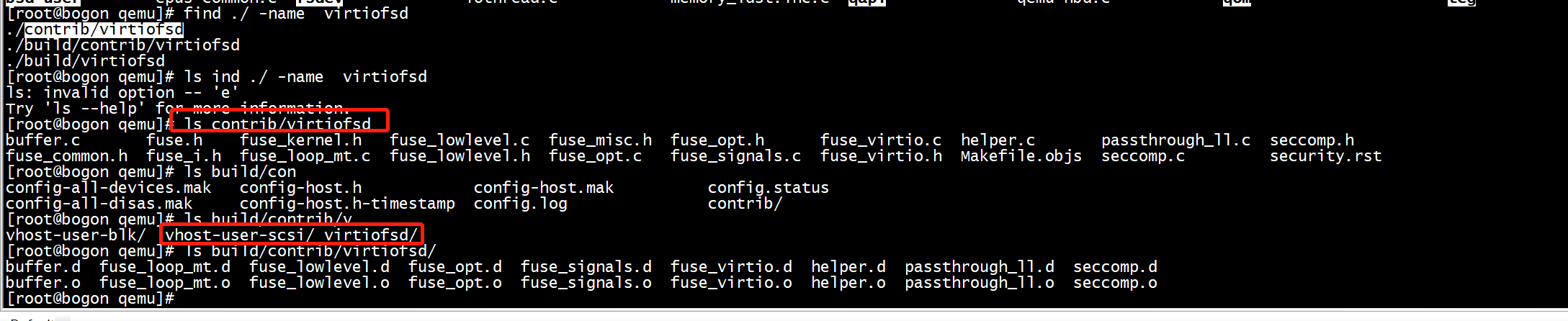

Now also build the virtiofsd included in the QEMU source:

make -j 8 virtiofsd

Running with virtio-fs

A shared directory is needed for testing. It can initially be empty, but it is useful if it contains a file that you can check from inside the guest; we assume that $TESTDIR points to it.
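A minimal sketch of preparing such a directory with one marker file; the default path `/tmp/virtiofs-test`, the file name `hello.txt`, and the helper `prepare_testdir` are all hypothetical choices.

```shell
#!/bin/sh
# Sketch: create a shared test directory with one marker file that can be
# checked from inside the guest. Path and file name are hypothetical.
prepare_testdir() {
    dir=${1:-/tmp/virtiofs-test}
    mkdir -p "$dir" || return 1
    # Record the host's node name so the guest can see where the file came from.
    echo "hello from $(uname -n)" > "$dir/hello.txt"
    printf '%s\n' "$dir"
}
```

On the host, `TESTDIR=$(prepare_testdir)`; once the share is mounted, `cat /mnt/hello.txt` in the guest should print the same line.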

First, start the virtiofsd daemon. In the QEMU build directory, run:

./virtiofsd -o vhost_user_socket=/tmp/vhostqemu -o source=$TESTDIR -o cache=always

The same socket path is also passed to QEMU.
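Because the QEMU `-chardev socket,...,path=/tmp/vhostqemu` option connects to an existing socket, QEMU fails if it starts before virtiofsd has created it. A minimal sketch of waiting for the socket; `wait_for_socket` and its 10-second default are hypothetical.

```shell
#!/bin/sh
# Sketch: wait up to TIMEOUT seconds for a UNIX socket to appear.
# Usage: wait_for_socket PATH [TIMEOUT]
wait_for_socket() {
    path=${1:-}
    timeout=${2:-10}   # seconds
    i=0
    while [ ! -S "$path" ]; do
        if [ "$i" -ge "$timeout" ]; then
            echo "timed out waiting for $path" >&2
            return 1
        fi
        sleep 1
        i=$((i + 1))
    done
    return 0
}
```

For example, start `./virtiofsd -o vhost_user_socket=/tmp/vhostqemu -o source=$TESTDIR -o cache=always &` and run `wait_for_socket /tmp/vhostqemu` before the QEMU command.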

Now start QEMU. For virtio-fs, the following parameters need to be added:

- to connect to the communication socket created by virtiofsd

-chardev socket,id=char0,path=/tmp/vhostqemu

- to instantiate the device

-device vhost-user-fs-pci,queue-size=1024,chardev=char0,tag=myfs

The tag name is arbitrary but must match the tag given in the guest's mount command.

- to force the use of memory that is shareable with virtiofsd

-m 4G -object memory-backend-file,id=mem,size=4G,mem-path=/dev/shm,share=on -numa node,memdev=mem

Add all these options to your standard QEMU command line. Note that the '-m' option and its value replace any existing option that sets the memory size, and that the size of the memory backend must match the '-m' size.
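One way to keep the '-m' size and the shared memory backend size in sync (QEMU requires the memory assigned to NUMA nodes to add up to the '-m' size) is to generate these options from a single value. A minimal sketch; `virtiofs_qemu_opts` is a hypothetical helper, and its defaults mirror the values above.

```shell
#!/bin/sh
# Sketch: print the virtio-fs related QEMU options, one argument per line.
# Usage: virtiofs_qemu_opts [SOCKET] [TAG] [MEMSIZE]
virtiofs_qemu_opts() {
    sock=${1:-/tmp/vhostqemu}
    tag=${2:-myfs}
    mem=${3:-4G}    # used for both -m and the memory backend size
    printf '%s\n' \
        -chardev "socket,id=char0,path=$sock" \
        -device "vhost-user-fs-pci,queue-size=1024,chardev=char0,tag=$tag" \
        -m "$mem" \
        -object "memory-backend-file,id=mem,size=$mem,mem-path=/dev/shm,share=on" \
        -numa node,memdev=mem
}
```

In bash, the output can be collected with `mapfile -t VFS_OPTS < <(virtiofs_qemu_opts)` and appended to the QEMU command as `"${VFS_OPTS[@]}"`.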

A typical QEMU command line (run from the qemu build directory) might be:

./x86_64-softmmu/qemu-system-x86_64 -M pc -cpu host --enable-kvm -smp 2 \
    -m 4G -object memory-backend-file,id=mem,size=4G,mem-path=/dev/shm,share=on -numa node,memdev=mem \
    -chardev socket,id=char0,path=/tmp/vhostqemu -device vhost-user-fs-pci,queue-size=1024,chardev=char0,tag=myfs \
    -chardev stdio,mux=on,id=mon -mon chardev=mon,mode=readline -device virtio-serial-pci -device virtconsole,chardev=mon -vga none -display none \
    -drive if=virtio,file=rootfsimage.qcow2

This assumes that 'rootfsimage.qcow2' is the guest image containing the modified kernel. Log into the guest as root and issue the mount command:

mount -t virtiofs myfs /mnt

Note that Linux 4.19-based virtio-fs kernels required a different mount syntax instead:

mount -t virtio_fs none /mnt -o tag=myfs,rootmode=040000,user_id=0,group_id=0

The contents of the /mnt directory in the guest should now reflect the $TESTDIR on the host.
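If the mount fails, or /mnt does not show the host files, two guest-side checks help narrow it down: whether the kernel has registered the virtiofs filesystem type, and whether /mnt is really a virtiofs mount. A minimal sketch; the function names are hypothetical, and the file arguments exist only so the functions can be tried against sample files.

```shell
#!/bin/sh
# Sketch of guest-side checks around `mount -t virtiofs myfs /mnt`.

# True if the kernel lists the virtiofs filesystem type.
# If virtiofs is built as a module it may only appear after `modprobe virtiofs`.
has_virtiofs() {
    grep -qw virtiofs "${1:-/proc/filesystems}"
}

# True if a virtiofs filesystem is mounted at the given mount point.
# /proc/mounts fields: source mountpoint fstype options dump pass
is_virtiofs_mounted() {
    awk -v mp="${1:-/mnt}" '$2 == mp && $3 == "virtiofs" { found = 1 } END { exit !found }' \
        "${2:-/proc/mounts}"
}
```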

Enabling DAX

DAX mapping allows the guest to access file contents directly from the host's caches, which avoids duplicating them in both the guest and the host.

A mapping area ('cache') is shared between virtiofsd and QEMU; its size must be specified on the QEMU command line, while the virtiofsd command line is unchanged.

The device section of the QEMU command line changes to:

-device vhost-user-fs-pci,queue-size=1024,chardev=char0,tag=myfs,cache-size=2G
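The only difference from the non-DAX setup is the `cache-size` property on the device. A minimal sketch that appends it only when a size is given; `vhost_user_fs_device` is a hypothetical helper, and `cache-size` comes from the virtio-fs QEMU tree used here, so it may not be accepted by every QEMU build.

```shell
#!/bin/sh
# Sketch: build the vhost-user-fs-pci device string, with an optional DAX window.
# Usage: vhost_user_fs_device [CHARDEV] [TAG] [CACHE_SIZE]
vhost_user_fs_device() {
    chardev=${1:-char0}
    tag=${2:-myfs}
    cache_size=${3:-}   # e.g. 2G; empty means no DAX window
    dev="vhost-user-fs-pci,queue-size=1024,chardev=$chardev,tag=$tag"
    if [ -n "$cache_size" ]; then
        dev="$dev,cache-size=$cache_size"
    fi
    printf '%s\n' "$dev"
}
```

For example, `-device "$(vhost_user_fs_device char0 myfs 2G)"` reproduces the device option above.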

Inside the guest the mount command becomes:

mount -t virtiofs myfs /mnt -o dax

Note that Linux 4.19-based virtio-fs kernels required a different mount syntax instead:

mount -t virtio_fs none /mnt -o tag=myfs,rootmode=040000,user_id=0,group_id=0,dax

Note that the 'cache' size does not directly increase host RAM usage, since it is only a mapping area for files.

Building ireg

On the host, download the virtio-fs ireg tree:

git clone https://gitlab.com/virtio-fs/ireg.git

and build with:

make

Enabling ireg [more experimental]

'ireg' is an external daemon that provides a shared cache of meta-data updates and allows guest kernels to check for changes to files quickly.

Start ireg as root:

./ireg &

Start virtiofsd, passing it the flag to set the caching mode to shared:

./virtiofsd -o vhost_user_socket=/tmp/vhostqemu -o source=/home/dgilbert/virtio-fs/fs -o shared

Start QEMU, changing the device option to point to the shared metadata table:

-device vhost-user-fs-pci,queue-size=1024,chardev=char0,tag=myfs,cache-size=2G,versiontable=/dev/shm/fuse_shared_versions

The mount options are unchanged.