Best Practices for the DPDK Technology Stack in Telecom Cloud (Part 3)

    ethtool -k enp6s0
    Features for enp6s0:
    rx-checksumming: off [fixed]
    tx-checksumming: off
            tx-checksum-ipv4: off [fixed]
            tx-checksum-ip-generic: off [fixed]
            tx-checksum-ipv6: off [fixed]
            tx-checksum-fcoe-crc: off [fixed]
            tx-checksum-sctp: off [fixed]
    scatter-gather: on
            tx-scatter-gather: on
            tx-scatter-gather-fraglist: off [fixed]
    tcp-segmentation-offload: off
            tx-tcp-segmentation: off [fixed]
            tx-tcp-ecn-segmentation: off [fixed]
            tx-tcp-mangleid-segmentation: off [fixed]
            tx-tcp6-segmentation: off [fixed]
    udp-fragmentation-offload: off
    generic-segmentation-offload: on
    generic-receive-offload: on
    large-receive-offload: off [fixed]
    rx-vlan-offload: off [fixed]
    tx-vlan-offload: off [fixed]
    ntuple-filters: off [fixed]
    receive-hashing: off [fixed]
    highdma: on
    rx-vlan-filter: on [fixed]
    vlan-challenged: off [fixed]
    tx-lockless: off [fixed]
    netns-local: off [fixed]
    tx-gso-robust: off [fixed]
    tx-fcoe-segmentation: off [fixed]
    tx-gre-segmentation: off [fixed]
    tx-gre-csum-segmentation: off [fixed]
    tx-ipxip4-segmentation: off [fixed]
    tx-ipxip6-segmentation: off [fixed]
    tx-udp_tnl-segmentation: off [fixed]
    tx-udp_tnl-csum-segmentation: off [fixed]
    tx-gso-partial: off [fixed]
    tx-sctp-segmentation: off [fixed]
    tx-esp-segmentation: off [fixed]
    fcoe-mtu: off [fixed]
    tx-nocache-copy: off
    loopback: off [fixed]
    rx-fcs: off [fixed]
    rx-all: off [fixed]
    tx-vlan-stag-hw-insert: off [fixed]
    rx-vlan-stag-hw-parse: off [fixed]
    rx-vlan-stag-filter: off [fixed]
    l2-fwd-offload: off [fixed]
    hw-tc-offload: off [fixed]
    esp-hw-offload: off [fixed]
    esp-tx-csum-hw-offload: off [fixed]
    rx-udp_tunnel-port-offload: off [fixed]

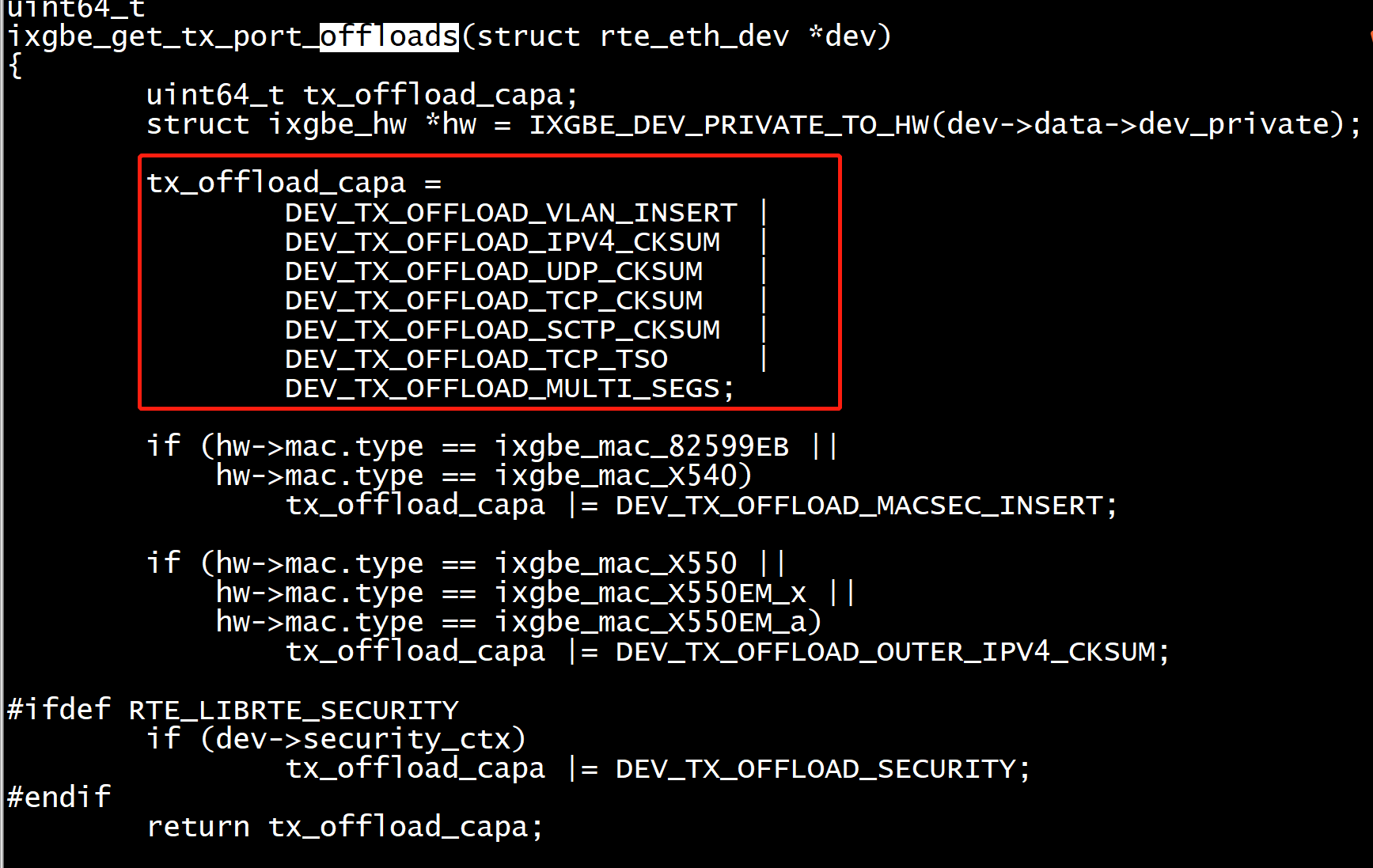

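In the DPDK port configuration, multi-segment transmission is requested through the TX mode offloads:

    .txmode = {
        .offloads = DEV_TX_OFFLOAD_MULTI_SEGS, /* allow chained (multi-segment) mbufs on TX */
    },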

A brief overview of how DMA works

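During a DMA transfer, the DMA controller drives the bus directly, so control of the bus has to be handed over. Before the transfer, the CPU hands bus control to the DMA controller. As soon as the transfer ends, the DMA controller must return bus control to the CPU. A complete DMA transfer goes through four phases: DMA request, DMA acknowledge, DMA transfer, and DMA completion.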
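The four phases and the hand-over of bus ownership can be modelled as a toy state machine. This is purely illustrative: `struct dma_sim` and `dma_run()` are hypothetical, and `memcpy` stands in for the bus cycles the controller would perform in hardware.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Who currently drives the system bus in this toy model. */
enum bus_master { BUS_CPU, BUS_DMAC };

/* The four phases named in the text. */
enum dma_phase { DMA_REQUEST, DMA_ACK, DMA_TRANSFER, DMA_DONE };

struct dma_sim {
    enum bus_master owner;
    enum dma_phase phase;
    int phases_seen;              /* how many phases have run, for checking */
};

/* Hypothetical: run one transfer of len bytes from src to dst, walking
 * request -> acknowledge -> transfer -> completion. */
static void dma_run(struct dma_sim *s, void *dst, const void *src, size_t len)
{
    assert(s->owner == BUS_CPU);  /* the CPU owns the bus before DMA starts */

    s->phase = DMA_REQUEST;       /* 1. the DMA controller asks for the bus */
    s->phases_seen++;

    s->phase = DMA_ACK;           /* 2. the CPU grants it and lets go of the bus */
    s->owner = BUS_DMAC;
    s->phases_seen++;

    s->phase = DMA_TRANSFER;      /* 3. the controller moves the data; no CPU copy */
    memcpy(dst, src, len);
    s->phases_seen++;

    s->phase = DMA_DONE;          /* 4. bus control goes straight back to the CPU */
    s->owner = BUS_CPU;
    s->phases_seen++;
}
```

After `dma_run()` returns, the bus owner is back to `BUS_CPU` and all four phases have executed once.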

Scatter-gather DMA vs. block DMA

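Traditional block DMA transfers only one physically contiguous block per operation and raises an interrupt when that transfer completes. Scatter-gather DMA transfers several physically discontiguous blocks in one operation and raises a single interrupt once all of them are done.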

Traditional block DMA looks like this:

The more advanced scatter-gather DMA looks like this:

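The benefit is clear. Moving N discontiguous blocks costs one interrupt instead of N, which greatly reduces interrupt overhead and improves transfer efficiency.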
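To make the interrupt arithmetic concrete, here is a toy model that moves the same three discontiguous blocks both ways and counts completion interrupts. The names `struct sg_entry`, `block_dma()` and `sg_dma()` are hypothetical, and `memcpy` stands in for the hardware copy.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* One physically contiguous piece of a buffer (toy model: pointer + length). */
struct sg_entry {
    const uint8_t *addr;
    size_t len;
};

static unsigned irq_count;                 /* completion interrupts raised so far */
static void raise_irq(void) { irq_count++; }

/* Block DMA: the controller handles one contiguous block per job, so the
 * driver programs it once per block and takes one interrupt per block. */
static size_t block_dma(uint8_t *dst, const struct sg_entry *list, size_t n)
{
    size_t off = 0;
    for (size_t i = 0; i < n; i++) {
        memcpy(dst + off, list[i].addr, list[i].len);
        off += list[i].len;
        raise_irq();                       /* one interrupt per block */
    }
    return off;
}

/* Scatter-gather DMA: the controller walks the whole descriptor list in a
 * single job and interrupts once at the end. */
static size_t sg_dma(uint8_t *dst, const struct sg_entry *list, size_t n)
{
    size_t off = 0;
    for (size_t i = 0; i < n; i++) {
        memcpy(dst + off, list[i].addr, list[i].len);
        off += list[i].len;
    }
    raise_irq();                           /* one interrupt for the whole list */
    return off;
}
```

With three blocks of 2, 1 and 3 bytes, both functions produce the same 6-byte result. `block_dma()` raises three interrupts, while `sg_dma()` raises one.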

Applications of scatter-gather DMA

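DPDK's IP fragmentation uses a technique known as zero copy, and scatter-gather DMA is what makes it possible. DPDK manages fragmented packets as chains: the data of one packet is spread across non-contiguous buffers (mbuf structures). A single DMA operation therefore has to gather data from several non-contiguous blocks. Here is part of the transmit path of the e1000 driver: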

    uint16_t
    eth_em_xmit_pkts(void *tx_queue, struct rte_mbuf **tx_pkts, uint16_t nb_pkts)
    {
        // excerpt from the e1000 driver
        ...
        m_seg = tx_pkt;
        /* one TX descriptor per segment of the mbuf chain */
        do {
            txd = &txr[tx_id];
            txn = &sw_ring[txe->next_id];

            if (txe->mbuf != NULL)
                rte_pktmbuf_free_seg(txe->mbuf);
            txe->mbuf = m_seg;

            /*
             * Set up Transmit Data Descriptor.
             */
            slen = m_seg->data_len;
            buf_dma_addr = rte_mbuf_data_iova(m_seg);

            txd->buffer_addr = rte_cpu_to_le_64(buf_dma_addr);
            txd->lower.data = rte_cpu_to_le_32(cmd_type_len | slen);
            txd->upper.data = rte_cpu_to_le_32(popts_spec);

            txe->last_id = tx_last;
            tx_id = txe->next_id;
            txe = txn;
            m_seg = m_seg->next;
        } while (m_seg != NULL);

        /*
         * The last packet data descriptor needs End Of Packet (EOP)
         */
        cmd_type_len |= E1000_TXD_CMD_EOP;
        txq->nb_tx_used = (uint16_t)(txq->nb_tx_used + nb_used);
        txq->nb_tx_free = (uint16_t)(txq->nb_tx_free - nb_used);
        ...
    }
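As a self-contained illustration of the e1000 descriptor loop, here is a sketch built on hypothetical stand-in types: `struct seg` for `rte_mbuf`, `struct tx_desc` for the e1000 descriptor, and a hypothetical `TXD_CMD_EOP` bit. It writes one descriptor per segment and marks only the last one with EOP. Unlike the real driver, it skips ring-full handling, context descriptors, mbuf freeing and byte-order conversion.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for one segment of a chained mbuf. */
struct seg {
    uint64_t iova;          /* bus address of this segment's data */
    uint16_t data_len;      /* bytes in this segment */
    struct seg *next;       /* next segment of the same packet, or NULL */
};

/* Hypothetical stand-in for a legacy TX descriptor. */
struct tx_desc {
    uint64_t buffer_addr;
    uint32_t cmd_len;       /* low 16 bits: length; high bits: command flags */
};

#define TXD_CMD_EOP (1u << 24)  /* hypothetical "end of packet" command bit */
#define RING_SIZE   8u

/* Fill one descriptor per segment starting at ring[*tail], wrap around the
 * ring, and set EOP on the final descriptor only. Returns descriptors used. */
static unsigned map_chain(struct tx_desc *ring, unsigned *tail, const struct seg *pkt)
{
    unsigned used = 0;
    struct tx_desc *last = NULL;

    for (const struct seg *m = pkt; m != NULL; m = m->next) {
        assert(used < RING_SIZE);        /* sketch: no ring-full handling */
        last = &ring[*tail];
        last->buffer_addr = m->iova;     /* hardware gathers from here */
        last->cmd_len = m->data_len;
        *tail = (*tail + 1) % RING_SIZE;
        used++;
    }
    if (last != NULL)
        last->cmd_len |= TXD_CMD_EOP;    /* only the last segment ends the packet */
    return used;
}
```

For a three-segment chain starting at slot 6 of an 8-entry ring, the descriptors land in slots 6, 7 and 0, and only slot 0 carries EOP. The NIC then gathers the whole packet from three separate buffers in one DMA job.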

IPv4 checksum offload: DEV_TX_OFFLOAD_IPV4_CKSUM

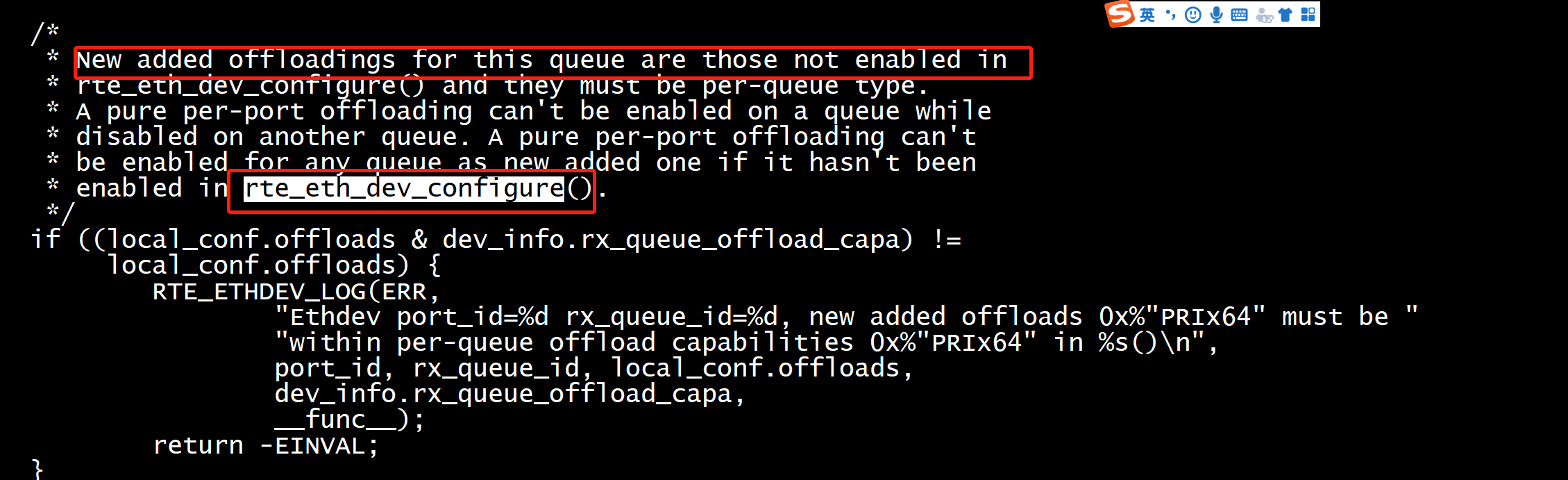

Enabling the offload only in the TX queue configuration:

    txq_conf.offloads |= DEV_TX_OFFLOAD_IPV4_CKSUM;
    ret = rte_eth_tx_queue_setup(portid, 0, nb_txd,
                                 rte_eth_dev_socket_id(portid), &txq_conf);

With only the queue-level flag set, rte_eth_tx_queue_setup() fails with -EINVAL:

    Ethdev port_id=0 tx_queue_id=0, new added offloads 0x2 must be within per-queue offload capabilities 0x0 in rte_eth_tx_queue_setup()
    EAL: Error - exiting with code: 1
      Cause: rte_eth_tx_queue_setup:err=-22, port=0

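The driver reports a per-queue offload capability of 0x0. Any offload not already enabled for the whole port therefore cannot be added at queue level. The fix is to also enable it at port level before rte_eth_dev_configure():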

    /* enable IPv4 checksum offload for the whole port */
    local_port_conf.txmode.offloads |= DEV_TX_OFFLOAD_IPV4_CKSUM;
    ret = rte_eth_dev_configure(portid, 1, 1, &local_port_conf);
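The rule in the error message can be expressed in a few lines. An offload requested on a queue but not already enabled on the port counts as "newly added", and every such bit must be in the per-queue capability mask. The function `txq_offloads_ok()` is a hypothetical sketch of that rule, not the ethdev source. `OFFLOAD_IPV4_CKSUM` takes the 0x2 shown in the log.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define OFFLOAD_IPV4_CKSUM 0x2ULL   /* the "0x2" reported in the error log */

/* Hypothetical check: offloads added at queue level beyond the port-level
 * set must all be per-queue capable. */
static bool txq_offloads_ok(uint64_t queue_offloads, uint64_t port_offloads,
                            uint64_t queue_capa)
{
    uint64_t new_added = queue_offloads & ~port_offloads;  /* not enabled port-wide */
    return (new_added & queue_capa) == new_added;
}
```

With a per-queue capability of 0, `txq_offloads_ok(OFFLOAD_IPV4_CKSUM, 0, 0)` is false: this is the failing case. Once the same bit is enabled in `txmode.offloads`, `txq_offloads_ok(OFFLOAD_IPV4_CKSUM, OFFLOAD_IPV4_CKSUM, 0)` is true, because nothing is newly added.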

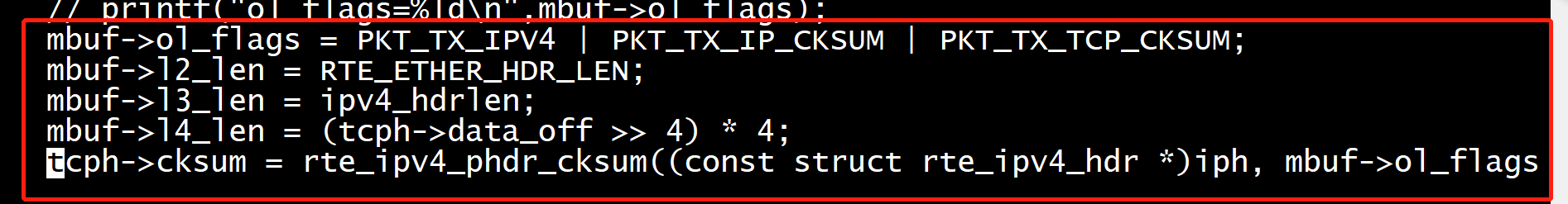

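In the application's transmit path, drop the software checksum, zero the header checksum field and set the offload flags on the mbuf. According to the rte_mbuf documentation, PKT_TX_IP_CKSUM also requires l2_len and l3_len to be filled in, and PKT_TX_IPV4 should be set as well:

    // ip_h->hdr_checksum = ipv4_hdr_cksum(ip_h);  /* software checksum no longer needed */
    ip_h->hdr_checksum = 0;                        /* the NIC fills in the checksum */
    pkt->l2_len = sizeof(struct rte_ether_hdr);    /* assumes an untagged Ethernet frame */
    pkt->l3_len = (ip_h->version_ihl & 0x0f) * 4;  /* IHL counts 32-bit words */
    pkt->ol_flags |= PKT_TX_IPV4 | PKT_TX_IP_CKSUM;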
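For reference, the work being offloaded is the RFC 1071 one's-complement sum over the IPv4 header. The article's `ipv4_hdr_cksum()` helper is not shown, and DPDK itself provides `rte_ipv4_cksum()`. The sketch below is a hypothetical plain-C version, `ipv4_hdr_checksum_sw()`, that works on a raw header in network byte order.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical software IPv4 header checksum (RFC 1071).
 * hdr points at the header in network byte order; bytes 10-11 (the
 * checksum field) are skipped, i.e. treated as zero.
 * Returns the checksum in host byte order. */
static uint16_t ipv4_hdr_checksum_sw(const uint8_t *hdr, size_t hdr_len)
{
    uint32_t sum = 0;

    assert(hdr_len >= 20 && hdr_len % 4 == 0);
    for (size_t i = 0; i < hdr_len; i += 2) {
        if (i == 10)
            continue;                                /* skip the checksum field */
        sum += (uint32_t)((hdr[i] << 8) | hdr[i + 1]); /* big-endian 16-bit word */
    }
    while (sum >> 16)                                /* fold carries back in */
        sum = (sum & 0xffff) + (sum >> 16);
    return (uint16_t)~sum;
}
```

For the 20-byte header `45 00 00 73 00 00 40 00 40 11 xx xx c0 a8 00 01 c0 a8 00 c7`, the function returns `0xb861`, which is written back as bytes `b8 61`. With checksum offload enabled, the NIC performs this sum per packet instead of the CPU.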

To confirm that the flags reach the driver, set a breakpoint on hinic_tx_offload_pkt_prepare() in the hinic PMD (DPDK 19.11):

    Breakpoint 1, hinic_tx_offload_pkt_prepare (m=0x13e82a480, off_info=0xffffbd40cd28)
        at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:794
    794  u16 eth_type = 0;
    (gdb) bt
    #0  hinic_tx_offload_pkt_prepare (m=0x13e82a480, off_info=0xffffbd40cd28)
        at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:794
    #1  0x000000000078a5cc in hinic_get_sge_txoff_info (mbuf_pkt=0x13e82a480, sqe_info=0xffffbd40cd38, off_info=0xffffbd40cd28)
        at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:991
    #2  0x000000000078a890 in hinic_xmit_pkts (tx_queue=0x13e7e7000, tx_pkts=0xffffbd40ce08, nb_pkts=1)

For this first packet, ol_flags contains none of the bits in HINIC_TX_CKSUM_OFFLOAD_MASK. The function therefore returns 0 at line 800, and the packet is sent without checksum offload:

    (gdb) s
    796  uint64_t ol_flags = m->ol_flags;
    (gdb) list
    791  struct rte_udp_hdr *udp_hdr;
    792  struct rte_ether_hdr *eth_hdr;
    793  struct rte_vlan_hdr *vlan_hdr;
    794  u16 eth_type = 0;
    795  uint64_t inner_l3_offset;
    796  uint64_t ol_flags = m->ol_flags;
    797
    798  /* Check if the packets set available offload flags */
    799  if (!(ol_flags & HINIC_TX_CKSUM_OFFLOAD_MASK))
    800      return 0;
    (gdb) n
    799  if (!(ol_flags & HINIC_TX_CKSUM_OFFLOAD_MASK))
    (gdb) n
    800      return 0;
    (gdb) n
    978  }
    (gdb) n
    hinic_get_sge_txoff_info (mbuf_pkt=0x13e82a480, sqe_info=0xffffbd40cd38, off_info=0xffffbd40cd28)
        at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:992
    992  if (unlikely(ret))
    (gdb) n
    995  sqe_info->cpy_mbuf_cnt = 0;
    (gdb) n
    998  if (likely(!(mbuf_pkt->ol_flags & PKT_TX_TCP_SEG))) {
    (gdb) n
    999      if (unlikely(mbuf_pkt->pkt_len > MAX_SINGLE_SGE_SIZE)) {
    (gdb) n
    1002     } else if (unlikely(HINIC_NONTSO_SEG_NUM_INVALID(sge_cnt))) {
    (gdb) n
    1024 sqe_info->sge_cnt = sge_cnt;
    (gdb) n
    1037 return true;
    (gdb) n
    1038 }
    (gdb) n
    hinic_xmit_pkts (tx_queue=0x13e7e7000, tx_pkts=0xffffbd40ce08, nb_pkts=1)
        at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:1093
    1093 wqe_wqebb_cnt = HINIC_SQ_WQEBB_CNT(sqe_info.sge_cnt);
    (gdb) n
    1094 free_wqebb_cnt = HINIC_GET_SQ_FREE_WQEBBS(txq);
    (gdb) n
    1095 if (unlikely(wqe_wqebb_cnt > free_wqebb_cnt)) {
    (gdb) n
    1108 sq_wqe = hinic_get_sq_wqe(txq, wqe_wqebb_cnt, &sqe_info);
    (gdb) n
    1111 if (unlikely(!hinic_mbuf_dma_map_sge(txq, mbuf_pkt,
    (gdb) n
    1121 task = &sq_wqe->task;
    (gdb) n
    1124 hinic_fill_tx_offload_info(mbuf_pkt, task, &queue_info,
    (gdb) n
    1128 tx_info = &txq->tx_info[sqe_info.pi];
    (gdb) n
    1129 tx_info->mbuf = mbuf_pkt;
    (gdb) n
    1130 tx_info->wqebb_cnt = wqe_wqebb_cnt;
    (gdb) n
    1133 hinic_fill_sq_wqe_header(&sq_wqe->ctrl, queue_info,
    (gdb) n
    1134                          sqe_info.sge_cnt, sqe_info.owner);
    (gdb) n
    1133 hinic_fill_sq_wqe_header(&sq_wqe->ctrl, queue_info,
    (gdb) c
    Continuing.

On the next hit (a different mbuf, m=0x13e82ac00), the check at line 799 passes. The driver fills in the L2/L3/L4 lengths and, because PKT_TX_IPV4 is set, chooses inner_l3_type according to PKT_TX_IP_CKSUM (line 908). None of the L4 checksum branches match, so only the IPv4 header checksum is offloaded:

    Breakpoint 1, hinic_tx_offload_pkt_prepare (m=0x13e82ac00, off_info=0xffffc357c558)
        at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:794
    794  u16 eth_type = 0;
    (gdb) s
    796  uint64_t ol_flags = m->ol_flags;
    (gdb) n
    799  if (!(ol_flags & HINIC_TX_CKSUM_OFFLOAD_MASK))
    (gdb) n
    803  if ((ol_flags & PKT_TX_TUNNEL_MASK) &&
    (gdb) n
    812  if (ol_flags & PKT_TX_TUNNEL_VXLAN) {
    (gdb) n
    847      inner_l3_offset = m->l2_len;
    (gdb) n
    848      off_info->inner_l2_len = m->l2_len;
    (gdb) n
    849      off_info->inner_l3_len = m->l3_len;
    (gdb) n
    850      off_info->inner_l4_len = m->l4_len;
    (gdb) n
    851      off_info->tunnel_type = NOT_TUNNEL;
    (gdb) n
    853      hinic_get_pld_offset(m, off_info,
    (gdb) n
    858  if (unlikely(off_info->payload_offset > MAX_PLD_OFFSET))
    (gdb) n
    862  if ((ol_flags & PKT_TX_TUNNEL_VXLAN) && ((ol_flags & PKT_TX_TCP_SEG) ||
    (gdb) n
    901  } else if (ol_flags & PKT_TX_OUTER_IPV4) {
    (gdb) n
    907  if (ol_flags & PKT_TX_IPV4)
    (gdb) n
    908      off_info->inner_l3_type = (ol_flags & PKT_TX_IP_CKSUM) ?
    (gdb) n
    915  if ((ol_flags & PKT_TX_L4_MASK) == PKT_TX_UDP_CKSUM) {
    (gdb) n
    942  } else if (((ol_flags & PKT_TX_L4_MASK) == PKT_TX_TCP_CKSUM) ||
    (gdb) n
    943             (ol_flags & PKT_TX_TCP_SEG)) {
    (gdb) n
    942  } else if (((ol_flags & PKT_TX_L4_MASK) == PKT_TX_TCP_CKSUM) ||
    (gdb) n
    971  } else if ((ol_flags & PKT_TX_L4_MASK) == PKT_TX_SCTP_CKSUM) {
    (gdb) n
    977  return 0;
    (gdb) n
    978  }
    (gdb) n
    hinic_get_sge_txoff_info (mbuf_pkt=0x13e82ac00, sqe_info=0xffffc357c568, off_info=0xffffc357c558)
        at /data1/dpdk-19.11/drivers/net/hinic/hinic_pmd_tx.c:992
    992  if (unlikely(ret))
    (gdb)
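The two breakpoint hits differ only in which ol_flags the mbuf carries. Below is a simplified model of the decision flow seen in the traces. The flag bits are placeholders, not DPDK's real PKT_TX_* values, and `F_CKSUM_MASK` is an assumed stand-in for HINIC_TX_CKSUM_OFFLOAD_MASK, whose exact contents are not shown here. The function `prepare_offload()` is hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Placeholder flag bits for this sketch only (NOT DPDK's PKT_TX_* values). */
#define F_IP_CKSUM   (1u << 0)
#define F_IPV4       (1u << 1)
#define F_UDP_CKSUM  (1u << 2)
#define F_TCP_CKSUM  (1u << 3)
#define F_CKSUM_MASK (F_IP_CKSUM | F_UDP_CKSUM | F_TCP_CKSUM)  /* assumed mask */

enum l3_type { L3_NONE, L3_IPV4_NO_CKSUM, L3_IPV4_CKSUM };
enum l4_type { L4_NONE, L4_UDP, L4_TCP };

struct off_info { enum l3_type l3; enum l4_type l4; };

/* Returns true if the NIC will be asked to compute any checksum. */
static bool prepare_offload(uint32_t ol_flags, struct off_info *info)
{
    info->l3 = L3_NONE;
    info->l4 = L4_NONE;

    if (!(ol_flags & F_CKSUM_MASK))   /* first trace: early return, no offload */
        return false;

    if (ol_flags & F_IPV4)            /* second trace: L3 type from the IP flag */
        info->l3 = (ol_flags & F_IP_CKSUM) ? L3_IPV4_CKSUM : L3_IPV4_NO_CKSUM;

    if (ol_flags & F_UDP_CKSUM)       /* L4 branches; none matched in the trace */
        info->l4 = L4_UDP;
    else if (ol_flags & F_TCP_CKSUM)
        info->l4 = L4_TCP;
    return true;
}
```

In this model, `F_IPV4 | F_IP_CKSUM` yields an IPv4-with-checksum L3 type and no L4 offload. Setting `F_IPV4` alone requests nothing, mirroring the early return at line 800 in the first trace.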