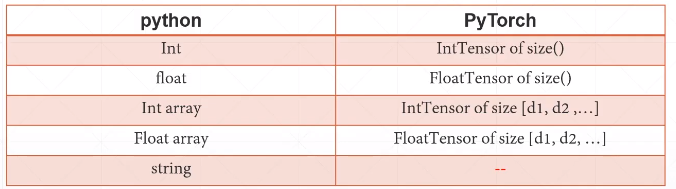

1. Data Types

How do we represent a string?

- One-hot
  - [0, 1, 0, 0, ...]
- Embedding
  - Word2vec
  - GloVe
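Below is a minimal sketch of both representations in PyTorch. The 4-word vocabulary and the embedding size of 5 are illustrative assumptions, not from the original. F.one_hot builds the sparse one-hot vectors. nn.Embedding keeps one learnable dense vector per word, and pretrained Word2vec or GloVe vectors would typically be loaded into this kind of table.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Hypothetical toy vocabulary: word -> index
vocab = {"i": 0, "like": 1, "deep": 2, "learning": 3}
ids = torch.tensor([vocab[w] for w in ["i", "like", "learning"]])

# One-hot: each word becomes a length-4 vector containing a single 1
one_hot = F.one_hot(ids, num_classes=len(vocab))
print(one_hot)
# tensor([[1, 0, 0, 0],
#         [0, 1, 0, 0],
#         [0, 0, 0, 1]])

# Embedding: each word becomes a dense, learnable 5-dim vector
embed = nn.Embedding(num_embeddings=len(vocab), embedding_dim=5)
vectors = embed(ids)
print(vectors.shape)  # torch.Size([3, 5])
```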

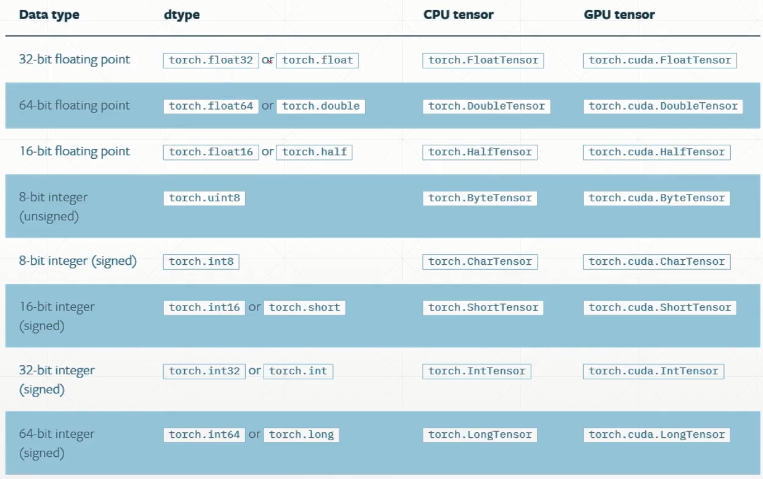

Type checking

```python
import torch

# type check
a = torch.randn(2, 3)
print(a.type())                          # torch.FloatTensor
print(type(a))                           # <class 'torch.Tensor'>  (rarely useful: it does not show the element type)
print(isinstance(a, torch.FloatTensor))  # True
```
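In everyday code the dtype attribute is another common way to check the element type. Unlike a.type(), which reports torch.cuda.FloatTensor for a GPU tensor, dtype stays the same on CPU and GPU. A short sketch:

```python
import torch

a = torch.randn(2, 3)
print(a.dtype)                   # torch.float32
print(a.dtype == torch.float32)  # True
print(a.device)                  # cpu

b = a.to(torch.float64)          # convert the element type
print(b.type())                  # torch.DoubleTensor
```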

Scalars

- Scalar: dimension 0 / rank 0 (commonly used for a loss value)

```python
# scalar: dimension 0 / rank 0
b = torch.tensor(1.3)
print(b)             # tensor(1.3000)
print(b.shape)       # torch.Size([])  (an attribute)
print(len(b.shape))  # 0
print(b.size())      # torch.Size([])  (a method)
```
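A loss function is the typical source of 0-dim tensors. The sketch below uses F.mse_loss with made-up prediction and target values. .item() converts the 0-dim tensor into a plain Python number.

```python
import torch
import torch.nn.functional as F

pred = torch.tensor([0.5, 1.5, 2.0])
target = torch.tensor([1.0, 1.0, 2.0])

loss = F.mse_loss(pred, target)  # mean of the squared errors -> 0-dim tensor
print(loss.dim())                # 0
print(loss.shape)                # torch.Size([])
print(round(loss.item(), 4))     # 0.1667  (= (0.25 + 0.25 + 0) / 3)
```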

Tensors

- Dimension 1 (a vector; commonly used for biases)

```python
import numpy as np
import torch

# dimension 1
print(torch.tensor([1.1]))     # tensor([1.1000])  takes concrete data, which can be N-dimensional
print(torch.FloatTensor(1))    # e.g. tensor([9.6429e-39])  takes the length of dim 0; memory is uninitialized, so the value is arbitrary
data = np.ones(2)              # numpy array of length 2
print(data)                    # [1. 1.]
print(torch.from_numpy(data))  # tensor([1., 1.], dtype=torch.float64)  converted from numpy
c = torch.ones(2)
print(c.shape)                 # torch.Size([2]): dim 0 has length 2
```
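For example, the bias of a fully connected layer is a 1-D tensor with one entry per output unit. Here is a quick check with nn.Linear. The sizes 4 and 3 are arbitrary.

```python
import torch.nn as nn

layer = nn.Linear(in_features=4, out_features=3)
print(layer.bias.shape)    # torch.Size([3])     1-D: one bias per output unit
print(layer.weight.shape)  # torch.Size([3, 4])  2-D: [out_features, in_features]
```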

- Dimension 2 (commonly used for a batch of feature vectors, [batch, features])

```python
# dimension 2
d = torch.randn(2, 3)
print(d)
# tensor([[-1.8543, -0.7280,  0.6671],
#         [ 1.1492, -0.6379, -0.4835]])
print(d.shape)     # torch.Size([2, 3]): dim 0 has length 2, dim 1 has length 3 (2 rows, 3 columns)
print(d.shape[0])  # 2
print(d.size(1))   # 3, same as d.shape[1]
```
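A typical 2-D case is a batch of flattened inputs passed to a linear layer. The minimal sketch below assumes a batch of 4 flattened 28x28 images (784 features) mapped to 10 outputs.

```python
import torch
import torch.nn as nn

x = torch.randn(4, 784)  # [batch, features]
fc = nn.Linear(784, 10)
out = fc(x)
print(out.shape)         # torch.Size([4, 10]): one 10-dim output per sample
```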

- Dimension 3 (commonly used for RNN inputs)

```python
# dimension 3
f = torch.rand(1, 2, 3)  # one reading: 1 sequence (batch), 2 time steps (Tx), 3 values per step
print(f)
# tensor([[[0.3690, 0.5702, 0.2382],
#          [0.3130, 0.5591, 0.3829]]])
print(f.shape)  # torch.Size([1, 2, 3])
print(f[0])     # index into the first dimension
# tensor([[0.3690, 0.5702, 0.2382],
#         [0.3130, 0.5591, 0.3829]])
print(f[0][1])  # tensor([0.3130, 0.5591, 0.3829])
```
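As an illustration of the RNN case, nn.RNN by default (batch_first=False) expects input shaped [seq_len, batch, input_size]. With batch_first=True it expects [batch, seq_len, features], which matches the [1, 2, 3] reading above. The hidden size of 5 is an arbitrary choice for this sketch.

```python
import torch
import torch.nn as nn

rnn = nn.RNN(input_size=3, hidden_size=5)     # batch_first=False by default
x = torch.rand(2, 1, 3)                       # [seq_len=2, batch=1, input_size=3]
out, h_n = rnn(x)
print(out.shape)  # torch.Size([2, 1, 5]): hidden state at every time step
print(h_n.shape)  # torch.Size([1, 1, 5]): final hidden state [num_layers, batch, hidden]

rnn_bf = nn.RNN(input_size=3, hidden_size=5, batch_first=True)
out_bf, _ = rnn_bf(torch.rand(1, 2, 3))       # [batch=1, seq_len=2, features=3]
print(out_bf.shape)  # torch.Size([1, 2, 5])
```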

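- Dimension 4 (commonly used for images)

For example, a = torch.rand(b, c, h, w) represents b images, each with c channels and a size of h*w.

```python
a = torch.rand(2, 3, 28, 28)  # 2 images, 3 channels each, 28x28 pixels
print(a)
print(a.shape)
print(a.numel())  # 4704 = 2x3x28x28, the total number of elements
print(a.dim())    # 4, the number of dimensions
```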

Output:

```
tensor([[[[0.2607, 0.6929, 0.4447,  ..., 0.7346, 0.1117, 0.6536],
          ...,
          [0.4591, 0.7439, 0.0944,  ..., 0.0986, 0.9818, 0.9580]],

         [[0.2049, 0.2220, 0.6390,  ..., 0.7402, 0.0301, 0.1057],
          ...,
          [0.4375, 0.9904, 0.0813,  ..., 0.5896, 0.6167, 0.2628]],

         [[0.4288, 0.6137, 0.6558,  ..., 0.0282, 0.5398, 0.0905],
          ...,
          [0.0021, 0.2103, 0.1029,  ..., 0.4861, 0.5915, 0.4245]]],


        [[[0.4978, 0.4922, 0.8510,  ..., 0.7856, 0.6859, 0.7466],
          ...,
          [0.7721, 0.9057, 0.9594,  ..., 0.8764, 0.0646, 0.3901]],

         [[0.0570, 0.9745, 0.9952,  ..., 0.8184, 0.5966, 0.6161],
          ...,
          [0.1213, 0.6930, 0.9880,  ..., 0.6633, 0.0317, 0.9526]],

         [[0.6238, 0.6210, 0.7574,  ..., 0.1725, 0.6625, 0.9828],
          ...,
          [0.6864, 0.2697, 0.2041,  ..., 0.9683, 0.6482, 0.1793]]]])
torch.Size([2, 3, 28, 28])
4704
4
```
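Convolution layers consume exactly this [b, c, h, w] layout. The sketch below picks 16 output channels and a 3x3 kernel arbitrarily. padding=1 keeps the 28x28 spatial size, since (28 + 2*1 - 3) / 1 + 1 = 28.

```python
import torch
import torch.nn as nn

imgs = torch.rand(2, 3, 28, 28)  # [b, c, h, w]
conv = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3, padding=1)
feat = conv(imgs)
print(feat.shape)  # torch.Size([2, 16, 28, 28])
```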

2. Creating Tensors

```python
import torch
import numpy as np

# Method 1: import from numpy
a = np.ones([2, 3])
print(torch.from_numpy(a))
# tensor([[1., 1., 1.],
#         [1., 1., 1.]], dtype=torch.float64)

# Method 2: import from a list
print(torch.tensor([[2., 3.2], [1., 22.3]]))
# tensor([[ 2.0000,  3.2000],
#         [ 1.0000, 22.3000]])

# Method 3: pass a shape (or existing data)
print(torch.Tensor(2, 3))
print(torch.FloatTensor(2, 4))
# the memory is uninitialized, so the values are arbitrary, e.g.:
# tensor([[0., 0., 0.],
#         [0., 0., 0.]])
# tensor([[0.0000e+00, 0.0000e+00, 0.0000e+00, 0.0000e+00],
#         [0.0000e+00, 1.8750e+00, 4.3485e-05, 1.6595e-07]], dtype=torch.float32)
```

Tips:

- torch.tensor: takes existing data
- torch.Tensor / torch.FloatTensor: take either a shape or existing data
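The difference matters when you pass integers. torch.Tensor treats them as a shape, and torch.tensor treats them as data. A short sketch, assuming the default float type has not been changed:

```python
import torch

x = torch.Tensor(2, 3)    # shape [2, 3], uninitialized values
y = torch.tensor([2, 3])  # data [2, 3], dtype inferred as int64
z = torch.Tensor([2, 3])  # data [2., 3.], always the default float type
print(x.shape, y.shape, z.shape)  # torch.Size([2, 3]) torch.Size([2]) torch.Size([2])
print(y.dtype, z.dtype)           # torch.int64 torch.float32
```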

Uninitialized constructors (use them only as containers and overwrite the contents with real data before use!):

- torch.empty()
- torch.FloatTensor(d1, d2, d3)
- torch.IntTensor(d1, d2, d3)

```python
print(torch.empty(1, 2))
print(torch.FloatTensor(1, 2))
print(torch.IntTensor(2, 3))
# the values are whatever happened to be in memory, e.g.:
# tensor([[ 0.0000e+00, 2.1220e-314]])
# tensor([[0., 0.]], dtype=torch.float32)
# tensor([[1664692530, 1630878054, 1681351009],
#         [ 842019426, 1664312883,  828728417]], dtype=torch.int32)
```
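A typical pattern is to preallocate with torch.empty and then write every element before reading it. The minimal sketch below uses arbitrary row contents.

```python
import torch

buf = torch.empty(3, 4)           # uninitialized container
for i in range(3):
    buf[i] = torch.arange(4) * i  # overwrite row i (the integer values are cast to float)
print(buf)
# tensor([[0., 0., 0., 0.],
#         [0., 1., 2., 3.],
#         [0., 2., 4., 6.]])
```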

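Setting the default type

- torch.set_default_tensor_type (deprecated since PyTorch 2.1; torch.set_default_dtype is the suggested replacement for choosing the default floating-point type)

```python
# set the default type
print(torch.tensor([1.2, 3]).type())  # torch.FloatTensor (the default when nothing is set)
torch.set_default_tensor_type(torch.DoubleTensor)
print(torch.tensor([1.2, 3]).type())  # torch.DoubleTensor
```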
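With torch.set_default_dtype, the same switch can be sketched as follows. The setting is global, so this sketch restores float32 at the end to leave later code unaffected.

```python
import torch

print(torch.get_default_dtype())        # torch.float32
torch.set_default_dtype(torch.float64)
doubled = torch.tensor([1.2, 3])
ints = torch.tensor([1, 3])
print(doubled.dtype)                    # torch.float64
print(ints.dtype)                       # torch.int64 (integer data is unaffected)
torch.set_default_dtype(torch.float32)  # restore the global default
```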

Random initialization

- rand/rand_like, randint

```python
# rand: uniform on [0, 1)
a = torch.rand(2, 2)
print(a)
# tensor([[0.9422, 0.6025],
#         [0.1540, 0.6282]])
print(torch.rand_like(a))  # same shape as a; pass dtype= to choose a different type
# tensor([[0.4822, 0.6752],
#         [0.3491, 0.8990]])

# randint: integers in [min, max); max is excluded
print(torch.randint(1, 10, [3, 3]))  # arguments: min, max, shape
# tensor([[8, 9, 7],
#         [5, 7, 5],
#         [9, 6, 9]])
```
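These calls return different values on every run, so experiments usually fix the random seed. Here is a sketch with torch.manual_seed. The seed value 0 is arbitrary. It also shows that randint never produces the upper bound.

```python
import torch

torch.manual_seed(0)
first = torch.rand(2, 2)
torch.manual_seed(0)
second = torch.rand(2, 2)
print(torch.equal(first, second))  # True: same seed, same numbers

ints = torch.randint(1, 10, (1000,))
print(bool(ints.min() >= 1), bool(ints.max() <= 9))  # True True: 10 never appears
```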

torch.full and the normal distribution

```python
print(torch.full([2, 3], 2.3))  # 2 rows x 3 columns, every element 2.3
# tensor([[2.3000, 2.3000, 2.3000],
#         [2.3000, 2.3000, 2.3000]])

# normal distribution
print(torch.randn(3, 3))  # N(0, 1): mean 0, variance 1
# tensor([[-1.6209,  0.0208, -0.8792],
#         [ 2.4513, -0.1906,  3.4904],
#         [ 0.5434,  0.8524,  0.6850]])

# 1-D result: element i is drawn from N(0, std[i]^2), with std going 1.0, 0.9, ..., 0.1
# (the mean must be a floating-point tensor, hence 0. rather than 0)
print(torch.normal(mean=torch.full([10], 0.), std=torch.arange(1, 0, -0.1)))
# tensor([ 0.1445, -0.5133, -0.5565,  0.0831,  0.1350,  0.1023, -0.6264, -0.1651,  0.2856,  0.0187])
```
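torch.normal also has a form that takes a scalar mean, a scalar std and a size, which is handy when every element shares one distribution. Tensor.normal_ fills an existing tensor in place. In this sketch, the mean 0, the std values and the shapes are arbitrary choices.

```python
import torch

torch.manual_seed(0)
samples = torch.normal(0.0, 2.0, size=(1000,))  # every element ~ N(0, 2^2)
print(samples.shape)                  # torch.Size([1000])
print(samples.mean(), samples.std())  # roughly 0 and 2 (random)

w = torch.empty(3, 4).normal_(mean=0.0, std=0.01)  # in-place fill of an existing tensor
print(w.shape)                        # torch.Size([3, 4])
```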

Others

- torch.full
- torch.arange
- torch.linspace
- torch.logspace(0, 1, steps=10): 10 points from 10^0 to 10^1
- torch.randperm

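```python
# ones: all ones, zeros: all zeros, eye: ones on the diagonal, ones_like: ones with another tensor's shape
print(torch.full([2, 3], 7))  # first argument: shape, second: fill value
# tensor([[7, 7, 7],
#         [7, 7, 7]])
# (an integer fill value gives an int64 tensor in recent PyTorch; use 7. for a float tensor)
print(torch.full([], 7))      # tensor(7)  a scalar
```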
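The constructors named in the comment above (ones, zeros, eye, ones_like) can be sketched like this. The shapes are arbitrary.

```python
import torch

print(torch.ones(2, 3))   # all ones
print(torch.zeros(2, 3))  # all zeros
print(torch.eye(3))       # 3x3 identity: ones on the diagonal
# tensor([[1., 0., 0.],
#         [0., 1., 0.],
#         [0., 0., 1.]])
a = torch.rand(2, 4)
print(torch.ones_like(a).shape)  # torch.Size([2, 4]): same shape (and dtype) as a
```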

```python
# arithmetic sequence over [start, end) with the given step
print(torch.arange(0, 10, 2))  # tensor([0, 2, 4, 6, 8])

# `steps` evenly spaced points over [0, 10], both ends included
print(torch.linspace(0, 10, steps=6))  # tensor([ 0.,  2.,  4.,  6.,  8., 10.])
print(torch.logspace(0, 1, steps=5))   # tensor([ 1.0000,  1.7783,  3.1623,  5.6234, 10.0000])

# randperm: a random permutation of 0..n-1 (every number appears exactly once)
print(torch.randperm(10))  # tensor([8, 5, 2, 4, 7, 1, 3, 9, 6, 0])

a = torch.rand(2, 3)
idx = torch.randperm(2)
print(idx)     # tensor([1, 0])
print(a)
print(a[idx])  # the rows of a, reordered by idx
# tensor([[0.7896, 0.0143, 0.7092],
#         [0.8881, 0.5194, 0.6708]])
# tensor([[0.8881, 0.5194, 0.6708],
#         [0.7896, 0.0143, 0.7092]])
```
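randperm is commonly used to shuffle samples and their labels with the same permutation, so that each pair stays aligned. Here is a minimal sketch with made-up data and labels (each label is 10 times the sample's value, so alignment is easy to check).

```python
import torch

data = torch.tensor([[1., 1.], [2., 2.], [3., 3.], [4., 4.]])  # 4 samples
labels = torch.tensor([10, 20, 30, 40])                        # label of each sample

idx = torch.randperm(data.size(0))  # one shared permutation
data_shuffled = data[idx]
labels_shuffled = labels[idx]
print(data_shuffled[:, 0] * 10 == labels_shuffled)  # all True: the pairs stay aligned
```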

3. Indexing and Slicing

```python
# indexing and slicing
a = torch.rand(4, 3, 28, 28)    # 4 images, 3 channels each, each channel 28x28
print(a[0].shape)               # torch.Size([3, 28, 28])
print(a[0, 0].shape)            # torch.Size([28, 28])
print(a[0, 0, 2, 4])            # e.g. tensor(0.5385): a single element
print(a[:2, :1].shape)          # torch.Size([2, 1, 28, 28]), same as a[:2, :1, :, :].shape
print(a[:, :, ::2, ::2].shape)  # torch.Size([4, 3, 14, 14]): every second row and column
```
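Slices also accept negative indices and any positive step, following Python's start:end:step rules. Negative steps such as ::-1 are not supported in PyTorch slicing, so torch.flip is the usual way to reverse a dimension. A few more shape checks on the same kind of tensor:

```python
import torch

a = torch.rand(4, 3, 28, 28)
print(a[-1].shape)            # torch.Size([3, 28, 28]): the last image
print(a[:, -1:].shape)        # torch.Size([4, 1, 28, 28]): the last channel, dimension kept
print(a[:, :, 0:28:7].shape)  # torch.Size([4, 3, 4, 28]): rows 0, 7, 14, 21
flipped = torch.flip(a, dims=[2])  # reverse the row order
print(flipped.shape)          # torch.Size([4, 3, 28, 28])
```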

Selecting by specific indices: index_select

```python
# select by specific index
# 1st argument: the dimension to select along; 2nd argument: the indices (a Tensor)
print(a.index_select(2, torch.arange(8)).shape)  # torch.Size([4, 3, 8, 28])

# ... stands for any number of dimensions
print(a[0, ...].shape)     # torch.Size([3, 28, 28])
print(a[:, 1, ...].shape)  # torch.Size([4, 28, 28])
```
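index_select is most useful when the indices are not a contiguous range. The sketch below picks images 0 and 2 from the batch. The result equals indexing with a list.

```python
import torch

a = torch.rand(4, 3, 28, 28)
picked = a.index_select(0, torch.tensor([0, 2]))
print(picked.shape)                    # torch.Size([2, 3, 28, 28])
print(torch.equal(picked, a[[0, 2]]))  # True
print(a[..., :2].shape)                # torch.Size([4, 3, 28, 2]): ... also works at the front
```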

Selecting with a mask: masked_select

- The result of torch.masked_select(x, mask) is always 1-D

```python
x = torch.randn(3, 4)
print(x)
mask = x.ge(0.5)  # greater or equal: x >= 0.5
print(mask)
# select by mask
print(torch.masked_select(x, mask))  # 1-D result
# tensor([[ 0.2953, -2.4538, -0.0996,  1.6746],
#         [ 0.5318, -1.2000, -0.8475,  0.3967],
#         [-1.2162, -1.4913,  0.5404, -0.1377]])
# tensor([[False, False, False,  True],
#         [ True, False, False, False],
#         [False, False,  True, False]])
# tensor([1.6746, 0.5318, 0.5404])
```
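The same selection is often written with boolean indexing, x[mask]. Here is a sketch with fixed values so the result is predictable. Elements come out in row-major order.

```python
import torch

x = torch.tensor([[0.2, 0.7],
                  [0.9, 0.1]])
mask = x >= 0.5
print(x[mask])                                             # tensor([0.7000, 0.9000])
print(torch.equal(x[mask], torch.masked_select(x, mask)))  # True
```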

Indexing with take

- take: flattens the tensor (in its original element order) and then indexes into the flattened tensor

```python
# select by flattened index
src = torch.tensor([[4, 3, 5], [6, 7, 8]])
print(torch.take(src, torch.tensor([0, 2, 5])))  # tensor([4, 5, 8])
```
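The result matches flattening first and then indexing the 1-D tensor. A flat index k maps back to row k // ncols and column k % ncols. A sketch on the same src:

```python
import torch

src = torch.tensor([[4, 3, 5], [6, 7, 8]])
flat_idx = torch.tensor([0, 2, 5])
print(torch.equal(torch.take(src, flat_idx), src.flatten()[flat_idx]))  # True

ncols = src.size(1)
rows, cols = flat_idx // ncols, flat_idx % ncols  # convert a flat index to (row, col)
print(src[rows, cols])  # tensor([4, 5, 8])
```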

4. Dimension Transforms

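reshape/view

- Both change the shape of a Tensor and return a Tensor with the new shape
- Remember the order in which dimensions were flattened: to restore the original dimensions, you must unflatten them in that same order

```python
a = torch.rand(4, 1, 28, 28)
print(a.view(4, 28*28).shape)     # torch.Size([4, 784]): valid, but the per-image [1, 28, 28] layout is no longer explicit
print(a.reshape(4*28, 28).shape)  # torch.Size([112, 28])
```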
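One practical difference between the two: view only works when the new shape is compatible with the tensor's memory layout, so it fails on a transposed (non-contiguous) tensor. reshape falls back to copying in that case. A sketch:

```python
import torch

t = torch.rand(2, 3).t()  # transpose -> non-contiguous [3, 2]
print(t.is_contiguous())  # False

try:
    t.view(6)
    view_ok = True
except RuntimeError:
    view_ok = False
print(view_ok)                       # False: view cannot flatten this layout
print(t.reshape(6).shape)            # torch.Size([6]): reshape copies when needed
print(t.contiguous().view(6).shape)  # torch.Size([6])
```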

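unsqueeze(index): adding a dimension

- The valid range for index is [-a.dim()-1, a.dim()+1)
  - For example, a.unsqueeze(2) inserts a new dimension of size 1 at position 2. If a has 4 dimensions, the valid range is [-5, 5)
- The new dimension does not change the data itself; it only adds one more level of grouping, and what that level means is up to us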

```python
a = torch.rand(4, 1, 28, 28)
print(a.shape)               # torch.Size([4, 1, 28, 28])
print(a.unsqueeze(0).shape)  # torch.Size([1, 4, 1, 28, 28])
print('=' * 30)

b = torch.tensor([1.2, 2.3])  # torch.Size([2])
print(b)
print(b.unsqueeze(0))   # tensor([[1.2000, 2.3000]])  torch.Size([1, 2])
print(b.unsqueeze(-1))  # torch.Size([2, 1])
print(b.unsqueeze(1))   # torch.Size([2, 1])
# tensor([[1.2000],
#         [2.3000]])
print('=' * 30)

x = torch.rand(32)
print(x.shape)                            # torch.Size([32])
print(x.unsqueeze(1).shape)               # torch.Size([32, 1])
print(x.unsqueeze(1).unsqueeze(2).shape)  # torch.Size([32, 1, 1])
x = x.unsqueeze(1).unsqueeze(2).unsqueeze(0)  # torch.Size([1, 32, 1, 1])
print(x.shape)  # torch.Size([1, 32, 1, 1]); after expanding, x can be added to a [b, 32, h, w] tensor y
```
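A common reason for this unsqueeze chain is adding a per-channel bias to a feature map. The sketch below assumes a feature map of shape [4, 32, 14, 14]. Broadcasting stretches the size-1 dimensions of the bias automatically.

```python
import torch

bias = torch.rand(32)                             # one value per channel
feature = torch.rand(4, 32, 14, 14)               # [b, c, h, w]
b4 = bias.unsqueeze(1).unsqueeze(2).unsqueeze(0)  # [1, 32, 1, 1]
out = feature + b4                                # broadcast to [4, 32, 14, 14]
print(out.shape)                                  # torch.Size([4, 32, 14, 14])
# every pixel of channel 5 received the same bias value
print(torch.allclose(out[2, 5] - feature[2, 5], bias[5].expand(14, 14), atol=1e-6))  # True
```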

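squeeze(index): removing a dimension

- Removes dimensions of size 1
- A dimension whose size is not 1 cannot be removed (the tensor is returned unchanged)

```python
a = torch.rand(1, 32, 1, 1)
print(a.squeeze().shape)    # torch.Size([32]): with no dimension given, every size-1 dimension is removed
print(a.squeeze(0).shape)   # torch.Size([32, 1, 1])
print(a.squeeze(-1).shape)  # torch.Size([1, 32, 1])
print(a.squeeze(1).shape)   # torch.Size([1, 32, 1, 1]): the size is 32, not 1, so nothing is removed
```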
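Because squeeze() with no argument removes every size-1 dimension, it can silently drop the batch dimension when the batch happens to contain a single sample. Here is a sketch of the difference, assuming model outputs of shape [batch, 1].

```python
import torch

scores = torch.rand(1, 1)       # a batch of 1 sample, 1 output each
print(scores.squeeze().shape)   # torch.Size([]): the batch dimension is gone too
print(scores.squeeze(1).shape)  # torch.Size([1]): only the output dimension is removed
```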

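expand: broadcasting (only changes how the data is viewed; no data is copied)

- A dimension of size 1 can be expanded to any size; the new positions repeat that dimension's existing value
- -1 means the size of that dimension stays unchanged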

```python
x = torch.rand(3)
x = x.unsqueeze(1).unsqueeze(2).unsqueeze(0)  # [3] -> [3, 1] -> [3, 1, 1] -> [1, 3, 1, 1]
print(x.shape)  # torch.Size([1, 3, 1, 1])
print(x)
# tensor([[[[0.5826]],
#          [[0.6370]],
#          [[0.6199]]]])
print(x.expand(-1, 3, 3, 2))
# tensor([[[[0.5826, 0.5826],
#           [0.5826, 0.5826],
#           [0.5826, 0.5826]],
#          [[0.6370, 0.6370],
#           [0.6370, 0.6370],
#           [0.6370, 0.6370]],
#          [[0.6199, 0.6199],
#           [0.6199, 0.6199],
#           [0.6199, 0.6199]]]])

y = torch.rand(4, 3, 14, 14)
print(x.expand(4, 3, 14, 14).shape)        # torch.Size([4, 3, 14, 14])
print(x.expand(-1, 3, 3, -1).shape)        # torch.Size([1, 3, 3, 1]); -1 keeps that dimension unchanged
print((x.expand(4, 3, 14, 14) + y).shape)  # torch.Size([4, 3, 14, 14])
```
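The "no data is copied" claim can be checked directly. The expanded tensor shares x's memory and uses a stride of 0 along each expanded dimension, so one stored value is reused for every position. A sketch:

```python
import torch

x = torch.rand(1, 3, 1, 1)
e = x.expand(4, 3, 14, 14)
print(e.data_ptr() == x.data_ptr())  # True: the same underlying memory
print(e.stride())                    # (0, 1, 0, 0): stride 0 means the value is reused
print(e.is_contiguous())             # False
```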

repeat: repeating dimensions (memory is copied; the data really is duplicated)

- repeat allocates new memory; its arguments give the number of times to repeat along each dimension

```python
a = torch.rand(1, 32, 1, 1)
print(a.repeat(4, 32, 1, 1).shape)  # [1x4, 32x32, 1x1, 1x1] -> torch.Size([4, 1024, 1, 1])
print(a.repeat(4, 1, 1, 1).shape)   # torch.Size([4, 32, 1, 1])

y = torch.rand(5)
print(y.shape)            # torch.Size([5])
y_unsqueeze = y.unsqueeze(1)
print(y_unsqueeze.shape)  # torch.Size([5, 1])
print(y_unsqueeze)
# tensor([[0.3372],
#         [0.1044],
#         [0.8305],
#         [0.1960],
#         [0.7674]])
print(y_unsqueeze.repeat(2, 2))  # [5, 1] -> [10, 2]: the column is tiled 2x downwards and 2x across
# tensor([[0.3372, 0.3372],
#         [0.1044, 0.1044],
#         [0.8305, 0.8305],
#         [0.1960, 0.1960],
#         [0.7674, 0.7674],
#         [0.3372, 0.3372],
#         [0.1044, 0.1044],
#         [0.8305, 0.8305],
#         [0.1960, 0.1960],
#         [0.7674, 0.7674]])
```
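Here is a side-by-side comparison. For a size-1 dimension, expand and repeat produce equal values, but only repeat allocates new memory. A sketch:

```python
import torch

x = torch.rand(1, 3)
e = x.expand(4, 3)  # a view, no copy
r = x.repeat(4, 1)  # a real copy, [4, 3]
print(torch.equal(e, r))             # True: the same values
print(e.data_ptr() == x.data_ptr())  # True: shares x's memory
print(r.data_ptr() == x.data_ptr())  # False: new memory
```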

Transpose

.t() only applies to matrices (2-D tensors); 0-D and 1-D tensors are returned unchanged

```python
a = torch.rand(3, 4)
print(a.t().shape)  # torch.Size([4, 3])
```
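For a batch of matrices (3 or more dimensions), .t() raises an error. transpose(-2, -1) swaps the last two dimensions instead. Here is a sketch with an arbitrary batch of 8 matrices.

```python
import torch

batch = torch.rand(8, 3, 4)
try:
    batch.t()
    t_ok = True
except RuntimeError:
    t_ok = False
print(t_ok)                           # False: .t() needs <= 2 dimensions
print(batch.transpose(-2, -1).shape)  # torch.Size([8, 4, 3])
```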

transpose: swapping two dimensions

```python
a = torch.rand(4, 3, 32, 32)
print(a.transpose(1, 3).shape)  # torch.Size([4, 32, 32, 3])
# torch.Size([4, 3072]). Without contiguous(), view would fail (reshape could be used instead);
# contiguous() copies the data into contiguous memory
print(a.transpose(1, 3).contiguous().view(4, 3*32*32).shape)
print(a.transpose(1, 3).contiguous().view(4, 3*32*32).reshape(4, 32, 32, 3).shape)  # torch.Size([4, 32, 32, 3])

# wrong: view(4, 3*32*32).view(4, 3, 32, 32) reads the data back in the wrong order
a1 = a.transpose(1, 3).contiguous().view(4, 3*32*32).view(4, 3, 32, 32)
# right: unflatten in the order the data was flattened, then transpose back
b = a.transpose(1, 3).contiguous().view(4, 3*32*32).view(4, 32, 32, 3).transpose(1, 3)
# [b,c,h,w] -> [b,w,h,c] -> [b,w*h*c] -> [b,w,h,c] -> [b,c,h,w]
# when unflattening, follow the flattened order w, h, c, i.e. 32, 32, 3
print(a.shape, b.shape)
print(torch.all(torch.eq(a, b)))   # tensor(True)
print(torch.all(torch.eq(a, a1)))  # tensor(False)

t = torch.IntTensor([[[1, 2, 3], [4, 5, 6]], [[6, 7, 8], [9, 10, 11]]])
print(t, t.shape)
print(t.transpose(0, 2).contiguous().view(3, 4).view(2, 2, 3))  # does NOT restore the original data
# tensor([[[ 1,  6,  4],
#          [ 9,  2,  7]],
#         [[ 5, 10,  3],
#          [ 8,  6, 11]]], dtype=torch.int32)

# correct version
print(t.transpose(0, 2).contiguous().view(3, 4).view(3, 2, 2).transpose(0, 2))
# tensor([[[ 1,  2,  3],
#          [ 4,  5,  6]],
#         [[ 6,  7,  8],
#          [ 9, 10, 11]]], dtype=torch.int32)
```
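transpose itself does not copy. It returns a view that shares memory with the original, and that sharing is why the result is non-contiguous. The sketch below shows that a write through the view is visible in the original tensor.

```python
import torch

m = torch.zeros(2, 3)
mt = m.transpose(0, 1)     # [3, 2] view of the same memory
mt[2, 1] = 100.0           # write through the view
print(m[1, 2])             # tensor(100.): the original changed too
print(mt.is_contiguous())  # False
```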

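permute (compared with transpose)

- A 4-D tensor is laid out as [batch, channel, h, w]; suppose we want to move channel to the end, giving [batch, h, w, channel]
- With transpose this takes at least two swaps (first dims 1 and 3, then dims 1 and 2)
- With permute we can state directly where every dimension goes, which is more convenient

```python
b = torch.rand(4, 3, 28, 32)
print(b.transpose(1, 3).shape)                  # torch.Size([4, 32, 28, 3])
print(b.transpose(1, 3).transpose(1, 2).shape)  # torch.Size([4, 28, 32, 3])
print(b.permute(0, 2, 3, 1).shape)              # torch.Size([4, 28, 32, 3])
```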
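To undo a permute, apply the inverse permutation, which argsort can compute. In this sketch, inverse_perm is a hypothetical helper written for illustration, not a PyTorch function.

```python
import torch

def inverse_perm(perm):
    # hypothetical helper: inv[j] is the position where original dim j ended up
    return torch.argsort(torch.tensor(perm)).tolist()

b = torch.rand(4, 3, 28, 32)
perm = [0, 2, 3, 1]                  # [b, c, h, w] -> [b, h, w, c]
nhwc = b.permute(*perm)
restored = nhwc.permute(*inverse_perm(perm))
print(inverse_perm(perm))            # [0, 3, 1, 2]
print(torch.equal(restored, b))      # True
```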