The expectation-maximization algorithm, or EM algorithm, is an elegant and powerful method for finding maximum likelihood solutions for models with latent variables.

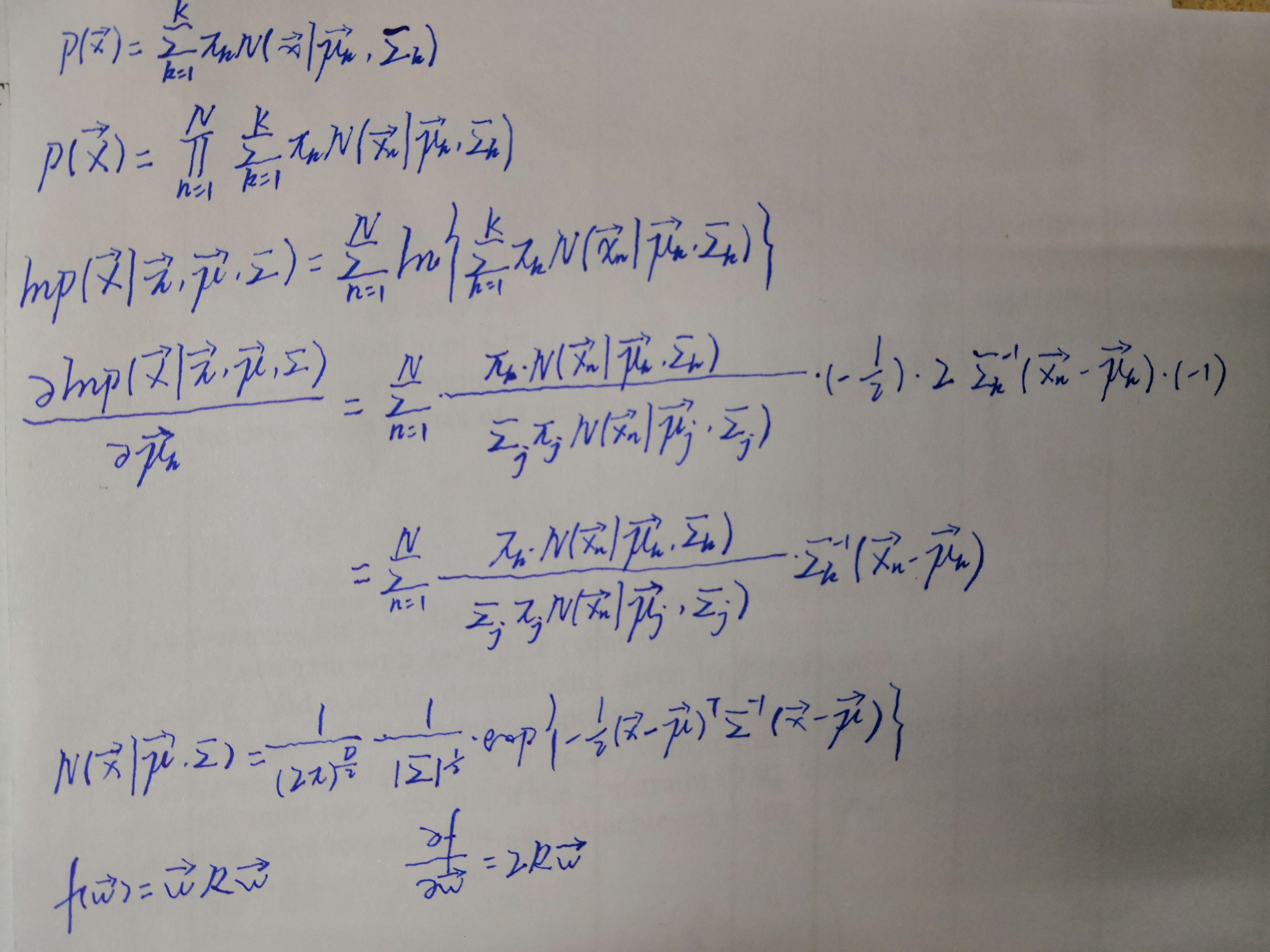

If we assume that the N data points x_1, ..., x_N (the rows of the data matrix X) are drawn independently from a mixture of K Gaussians, then the log of the likelihood function is given by

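$$\ln p(\mathbf{X} \mid \boldsymbol{\pi}, \boldsymbol{\mu}, \boldsymbol{\Sigma}) = \sum_{n=1}^{N} \ln \left\{ \sum_{k=1}^{K} \pi_k \, \mathcal{N}(\mathbf{x}_n \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k) \right\}$$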
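The log likelihood can be evaluated directly from this formula. The following is a minimal NumPy sketch, assuming X is an (N, D) array and the parameters are stored as arrays pis (K,), mus (K, D) and Sigmas (K, D, D); the helper names log_gaussian and gmm_log_likelihood are hypothetical. The inner sum over k is computed with the log-sum-exp trick rather than by summing the densities directly.

```python
import numpy as np


def log_gaussian(X, mu, Sigma):
    """Return ln N(x_n | mu, Sigma) for every row x_n of X, shape (N,)."""
    D = X.shape[1]
    diff = X - mu
    _, logdet = np.linalg.slogdet(Sigma)
    # (x_n - mu)^T Sigma^{-1} (x_n - mu) for each row, via a linear solve
    maha = np.sum(diff * np.linalg.solve(Sigma, diff.T).T, axis=1)
    return -0.5 * (D * np.log(2 * np.pi) + logdet + maha)


def gmm_log_likelihood(X, pis, mus, Sigmas):
    """ln p(X | pi, mu, Sigma) for X (N, D), pis (K,), mus (K, D), Sigmas (K, D, D)."""
    # log_terms[n, k] = ln pi_k + ln N(x_n | mu_k, Sigma_k)
    log_terms = np.column_stack(
        [np.log(pis[k]) + log_gaussian(X, mus[k], Sigmas[k]) for k in range(len(pis))]
    )
    # Inner sum over k with log-sum-exp (avoids underflow), then outer sum over n
    m = log_terms.max(axis=1, keepdims=True)
    return float(np.sum(m[:, 0] + np.log(np.exp(log_terms - m).sum(axis=1))))
```

Working in log space matters in practice: for a point far from every component, each term π_k N(x_n | μ_k, Σ_k) can underflow to zero in double precision, and the naive logarithm of their sum would then be -inf.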

EM for Gaussian Mixtures

Given a Gaussian mixture model, the goal is to maximize the likelihood function with respect to the parameters (comprising the means and covariances of the components and the mixing coefficients).
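Setting the derivatives of the log likelihood with respect to μ_k, Σ_k and π_k to zero (using a Lagrange multiplier to enforce Σ_k π_k = 1) gives the standard conditions below. They are written in terms of the responsibilities γ(z_nk), the posterior probability that component k generated x_n, and N_k, the effective number of points assigned to component k. These are not a closed-form solution, because γ(z_nk) itself depends on the parameters; this coupling is what motivates an iterative scheme.

$$
\begin{aligned}
\gamma(z_{nk}) &= \frac{\pi_k \, \mathcal{N}(\mathbf{x}_n \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k)}{\sum_{j=1}^{K} \pi_j \, \mathcal{N}(\mathbf{x}_n \mid \boldsymbol{\mu}_j, \boldsymbol{\Sigma}_j)}, \qquad N_k = \sum_{n=1}^{N} \gamma(z_{nk}), \\
\boldsymbol{\mu}_k &= \frac{1}{N_k} \sum_{n=1}^{N} \gamma(z_{nk}) \, \mathbf{x}_n, \\
\boldsymbol{\Sigma}_k &= \frac{1}{N_k} \sum_{n=1}^{N} \gamma(z_{nk}) \, (\mathbf{x}_n - \boldsymbol{\mu}_k)(\mathbf{x}_n - \boldsymbol{\mu}_k)^{\mathsf{T}}, \\
\pi_k &= \frac{N_k}{N}.
\end{aligned}
$$

In EM, the first line is evaluated with the current parameters, and the remaining three are then evaluated with those responsibilities held fixed (the covariance update uses the newly computed μ_k).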

1. Initialize the means μk, covariances Σk and mixing coefficients πk, and evaluate the initial value of the log likelihood.

2. E step. Evaluate the responsibilities, i.e. the posterior probabilities γ(z_nk) that component k generated data point x_n, using the current parameter values.

3. M step. Re-estimate the parameters using the current responsibilities.

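4. Evaluate the log likelihood

$$\ln p(\mathbf{X} \mid \boldsymbol{\pi}, \boldsymbol{\mu}, \boldsymbol{\Sigma}) = \sum_{n=1}^{N} \ln \left\{ \sum_{k=1}^{K} \pi_k \, \mathcal{N}(\mathbf{x}_n \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k) \right\}$$

and check for convergence of either the parameters or the log likelihood. If the convergence criterion is not satisfied, return to step 2.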
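The four steps can be put together into a short loop. The following is a minimal NumPy sketch, not a reference implementation; the function name em_gmm, the initialization scheme (random data points as means, the data covariance for every component, uniform weights), the tolerance tol on the change in log likelihood, and the small ridge reg added to each covariance to keep it invertible are all assumptions rather than part of the algorithm as stated above.

```python
import numpy as np


def log_gaussian(X, mu, Sigma):
    """Return ln N(x_n | mu, Sigma) for every row x_n of X, shape (N,)."""
    D = X.shape[1]
    diff = X - mu
    _, logdet = np.linalg.slogdet(Sigma)
    # Mahalanobis term via a linear solve instead of an explicit inverse
    maha = np.sum(diff * np.linalg.solve(Sigma, diff.T).T, axis=1)
    return -0.5 * (D * np.log(2 * np.pi) + logdet + maha)


def em_gmm(X, K, max_iter=200, tol=1e-6, reg=1e-6, seed=0):
    """Fit a K-component Gaussian mixture to X (shape (N, D)) by EM.

    Returns (pis, mus, Sigmas, log_likelihoods); log_likelihoods holds the
    value at initialization and after every iteration.
    """
    rng = np.random.default_rng(seed)
    N, D = X.shape

    def log_terms(pis, mus, Sigmas):
        # (N, K) matrix with entries ln pi_k + ln N(x_n | mu_k, Sigma_k)
        return np.column_stack(
            [np.log(pis[k]) + log_gaussian(X, mus[k], Sigmas[k]) for k in range(K)]
        )

    def log_mix(L):
        # Row-wise log-sum-exp: ln sum_k exp(L[n, k]), shape (N, 1)
        m = L.max(axis=1, keepdims=True)
        return m + np.log(np.exp(L - m).sum(axis=1, keepdims=True))

    # 1. Initialize (assumed scheme) and evaluate the initial log likelihood
    mus = X[rng.choice(N, size=K, replace=False)].astype(float)
    Sigma0 = np.cov(X, rowvar=False).reshape(D, D) + reg * np.eye(D)
    Sigmas = np.array([Sigma0.copy() for _ in range(K)])
    pis = np.full(K, 1.0 / K)
    L = log_terms(pis, mus, Sigmas)
    log_likelihoods = [log_mix(L).sum()]

    for _ in range(max_iter):
        # 2. E step: responsibilities gamma[n, k] = p(component k | x_n)
        gamma = np.exp(L - log_mix(L))

        # 3. M step: re-estimate the parameters from the responsibilities
        Nk = gamma.sum(axis=0)  # effective number of points per component
        mus = (gamma.T @ X) / Nk[:, None]
        Sigmas = np.empty((K, D, D))
        for k in range(K):
            diff = X - mus[k]
            Sigmas[k] = (gamma[:, k, None] * diff).T @ diff / Nk[k] + reg * np.eye(D)
        pis = Nk / N

        # 4. Evaluate the log likelihood; stop once it changes by less than tol
        L = log_terms(pis, mus, Sigmas)
        log_likelihoods.append(log_mix(L).sum())
        if abs(log_likelihoods[-1] - log_likelihoods[-2]) < tol:
            break

    return pis, mus, Sigmas, log_likelihoods
```

For example, `pis, mus, Sigmas, ll = em_gmm(X, K=3)` would fit a three-component mixture to an (N, D) array X. The recorded values in ll should not decrease from one iteration to the next, which is a useful sanity check on an implementation (the reg term perturbs this only negligibly). Because EM converges to a local maximum, running it from several seeds and keeping the best final log likelihood is a common practice.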