1. Download the Atlas 2.0 source code

https://www.apache.org/dyn/closer.cgi/atlas/2.0.0/apache-atlas-2.0.0-sources.tar.gz

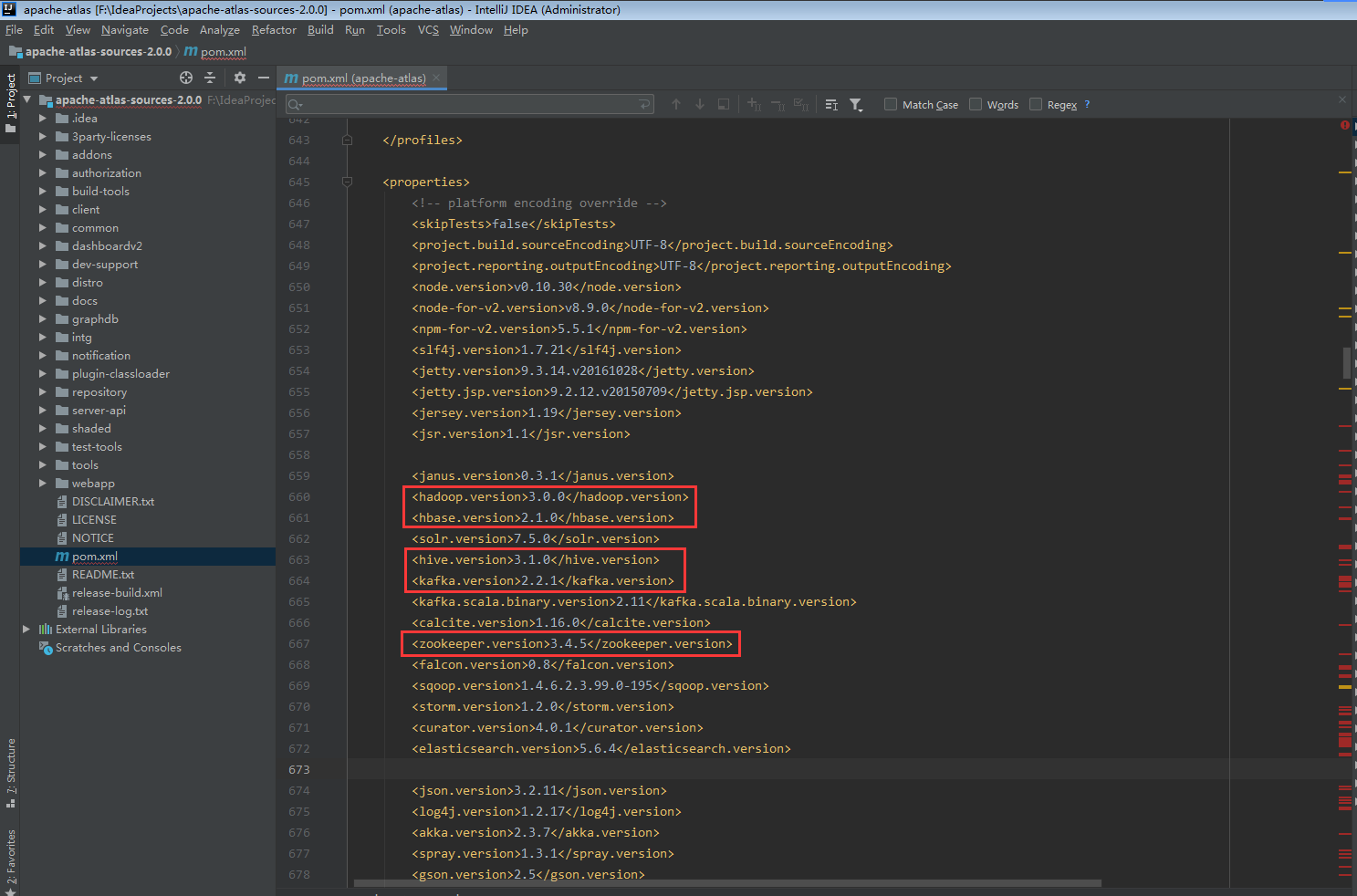

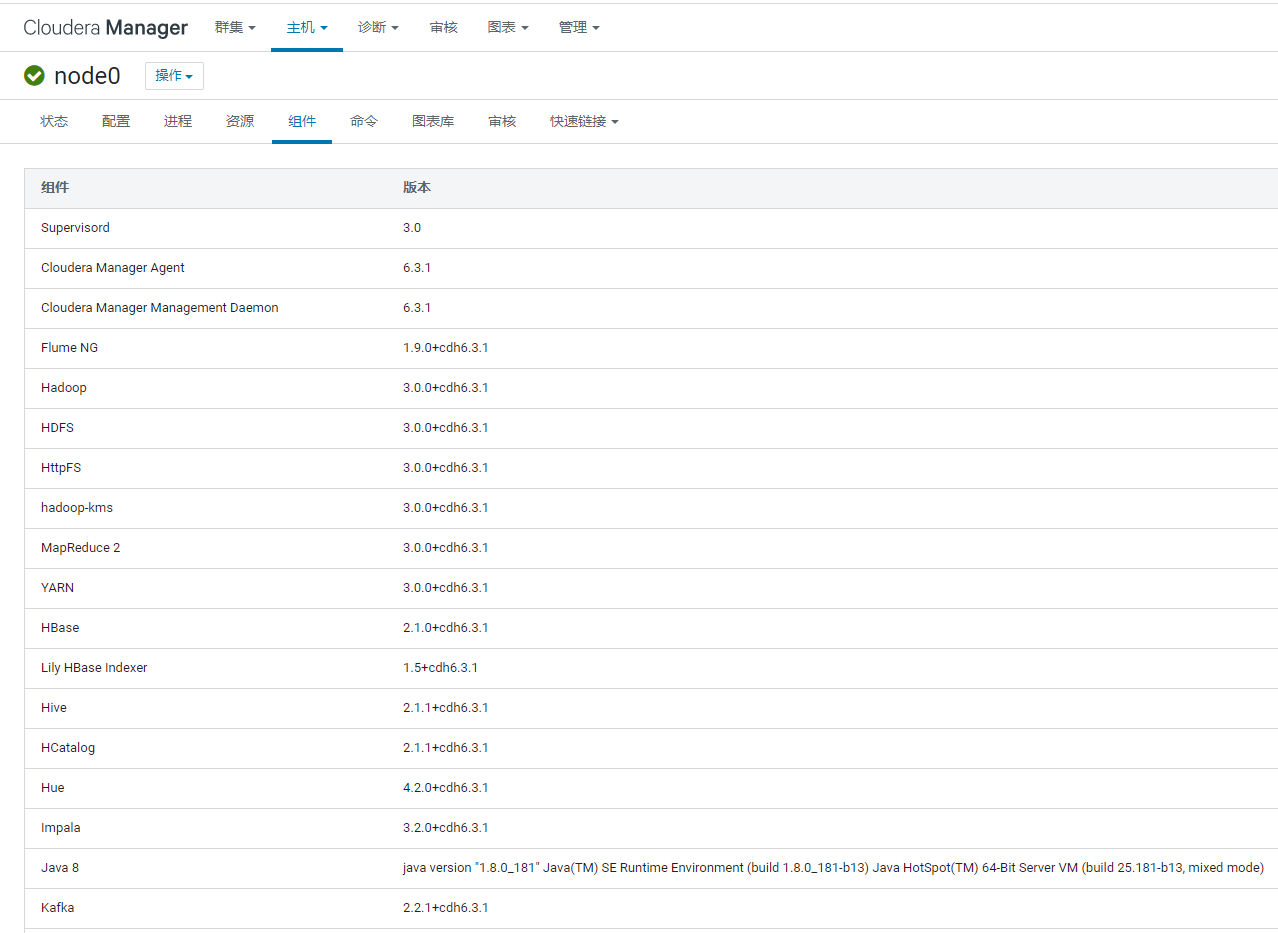
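The closer.cgi link above opens a mirror-selection page rather than the tarball itself. For a scripted download, a minimal sketch could pull the same release from the Apache archive instead. The archive.apache.org path is an assumption based on Apache's usual dist layout, so compare it with the link the mirror page offers. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: download and unpack the Atlas source release.
# Assumption: releases are kept under archive.apache.org/dist/atlas/<version>/.

# atlas_src_url VERSION: print the (assumed) archive URL of the source tarball.
atlas_src_url() {
  local version="$1"
  echo "https://archive.apache.org/dist/atlas/${version}/apache-atlas-${version}-sources.tar.gz"
}

# fetch_atlas_src [VERSION]: download the tarball and extract it into the current directory.
fetch_atlas_src() {
  local version="${1:-2.0.0}"
  local url
  url="$(atlas_src_url "$version")"
  # -f: fail on HTTP errors, -L: follow redirects, -O: keep the remote file name
  curl -fLO "$url" || return 1
  tar -xzf "apache-atlas-${version}-sources.tar.gz"
}
```

For example, `fetch_atlas_src 2.0.0` leaves the extracted source tree next to the tarball, ready to be opened in IDEA.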

2. Open the source in IntelliJ IDEA and edit the dependency versions in the pom file so that Hadoop, HBase, Kafka, and ZooKeeper match the versions running in CDH.

Check the versions of these components in the CDH cluster.
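Where the pom declares these versions as properties, the change can also be scripted. The sketch below assumes the root pom.xml uses the property names hadoop.version, hbase.version, kafka.version and zookeeper.version, and uses CDH 6.3.2 version strings purely as placeholders; the helpers set_pom_property and apply_cdh_versions are hypothetical. Artifacts with a -cdh suffix are not published to Maven Central, so Cloudera's repository would also have to be declared in the pom's `<repositories>` section.

```shell
#!/usr/bin/env bash
# Sketch: pin Hadoop/HBase/Kafka/ZooKeeper versions in the Atlas root pom.xml.
# Assumptions: the root pom declares them as <hadoop.version>, <hbase.version>,
# <kafka.version> and <zookeeper.version> properties; the CDH 6.3.2 values
# below are placeholders for whatever your cluster actually runs.

# set_pom_property NAME VALUE POM: rewrite every <NAME>...</NAME> element in POM.
# The dots in NAME are left unescaped; matching "any character" there is harmless.
set_pom_property() {
  local name="$1" value="$2" pom="$3"
  # -i.bak is accepted by both GNU and BSD sed; the .bak file is removed afterwards.
  sed -i.bak "s|<${name}>[^<]*</${name}>|<${name}>${value}</${name}>|g" "$pom" &&
    rm -f "${pom}.bak"
}

# apply_cdh_versions [POM]: save the original as POM.orig, then update the versions.
apply_cdh_versions() {
  local pom="${1:-pom.xml}"
  [ -f "$pom" ] || { echo "pom not found: $pom" >&2; return 1; }
  cp "$pom" "${pom}.orig" || return 1
  set_pom_property hadoop.version    3.0.0-cdh6.3.2 "$pom" &&
    set_pom_property hbase.version     2.1.0-cdh6.3.2 "$pom" &&
    set_pom_property kafka.version     2.2.1-cdh6.3.2 "$pom" &&
    set_pom_property zookeeper.version 3.4.5-cdh6.3.2 "$pom"
}
```

For example, run `apply_cdh_versions pom.xml` from the source root and then reimport the Maven project in IDEA; `pom.xml.orig` keeps the untouched file for comparison.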

3. Edit the atlas-application.properties configuration file (in distro/src/conf)

```properties
#hbase
#atlas.graph.storage.backend=${graph.storage.backend}
atlas.graph.storage.backend=hbase
atlas.graph.storage.hostname=node0:2181,node1:2181,node2:2181,node3:2181
atlas.graph.storage.hbase.table=apache_atlas_janus

#Solr
#Solr cloud mode properties
atlas.graph.index.search.solr.mode=cloud
atlas.graph.index.search.solr.wait-searcher=true
# ZK quorum setup for solr as comma separated value. Example: 10.1.6.4:2181,10.1.6.5:2181
atlas.graph.index.search.solr.zookeeper-url=node0:2181,node1:2181,node2:2181,node3:2181
# SolrCloud Zookeeper Connection Timeout. Default value is 60000 ms
atlas.graph.index.search.solr.zookeeper-connect-timeout=60000
# SolrCloud Zookeeper Session Timeout. Default value is 60000 ms
atlas.graph.index.search.solr.zookeeper-session-timeout=60000
# Default limit used when limit is not specified in API
atlas.search.defaultlimit=100
# Maximum limit allowed in API. Limits maximum results that can be fetched to make sure the atlas server doesn't run out of memory
atlas.search.maxlimit=10000

#Solr http mode properties
#atlas.graph.index.search.solr.mode=http
#atlas.graph.index.search.solr.http-urls=http://xxxxx:8983/solr

######### Notification Configs #########
atlas.notification.embedded=false
atlas.kafka.data=${sys:atlas.home}/data/kafka
atlas.kafka.zookeeper.connect=node0:2181,node1:2181,node2:2181,node3:2181
# bootstrap.servers must list the Kafka brokers, not ZooKeeper.
# Assumption: brokers run on these hosts on Kafka's default port 9092; adjust to your cluster.
atlas.kafka.bootstrap.servers=node0:9092,node1:9092,node2:9092,node3:9092
atlas.kafka.zookeeper.session.timeout.ms=60000
atlas.kafka.zookeeper.connection.timeout.ms=30000
atlas.kafka.zookeeper.sync.time.ms=20
atlas.kafka.auto.commit.interval.ms=1000
atlas.kafka.hook.group.id=atlas
atlas.kafka.enable.auto.commit=true
atlas.kafka.auto.offset.reset=earliest
atlas.kafka.session.timeout.ms=30000
```
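`atlas.kafka.bootstrap.servers` is a Kafka client setting and should list broker addresses, whereas ZooKeeper's port 2181 belongs in `atlas.kafka.zookeeper.connect`. A small check along these lines can catch that mix-up before building. It is a sketch that assumes plain `key=value` lines without line continuations; the helpers get_prop and check_kafka_bootstrap are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check atlas-application.properties before building.
# Assumption: plain key=value lines, '#' comments, no line continuations.

# get_prop KEY FILE: print the value of the last non-comment KEY=... line (exit 1 if absent).
get_prop() {
  awk -v key="$1" '
    /^[ \t]*#/ { next }                     # skip comment lines
    {
      eq = index($0, "=")
      if (eq == 0) next                     # not a key=value line
      k = substr($0, 1, eq - 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      if (k == key) {
        v = substr($0, eq + 1)
        gsub(/^[ \t]+|[ \t]+$/, "", v)
        val = v; found = 1                  # later definitions win
      }
    }
    END { if (found) print val; else exit 1 }
  ' "$2"
}

# check_kafka_bootstrap FILE: fail if any bootstrap server uses ZooKeeper's port 2181.
check_kafka_bootstrap() {
  local servers
  servers="$(get_prop atlas.kafka.bootstrap.servers "$1")" ||
    { echo "atlas.kafka.bootstrap.servers is not set" >&2; return 1; }
  case ",${servers}," in
    *:2181,*) echo "bootstrap.servers points at ZooKeeper (2181): ${servers}" >&2; return 1 ;;
  esac
  echo "ok: ${servers}"
}
```

For example, `check_kafka_bootstrap distro/src/conf/atlas-application.properties` prints the broker list when it looks sane and exits non-zero otherwise.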

4. Edit atlas-env.sh (in distro/src/conf)

```shell
# HBase integration: the directory below is the HBase conf directory inside the Atlas install;
# the cluster's HBase configuration has to be added to it afterwards
export HBASE_CONF_DIR=/usr/local/src/atlas/apache-atlas-2.0.0/conf/hbase/conf

# false = use the external ZooKeeper and HBase
#export MANAGE_LOCAL_HBASE=false
# false = use the external Solr
#export MANAGE_LOCAL_SOLR=false

# JVM options (tune to the production machines)
export ATLAS_SERVER_OPTS="-server -XX:SoftRefLRUPolicyMSPerMB=0 -XX:+CMSClassUnloadingEnabled -XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled -XX:+PrintTenuringDistribution -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=dumps/atlas_server.hprof -Xloggc:logs/gc-worker.log -verbose:gc -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=1m -XX:+PrintGCDetails -XX:+PrintHeapAtGC -XX:+PrintGCTimeStamps"

# Heap tuning for JDK 1.8 (the settings below need 16 GB of memory)
export ATLAS_SERVER_HEAP="-Xms15360m -Xmx15360m -XX:MaxNewSize=5120m -XX:MetaspaceSize=100M -XX:MaxMetaspaceSize=512m"
```
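The HBASE_CONF_DIR comment says the cluster's HBase configuration has to be added to that directory. A minimal sketch, assuming the CDH HBase client configuration lives in /etc/hbase/conf (the usual CDH client path) and that the Atlas install path matches the one above; copy_hbase_conf is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: copy the cluster's HBase client config into Atlas's HBASE_CONF_DIR.
# Assumptions: /etc/hbase/conf is the CDH HBase client config directory and
# the default destination is the HBASE_CONF_DIR exported in atlas-env.sh.

# copy_hbase_conf [SRC] [DEST]
copy_hbase_conf() {
  local src="${1:-/etc/hbase/conf}"
  local dest="${2:-/usr/local/src/atlas/apache-atlas-2.0.0/conf/hbase/conf}"
  # hbase-site.xml is required; the Hadoop client files are copied only if present.
  [ -f "$src/hbase-site.xml" ] || { echo "missing $src/hbase-site.xml" >&2; return 1; }
  mkdir -p "$dest" || return 1
  local f
  for f in hbase-site.xml core-site.xml hdfs-site.xml; do
    if [ -f "$src/$f" ]; then
      cp "$src/$f" "$dest/" || return 1
    fi
  done
}
```

Copying is the simplest option; pointing HBASE_CONF_DIR directly at /etc/hbase/conf (or symlinking it) is an alternative that would pick up later client-config redeploys from Cloudera Manager.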

5. Edit atlas-log4j.xml (in the same directory)

Uncomment the following block (i.e. enable it):

```xml
<appender name="perf_appender" class="org.apache.log4j.DailyRollingFileAppender">
    <param name="file" value="${atlas.log.dir}/atlas_perf.log" />
    <param name="datePattern" value="'.'yyyy-MM-dd" />
    <param name="append" value="true" />
    <layout class="org.apache.log4j.PatternLayout">
        <param name="ConversionPattern" value="%d|%t|%m%n" />
    </layout>
</appender>

<logger name="org.apache.atlas.perf" additivity="false">
    <level value="debug" />
    <appender-ref ref="perf_appender" />
</logger>
```

6. Build and package

```shell
mvn clean -DskipTests install
mvn clean -DskipTests package -Pdist
```
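The two Maven commands above first install all modules into the local repository and then build the distribution. A small wrapper might look like the sketch below. The MAVEN_OPTS heap size is an assumed starting point rather than a requirement, and build_atlas and list_atlas_tarballs are hypothetical helpers; the packaged archives are expected under distro/target, but the exact file names depend on the version and profile.

```shell
#!/usr/bin/env bash
# Sketch: run both build steps from the source root, then list the packaged tarballs.
# Assumptions: a 2 GB Maven heap is enough (raise it if the build runs out of memory),
# and the -Pdist output lands in distro/target/.

# build_atlas [SRC_ROOT]: install all modules, then package the distribution.
build_atlas() {
  local src_root="${1:-.}"
  (
    cd "$src_root" || exit 1
    # Keep any MAVEN_OPTS the caller already set; otherwise use the assumed default.
    export MAVEN_OPTS="${MAVEN_OPTS:--Xms2g -Xmx2g}"
    mvn clean -DskipTests install && mvn clean -DskipTests package -Pdist
  )
}

# list_atlas_tarballs [SRC_ROOT]: print the apache-atlas-*.tar.gz files in distro/target.
list_atlas_tarballs() {
  local src_root="${1:-.}"
  find "$src_root/distro/target" -maxdepth 1 -name 'apache-atlas-*.tar.gz' | sort
}
```

For example, `build_atlas . && list_atlas_tarballs .` shows which archives are available to copy to the CDH nodes.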

Reference blogs:

CDH integration with Atlas

https://blog.csdn.net/tom_fans/article/details/85506662

https://blog.csdn.net/fairynini/article/details/106134361
