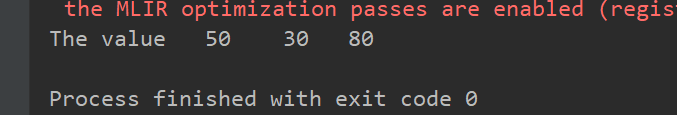

Stage 1: Using variables

```python
"""
__author__="dazhi"
2021/3/6-12:52
"""
import os

# Hide TensorFlow INFO and WARNING logs (set before importing TensorFlow)
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf


def variable_demo():
    """Basic structure of a TensorFlow (1.x style) program: demonstrate variables."""
    # TF 2.x runs eagerly by default; switch to graph mode so that Session/sess.run() work
    tf.compat.v1.disable_eager_execution()

    # Create the variables
    a1 = tf.Variable(initial_value=50)
    b1 = tf.Variable(initial_value=30)
    c1 = tf.add(a1, b1)

    # Op that initializes all variables; a variable has no value until it runs
    init = tf.compat.v1.global_variables_initializer()

    # Open a session: nothing is computed until the session runs it
    with tf.compat.v1.Session() as sess:
        sess.run(init)
        # Fetch both variable values and their sum
        a2, b2, c2 = sess.run([a1, b1, c1])
        print("The value ", a2, " ", b2, " ", c2)
    return None


if __name__ == "__main__":
    variable_demo()
```

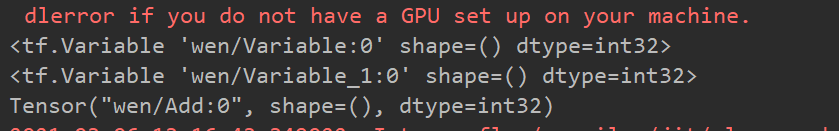
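Why must `sess.run(init)` come first? In graph mode, `tf.Variable(...)` and `tf.add(...)` only describe the computation; a variable gets a value only when its initializer runs inside a session. The toy model below is a hypothetical pure-Python illustration of that idea: the classes `Variable`, `Add` and `ToySession` are made up for this sketch and are not TensorFlow APIs.

```python
class Variable:
    """Toy graph node with an initial value; it has no value until a session initializes it."""
    def __init__(self, initial_value):
        self.initial_value = initial_value


class Add:
    """Toy graph node that only records 'a + b'; nothing is added when it is created."""
    def __init__(self, a, b):
        self.a = a
        self.b = b


class ToySession:
    """Hypothetical stand-in for a session: it owns the variable values."""
    def __init__(self):
        self.values = {}  # per-session variable state

    def initialize(self, variables):
        """Counterpart of sess.run(global_variables_initializer())."""
        for v in variables:
            self.values[v] = v.initial_value

    def run(self, fetches):
        """Evaluate one node or a list of nodes, like sess.run."""
        if isinstance(fetches, list):
            return [self.run(node) for node in fetches]
        if isinstance(fetches, Variable):
            if fetches not in self.values:
                raise RuntimeError("variable used before initialization")
            return self.values[fetches]
        if isinstance(fetches, Add):
            return self.run(fetches.a) + self.run(fetches.b)
        raise TypeError("unknown node: %r" % (fetches,))


a1 = Variable(50)
b1 = Variable(30)
c1 = Add(a1, b1)  # only builds the graph

sess = ToySession()
try:
    sess.run(c1)  # fails: the variables were never initialized
except RuntimeError as exc:
    print("Before init:", exc)

sess.initialize([a1, b1])
print(sess.run([a1, b1, c1]))  # [50, 30, 80]
```

The split between building nodes and running them in a session is the same one the TensorFlow code above relies on; the toy simply makes the "uninitialized" failure explicit.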

Stage 2: Changing the variables' namespace (variable scope)

```python
"""
__author__="dazhi"
2021/3/6-12:52
"""
import os

# Hide TensorFlow INFO and WARNING logs (set before importing TensorFlow)
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf


def variable_demo():
    """Basic structure of a TensorFlow (1.x style) program: variables inside a scope."""
    # Switch to graph mode so that Session/sess.run() work in TF 2.x
    tf.compat.v1.disable_eager_execution()

    # Create the variables inside the scope "wen": their names get the prefix "wen/"
    with tf.compat.v1.variable_scope("wen"):
        a1 = tf.Variable(initial_value=50)
        b1 = tf.Variable(initial_value=30)
        c1 = tf.add(a1, b1)
    # Printing the objects shows their (scoped) names
    print(a1)
    print(b1)
    print(c1)

    # Op that initializes all variables
    init = tf.compat.v1.global_variables_initializer()

    # Open a session: nothing is computed until the session runs it
    with tf.compat.v1.Session() as sess:
        sess.run(init)
        # Fetch both variable values and their sum
        a2, b2, c2 = sess.run([a1, b1, c1])
        print("The value ", a2, " ", b2, " ", c2)
    return None


if __name__ == "__main__":
    variable_demo()
```

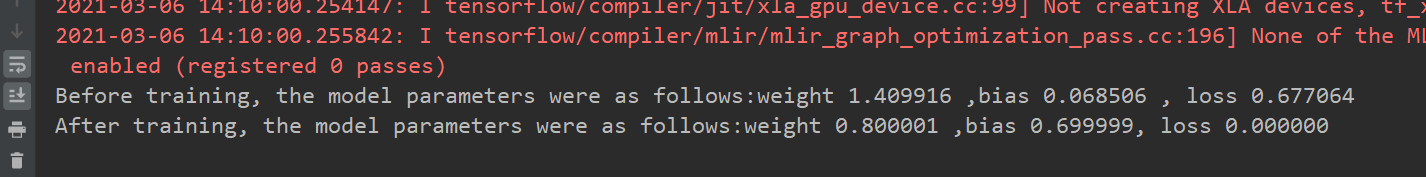
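The printed names carry the scope prefix (`wen/...`), and because both variables use the default name, TensorFlow makes the second one unique by appending a suffix (`wen/Variable_1`). The hypothetical `ToyGraph` class below mimics only that naming convention (scope prefix plus `_N` suffix for duplicates) in plain Python; it is a simplified sketch, not how TensorFlow's graph actually stores names.

```python
from contextlib import contextmanager


class ToyGraph:
    """Hypothetical toy that reproduces only the 'scope/name_N' naming convention."""

    def __init__(self):
        self._scopes = []   # stack of currently open scopes
        self._used = set()  # every full name handed out so far

    @contextmanager
    def scope(self, name):
        # Entering a scope pushes a prefix; leaving it pops the prefix again
        self._scopes.append(name)
        try:
            yield
        finally:
            self._scopes.pop()

    def unique_name(self, base):
        prefix = "/".join(self._scopes)
        full = prefix + "/" + base if prefix else base
        candidate, i = full, 0
        # Duplicate names get _1, _2, ... appended
        while candidate in self._used:
            i += 1
            candidate = "%s_%d" % (full, i)
        self._used.add(candidate)
        return candidate


g = ToyGraph()
names = []
with g.scope("wen"):
    names.append(g.unique_name("Variable"))
    names.append(g.unique_name("Variable"))
    names.append(g.unique_name("Add"))
names.append(g.unique_name("Variable"))  # outside the scope: no prefix
print(names)  # ['wen/Variable', 'wen/Variable_1', 'wen/Add', 'Variable']
```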

Stage 3: Implementing linear regression from scratch

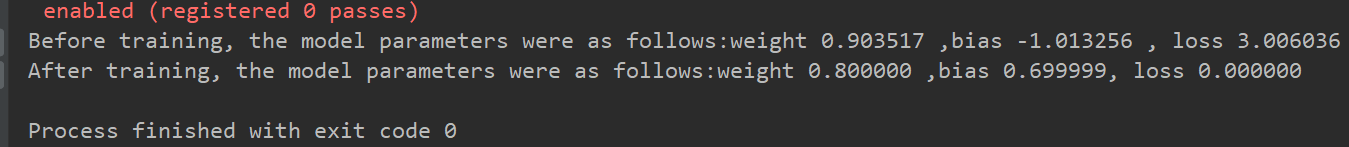

```python
"""
__author__="dazhi"
2021/3/6-13:35
"""
import os

# Hide TensorFlow INFO and WARNING logs (set before importing TensorFlow)
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf


def linear_regression():
    """A self-implemented linear regression (basic structure of a TensorFlow program)."""
    # Switch to graph mode so that Session/sess.run() work in TF 2.x
    tf.compat.v1.disable_eager_execution()

    # 1 Prepare data: 100 samples of y = 0.8 * x + 0.7
    x = tf.compat.v1.random_normal(shape=[100, 1])
    y_true = tf.matmul(x, [[0.8]]) + 0.7

    # 2 Construct the model; its parameters are variables
    weights = tf.Variable(initial_value=tf.compat.v1.random_normal(shape=[1, 1]))
    bias = tf.Variable(initial_value=tf.compat.v1.random_normal(shape=[1, 1]))
    y_predict = tf.matmul(x, weights) + bias

    # 3 Construct the loss function (mean squared error)
    error = tf.reduce_mean(tf.square(y_predict - y_true))

    # 4 Optimize the loss function with gradient descent
    optimizer = tf.compat.v1.train.GradientDescentOptimizer(learning_rate=0.01).minimize(error)

    # Op that initializes all variables
    init = tf.compat.v1.global_variables_initializer()

    # Open a session to run the graph
    with tf.compat.v1.Session() as sess:
        sess.run(init)

        # Print the randomly initialized parameters ([0, 0] takes the scalar out of the 1x1 array)
        print("Before training, the model parameters were: weight %f, bias %f, loss %f"
              % (weights.eval()[0, 0], bias.eval()[0, 0], error.eval()))

        # Start training
        for i in range(1000):
            sess.run(optimizer)

        print("After training, the model parameters were: weight %f, bias %f, loss %f"
              % (weights.eval()[0, 0], bias.eval()[0, 0], error.eval()))
    return None


if __name__ == "__main__":
    linear_regression()
```

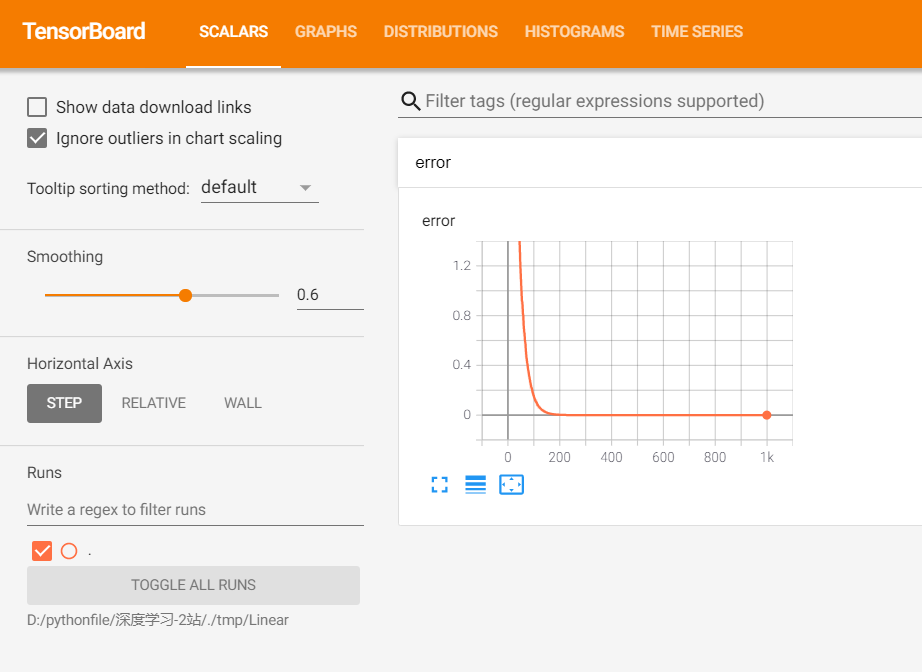

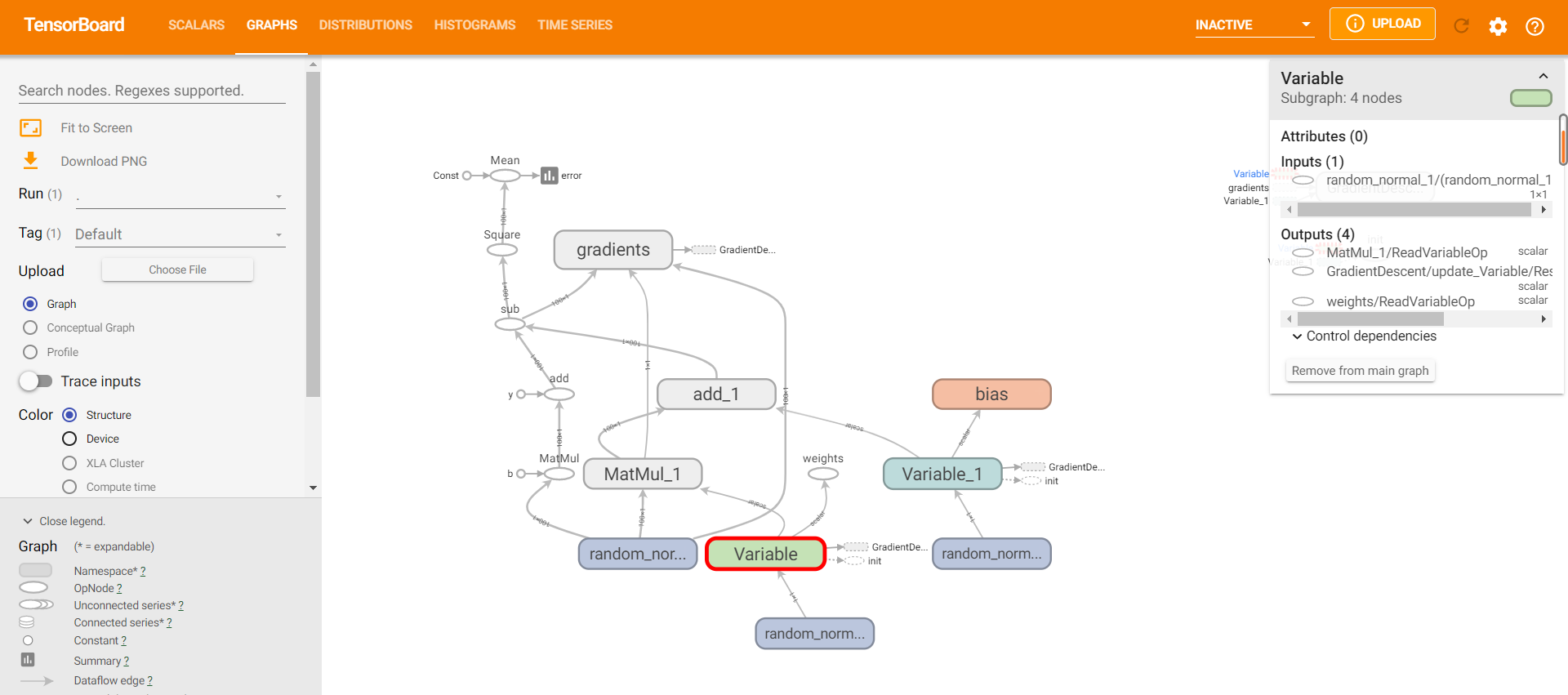

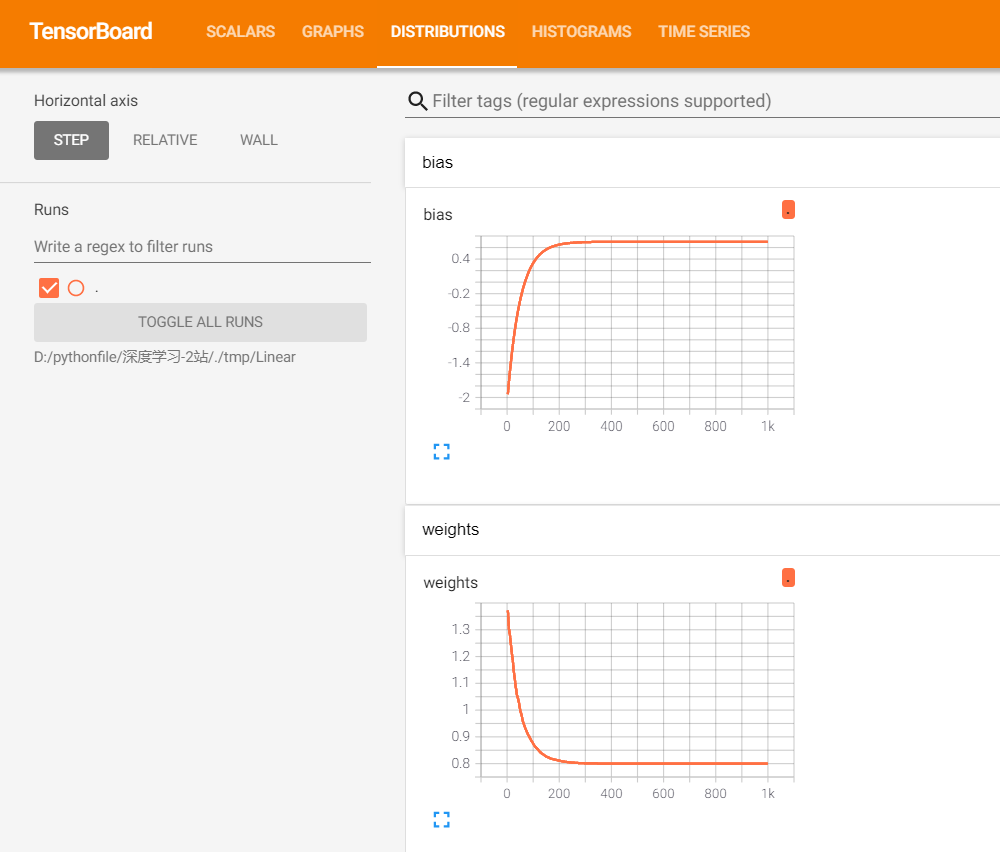
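Each `sess.run(optimizer)` performs one gradient-descent step on the mean squared error. For this model the gradients can be written by hand: with residual r = w·x + b − y, ∂loss/∂w = 2·mean(r·x) and ∂loss/∂b = 2·mean(r). The plain-Python sketch below repeats the training loop with those formulas. It makes one simplifying assumption: it draws the 100 samples once, whereas the TF graph draws a fresh `x` every time `sess.run` evaluates `random_normal`. The function name `train_linear_regression` is hypothetical.

```python
import random


def train_linear_regression(steps=1000, learning_rate=0.01, seed=0):
    rng = random.Random(seed)
    # Same data recipe as the TF code: x ~ N(0, 1), y = 0.8 * x + 0.7 (no noise)
    xs = [rng.gauss(0.0, 1.0) for _ in range(100)]
    ys = [0.8 * x + 0.7 for x in xs]
    # Random initial parameters, like random_normal(shape=[1, 1])
    w, b = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    n = len(xs)
    for _ in range(steps):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of mean(r^2) with respect to w and b
        grad_w = 2.0 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = 2.0 * sum(residuals) / n
        # One gradient-descent step, as GradientDescentOptimizer does
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    loss = sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n
    return w, b, loss


w, b, loss = train_linear_regression()
print("After training: weight %f, bias %f, loss %f" % (w, b, loss))
```

With a learning rate of 0.01 the error shrinks by roughly 2% per step on this data, so 1000 steps are enough for the weight and bias to approach 0.8 and 0.7.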

Stage 4: Visualizing the data in the linear regression

```python
"""
__author__="dazhi"
2021/3/6-13:35
"""
import os

# Hide TensorFlow INFO and WARNING logs (set before importing TensorFlow)
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf


def linear_regression():
    """A self-implemented linear regression, logged for TensorBoard."""
    # Switch to graph mode so that Session/sess.run() work in TF 2.x
    tf.compat.v1.disable_eager_execution()

    # 1 Prepare data: 100 samples of y = 0.8 * x + 0.7
    x = tf.compat.v1.random_normal(shape=[100, 1])
    y_true = tf.matmul(x, [[0.8]]) + 0.7

    # 2 Construct the model; its parameters are variables
    weights = tf.Variable(initial_value=tf.compat.v1.random_normal(shape=[1, 1]))
    bias = tf.Variable(initial_value=tf.compat.v1.random_normal(shape=[1, 1]))
    y_predict = tf.matmul(x, weights) + bias

    # 3 Construct the loss function (mean squared error)
    error = tf.reduce_mean(tf.square(y_predict - y_true))

    # 4 Optimize the loss function with gradient descent
    optimizer = tf.compat.v1.train.GradientDescentOptimizer(learning_rate=0.01).minimize(error)

    # 2++ Collect the tensors to record
    tf.compat.v1.summary.scalar("error", error)
    tf.compat.v1.summary.histogram("weights", weights)
    tf.compat.v1.summary.histogram("bias", bias)

    # 3++ Merge all summaries into one op
    merged = tf.compat.v1.summary.merge_all()

    # Op that initializes all variables
    init = tf.compat.v1.global_variables_initializer()

    # Open a session to run the graph
    with tf.compat.v1.Session() as sess:
        sess.run(init)

        # 1++ Create the event file (it also records the graph)
        file_writer = tf.compat.v1.summary.FileWriter("./tmp/Linear", graph=sess.graph)

        # Print the randomly initialized parameters
        print("Before training, the model parameters were: weight %f, bias %f, loss %f"
              % (weights.eval()[0, 0], bias.eval()[0, 0], error.eval()))

        # Start training
        for i in range(1000):
            sess.run(optimizer)
            # Run the merged summary op
            summary = sess.run(merged)
            # Write this iteration's summaries to the event file
            file_writer.add_summary(summary, i)

        # Flush and close the event file
        file_writer.close()

        print("After training, the model parameters were: weight %f, bias %f, loss %f"
              % (weights.eval()[0, 0], bias.eval()[0, 0], error.eval()))
    return None


if __name__ == "__main__":
    linear_regression()
```

The event file is written to `./tmp/Linear`; start TensorBoard with `tensorboard --logdir=./tmp/Linear` to view the loss curve, the histograms of the weight and bias, and the graph.

Stage 5: Adding namespaces to make the code clearer

```python
"""
__author__="dazhi"
2021/3/6-13:35
"""
import os

# Hide TensorFlow INFO and WARNING logs (set before importing TensorFlow)
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf


def linear_regression():
    """A self-implemented linear regression, with each step in its own scope."""
    # Switch to graph mode so that Session/sess.run() work in TF 2.x
    tf.compat.v1.disable_eager_execution()

    # 1 Prepare data
    with tf.compat.v1.variable_scope("prepare_data"):
        x = tf.compat.v1.random_normal(shape=[100, 1])
        y_true = tf.matmul(x, [[0.8]]) + 0.7

    # 2 Construct the model; its parameters are variables
    with tf.compat.v1.variable_scope("create_model"):
        weights = tf.Variable(initial_value=tf.compat.v1.random_normal(shape=[1, 1]))
        bias = tf.Variable(initial_value=tf.compat.v1.random_normal(shape=[1, 1]))
        y_predict = tf.matmul(x, weights) + bias

    # 3 Construct the loss function
    with tf.compat.v1.variable_scope("loss_function"):
        error = tf.reduce_mean(tf.square(y_predict - y_true))

    # 4 Optimize the loss function
    with tf.compat.v1.variable_scope("optimizer"):
        optimizer = tf.compat.v1.train.GradientDescentOptimizer(learning_rate=0.01).minimize(error)

    # 2++ Collect the tensors to record
    tf.compat.v1.summary.scalar("error", error)
    tf.compat.v1.summary.histogram("weights", weights)
    tf.compat.v1.summary.histogram("bias", bias)

    # 3++ Merge all summaries into one op
    merged = tf.compat.v1.summary.merge_all()

    # Op that initializes all variables
    init = tf.compat.v1.global_variables_initializer()

    # Open a session to run the graph
    with tf.compat.v1.Session() as sess:
        sess.run(init)

        # 1++ Create the event file
        file_writer = tf.compat.v1.summary.FileWriter("./tmp/Linear", graph=sess.graph)

        # Print the randomly initialized parameters
        print("Before training, the model parameters were: weight %f, bias %f, loss %f"
              % (weights.eval()[0, 0], bias.eval()[0, 0], error.eval()))

        # Start training
        for i in range(1000):
            sess.run(optimizer)
            # Run the merged summary op
            summary = sess.run(merged)
            # Write this iteration's summaries to the event file
            file_writer.add_summary(summary, i)

        # Flush and close the event file
        file_writer.close()

        print("After training, the model parameters were: weight %f, bias %f, loss %f"
              % (weights.eval()[0, 0], bias.eval()[0, 0], error.eval()))
    return None


if __name__ == "__main__":
    linear_regression()
```

Stage 6: Changing op names

```python
"""
__author__="dazhi"
2021/3/6-13:35
"""
import os

# Hide TensorFlow INFO and WARNING logs (set before importing TensorFlow)
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf


def linear_regression():
    """A self-implemented linear regression with named ops and variables."""
    # Switch to graph mode so that Session/sess.run() work in TF 2.x
    tf.compat.v1.disable_eager_execution()

    # 1 Prepare data
    with tf.compat.v1.variable_scope("prepare_data"):
        x = tf.compat.v1.random_normal(shape=[100, 1], name="feature")
        y_true = tf.matmul(x, [[0.8]]) + 0.7

    # 2 Construct the model; the name= arguments replace the default op names
    with tf.compat.v1.variable_scope("create_model"):
        weights = tf.Variable(initial_value=tf.compat.v1.random_normal(shape=[1, 1]), name="Weights")
        bias = tf.Variable(initial_value=tf.compat.v1.random_normal(shape=[1, 1]), name="Bias")
        y_predict = tf.matmul(x, weights) + bias

    # 3 Construct the loss function
    with tf.compat.v1.variable_scope("loss_function"):
        error = tf.reduce_mean(tf.square(y_predict - y_true))

    # 4 Optimize the loss function
    with tf.compat.v1.variable_scope("optimizer"):
        optimizer = tf.compat.v1.train.GradientDescentOptimizer(learning_rate=0.01).minimize(error)

    # 2++ Collect the tensors to record
    tf.compat.v1.summary.scalar("error", error)
    tf.compat.v1.summary.histogram("weights", weights)
    tf.compat.v1.summary.histogram("bias", bias)

    # 3++ Merge all summaries into one op
    merged = tf.compat.v1.summary.merge_all()

    # Op that initializes all variables
    init = tf.compat.v1.global_variables_initializer()

    # Open a session to run the graph
    with tf.compat.v1.Session() as sess:
        sess.run(init)

        # 1++ Create the event file
        file_writer = tf.compat.v1.summary.FileWriter("./tmp/Linear", graph=sess.graph)

        # Print the randomly initialized parameters
        print("Before training, the model parameters were: weight %f, bias %f, loss %f"
              % (weights.eval()[0, 0], bias.eval()[0, 0], error.eval()))

        # Start training
        for i in range(1000):
            sess.run(optimizer)
            # Run the merged summary op
            summary = sess.run(merged)
            # Write this iteration's summaries to the event file
            file_writer.add_summary(summary, i)

        # Flush and close the event file
        file_writer.close()

        print("After training, the model parameters were: weight %f, bias %f, loss %f"
              % (weights.eval()[0, 0], bias.eval()[0, 0], error.eval()))
    return None


if __name__ == "__main__":
    linear_regression()
```

Stage 7: Saving and loading the model

```python
"""
__author__="dazhi"
2021/3/6-13:35
"""
import os

# Hide TensorFlow INFO and WARNING logs (set before importing TensorFlow)
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf


def linear_regression():
    """A self-implemented linear regression that saves its parameters."""
    # Switch to graph mode so that Session/sess.run() work in TF 2.x
    tf.compat.v1.disable_eager_execution()

    # 1 Prepare data
    with tf.compat.v1.variable_scope("prepare_data"):
        x = tf.compat.v1.random_normal(shape=[100, 1], name="feature")
        y_true = tf.matmul(x, [[0.8]]) + 0.7

    # 2 Construct the model
    with tf.compat.v1.variable_scope("create_model"):
        weights = tf.Variable(initial_value=tf.compat.v1.random_normal(shape=[1, 1]), name="Weights")
        bias = tf.Variable(initial_value=tf.compat.v1.random_normal(shape=[1, 1]), name="Bias")
        y_predict = tf.matmul(x, weights) + bias

    # 3 Construct the loss function
    with tf.compat.v1.variable_scope("loss_function"):
        error = tf.reduce_mean(tf.square(y_predict - y_true))

    # 4 Optimize the loss function
    with tf.compat.v1.variable_scope("optimizer"):
        optimizer = tf.compat.v1.train.GradientDescentOptimizer(learning_rate=0.01).minimize(error)

    # 2++ Collect the tensors to record
    tf.compat.v1.summary.scalar("error", error)
    tf.compat.v1.summary.histogram("weights", weights)
    tf.compat.v1.summary.histogram("bias", bias)

    # 3++ Merge all summaries into one op
    merged = tf.compat.v1.summary.merge_all()

    # Create a Saver object (by default it saves and restores all variables)
    saver = tf.compat.v1.train.Saver()

    # Op that initializes all variables
    init = tf.compat.v1.global_variables_initializer()

    # Open a session to run the graph
    with tf.compat.v1.Session() as sess:
        sess.run(init)

        # 1++ Create the event file
        file_writer = tf.compat.v1.summary.FileWriter("./tmp/Linear", graph=sess.graph)

        # Print the randomly initialized parameters
        print("Before training, the model parameters were: weight %f, bias %f, loss %f"
              % (weights.eval()[0, 0], bias.eval()[0, 0], error.eval()))

        # Make sure the checkpoint directory exists before saving
        os.makedirs("./tmp/model", exist_ok=True)

        # Start training
        for i in range(1000):
            sess.run(optimizer)
            # Run the merged summary op
            summary = sess.run(merged)
            # Write this iteration's summaries to the event file
            file_writer.add_summary(summary, i)
            # Save the model every 10 iterations
            if i % 10 == 0:
                saver.save(sess, "./tmp/model/my_linear.ckpt")

        # Flush and close the event file
        file_writer.close()

        print("After training, the model parameters were: weight %f, bias %f, loss %f"
              % (weights.eval()[0, 0], bias.eval()[0, 0], error.eval()))
    return None


if __name__ == "__main__":
    linear_regression()
```

To load the model, comment out the training loop and restore the saved checkpoint instead:

```python
"""
__author__="dazhi"
2021/3/6-13:35
"""
import os

# Hide TensorFlow INFO and WARNING logs (set before importing TensorFlow)
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf


def linear_regression():
    """A self-implemented linear regression that loads previously saved parameters."""
    # Switch to graph mode so that Session/sess.run() work in TF 2.x
    tf.compat.v1.disable_eager_execution()

    # 1 Prepare data
    with tf.compat.v1.variable_scope("prepare_data"):
        x = tf.compat.v1.random_normal(shape=[100, 1], name="feature")
        y_true = tf.matmul(x, [[0.8]]) + 0.7

    # 2 Construct the model (same variable names as when saving)
    with tf.compat.v1.variable_scope("create_model"):
        weights = tf.Variable(initial_value=tf.compat.v1.random_normal(shape=[1, 1]), name="Weights")
        bias = tf.Variable(initial_value=tf.compat.v1.random_normal(shape=[1, 1]), name="Bias")
        y_predict = tf.matmul(x, weights) + bias

    # 3 Construct the loss function
    with tf.compat.v1.variable_scope("loss_function"):
        error = tf.reduce_mean(tf.square(y_predict - y_true))

    # 4 Optimize the loss function
    with tf.compat.v1.variable_scope("optimizer"):
        optimizer = tf.compat.v1.train.GradientDescentOptimizer(learning_rate=0.01).minimize(error)

    # 2++ Collect the tensors to record
    tf.compat.v1.summary.scalar("error", error)
    tf.compat.v1.summary.histogram("weights", weights)
    tf.compat.v1.summary.histogram("bias", bias)

    # 3++ Merge all summaries into one op
    merged = tf.compat.v1.summary.merge_all()

    # Create a Saver object
    saver = tf.compat.v1.train.Saver()

    # Op that initializes all variables
    init = tf.compat.v1.global_variables_initializer()

    # Open a session to run the graph
    with tf.compat.v1.Session() as sess:
        sess.run(init)

        # 1++ Create the event file
        file_writer = tf.compat.v1.summary.FileWriter("./tmp/Linear", graph=sess.graph)

        # Print the randomly initialized parameters
        print("Before training, the model parameters were: weight %f, bias %f, loss %f"
              % (weights.eval()[0, 0], bias.eval()[0, 0], error.eval()))

        # Start training (commented out: this run only loads the saved model)
        # for i in range(1000):
        #     sess.run(optimizer)
        #
        #     # Run the merged summary op
        #     summary = sess.run(merged)
        #
        #     # Write this iteration's summaries to the event file
        #     file_writer.add_summary(summary, i)
        #
        #     # Save the model
        #     if i % 10 == 0:
        #         saver.save(sess, "./tmp/model/my_linear.ckpt")
        #
        # print("After training, the model parameters were: weight %f, bias %f, loss %f"
        #       % (weights.eval()[0, 0], bias.eval()[0, 0], error.eval()))

        # Load the model: restore the saved values if a checkpoint exists
        if os.path.exists("./tmp/model/checkpoint"):
            saver.restore(sess, "./tmp/model/my_linear.ckpt")
            print("After loading the model, the model parameters were: weight %f, bias %f, loss %f"
                  % (weights.eval()[0, 0], bias.eval()[0, 0], error.eval()))

        file_writer.close()
    return None


if __name__ == "__main__":
    linear_regression()
```
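One detail of periodic saving: with a condition of the form `i % 10 == 0` alone, the last save over 1000 iterations happens at i = 990, so the updates from the final nine steps are never written. The pure-Python sketch below shows the same save/restore pattern with a JSON file and additionally saves after the final step. It is only an illustration: the helpers `save_checkpoint`, `load_checkpoint` and `train` are hypothetical, the "training step" is a stand-in, and JSON is not TensorFlow's checkpoint format. Writing to a temporary file and then renaming it with `os.replace` means a crash in the middle of a write leaves the previous checkpoint intact.

```python
import json
import os
import tempfile


def save_checkpoint(path, params):
    """Write params as JSON to a temp file, then rename it over the target."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(params, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    with open(path) as f:
        return json.load(f)


def train(ckpt_path, steps=1000, save_every=10):
    params = {"step": 0, "weight": 0.0}
    for i in range(steps):
        params["weight"] += 0.001  # hypothetical stand-in for sess.run(optimizer)
        params["step"] = i + 1
        # Save periodically AND after the last step, so no finished work is lost
        if i % save_every == 0 or i == steps - 1:
            save_checkpoint(ckpt_path, params)
    return params


ckpt_path = os.path.join(tempfile.mkdtemp(), "my_linear.json")
trained = train(ckpt_path, steps=25)

# Loading side: restore only if a checkpoint exists, like the os.path.exists(...) check above
restored = load_checkpoint(ckpt_path) if os.path.exists(ckpt_path) else None
print(restored == trained)  # True
```

Without the `i == steps - 1` clause, the restored state in this example would stop at step 21 instead of 25.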

Stage 8: Command-line parameters

```python
"""
__author__="dazhi"
2021/3/6-21:28
"""
import os

# Hide TensorFlow INFO and WARNING logs (set before importing TensorFlow)
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf

# 1) Define command-line parameters: name, default value, help text
tf.compat.v1.app.flags.DEFINE_integer("max_step", 100, "Steps of training model")
tf.compat.v1.app.flags.DEFINE_string("model_dir", "Unknown", "Path to save model + model name")

# 2) Short alias for the flag values
FLAGS = tf.compat.v1.app.flags.FLAGS


def command_demo():
    """Demonstration of command-line parameters."""
    print("max_step value :", FLAGS.max_step)
    print("model_dir value :", FLAGS.model_dir)
    return None


if __name__ == "__main__":
    command_demo()
```
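The flags are given on the command line, for example `--max_step=3 --model_dir=./tmp/model/my_linear.ckpt`; a flag that is not given keeps its default. TensorFlow's flags module is a thin wrapper around the `absl` flags library, so outside TensorFlow the same idea can be sketched with the standard library's `argparse`. This is an alternative, not the TF API; the function names `parse_args` and `command_demo(argv)` are this sketch's own.

```python
import argparse


def parse_args(argv=None):
    """Parse the same two parameters as the TF flags version (argv=None reads sys.argv)."""
    parser = argparse.ArgumentParser(description="Demonstration of command line parameters")
    parser.add_argument("--max_step", type=int, default=100, help="Steps of training model")
    parser.add_argument("--model_dir", type=str, default="Unknown",
                        help="Path to save model + model name")
    return parser.parse_args(argv)


def command_demo(argv=None):
    args = parse_args(argv)
    print("max_step value :", args.max_step)
    print("model_dir value :", args.model_dir)
    return args


# An explicit list is passed here for illustration; a real script would call command_demo()
command_demo(["--max_step=3", "--model_dir=./tmp/model/my_linear.ckpt"])
```

Passing `argv` explicitly keeps the parser testable; in a script, calling `command_demo()` with no arguments reads the real command line.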