1. Preparation

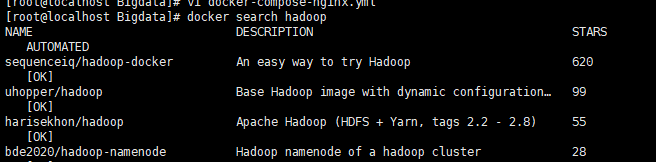

Use `docker search` to look for Hadoop images:

    docker search hadoop

Then pull the image:

    docker pull sequenceiq/hadoop-docker

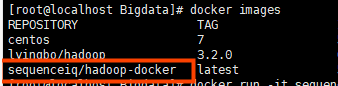

Run `docker images` to confirm that the image is now available locally.

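Then start a container. `-p 8088:8088` publishes the container's port 8088 (the YARN web UI) on the host, and `/etc/bootstrap.sh -bash` starts the Hadoop services and then opens a bash shell inside the container: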

    docker run -it -p 8088:8088 sequenceiq/hadoop-docker /etc/bootstrap.sh -bash
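
The `-p 8088:8088` flag publishes only the YARN web UI. The Dockerfile's `EXPOSE` lines list more ports, but they are not published on their own. Below is a minimal sketch that also publishes the HDFS NameNode web UI, which uses port 50070 by default in Hadoop 2.x. Both the `start_hadoop_container` function and the `DOCKER` override are hypothetical conveniences and are not part of the image. Set `DOCKER=echo` to print the command instead of running it.

```shell
# Hypothetical helper: start the container with the YARN UI (8088) and the
# HDFS NameNode UI (50070, the Hadoop 2.x default) published on the host.
# DOCKER defaults to "docker"; set DOCKER=echo for a dry run that only prints.
start_hadoop_container() {
  ${DOCKER:-docker} run -it \
    -p 8088:8088 \
    -p 50070:50070 \
    sequenceiq/hadoop-docker /etc/bootstrap.sh -bash
}
```

`DOCKER=echo start_hadoop_container` prints the full command line. A plain `start_hadoop_container` runs it.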

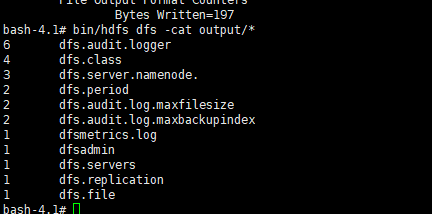

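Let's test it with the bundled MapReduce grep example. It counts the matches of the regular expression `dfs[a-z.]+` in the files under the HDFS `input` directory, which holds the Hadoop configuration files that the Dockerfile uploads there: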

    cd $HADOOP_PREFIX
    # run the mapreduce
    bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.1.jar grep input output 'dfs[a-z.]+'
    # check the output
    bin/hdfs dfs -cat output/*

The final output:

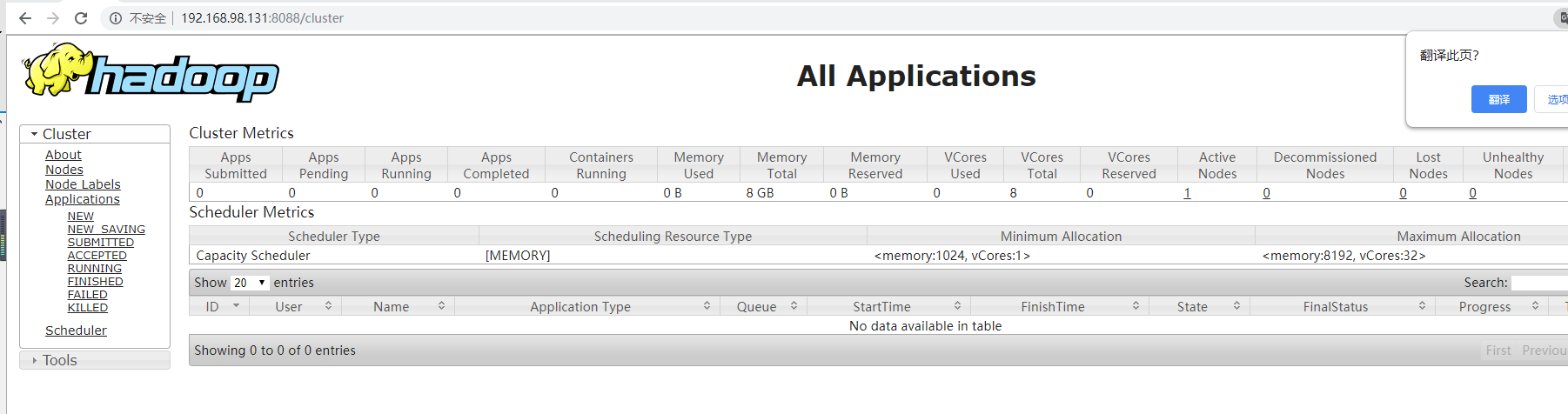
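
Each output line is a count, a tab, and the matched string, sorted by count in descending order. To see what the job computes without a cluster, here is a rough single-machine equivalent in plain shell. It is an illustrative sketch and not how Hadoop runs the job. The name `local_grep_count` is hypothetical. `grep -E` uses POSIX extended regular expressions rather than Java regex, which makes no difference for a simple pattern like `dfs[a-z.]+`. Ties are broken alphabetically here, which may not match Hadoop's order.

```shell
# Hypothetical helper: a local approximation of the Hadoop "grep" example.
# -r recurses into DIR, -o prints each match on its own line, -h drops file
# names, -E enables extended regex. awk counts identical matches, and sort
# orders by count (descending) and then by match text.
local_grep_count() {
  dir="$1"
  regex="$2"
  tab=$(printf '\t')
  grep -rohE "$regex" "$dir" \
    | awk '{ n[$0]++ } END { for (m in n) print n[m] "\t" m }' \
    | LC_ALL=C sort -t "$tab" -k1,1nr -k2,2
}
```

Inside the container, `local_grep_count "$HADOOP_PREFIX/etc/hadoop" 'dfs[a-z.]+'` reads the same directory that the Dockerfile uploads to HDFS as `input`. Its result should be close to the MapReduce output, though the two may differ if files there changed after the upload.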

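Open the YARN console, which is the ResourceManager web UI. The `-p 8088:8088` option publishes it on port 8088 of the Docker host, for example http://localhost:8088 when Docker runs locally.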
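
After `/etc/bootstrap.sh` starts the daemons, the console can take a while to respond. The following is a minimal polling sketch. It assumes the ResourceManager is reachable at `http://localhost:8088`, which is true on a local Docker host with `-p 8088:8088`, and that `curl` is installed. `wait_for_yarn` is a hypothetical helper. It probes the ResourceManager REST endpoint `/ws/v1/cluster/info`, which returns basic cluster information once the ResourceManager is up.

```shell
# Hypothetical helper: poll the YARN ResourceManager REST API until it answers.
# Arguments: RM base URL (default http://localhost:8088) and the number of
# attempts, spaced one second apart (default 60). Returns 0 if the RM answered.
wait_for_yarn() {
  rm_url="${1:-http://localhost:8088}"
  attempts="${2:-60}"
  tried=0
  while [ "$tried" -lt "$attempts" ]; do
    # -s: no progress meter; -f: fail on HTTP errors; --max-time: never hang.
    if curl -sf --max-time 2 "$rm_url/ws/v1/cluster/info" >/dev/null 2>&1; then
      return 0
    fi
    sleep 1
    tried=$((tried + 1))
  done
  return 1
}
```

For example, `wait_for_yarn && echo "YARN is up"` waits up to about a minute before the console is opened.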

The image's Dockerfile is as follows:

    # Creates pseudo distributed hadoop 2.7.1
    #
    # docker build -t sequenceiq/hadoop .

    FROM sequenceiq/pam:centos-6.5
    MAINTAINER SequenceIQ

    USER root

    # install dev tools
    RUN yum clean all; rpm --rebuilddb; yum install -y curl which tar sudo openssh-server openssh-clients rsync
    # update libselinux. see https://github.com/sequenceiq/hadoop-docker/issues/14
    RUN yum update -y libselinux

    # passwordless ssh
    RUN ssh-keygen -q -N "" -t dsa -f /etc/ssh/ssh_host_dsa_key
    RUN ssh-keygen -q -N "" -t rsa -f /etc/ssh/ssh_host_rsa_key
    RUN ssh-keygen -q -N "" -t rsa -f /root/.ssh/id_rsa
    RUN cp /root/.ssh/id_rsa.pub /root/.ssh/authorized_keys

    # java
    RUN curl -LO 'http://download.oracle.com/otn-pub/java/jdk/7u71-b14/jdk-7u71-linux-x64.rpm' -H 'Cookie: oraclelicense=accept-securebackup-cookie'
    RUN rpm -i jdk-7u71-linux-x64.rpm
    RUN rm jdk-7u71-linux-x64.rpm

    ENV JAVA_HOME /usr/java/default
    ENV PATH $PATH:$JAVA_HOME/bin
    RUN rm /usr/bin/java && ln -s $JAVA_HOME/bin/java /usr/bin/java

    # download native support
    RUN mkdir -p /tmp/native
    RUN curl -L https://github.com/sequenceiq/docker-hadoop-build/releases/download/v2.7.1/hadoop-native-64-2.7.1.tgz | tar -xz -C /tmp/native

    # hadoop
    RUN curl -s http://www.eu.apache.org/dist/hadoop/common/hadoop-2.7.1/hadoop-2.7.1.tar.gz | tar -xz -C /usr/local/
    RUN cd /usr/local && ln -s ./hadoop-2.7.1 hadoop

    ENV HADOOP_PREFIX /usr/local/hadoop
    ENV HADOOP_COMMON_HOME /usr/local/hadoop
    ENV HADOOP_HDFS_HOME /usr/local/hadoop
    ENV HADOOP_MAPRED_HOME /usr/local/hadoop
    ENV HADOOP_YARN_HOME /usr/local/hadoop
    ENV HADOOP_CONF_DIR /usr/local/hadoop/etc/hadoop
    ENV YARN_CONF_DIR $HADOOP_PREFIX/etc/hadoop

    RUN sed -i '/^export JAVA_HOME/ s:.*:export JAVA_HOME=/usr/java/default\nexport HADOOP_PREFIX=/usr/local/hadoop\nexport HADOOP_HOME=/usr/local/hadoop\n:' $HADOOP_PREFIX/etc/hadoop/hadoop-env.sh
    RUN sed -i '/^export HADOOP_CONF_DIR/ s:.*:export HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop/:' $HADOOP_PREFIX/etc/hadoop/hadoop-env.sh
    #RUN . $HADOOP_PREFIX/etc/hadoop/hadoop-env.sh

    RUN mkdir $HADOOP_PREFIX/input
    RUN cp $HADOOP_PREFIX/etc/hadoop/*.xml $HADOOP_PREFIX/input

    # pseudo distributed
    ADD core-site.xml.template $HADOOP_PREFIX/etc/hadoop/core-site.xml.template
    RUN sed s/HOSTNAME/localhost/ /usr/local/hadoop/etc/hadoop/core-site.xml.template > /usr/local/hadoop/etc/hadoop/core-site.xml
    ADD hdfs-site.xml $HADOOP_PREFIX/etc/hadoop/hdfs-site.xml

    ADD mapred-site.xml $HADOOP_PREFIX/etc/hadoop/mapred-site.xml
    ADD yarn-site.xml $HADOOP_PREFIX/etc/hadoop/yarn-site.xml

    RUN $HADOOP_PREFIX/bin/hdfs namenode -format

    # fixing the libhadoop.so like a boss
    RUN rm -rf /usr/local/hadoop/lib/native
    RUN mv /tmp/native /usr/local/hadoop/lib

    ADD ssh_config /root/.ssh/config
    RUN chmod 600 /root/.ssh/config
    RUN chown root:root /root/.ssh/config

    # # installing supervisord
    # RUN yum install -y python-setuptools
    # RUN easy_install pip
    # RUN curl https://bitbucket.org/pypa/setuptools/raw/bootstrap/ez_setup.py -o - | python
    # RUN pip install supervisor
    #
    # ADD supervisord.conf /etc/supervisord.conf

    ADD bootstrap.sh /etc/bootstrap.sh
    RUN chown root:root /etc/bootstrap.sh
    RUN chmod 700 /etc/bootstrap.sh

    ENV BOOTSTRAP /etc/bootstrap.sh

    # working around docker.io build error
    RUN ls -la /usr/local/hadoop/etc/hadoop/*-env.sh
    RUN chmod +x /usr/local/hadoop/etc/hadoop/*-env.sh
    RUN ls -la /usr/local/hadoop/etc/hadoop/*-env.sh

    # fix the 254 error code
    RUN sed -i "/^[^#]*UsePAM/ s/.*/#&/" /etc/ssh/sshd_config
    RUN echo "UsePAM no" >> /etc/ssh/sshd_config
    RUN echo "Port 2122" >> /etc/ssh/sshd_config

    RUN service sshd start && $HADOOP_PREFIX/etc/hadoop/hadoop-env.sh && $HADOOP_PREFIX/sbin/start-dfs.sh && $HADOOP_PREFIX/bin/hdfs dfs -mkdir -p /user/root
    RUN service sshd start && $HADOOP_PREFIX/etc/hadoop/hadoop-env.sh && $HADOOP_PREFIX/sbin/start-dfs.sh && $HADOOP_PREFIX/bin/hdfs dfs -put $HADOOP_PREFIX/etc/hadoop/ input

    CMD ["/etc/bootstrap.sh", "-d"]

    # Hdfs ports
    EXPOSE 50010 50020 50070 50075 50090 8020 9000
    # Mapred ports
    EXPOSE 10020 19888
    #Yarn ports
    EXPOSE 8030 8031 8032 8033 8040 8042 8088
    #Other ports
    EXPOSE 49707 2122
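
To build this image yourself, the header comment suggests `docker build -t sequenceiq/hadoop .`. The build context must also contain every file the Dockerfile `ADD`s: core-site.xml.template, hdfs-site.xml, mapred-site.xml, yarn-site.xml, ssh_config and bootstrap.sh. The following is a small pre-flight check, sketched under simplifying assumptions. It expects one instruction per line in the plain `ADD src dest` form, with no JSON-array syntax, wildcards, or URLs. It also expects a file named `Dockerfile` in the context directory. `missing_build_inputs` is a hypothetical helper.

```shell
# Hypothetical helper: print each ADD/COPY source that is missing from the
# build context CTX (default: current directory). Returns 1 if anything is missing.
# Assumes the single-line "ADD src dest" form; commented-out instructions are
# skipped because their first field is "#".
missing_build_inputs() {
  ctx="${1:-.}"
  status=0
  for src in $(awk 'toupper($1) == "ADD" || toupper($1) == "COPY" { print $2 }' "$ctx/Dockerfile"); do
    if [ ! -e "$ctx/$src" ]; then
      echo "missing: $src"
      status=1
    fi
  done
  return "$status"
}
```

For example, `missing_build_inputs . && docker build -t sequenceiq/hadoop .` only starts the build once every added file is present.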
