Using GlusterFS as storage in K8S

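I first followed the book 《再也不踩坑的Kubernetes实战指南》 (roughly, "a hands-on Kubernetes guide without pitfalls"), which installs the GFS cluster in containers. However, the Heketi installation hung for several tens of minutes without finishing, so I switched approaches: I installed GlusterFS directly on the hosts and connected Kubernetes to it through an Endpoints object.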

Environment

Three machines running CentOS 7.8. I also tested the same steps on Alibaba Cloud servers, and they worked in both environments.

k8s-master 192.168.1.180

k8s-node1 192.168.1.151

k8s-node2 192.168.1.152
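The peer probe and volume create commands below refer to the nodes by hostname, so every node must be able to resolve k8s-master, k8s-node1 and k8s-node2. The original setup does not say how names are resolved. The following sketch assumes plain /etc/hosts entries and defines two hypothetical helper functions (add_host_entry, ensure_cluster_hosts) that add the three hosts without creating duplicates:

```shell
# Hypothetical helpers: make the three cluster nodes resolvable through a hosts file.
# Assumption: name resolution is done with /etc/hosts rather than DNS.

# add_host_entry IP NAME FILE: append "IP NAME" to FILE unless NAME is already listed.
add_host_entry() {
  local ip="$1" name="$2" file="$3"
  # -w matches NAME as a whole word, so k8s-node1 does not match k8s-node10
  if ! grep -qw -- "$name" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# ensure_cluster_hosts FILE: add the three nodes from the host list above.
ensure_cluster_hosts() {
  local file="$1"
  add_host_entry 192.168.1.180 k8s-master "$file"
  add_host_entry 192.168.1.151 k8s-node1 "$file"
  add_host_entry 192.168.1.152 k8s-node2 "$file"
}
```

Run ensure_cluster_hosts /etc/hosts as root on every node. Calling it again does not duplicate entries.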

1. Set up the GlusterFS cluster

Install the GlusterFS packages on all three nodes:

[root@k8s-master ]# yum install centos-release-gluster

[root@k8s-master ]# yum install -y glusterfs glusterfs-server glusterfs-fuse glusterfs-rdma

Configure the GlusterFS cluster. Start the glusterd service and enable it at boot on all three nodes:

[root@k8s-master ]# systemctl start glusterd.service

[root@k8s-master ]# systemctl enable glusterd.service

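On the master node only, add the nodes to the trusted storage pool. Probing k8s-master from itself is not required, because gluster simply reports that probing localhost is not needed: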

[root@k8s-master ]# gluster peer probe k8s-master

[root@k8s-master ]# gluster peer probe k8s-node1

[root@k8s-master ]# gluster peer probe k8s-node2
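To confirm that the probes succeeded, run gluster peer status on the master. It should list the other two nodes with "State: Peer in Cluster (Connected)". The local node is never listed. The following sketch checks this from the command output. The function names are hypothetical:

```shell
# count_connected_peers: read `gluster peer status` output on stdin and print
# how many peers are in the "Peer in Cluster (Connected)" state.
count_connected_peers() {
  # grep -c prints 0 (and exits 1) when nothing matches; || true keeps the status clean
  grep -c '^State: Peer in Cluster (Connected)' || true
}

# check_peers EXPECTED: succeed only if exactly EXPECTED peers are connected.
check_peers() {
  local expected="$1" actual
  actual=$(count_connected_peers)
  if [ "$actual" -ne "$expected" ]; then
    echo "expected $expected connected peers, found $actual" >&2
    return 1
  fi
  echo "all $expected peers connected"
}
```

In a three-node pool, run it on the master as: gluster peer status | check_peers 2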

Create the brick directory on all three nodes:

[root@k8s-master ]# mkdir -p /opt/gfs_data

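Create a replicated volume with three replicas and one brick per node. The trailing force overrides gluster's refusal to create bricks on the root partition, which is the case when /opt/gfs_data sits on the system disk: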

[root@k8s-master ]# gluster volume create k8s-volume replica 3 k8s-master:/opt/gfs_data k8s-node1:/opt/gfs_data k8s-node2:/opt/gfs_data force

Start the volume:

[root@k8s-master ]# gluster volume start k8s-volume

Check the volume status:

[root@k8s-master ~]# gluster volume status
Status of volume: k8s-volume
Gluster process                             TCP Port  RDMA Port  Online  Pid
------------------------------------------------------------------------------
Brick k8s-master:/opt/gfs_data              49152     0          Y       13987
Brick k8s-node1:/opt/gfs_data               49152     0          Y       13216
Brick k8s-node2:/opt/gfs_data               49152     0          Y       13932
Self-heal Daemon on localhost               N/A       N/A        Y       14014
Self-heal Daemon on k8s-node1               N/A       N/A        Y       13233
Self-heal Daemon on k8s-node2               N/A       N/A        Y       13949

Task Status of Volume k8s-volume
------------------------------------------------------------------------------
There are no active volume tasks

[root@k8s-master ~]#
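Instead of checking the Online column by eye, you can check the status output with a script. This sketch assumes the tabular layout shown above, where each process sits on one line and Online is the second-to-last field. The name offline_processes is hypothetical:

```shell
# offline_processes: read `gluster volume status` output on stdin, print every
# Brick / Self-heal Daemon line whose Online column is not "Y", and exit 1 if any.
offline_processes() {
  awk '/^(Brick|Self-heal Daemon) / && $(NF-1) != "Y" { print; bad = 1 }
       END { exit bad }'
}
```

Usage: gluster volume status k8s-volume | offline_processes && echo "all bricks and self-heal daemons online"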

[root@k8s-master ~]# gluster volume info

Volume Name: k8s-volume
Type: Replicate
Volume ID: fce4a311-6afb-428b-90dd-e0e9c335de3e
Status: Started
Snapshot Count: 0
Number of Bricks: 1 x 3 = 3
Transport-type: tcp
Bricks:
Brick1: k8s-master:/opt/gfs_data
Brick2: k8s-node1:/opt/gfs_data
Brick3: k8s-node2:/opt/gfs_data
Options Reconfigured:
cluster.granular-entry-heal: on
storage.fips-mode-rchecksum: on
transport.address-family: inet
nfs.disable: on
performance.client-io-threads: off
[root@k8s-master ~]#

Verify that the GlusterFS cluster works

On any one of the hosts, mount the volume and create a test file:

yum install -y glusterfs glusterfs-fuse

mount -t glusterfs 192.168.1.180:/k8s-volume /mnt

cd /mnt

touch test
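The touch command only proves that the mount is writable. A slightly stronger check writes a unique marker through the mount and reads it back. Because k8s-volume is a 3-way replica, the same file should then also appear when you list /opt/gfs_data on each node. Only look at the brick directory; never write into it directly. verify_mount is a hypothetical helper:

```shell
# verify_mount DIR: write a uniquely named marker file into DIR (assumed to be the
# GlusterFS mount point, /mnt above), read it back, and print the file name on success.
verify_mount() {
  local dir="$1" name token
  name="gfs-check-${HOSTNAME:-host}-$$"
  token="$(date +%s)-$RANDOM"
  printf '%s\n' "$token" > "$dir/$name" || return 1
  if [ "$(cat "$dir/$name")" = "$token" ]; then
    echo "$name"
  else
    echo "read-back mismatch in $dir/$name" >&2
    return 1
  fi
}
```

For example, run name=$(verify_mount /mnt) && echo "$name". Then run ls /opt/gfs_data on k8s-node1 and k8s-node2 and look for that name.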

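2. Use GlusterFS from Kubernetes (run all of the following on the k8s master node)

This approach relies on the in-tree glusterfs volume plugin, which was deprecated in Kubernetes v1.25 and removed in v1.26. It therefore only applies to clusters older than v1.26. Every node that can run the pods also needs the GlusterFS client (glusterfs-fuse). That requirement is already met here, because the Kubernetes nodes are also the GlusterFS servers.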

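Create an Endpoints object that points at the GlusterFS servers with kubectl apply -f glusterfs-cluster.yaml. The glusterfs-cluster.yaml file contains the manifest below. The glusterfs plugin ignores the port value, but the field must still hold a valid port number (1-65535):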

apiVersion: v1
kind: Endpoints
metadata:
  name: glusterfs-cluster
  namespace: default
subsets:
  - addresses:
      - ip: 192.168.1.180
      - ip: 192.168.1.151
      - ip: 192.168.1.152
    ports:
      - port: 30003
        protocol: TCP

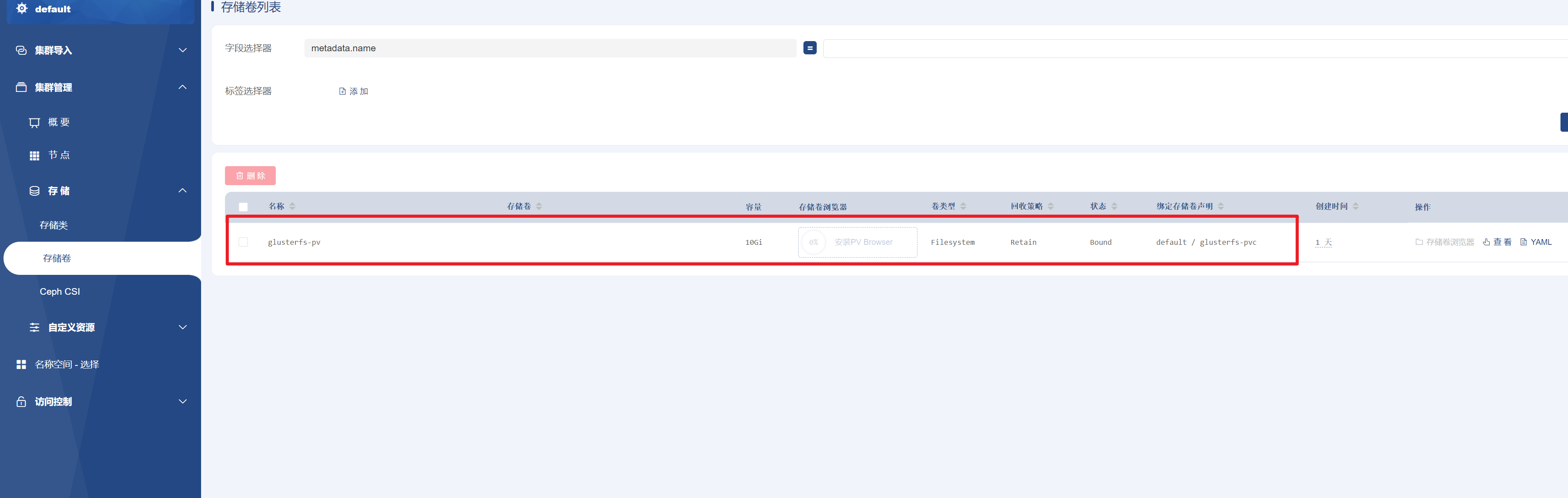
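The upstream Kubernetes GlusterFS example also creates a Service that has no selector and uses the same name as the Endpoints object. Without such a Service, the endpoints controller may delete the manually created Endpoints object, for example after kube-controller-manager restarts. The following sketch writes such a manifest. It assumes the default namespace and reuses port 30003 from above:

```shell
# Write a selector-less Service whose name matches the Endpoints object (glusterfs-cluster),
# so that Kubernetes keeps the manually managed Endpoints around.
cat > glusterfs-service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: glusterfs-cluster
  namespace: default
spec:
  ports:
    - port: 30003
EOF
```

Apply it with kubectl apply -f glusterfs-service.yaml.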

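Create a PersistentVolume backed by GlusterFS with kubectl apply -f glusterfs-pv.yaml. In the glusterfs section, endpoints names the Endpoints object above, and path is the GlusterFS volume name (k8s-volume) rather than a filesystem path: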

apiVersion: v1
kind: PersistentVolume
metadata:
  name: glusterfs-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  glusterfs:
    endpoints: glusterfs-cluster
    path: k8s-volume
    readOnly: false

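Create the PersistentVolumeClaim with kubectl apply -f glusterfs-pvc.yaml. The claim requests 2Gi, so it can bind to the 10Gi PV above. A PV is always bound as a whole, so the claim actually receives all 10Gi: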

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: glusterfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
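After you apply the claim, it should reach the Bound phase. The following polling sketch is built on kubectl get pvc -o jsonpath. KUBECTL is a hypothetical override that defaults to kubectl, so you can exercise the loop without a cluster. If the claim stays Pending and your cluster has a default StorageClass, the claim may have been assigned that class instead of binding to glusterfs-pv. Setting storageClassName: "" on the claim avoids this.

```shell
# wait_for_pvc_bound NAME [TIMEOUT_SECONDS]: poll the claim every 2 seconds until its
# status.phase is Bound, or give up after TIMEOUT_SECONDS (default 60).
wait_for_pvc_bound() {
  local name="$1" timeout="${2:-60}" phase="" waited=0
  while [ "$waited" -lt "$timeout" ]; do
    phase=$("${KUBECTL:-kubectl}" get pvc "$name" -o jsonpath='{.status.phase}' 2>/dev/null)
    if [ "$phase" = "Bound" ]; then
      echo "pvc/$name is Bound"
      return 0
    fi
    sleep 2
    waited=$((waited + 2))
  done
  echo "pvc/$name not Bound after ${timeout}s (phase: ${phase:-unknown})" >&2
  return 1
}
```

Usage: wait_for_pvc_bound glusterfs-pvc 60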

Create an application that uses the PVC with kubectl apply -f nginx_deployment.yaml:

[root@k8s-master ]# cat nginx_deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
          volumeMounts:
            - name: storage001
              mountPath: "/usr/share/nginx/html"
      volumes:
        - name: storage001
          persistentVolumeClaim:
            claimName: glusterfs-pvc

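The test file created earlier through the /mnt mount now appears in the pod's web root. The pod name is generated, so substitute one from kubectl get pods: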

kubectl exec nginx-deployment-6594f875fb-8dnl8 -- ls /usr/share/nginx/html/
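The Deployment runs three replicas that all mount the same claim, so every pod should see the test file, not just one. The following sketch checks each pod selected by the name=nginx label from the Deployment manifest. KUBECTL is a hypothetical override that defaults to kubectl. check_pods_see_file is also a hypothetical name:

```shell
# check_pods_see_file FILE: list /usr/share/nginx/html in every pod labelled name=nginx
# and report any pod where FILE is missing. Returns 1 if no pods exist or FILE is missing.
check_pods_see_file() {
  local file="$1" kc="${KUBECTL:-kubectl}" pods pod missing=0
  pods=$("$kc" get pods -l name=nginx -o name) || return 1
  if [ -z "$pods" ]; then
    echo "no pods with label name=nginx found" >&2
    return 1
  fi
  for pod in $pods; do
    # -x: the whole line must equal FILE, so "test" does not match "test2"
    if "$kc" exec "$pod" -- ls /usr/share/nginx/html/ | grep -qx -- "$file"; then
      echo "$pod: ok"
    else
      echo "$pod: $file missing" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: check_pods_see_file test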